Innovium Teralynx 7-based 32x 400GbE Switch Internal Hardware Overview

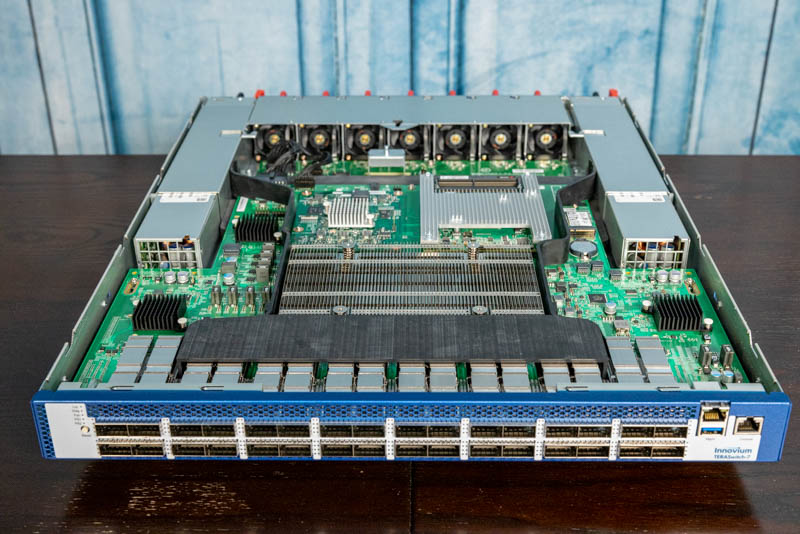

Inside the switch, we have a fairly standard design that we have seen many times previously. With the hot components, including the QSFP-DD connectors with their optics/DACs and the Teralynx 7, up front, this is going to be a common layout.

Here is another view of the inside of the switch to help you associate the subsequent pictures with where they are in the chassis.

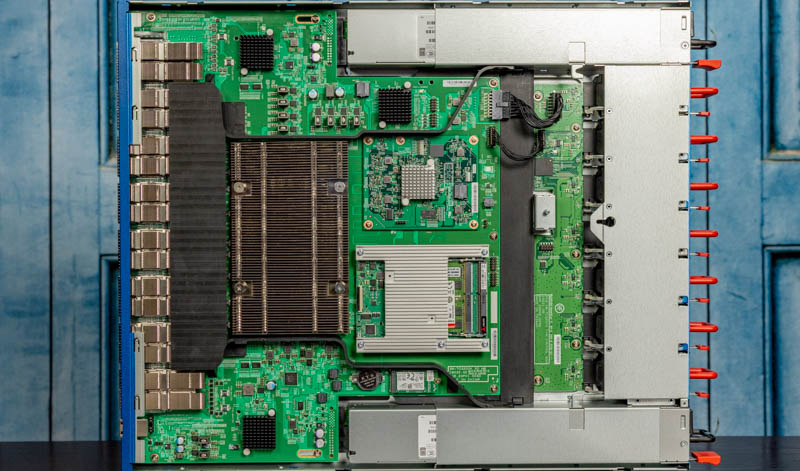

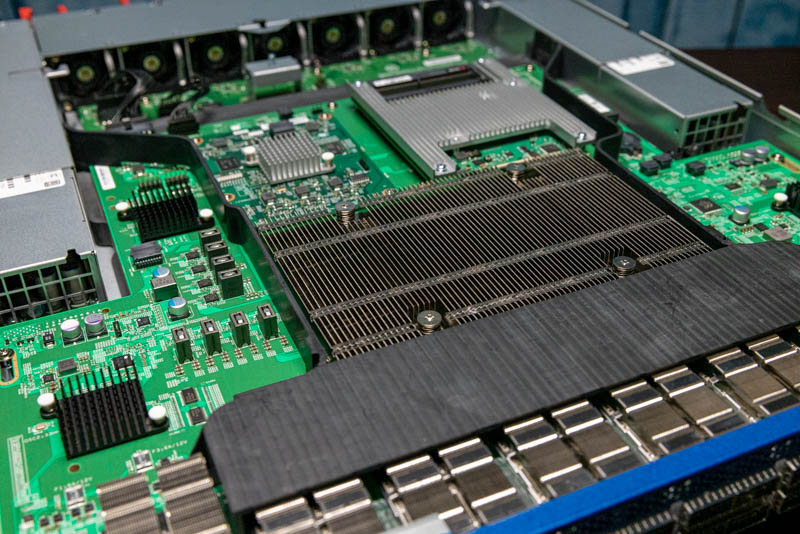

First, we have the Innovium Teralynx 7 ASIC under the massive heatsink. With the 12.8T generation, we do not yet need capabilities such as co-packaged optics.

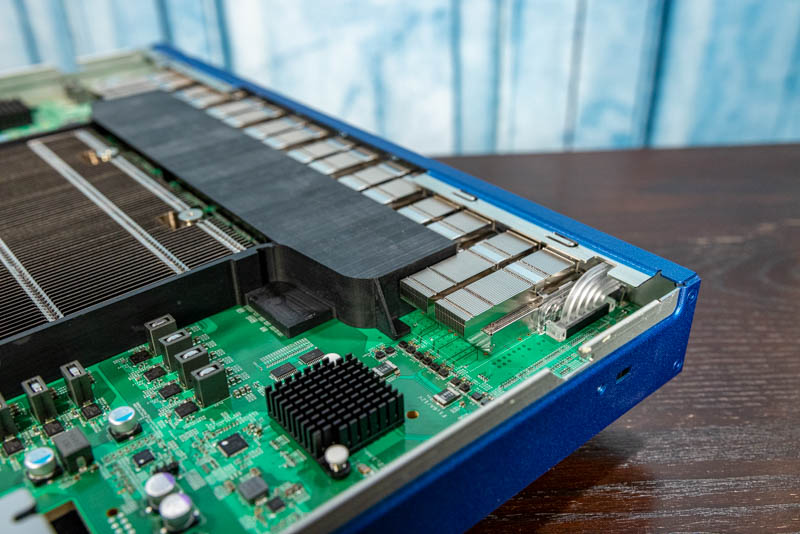

Something that our readers may not have seen before is that the QSFP-DD cages, tightly packed on the front of the system, each have their own heatsink. With 32 ports, this can be a few hundred watts of power consumption just from the modules that will go in these cages.
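To put the "few hundred watts" figure in perspective, here is a minimal back-of-the-envelope sketch. The per-module wattages below are illustrative assumptions, not measurements from this switch or figures from Innovium; actual draw depends on the specific DAC or optic installed.

```python
# Rough module power budget for a fully populated 32-port switch.
# The wattage values are ASSUMED for illustration only.

def module_power_budget(ports: int, watts_per_module: float) -> float:
    """Total power drawn by pluggable modules, in watts."""
    return ports * watts_per_module

PORTS = 32

# Hypothetical per-module power draw (watts) for a few module types.
ASSUMED_WATTS = {
    "passive DAC": 0.5,
    "400G optic (lower end)": 8.0,
    "400G optic (higher end)": 12.0,
}

for name, watts in ASSUMED_WATTS.items():
    total = module_power_budget(PORTS, watts)
    print(f"{name}: {total:.0f} W across {PORTS} ports")
```

Under these assumptions, a switch full of optics lands in the hundreds of watts for the modules alone, which is why each cage getting its own heatsink makes sense.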

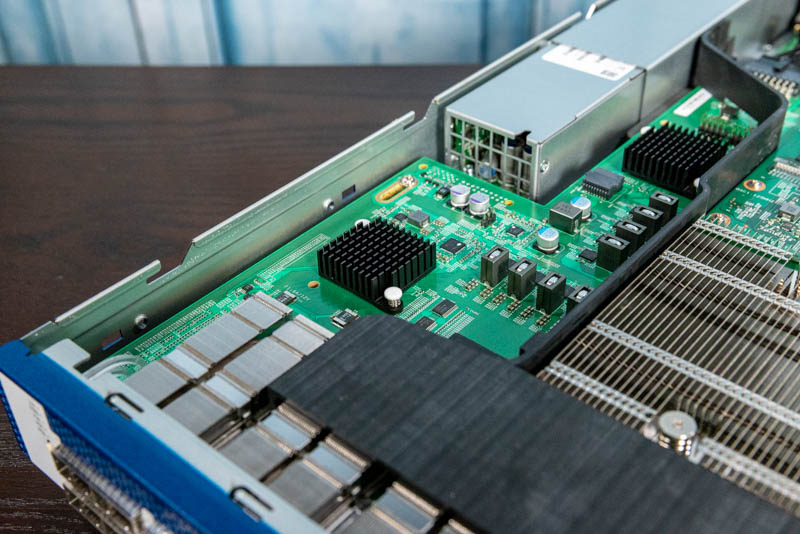

On the left side, we have a CPLD under a big black heatsink behind the QSFP-DD cages. Behind that, we see another heatsink. Innovium told us this is an FPGA.

On the right side, we have another CPLD.

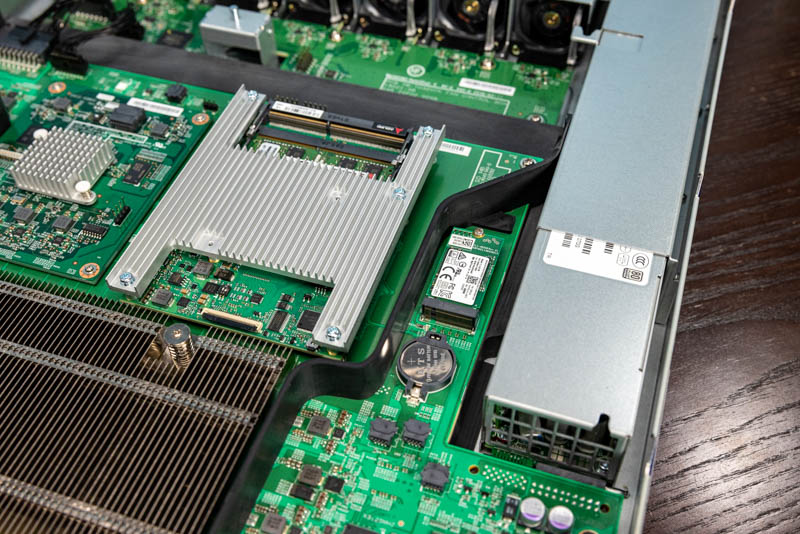

Behind that CPLD, one can see an M.2 storage slot for the switch’s SSD.

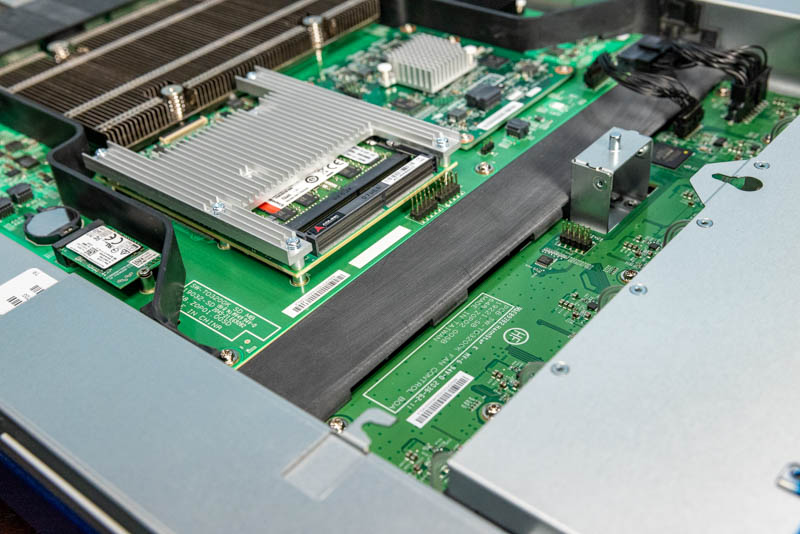

Here is another view of the FPGA heatsink as well as the boundary between the main switch PCB and the fan control PCB below.

Since this switch is designed to run network operating systems such as SONiC, we have an Intel Xeon D-1500 series CPU. Apparently, there are options here for a D-1527 and a D-1548 (and perhaps others).

Next to that module is a Baseboard Management Controller or BMC board. This board has an ASPEED AST2520 controller, similar to the common AST2500 we saw for years in servers we reviewed.

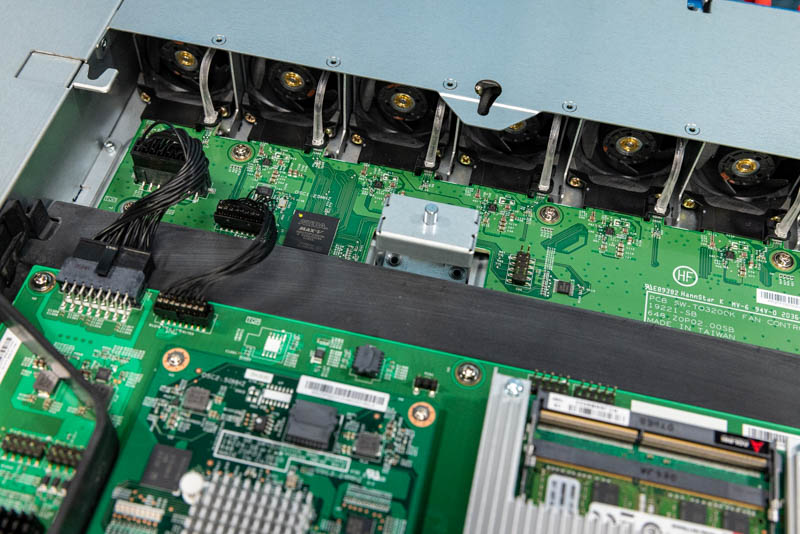

The fans are hot-swappable, so we have the connectors here.

Something we wanted to point out quickly is just how much more emphasis there is on airflow here. We can see ducting over the middle QSFP-DD connectors and tightly around the Teralynx 7 ASIC. That ducting extends to the rear of the chassis over the Xeon D and the BMC. The two sides are channels that seem to utilize the PSU fans for airflow.

We also found this airflow guide between the PCBs in the chassis. On 3.2T or 32x 100GbE switches such as those we deploy in the STH lab, we usually see just bare PCBs without all of this airflow ducting.
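The generation names used here follow directly from port count times port speed. A minimal sketch of that arithmetic, where the function name is ours and purely illustrative:

```python
# Aggregate front-panel bandwidth: ports x per-port speed.

def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Total front-panel bandwidth in Tbps."""
    return ports * gbps_per_port / 1000

print(aggregate_tbps(32, 100))  # 32x 100GbE generation
print(aggregate_tbps(32, 400))  # 32x 400GbE generation (this switch)
```

The same 32-port, 1U form factor carries four times the bandwidth in the 12.8T generation, which helps explain why the cooling design gets so much more attention here.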

Of course, as we always point out, there is a bare FPGA. This one is an Altera MAX V (which Altera markets as a CPLD family) on the fan control PCB. These are often used to monitor fans, drive fan status lights, and handle similar tasks. On virtually every high-end switch we look at, we find bare FPGAs from Altera or Xilinx.

Something we want to leave our readers with is just how much a modern switch resembles a server. We have a Xeon D processor, a BMC, storage, redundant power supplies, a dedicated management port, USB, and a console port. The big difference is that in many servers we have bigger CPUs and less networking while here we have an enormous amount of networking and relatively little CPU to run SONiC. This is not an accident. Instead, this is how cloud providers are purposefully architecting their networking.

While this is the hardware side, the software side is interesting as well. Let us get to the performance testing.

Looks like it is 1M SERDES shipped and not 1M 400G ports.

I’d say this is one of the best high-end switch reviews I’ve ever seen. I’d like to see more on SONiC installs and ops. Good work though.

How STH kills time before the AMD Milan launch -> Oh here’s some ultra amazing switch.

Second the request for articles on SONiC installs and ops. These switch teardowns are fantastic.

OMG, 32x 400Gbps. And in the meantime small business/office users are waiting for fanless 10Gbps switch with more than just 2-4 10GigEs.

That market share number for Innovium feels a little too high. Any idea why?

If you are looking closely, it is not total switch market share.

This is really cool. I can help with the interconnections. We should talk if you are interested in QSFP-DD 400G connectors, cables and optics.

That switch consumes more power than my entire rack at home lol. Great review though!