Why 51.2Tbps Switching

Two forces are pulling these 51.2T switches into the market. The first is everyone’s favorite topic, AI. The second is their implications for power consumption and radix.

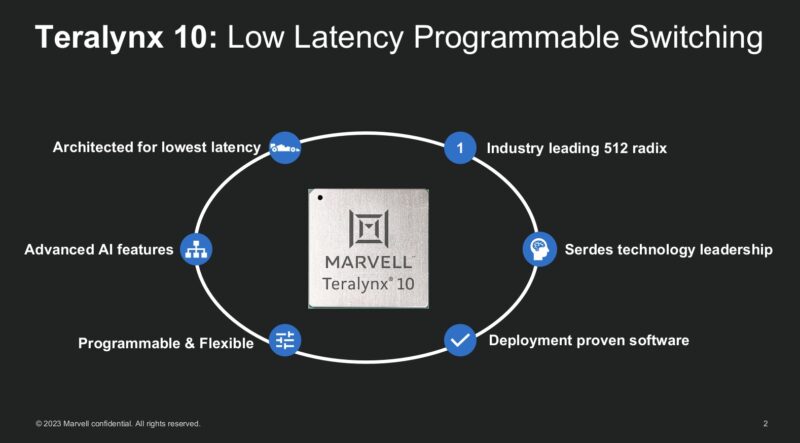

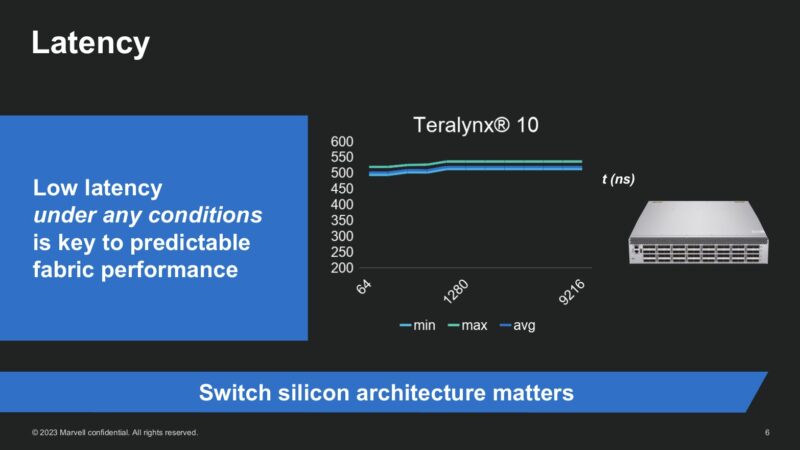

Marvell markets the Teralynx 10 with around 500ns latency while also pushing huge bandwidth. That predictable latency, along with the switch chip’s congestion control, programmability, and telemetry, helps keep large clusters operating at peak performance. Having AI accelerators sit idle waiting on the network is a very expensive proposition.

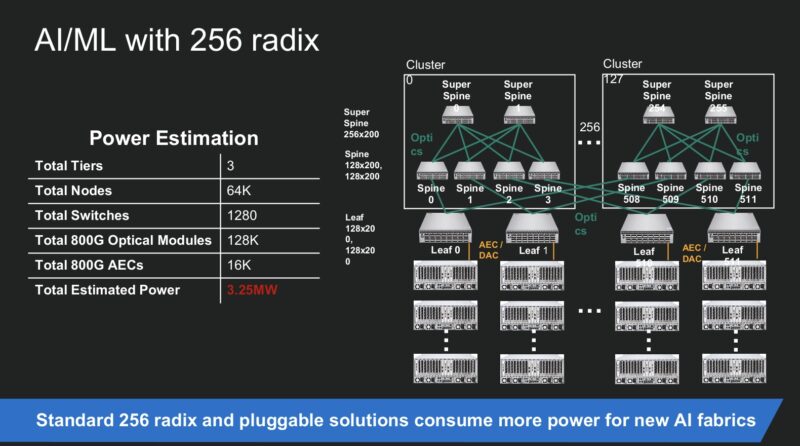

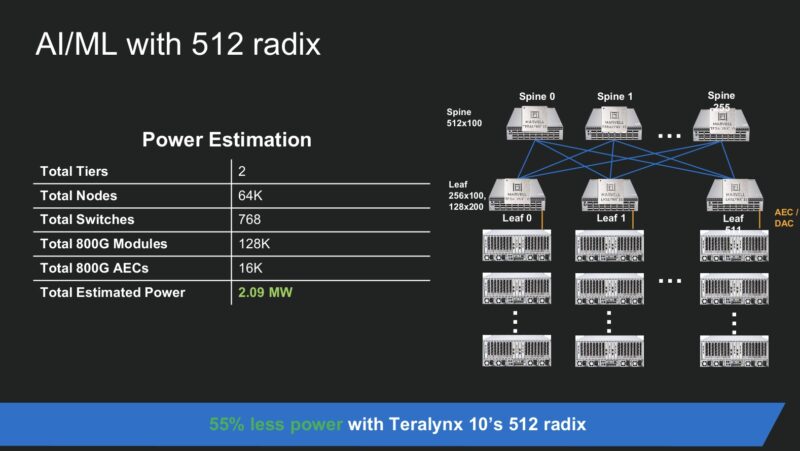

The other major factor is radix. Larger switches can reduce the number of switching layers, which in turn lowers the number of switches, optics, cables, and so forth needed to connect a cluster.

Because the Teralynx 10 supports a radix of 512, with up to 512 100GbE links, some networks can shrink from three levels of switching to only two. At the scale of a fairly large AI training cluster, that can mean savings not just on capital equipment but also on power. Marvell sent us this example where the larger radix lowers power consumption by over 1MW.
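To make the tier math concrete, here is a minimal Python sketch. It assumes an idealized non-blocking folded Clos (fat tree) built from identical switches, with one 100GbE port per endpoint. The helper names and the 65,536-endpoint cluster size are hypothetical, and real designs (oversubscription, rail-optimized topologies, breakout cables) will differ. This is not Marvell’s model and does not reproduce the 1MW figure.

```python
import math


def max_hosts(radix: int, tiers: int) -> int:
    """Endpoints a full non-blocking folded Clos with `tiers` levels can connect.

    Assumption: every switch below the top tier splits its ports half down,
    half up, and top-tier switches point all ports down, giving
    2 * (radix / 2) ** tiers endpoints.
    """
    return 2 * (radix // 2) ** tiers


def tiers_needed(hosts: int, radix: int) -> int:
    """Smallest number of switching tiers that can connect `hosts` endpoints."""
    tiers = 1
    while max_hosts(radix, tiers) < hosts:
        tiers += 1
    return tiers


def switches_needed(hosts: int, radix: int, tiers: int) -> int:
    """Approximate switch count for a non-blocking build of `hosts` endpoints."""
    lower = math.ceil(hosts / (radix // 2))  # switches in each non-top tier
    top = math.ceil(hosts / radix)  # top-tier switches use all ports downward
    return (tiers - 1) * lower + top


def inter_switch_links(hosts: int, tiers: int) -> int:
    """Switch-to-switch links at full bisection; each usually needs two optics."""
    return (tiers - 1) * hosts


HOSTS = 65_536  # hypothetical cluster size, counted in 100GbE endpoint links
for radix in (256, 512):  # 256 ~ a 25.6T switch at 100GbE, 512 ~ 51.2T at 100GbE
    t = tiers_needed(HOSTS, radix)
    print(radix, t, switches_needed(HOSTS, radix, t), inter_switch_links(HOSTS, t))
# prints:
# 256 3 1280 131072
# 512 2 384 65536
```

Under these simplified assumptions, doubling the radix from 256 to 512 removes a tier, cuts the switch count by roughly 3.3x, and halves the number of switch-to-switch links. Fewer switches and optics are where the capital and power savings come from.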

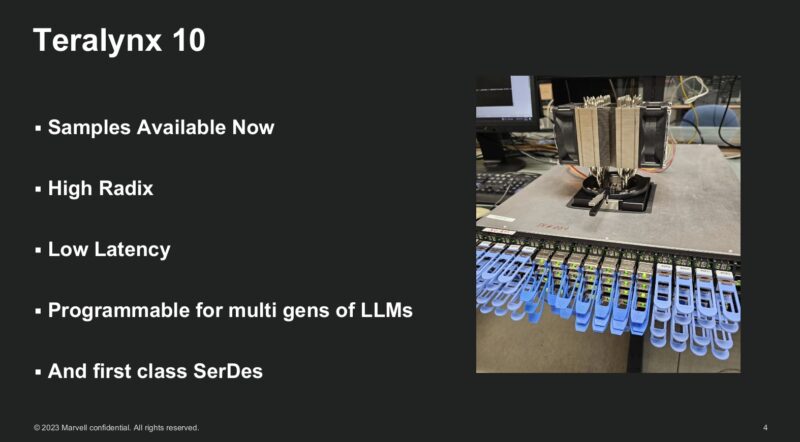

Marvell’s slide deck also included this. We have covered the left-hand side, but on the right we can see a switch with modules plugged in and a fun cooler sticking out of the top of the case. We showed the massive heatsink during our internal overview, and this appears to be what a desktop prototype looks like.

That prototype was just fun, so we thought folks might like to see it.

Final Words

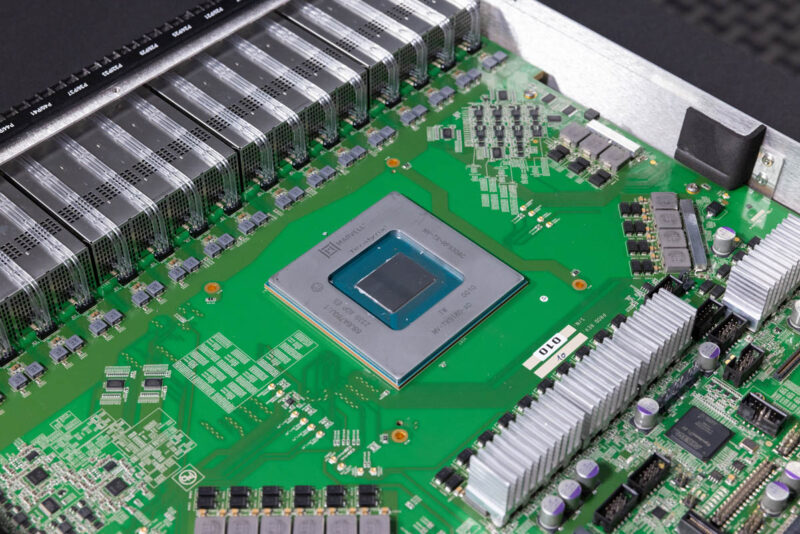

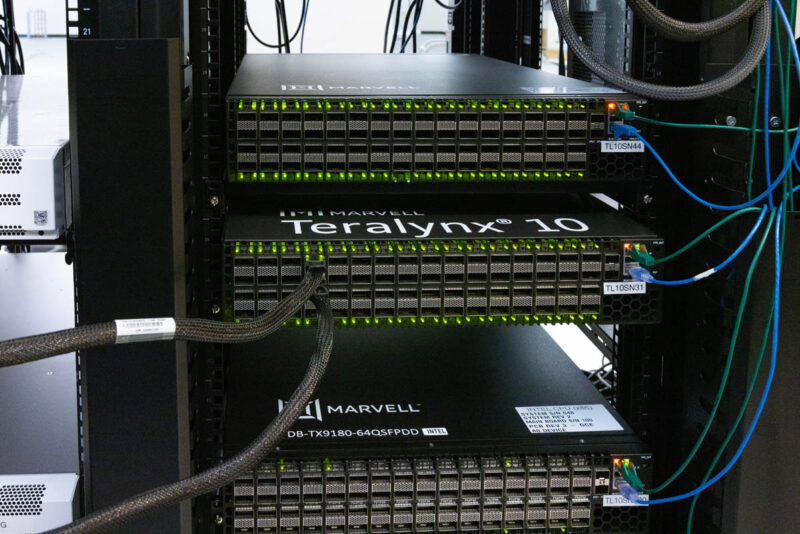

We often see the front of switches, and maybe the rear, in photos online and in data centers. We rarely see what makes those switches work. A quick thank you to Marvell is in order for letting us not just see the switches running but also tear them apart all the way down to the silicon.

The Innovium team, now part of Marvell, has been one of the few, if not the only, teams in the industry to go head-to-head against Broadcom and come away with hyperscale wins. We have seen other huge silicon providers fail in that quest. Given market demand for high-radix, high-bandwidth, low-latency switching in AI clusters, Teralynx 10 is likely to be the company’s biggest product line since Teralynx 7. Competition in this space is a very good thing.

Hopefully, our readers enjoyed this one. Of course, as with all things networking, there are many more layers. We could do an entire piece on the optical modules alone, let alone the software, performance, and more. Still, it is cool to show what goes on inside these switches. If you are looking at 51.2T switches, give Teralynx 10 a look.