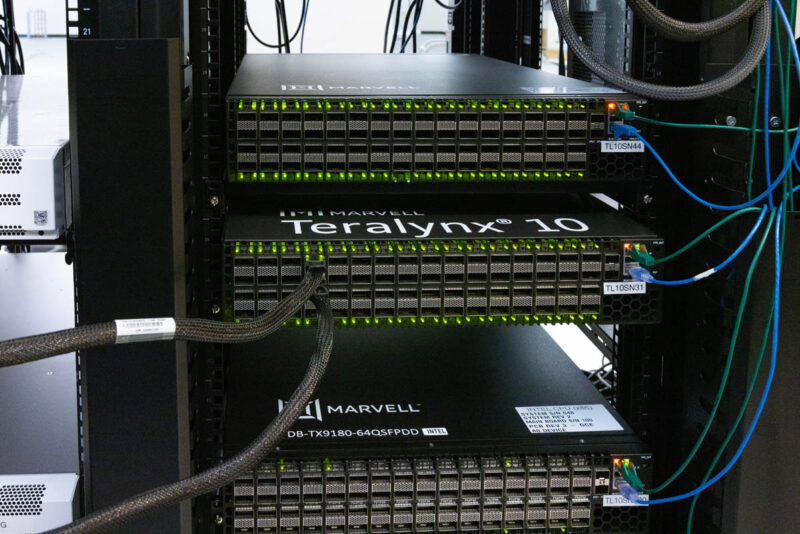

Testing Marvell Teralynx 10 in the Lab

Marvell has a lab in another building where these switches are running. The company cleared it out briefly so we could film them in operation.

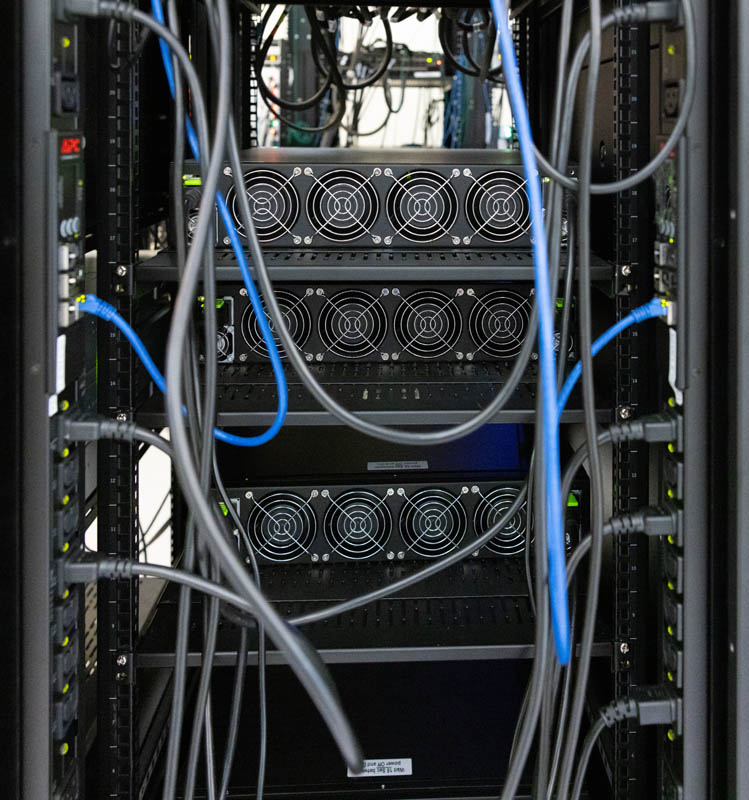

Here is the back side.

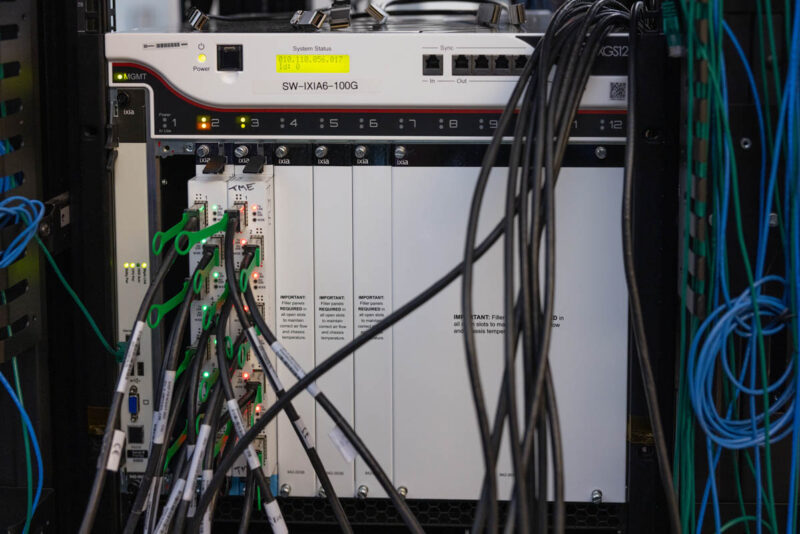

Next to the Teralynx 10 switches, there were Keysight Ixia AresONE 800GbE test boxes.

Generating 800GbE of traffic on a single port is not trivial these days, since that is more bandwidth than a PCIe Gen5 x16 slot in a server provides. It was cool to see this gear running in the lab. We purchased a neat, used Spirent box back in the day with the idea of using it for 10GbE testing, but Spirent declined to help with a press/analyst license. Equipment like this 800GbE box is eye-wateringly expensive.
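To put the PCIe comparison in numbers, here is a rough back-of-the-envelope sketch. It assumes 32 GT/s per PCIe Gen5 lane, 128b/130b line encoding, and one direction of traffic, and it ignores TLP/DLLP packet overhead, so real-world PCIe throughput would be lower still. The constant and function names are our own, for illustration only.

```python
# Rough comparison of a PCIe Gen5 x16 slot vs. a single 800GbE port.
# Assumptions: 32 GT/s per lane, 128b/130b encoding, one direction only,
# and no TLP/DLLP protocol overhead (actual PCIe throughput is lower).

PCIE_GEN5_GT_PER_LANE = 32.0      # gigatransfers per second, per lane
PCIE_LANES = 16                   # x16 slot
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b encoding (PCIe Gen3 and later)
ETHERNET_800G_GBPS = 800.0        # nominal 800GbE port speed


def pcie_gen5_x16_gbps() -> float:
    """Approximate usable bit rate of a PCIe Gen5 x16 link, per direction, in Gbps."""
    return PCIE_GEN5_GT_PER_LANE * PCIE_LANES * ENCODING_EFFICIENCY


if __name__ == "__main__":
    pcie = pcie_gen5_x16_gbps()
    print(f"PCIe Gen5 x16: ~{pcie:.1f} Gbps per direction")
    print(f"800GbE port:   {ETHERNET_800G_GBPS:.1f} Gbps")
    print(f"Ratio:         ~{ETHERNET_800G_GBPS / pcie:.2f}x")
```

Under these assumptions a Gen5 x16 slot tops out at roughly 504 Gbps per direction, so a single 800GbE port needs about 1.6 times that, which is why dedicated test hardware is used instead of a server with a NIC.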

The company also had a larger chassis in the lab that was used for 100GbE testing. As a switch vendor, Marvell needs this kind of gear to validate performance under different conditions.

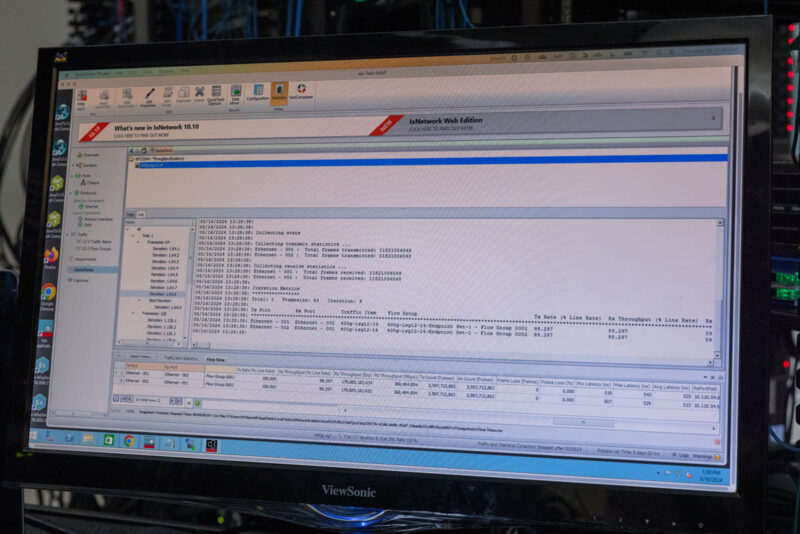

Here is a dual 400GbE example running at around 99.3% line rate over the Teralynx switches.
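For a sense of what "around 99.3% line rate" means in absolute terms, the sketch below converts a percentage-of-line-rate reading into delivered bandwidth. It assumes the percentage is taken against the nominal 400Gbps port speed; the function name is hypothetical and not part of any tester's software.

```python
# Convert a "% of line rate" reading into delivered throughput.
# Assumption: the percentage applies to the nominal port speed (400 Gbps here).


def delivered_gbps(port_speed_gbps: float, pct_line_rate: float, ports: int = 1) -> float:
    """Aggregate delivered throughput across `ports` ports at a given % of line rate."""
    return port_speed_gbps * (pct_line_rate / 100.0) * ports


if __name__ == "__main__":
    per_port = delivered_gbps(400.0, 99.3)
    total = delivered_gbps(400.0, 99.3, ports=2)
    print(f"Per 400GbE port: ~{per_port:.1f} Gbps")
    print(f"Both ports:      ~{total:.1f} Gbps")
```

Under that assumption, the run works out to roughly 397 Gbps per port, or about 794 Gbps across both ports.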

We had originally planned to film a big segment in the lab, but after we put on ear protection and my Apple Watch buzzed with a 95dB noise warning, we decided to skip that idea.

Still, it was great to see the hardware, but the next question is, “Why?”
