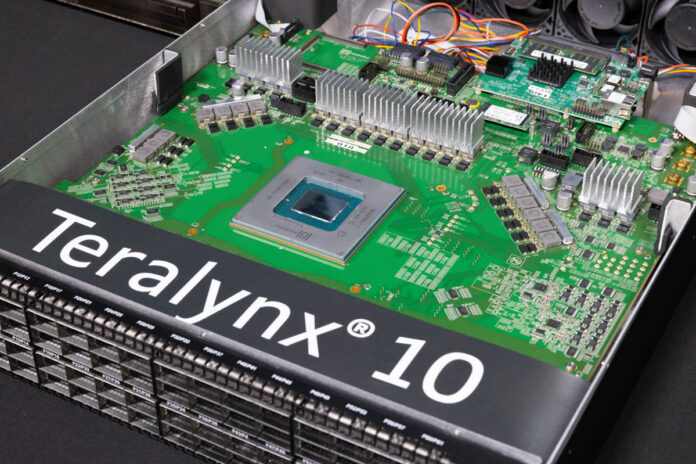

Today, we are going to look inside a massive switch with 64 ports of 800GbE. The Marvell Teralynx 10 is a 51.2Tbps switch from the generation we expect to see in AI clusters in 2025. As part of “big” week on STH, this is a big network switch, which makes it fun to look at.

Of course, for this one, we also have a video:

Feel free to open this in its own tab, window, or app for the best viewing experience. Also, thank you to Marvell for giving us access to the switch and labs at its headquarters, and for sponsoring this so we could travel up and make it.

Marvell Teralynx Background

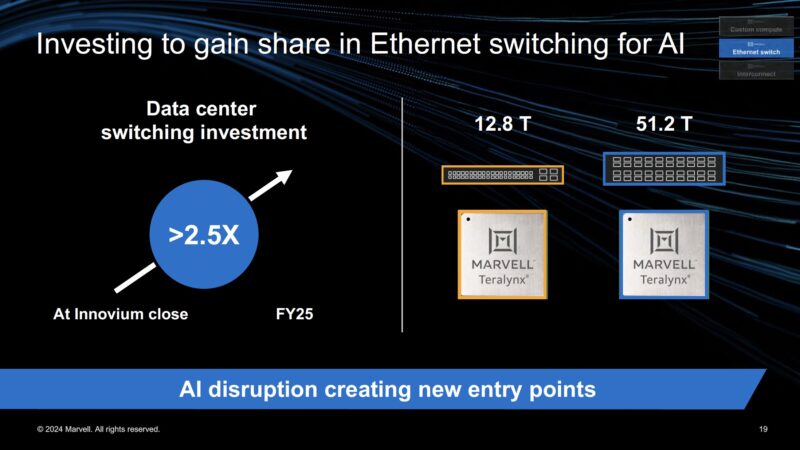

Here is a quick bit on how we got here. Marvell acquired Innovium in 2021. That happened after our Inside an Innovium Teralynx 7-based 32x 400GbE Switch piece, where we took apart the startup’s 12.8Tbps (32-port 400GbE) generation.

Innovium has been the most successful switch silicon startup of its generation. For context, in 2019 Intel announced it would acquire Barefoot Networks for its Ethernet switch silicon, and by its Q4 2022 earnings, Intel announced it would divest that Ethernet switching business. Broadcom is huge in the merchant switch chip business, and Innovium/Marvell has made inroads into hyper-scale data centers where others have spent a lot of money and failed.

Given the scale of AI cluster build-outs, the 51.2Tbps switch chip generation will be big. We asked Marvell if we could update our 2021 Teralynx 7 tear-down and look at the new Marvell Teralynx 10.

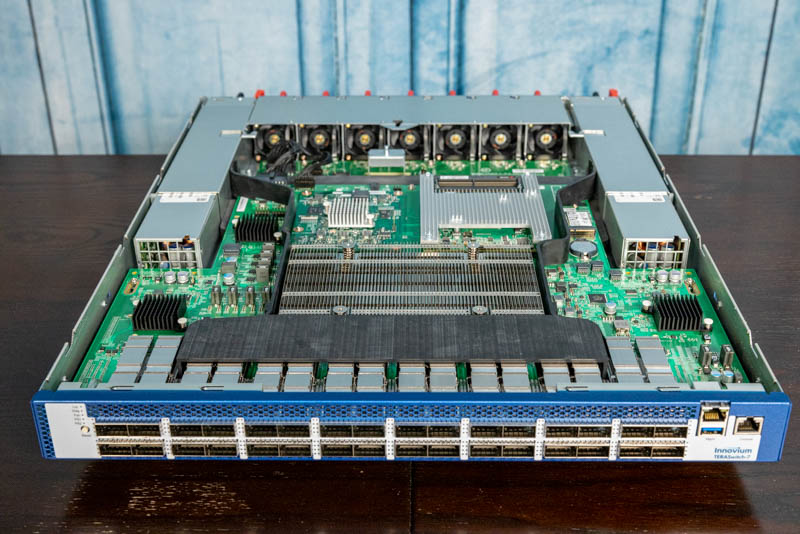

Marvell Teralynx 10 Switch External Overview and OSFP Optics

Taking a look at the front of the switch, we see a 2U chassis that is mostly made up of OSFP cages and airflow channels.

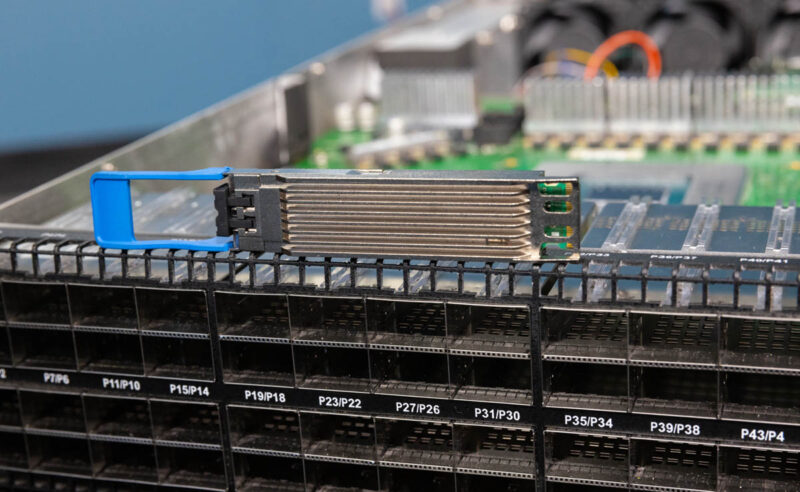

There are a total of 64 OSFP ports, each running at 800Gbps.
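As a quick sanity check on the headline number, here is a minimal sketch that multiplies port count by per-port speed, using only figures from this article (64x 800GbE for Teralynx 10 and 32x 400GbE for the Teralynx 7 generation). The function name `aggregate_tbps` is just an illustrative helper.

```python
# Aggregate front-panel bandwidth = port count x per-port speed.
# Port counts and speeds are taken from the article.

def aggregate_tbps(ports: int, gbps_per_port: int) -> float:
    """Return total bandwidth in Tbps (1 Tbps = 1000 Gbps)."""
    return ports * gbps_per_port / 1000


teralynx10 = aggregate_tbps(64, 800)  # 64x 800GbE
teralynx7 = aggregate_tbps(32, 400)   # 32x 400GbE (previous generation)

print(f"Teralynx 10: {teralynx10} Tbps")
print(f"Teralynx 7:  {teralynx7} Tbps")
print(f"Generational increase: {teralynx10 / teralynx7:.0f}x")
```

The arithmetic works out to 51.2Tbps versus 12.8Tbps, a 4x jump in one generation.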

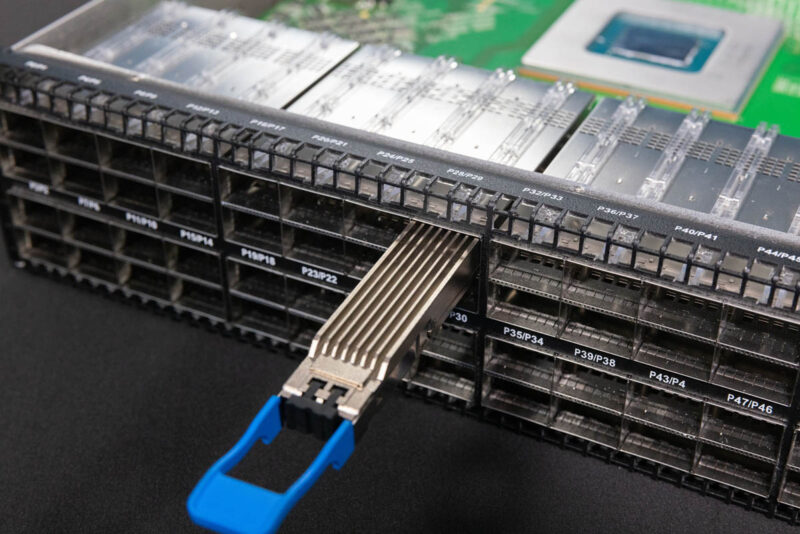

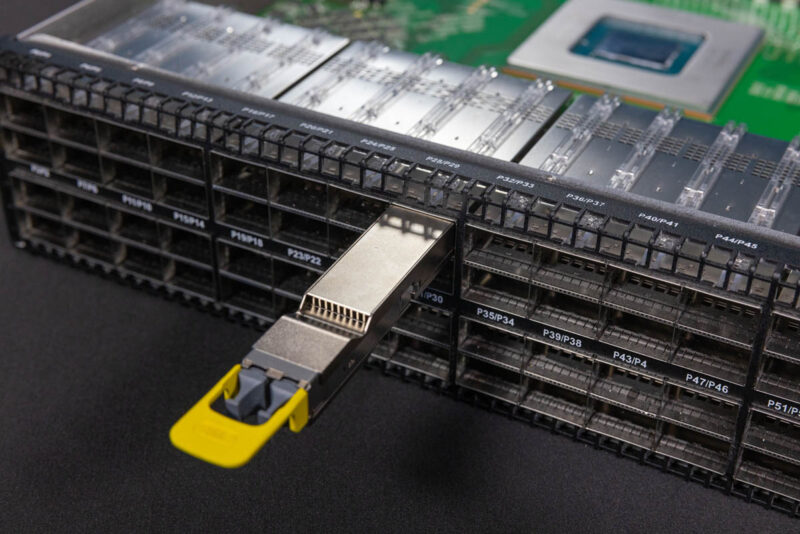

Each of these cages takes an OSFP pluggable optic. These modules tend to be a bit larger than what you may be used to from, for example, the QSFP+/QSFP28 generations.

Marvell brought a few optics along since, after purchasing Inphi, it also sells many of the components inside these optical modules. We have gone into that a few times, such as in the Marvell COLORZ 800G Silicon Photonics Module and Orion DSP for Next-Gen Networks piece. This is the type of switch where those optics can be used. Another aspect is that the ports can run at speeds other than just 800Gbps.
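To illustrate what running ports at other speeds means for port count, here is a minimal sketch of breakout arithmetic. The article does not list the supported modes; the 2x400G, 4x200G, and 8x100G splits below are hypothetical examples, as is the helper `logical_ports`. The point is that total bandwidth stays at 51.2Tbps while the number of logical ports grows.

```python
# Sketch of port breakout arithmetic for a 64-port 800G switch.
# The breakout modes below are HYPOTHETICAL examples; the article only
# says the ports can run at speeds other than 800Gbps.

CAGES = 64          # OSFP cages on the front panel (from the article)
CAGE_GBPS = 800     # physical bandwidth per cage (from the article)

# Hypothetical modes: name -> (logical ports per cage, speed per logical port)
BREAKOUT_MODES = {
    "1x800G": (1, 800),
    "2x400G": (2, 400),
    "4x200G": (4, 200),
    "8x100G": (8, 100),
}


def logical_ports(lanes: int, speed_gbps: int) -> int:
    """Return total logical ports if every cage uses the same breakout."""
    if lanes * speed_gbps > CAGE_GBPS:
        raise ValueError("breakout exceeds the cage's 800Gbps")
    return CAGES * lanes


for name, (lanes, speed) in BREAKOUT_MODES.items():
    ports = logical_ports(lanes, speed)
    total_tbps = ports * speed / 1000
    print(f"{name}: {ports} ports, {total_tbps} Tbps total")
```

Each mode trades per-port speed for port count; the ValueError guard simply rejects any hypothetical split that would exceed a cage's 800Gbps.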

One of the cool things we saw was a number of long-range optical modules. These can push 800Gbps over distances of hundreds of kilometers, if not more. They are cool because they fit into the OSFP cages, so one does not need one of the big long-range optical boxes the industry has used for many years.

OSFP has another impact on the switch. Since OSFP modules can have their own integrated heatsinks, the cages themselves do not have heatsinks. In some of the 100GbE and 400GbE switches we have taken apart, the optical cages needed heatsinks because the modules use so much power.

On the right side of the switch, we have the management and console ports.

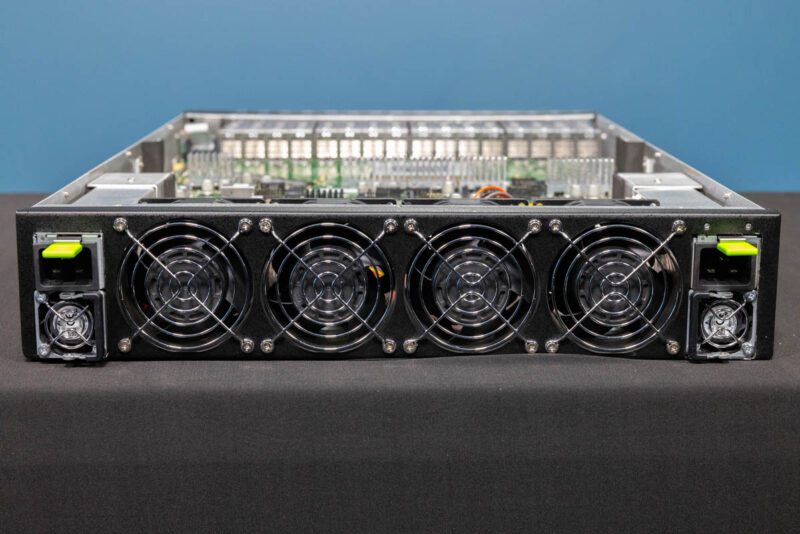

Looking at the rear of the switch, there are fans and power supplies (with their own fans).

Given that this switch can have something like 1.8kW of optics installed, plus a 500W switch chip, we should expect power supplies rated for over 2kW.
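The power estimate above is simple addition, but it is worth writing down. This is a rough sketch using the article's round numbers (about 1.8kW of optics and a 500W switch chip). `OTHER_W`, standing in for fans, the management CPU, and conversion losses, is a hypothetical placeholder left at zero, so the result is only a lower bound.

```python
# Rough power-budget sketch using the article's round numbers.
# OTHER_W (fans, management CPU, conversion losses) is a HYPOTHETICAL
# placeholder left at 0, so the result is a lower bound.

PORTS = 64
OPTICS_W = 1800      # "something like 1.8kW of optics" (from the article)
SWITCH_CHIP_W = 500  # 500W switch chip (from the article)
OTHER_W = 0          # hypothetical: unknown remaining system load


def min_supply_watts(optics_w: float, chip_w: float, other_w: float = 0) -> float:
    """Lower bound on the power the supplies must deliver."""
    return optics_w + chip_w + other_w


total = min_supply_watts(OPTICS_W, SWITCH_CHIP_W, OTHER_W)
per_module = OPTICS_W / PORTS

print(f"Minimum load: {total} W")
print(f"Average per OSFP module: {per_module} W")
```

That comes to at least 2.3kW, or an average of roughly 28W per OSFP module, which is why supplies rated above 2kW are the expectation.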

Next, let us get inside the switch to see what is powering these OSFP cages.
