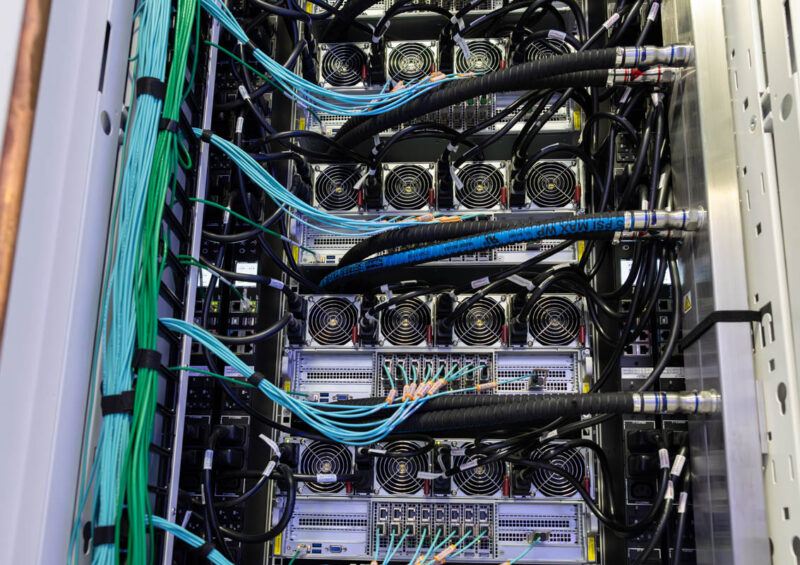

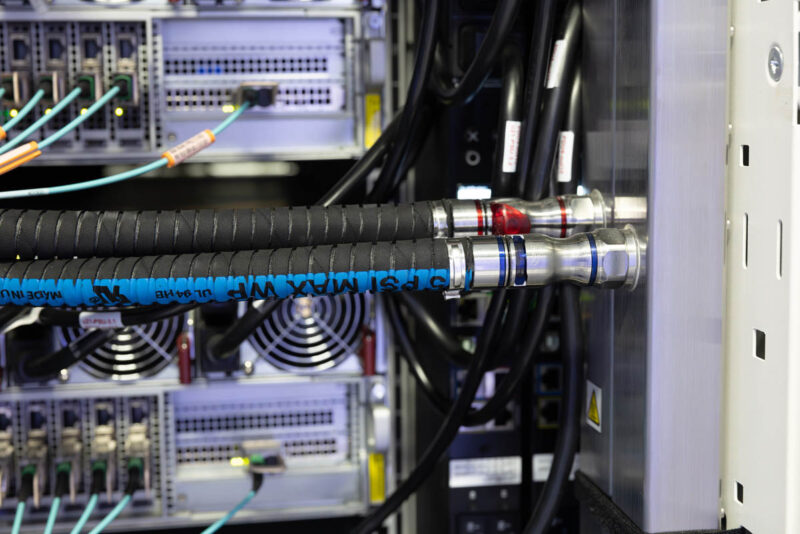

At the back of the racks, we see fiber for the 400GbE connections to the GPU and CPU complexes, as well as copper for the management network. These NICs also sit on their own tray so they can be swapped easily without removing the chassis, but here that tray is at the rear of the chassis. Each server has four hot-swappable power supplies fed via 3-phase PDUs.
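To put a rough number on what that rear fiber carries, here is a small sketch of the per-node arithmetic. It assumes one 400GbE link per GPU for eight GPUs, one more 400GbE link for the CPU complex, and a 1GbE copper management port. Those counts are assumptions for illustration, and the constant and function names are hypothetical.

```python
# Hypothetical per-node link counts (assumptions, adjust if a node differs).
GPU_LINKS = 8      # one 400GbE link per GPU (assumed)
CPU_LINKS = 1      # one 400GbE link for the CPU complex (assumed)
LINK_GBPS = 400    # line rate of each data link in Gbps
MGMT_GBPS = 1      # copper management port (assumed 1GbE)


def node_data_bandwidth_gbps(gpu_links: int = GPU_LINKS,
                             cpu_links: int = CPU_LINKS,
                             link_gbps: int = LINK_GBPS) -> int:
    """Aggregate line rate of the 400GbE data links on one node, in Gbps."""
    return (gpu_links + cpu_links) * link_gbps


if __name__ == "__main__":
    total = node_data_bandwidth_gbps()
    print(f"data links: {GPU_LINKS + CPU_LINKS} x {LINK_GBPS}GbE = {total / 1000} Tbps")
    print(f"plus {MGMT_GBPS}GbE management (not counted above)")
```

This counts raw line rate only; usable throughput after protocol overhead will be lower.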

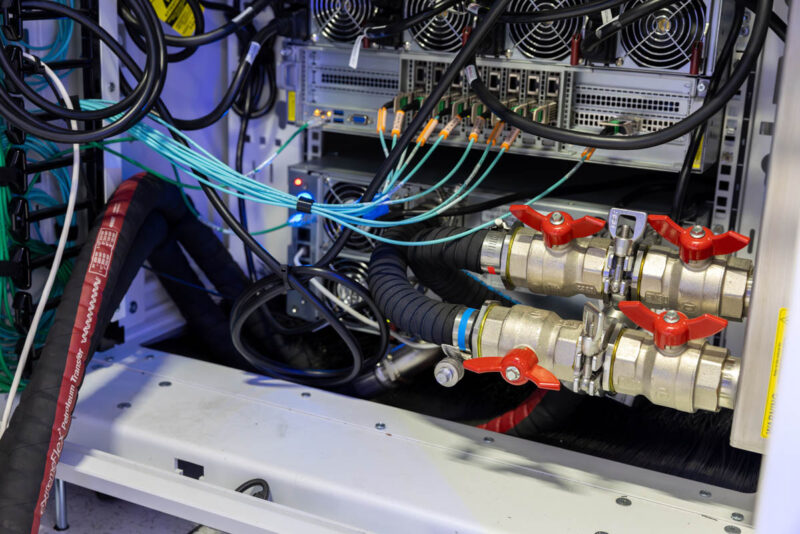

At the bottom of the rack, we have the CDUs, or coolant distribution units. These CDUs are essentially giant heat exchangers. In each rack, a fluid loop feeds all of the GPU servers. We say fluid rather than water here because these loops usually need a fluid tuned to the materials found in the liquid cooling blocks, tubes, manifolds, and so forth. We have articles and videos on how data center liquid cooling works if you want to learn more about the details of CDUs and fluids.
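A CDU's job can be summarized with the steady-state sensible heat balance Q = ṁ · c_p · ΔT: the heat a fluid stream carries equals its mass flow times its specific heat times its temperature rise. The sketch below applies that balance to size the facility-side water flow. The rack heat load and temperature rise are hypothetical illustration values, not Colossus figures, and the helper names are made up for this example. Because the rack loop uses a tuned fluid rather than plain water, the specific heat is a parameter rather than a constant.

```python
# Steady-state sensible heat balance: Q = m_dot * c_p * dT.
# All numbers below are illustrative assumptions, not Colossus measurements.

WATER_CP = 4186.0  # J/(kg*K), approximate specific heat of liquid water


def heat_removed_w(mass_flow_kg_s: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Heat carried away by a fluid stream that warms by delta_t_k kelvin."""
    return mass_flow_kg_s * cp_j_per_kg_k * delta_t_k


def required_flow_kg_s(heat_w: float, cp_j_per_kg_k: float, delta_t_k: float) -> float:
    """Mass flow needed to carry heat_w with a temperature rise of delta_t_k."""
    return heat_w / (cp_j_per_kg_k * delta_t_k)


if __name__ == "__main__":
    rack_heat_w = 100_000.0  # hypothetical heat picked up by the rack loop
    # Ignoring losses, the heat the rack loop collects crosses the CDU
    # into facility water; assume the facility water warms by 10 K.
    flow = required_flow_kg_s(rack_heat_w, WATER_CP, 10.0)
    print(f"facility water flow needed: {flow:.2f} kg/s")
```

The same function works on the rack side of the CDU if you plug in the coolant's own specific heat and its supply-to-return temperature difference.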

Each CDU has redundant pumps and power supplies, so if a pump or a power supply fails, it can be replaced in the field without shutting down the entire rack. Having replaced a pump in one of these before, I thought about doing it at Colossus. Then I decided that might not be the wisest idea, since we already had footage of me replacing a pump last year.

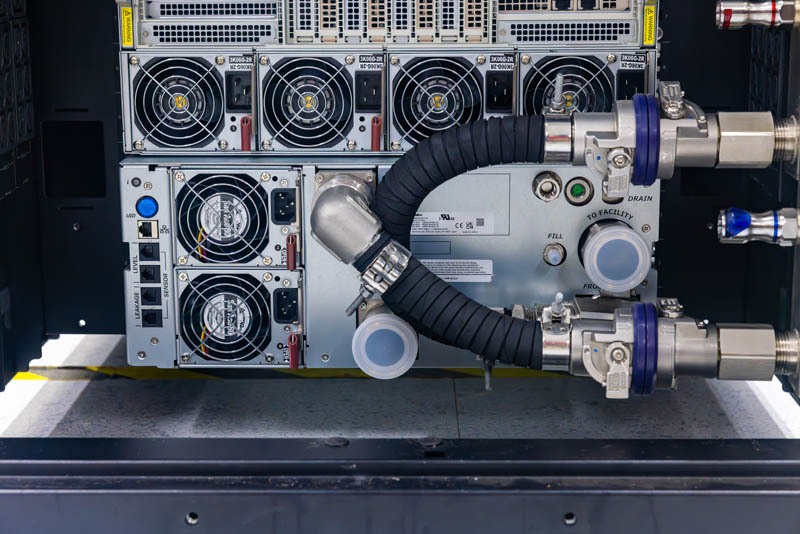

The xAI racks have a lot going on, but while filming the 2023 piece, we got a clearer shot of the Supermicro CDU. Here, you can see the input and output connections to facility water and to the rack manifold. You can also see the hot-swappable redundant power supplies for each CDU.

Here is the CDU in a Colossus rack, partly hidden by various tubes and cables.

On each side of the Colossus racks, we have the 3-phase PDUs as well as the rack manifolds. Each front-mounted 1U manifold that feeds a 4U Universal GPU system is, in turn, fed by the rack manifold that connects to the CDU. All of these components use red and blue fittings. Luckily, this is a familiar color-coding scheme, with red for the warmer portions of the loop and blue for the cooler ones.

You have likely noticed from these photos that there are still fans here. Many liquid-cooled servers use fans to cool components like the DIMMs, power supplies, low-power baseboard management controllers, NICs, and so forth. At Colossus, each rack needs to be cooling-neutral to the data hall, removing the heat it puts into the air, so that massive air handlers do not need to be installed. The fans in the servers pull cooler air in from the front of the rack and exhaust it at the rear of the server. From there, the air is pulled through rear door heat exchangers.

Rear door heat exchangers may sound fancy, but they are very similar to a car radiator. They take exhaust air from the rack and pass it through a finned heat exchanger, or radiator. Liquid flows through that heat exchanger, just as it does through the servers, and the heat can then be transferred to the facility water loops. Fans on the back of the units pull the air through. Unlike most car radiators, these have a really slick trick: in normal operation, they light up blue. They can also light up in other colors, such as red if there is an issue requiring service. When I visited the site under construction, I certainly did not turn on a few of these racks, but it was neat to watch the heat exchangers cycle through different colors as they were turned on and the racks came online.

These rear door heat exchangers serve another important design purpose in the data halls. They remove not only the residual heat from Supermicro’s liquid-cooled GPU servers but also the heat from the storage, CPU compute clusters, and networking components.
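The cooling-neutral idea can be sketched as a simple heat budget. Treat a rack's entire electrical load as heat, split it into the part captured by cold plates (which goes to the CDU) and the part the fans blow out the back (which the rear door must absorb), and check whether anything is left over for the data hall. This is a simplified model under stated assumptions: the `Rack` class, the liquid fractions, and the fixed rear door capacity are all hypothetical. A real rear door's capacity depends on airflow and water temperature.

```python
from dataclasses import dataclass


@dataclass
class Rack:
    """Hypothetical rack heat budget; every figure is an illustrative assumption."""
    it_load_w: float        # total electrical load, assumed to end up entirely as heat
    liquid_fraction: float  # share captured by cold plates into the CDU loop (0 for air-only racks)
    rdhx_capacity_w: float  # heat the rear door heat exchanger can move into liquid

    def air_side_heat_w(self) -> float:
        """Heat the server fans exhaust out the back (DIMMs, PSUs, BMCs, NICs, ...)."""
        return self.it_load_w * (1.0 - self.liquid_fraction)

    def heat_to_hall_w(self) -> float:
        """Heat left for the data hall's air handling after the rear door."""
        return max(0.0, self.air_side_heat_w() - self.rdhx_capacity_w)

    def is_cooling_neutral(self) -> bool:
        """True if the rear door absorbs all of the air-side heat."""
        return self.heat_to_hall_w() == 0.0


if __name__ == "__main__":
    # A mostly liquid-cooled GPU rack and an air-cooled storage rack,
    # both behind rear doors with the same hypothetical capacity.
    gpu_rack = Rack(it_load_w=100_000, liquid_fraction=0.8, rdhx_capacity_w=30_000)
    storage_rack = Rack(it_load_w=20_000, liquid_fraction=0.0, rdhx_capacity_w=30_000)
    for name, rack in (("GPU", gpu_rack), ("storage", storage_rack)):
        print(name, round(rack.air_side_heat_w()), rack.is_cooling_neutral())
```

The storage rack case mirrors the point above: with no cold plates at all, the rear door alone can still keep an air-cooled rack neutral as long as its capacity covers the rack's load.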

In the latter part (2017-2021) of my nearly decade-long stint working in the primary data center for an HFT firm, we moved from air-cooled servers to immersion cooling.

On the server side, that basically meant finding a vendor willing to warranty servers cooled this way, removing the fans, replacing the thermal paste with a special type of foil, and (eventually) using power cords with a more expensive outer coating (so they didn’t turn rock hard from the mineral oil cooling fluid).

On the switch side (25GbE), though, there was no way the network team was going to let me put their Arista switches in the vats. That made for some awkwardly long cabling and, eventually, a problem with oil wicking out of the vats via the twinax cabling (yuck!).

I see immersion cooling as a crude (but effective) “bridge technology” between the past, with 100% air cooling for mass-market scale-out servers, and a future heavy on plumbing connections and water blocks.

This is extremely impressive.

However, coming online in 122 days is not without issues. For example, this facility uses at least 18 unlicensed/unpermitted portable methane gas generators that are of significant concern to the local population, one that already struggles with high asthma rates and air quality alerts. There is also some question as to how well the local utility can support the water requirements of liquid cooling at this scale. One of the big concerns about liquid cooling in datacenters is the impact on the water cycle. Water consumed in typical uses ends up as wastewater that feeds back to treatment facilities, where it returns to circulation relatively quickly.

Water-based cooling systems used in datacenters rely on evaporation, which has a much longer cycle: atmosphere -> clouds -> rainwater -> water table.

Other clusters and datacenters used by the likes of Meta, Amazon, Google, Microsoft, etc. take the time and care to minimize these kinds of environmental impacts.

Again, very impressive from a technical standpoint, but throwing this together to get it online in record time should not come at the expense of the local population for a billionaire’s arbitrary bragging rights.

Patrick, great video. Before you were born, there was a terrific movie (based on a book) titled Colossus: The Forbin Project. https://www.imdb.com/title/tt0064177/ Highly recommended.

There are not 9 links per server, only 8. One is for management…

Musk is a shitty person and should not run companies that the USA depends on strategically, but yeah, it’s a cool datacenter.

What an amazing cluster and datacenter. Super cool that you got to tour it and show all of this to us!

I hope, for Supermicro’s sake, that the bill was prepaid, unlike how X/Twitter/Musk handled Wiwynn.

Interesting data centre. Too bad Musk is behind it. What a jerk.

100% agreed on the Musk comments. So much god worship out there overlooking the accomplishments of Shotwell, Straubel, Eberhard, Tarpenning, and countless others. Interesting article though ;-)

What would be even cooler than owning 100k GPUs would be putting out any AI products, models, or research that were interesting and impactful. xAI is stillborn as a company because no researcher with a reputation to protect is willing to join it, the same reason Tesla’s self-driving models make no significant progress.

Why do so many people hate Musk? Anyway, his giant xAI DC is an amazing project.

> There are not 9 links per server, only 8. One is for management…

On each GPU node: one 400GbE link for each of 8 GPUs, plus another 400GbE for the CPU, plus gigabit IPMI.

Good article. I’m wondering why they chose x86-based CPUs instead of Grace CPUs?

To Skywalker: I guess it is most likely due to the schedule (H100 rather than a Blackwell SKU) and the X software environment.

With Elon Musk, the news is fun and interesting to watch! :D

What he is doing is amazing!

The most impressive part is that they will soon double that capacity with the new Nvidia H200 batch deployment.

What the video didn’t explain is whether DX cooling, groundwater or water-to-water heat exchangers, air-to-water heat exchangers, etc. are used for the liquid cooling. I’m not surprised that very limited information was given.

It is interesting to note that this data center is located near the Mississippi River, a constant and reliable water source, as well as near high-megawatt power generation facilities. The same goes for complexes such as “The Bumblehive” (DX cooling) near Utah Lake, again a large, reliable source of water. That complex has its own power substation/direct power feed from generation facilities.

Nobody here is concerned about a superintelligence being developed in this facility. Instead, the dire concern is pollution, lol.

We have no idea what we’re creating. The lack of awareness is insane.

What OS is everything running on?