At SC21, Patrick, our Editor-in-Chief, was able to see an updated version of a system that STH has been tracking for years. The Ingrasys ES2000 is the latest evolution of an NVMe-oF chassis. These systems use Ethernet-based SSDs like the Kioxia EM6 25GbE NVMe-oF SSD we recently covered.

Ingrasys ES2000 for Kioxia EM6 NVMe-oF SSDs at SC21

First, you may have already seen this system in the Top 10 Showcases of SC21 video. The segment on the Kioxia EM6 NVMe-oF SSD and the Ingrasys system starts at 05:08.


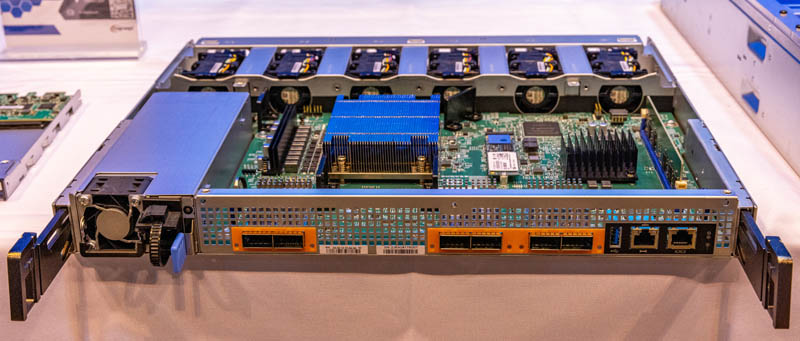

The front of the chassis is a 24-bay design with heavier-duty lever latches than most servers use.

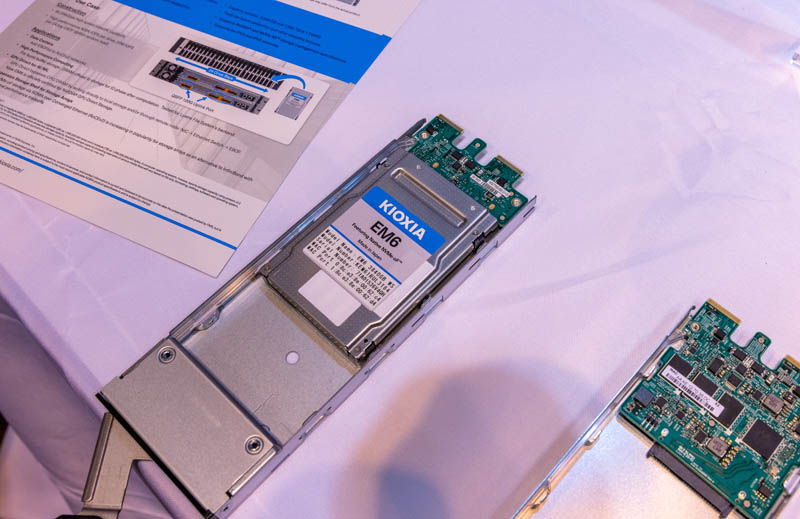

Drives plug into the chassis via a hot-swap backplane. What is really interesting here is that the connection uses EDSFF connectors. Here is the Kioxia EM6 drive in a tray.

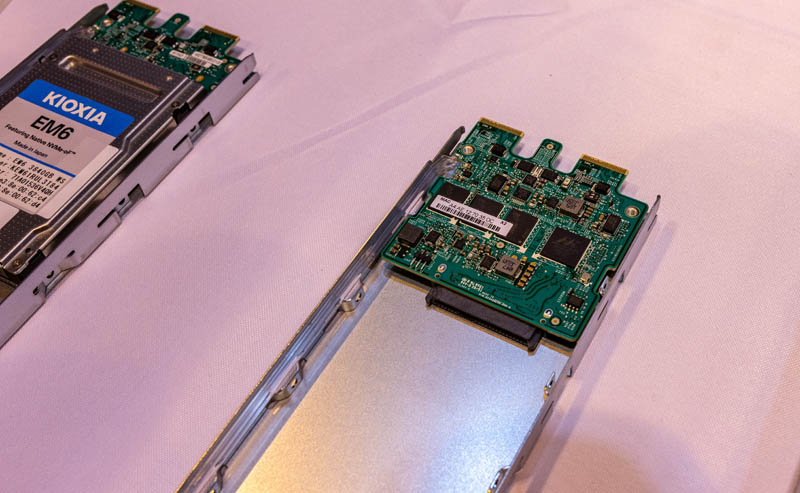

One reason these trays are so deep is to leave room for an interposer/adapter. This one uses a Marvell controller (88SN3400 from the looks of it) that takes an NVMe SSD and turns it into an NVMe-oF SSD with 25GbE as the output. The adapter card also has onboard DRAM, which is interesting in itself.

Behind the drives are the two controller nodes. Each controller node connects to one of the two EDSFF connectors, which provides dual 25GbE paths to each drive.

Here is an example of the controller backplane with a row of EDSFF connectors. You can read more about the E1 and E3 EDSFF form factors here. This design allows the Ingrasys chassis to handle not only NVMe-oF SSDs but also E1 and E3 SSDs.

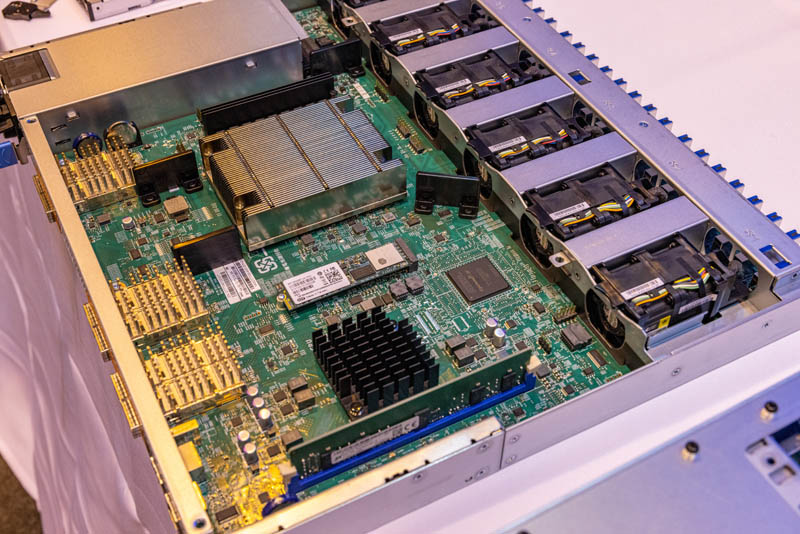

Here is a look at the controller node. The big chip is the Marvell 98EX5630 Ethernet switch running SONiC. The small heatsink covers the Intel Atom C3538 CPU, which is paired with an M.2 SSD and 8GB of DRAM.

Each controller node has six ports on the rear, designed so that it can plug into existing 100GbE infrastructure. Six 100GbE uplinks provide 600Gbps, and 24 drives at 25GbE each also total 600Gbps, so drive bandwidth and uplink bandwidth are matched at a 1:1 ratio. The design is also intended to move to 200GbE in the future.
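The 1:1 figure is simple arithmetic, but it is worth spelling out as a ratio of drive-facing bandwidth to uplink bandwidth for a single controller node. This is a minimal sketch using only the numbers quoted above (24 drives at 25GbE, six 100GbE uplinks); the function name `oversubscription` is our own, hypothetical, and not part of any Ingrasys tooling.

```python
# Back-of-the-envelope bandwidth check for ONE controller node, using the
# figures quoted in the article: 24 drives x 25GbE, 6 uplinks x 100GbE.

DRIVES = 24
DRIVE_LINK_GBPS = 25   # each drive has one 25GbE path to this node
UPLINKS = 6
UPLINK_GBPS = 100      # current-generation 100GbE uplinks


def oversubscription(drives: int, drive_gbps: int, uplinks: int, uplink_gbps: int) -> float:
    """Return drive-facing bandwidth divided by uplink bandwidth.

    1.0 means 1:1 (no oversubscription); values above 1.0 mean the drives
    can, in aggregate, push more traffic than the uplinks can carry.
    """
    downstream = drives * drive_gbps
    upstream = uplinks * uplink_gbps
    return downstream / upstream


if __name__ == "__main__":
    down = DRIVES * DRIVE_LINK_GBPS
    up = UPLINKS * UPLINK_GBPS
    print(f"drive-facing: {down} Gbps, uplinks: {up} Gbps")
    ratio = oversubscription(DRIVES, DRIVE_LINK_GBPS, UPLINKS, UPLINK_GBPS)
    print(f"ratio: {ratio:.2f}:1")
```

Plugging in other port speeds shows how a hypothetical future configuration would change the balance; we are not assuming any particular 200GbE layout here.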

The chassis supports two of these nodes, which gives each drive a path to two different switch nodes, each with six uplink ports to the network. The idea is that servers can mount drives over NVMe-oF and use them directly without needing local storage. This really highlights the importance of DPUs: one would want to manage how much storage each server gets, and a DPU provides an endpoint in each server for that provisioning and management.
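The value of the second controller node is redundancy: every drive keeps a path to the network if one node goes away. The sketch below models that dual-path layout as a set of (bay, node) links. The node names `node_a` and `node_b` and the helper functions are hypothetical; this is a toy model of the topology described above, not how the ES2000 actually tracks its paths.

```python
from itertools import product

# Hypothetical names for the chassis's two controller (switch) nodes.
NODES = ("node_a", "node_b")
BAYS = range(24)  # 24 front drive bays


def build_links() -> set[tuple[int, str]]:
    """Each bay has one 25GbE link to each controller node (dual path)."""
    return set(product(BAYS, NODES))


def reachable_bays(links: set[tuple[int, str]], failed_nodes=frozenset()) -> set[int]:
    """Bays that still have at least one link to a healthy controller node."""
    return {bay for bay, node in links if node not in failed_nodes}


if __name__ == "__main__":
    links = build_links()
    print("healthy:", len(reachable_bays(links)), "bays reachable")
    # Losing a whole controller node leaves every drive reachable via the other.
    print("node_a down:", len(reachable_bays(links, {"node_a"})), "bays reachable")
    # If bay 7 has also lost its link to node_b, that one drive drops out.
    degraded = links - {(7, "node_b")}
    print("node_a down + bay 7 link fault:", len(reachable_bays(degraded, {"node_a"})))
```

A drive only becomes unreachable when both of its paths fail, which is the property the two-node design is meant to provide.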

Final Words

This is ultra-cool technology, and we wish we could show you more of it. Hopefully, we will be able to in 2022. The ability to remove traditional servers from the loop is tantalizing, especially with DPUs. Stay tuned as this year winds down.
