Power Consumption and Noise

The unit comes with a 12V DC power input, but it can also be powered through the USB Type-C port.

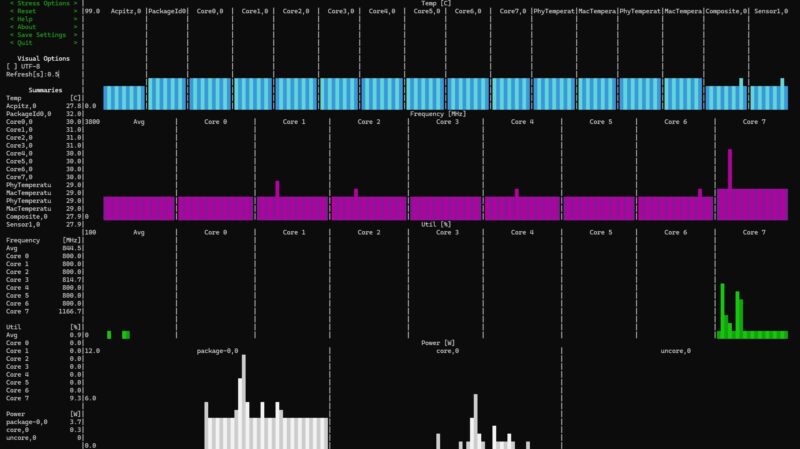

At idle, the N100 version drew 3-5W at the package and 10-13W at the system level.

The N305 was 4-5W at the package and 10-14W at the system level.

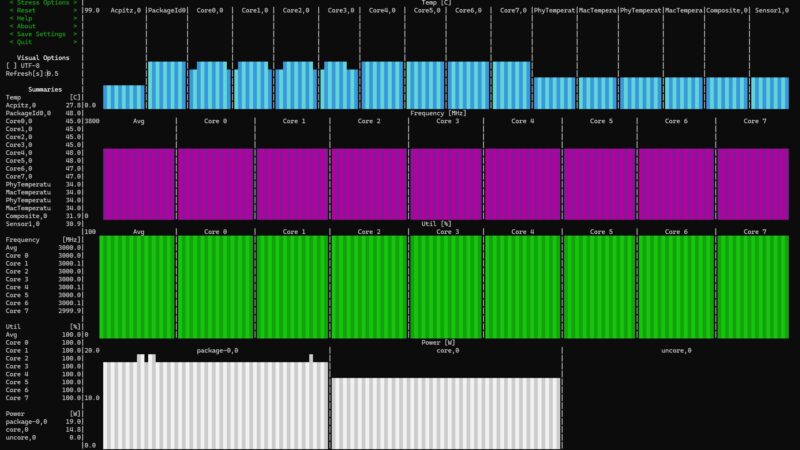

Under load, the N100 version drew 10-12W at the package and 22-25W at the system level. Even as a fanless system, it ran stress-ng for six hours at 2.9GHz without issue.

The fan-cooled Intel Core i3-N305 version raised the noise floor in our studio from 34dBA to 38-40dBA, so the fans are not silent. Under load, power consumption rose to around 19W at the package and 34-36W at the wall. Like the N100 system, the N305 held this level and did not downclock as many mini PCs do.

Key Lessons Learned

Labels, this system needs them. Otherwise, it is built very well. For those who just want 10Gbase-T and do not want to fiddle with SFP+ to 10Gbase-T adapters, this works well. It also uses newer 10Gbase-T NICs that can run at 2.5GbE and 5GbE speeds, whereas some of the ultra-cheap SFP+ mini PCs out there can only do 1GbE or 10GbE because they use ancient NICs to keep costs down. As another benefit, the Marvell AQC113C is generally much better than those older NICs in terms of power consumption.

The R2 Max units are also much larger than nano systems like the iKoolCore R2 that we have previously seen from the company.

Alex worked out the dimensions, and the iKoolCore R2 Max takes up slightly less space than an Apple Mac mini M4. Both have 10Gbase-T. If you combine this with the MikroTik CRS304-4XG-IN and a NAS like the TerraMaster F8-SSD Plus, you can build a very low-power, quiet 10GbE setup.

Perhaps the biggest takeaway is that iKoolCore seems to be listening to its users and iterating. Many asked for 10Gbase-T and a fanless design when we reviewed the R2, and now we have the R2 Max with 10Gbase-T, albeit in a larger chassis.

Final Words

Dual 10Gbase-T, dual 2.5GbE, dual M.2, and a fanless N100 or fan-cooled N305 make for a very compelling system. If you want a 10Gbase-T mini PC as a router or as a small virtualization box (or for a virtualized router), the iKoolCore R2 Max is great. The platform does have limitations: it offers only up to nine PCIe Gen3 lanes, four of which go to the two 10Gbase-T NICs and two to the 2.5GbE NICs, leaving three for everything else. Still, many people already have 10GbE NAS units, and perhaps that is the idea here: use the local drives for booting and rely on network storage for a virtualization setup.
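To put that lane limitation in rough numbers, here is a minimal Python sketch of the lane budget. It assumes each 10Gbase-T NIC gets a Gen3 x2 link and each 2.5GbE NIC gets a Gen3 x1 link (the review only says four and two lanes in total, respectively). It uses the standard Gen3 figures of 8 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. All figures are per direction.

```python
# Rough PCIe Gen3 lane budget, based on the review's figures.
# ASSUMPTION: 10Gbase-T NICs are x2 each and 2.5GbE NICs are x1 each.

GEN3_GTPS = 8.0            # PCIe Gen3 raw signaling rate per lane, GT/s
GEN3_ENCODING = 128 / 130  # 128b/130b line encoding efficiency


def gen3_link_gbps(lanes: int) -> float:
    """Approximate Gen3 bandwidth in Gbit/s per direction (no TLP/protocol overhead)."""
    return lanes * GEN3_GTPS * GEN3_ENCODING


TOTAL_LANES = 9  # "up to 9 PCIe Gen3 lanes" per the review

# name -> (assumed lane count, Ethernet line rate in Gbit/s)
links = {
    "10Gbase-T NIC #1": (2, 10.0),
    "10Gbase-T NIC #2": (2, 10.0),
    "2.5GbE NIC #1": (1, 2.5),
    "2.5GbE NIC #2": (1, 2.5),
}

used = sum(lanes for lanes, _ in links.values())
remaining = TOTAL_LANES - used

for name, (lanes, line_rate) in links.items():
    link = gen3_link_gbps(lanes)
    print(f"{name}: Gen3 x{lanes} ~ {link:.2f} Gbit/s vs {line_rate} Gbit/s line rate")

print(f"Lanes left for M.2 storage and everything else: {remaining}")

# Storage comparison: a Gen3 x1 link versus the x4 link most NVMe drives expect (GB/s)
print(f"Gen3 x1 ~ {gen3_link_gbps(1) / 8:.2f} GB/s, Gen3 x4 ~ {gen3_link_gbps(4) / 8:.2f} GB/s")
```

Under these assumptions, each NIC's link has headroom over its Ethernet line rate, while an NVMe drive on a Gen3 x1 link is capped at roughly a quarter of the sequential bandwidth a Gen3 x4 link can carry.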

Overall, this is a very cool box. It costs at least $100 more than the cheapest 10GbE SFP+ boxes we have seen, but it also has a better design, 10Gbase-T, and newer NICs.

Thank you for including some tests of its networking/firewall capability.

Are the drivers for the AQC113C NICs available in generic Linux or are they proprietary? (I.e., could I install, say, Ubuntu or Alpine and get the NICs working?)

How much power do those NICs draw when plugged versus unplugged?

They run out of the box in Ubuntu 24.04.

Looks nice! Does anyone make a unit of comparable quality but with the 10gig ports brought out as SFP+ while keeping a pair of RJ-45s at 2.5GbE?

Such an odd juxtaposition of relatively high production values and careful looking layout with a genuinely cursed placement of the fan module.

Out of not entirely idle curiosity: does the board freak out if it doesn't detect the fans in an expected state, as laptops or servers typically do, or would it be fine as long as there's enough air movement from somewhere to keep the heatsink at a suitable temperature?

Could you post some detailed pictures of the wiring harness for the fans? There are some really nice 40x10mm fans out there that would probably silence the N305, assuming the wiring harness isn't something weird and custom.

AQC113 10GbE ports will be supported very soon in FreeBSD/pfSense:

https://bugs.freebsd.org/bugzilla/show_bug.cgi?id=282805

Let's say I would like to run VMs on this machine. Would the PCIe Gen3 x1 link for the NVMe be problematic if I use both NVMe slots, or even just one?

I don't understand much about PCIe, but I see that most drives are usually PCIe 3 x4.

So that means an x1 link would deliver only a quarter of a drive's normal performance.

Thanks

Hi Patrick!

Something I couldn't determine from their wiki (which is great to see!): do you know from your testing whether, with only one NVMe drive connected, it can take advantage of PCIe Gen3 x1 speeds (8GT/s), or is bandwidth always limited to PCIe Gen2 x1 speeds because of the ASM118X chip that acts as a switch?

Why don't you ever mention OpenWrt when reviewing these devices?