ICC Vega R-116i Performance

For this exercise, we are using our legacy Linux-Bench scripts, which give us the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts. We are going to show off a few results and highlight a number of interesting data points in this article.

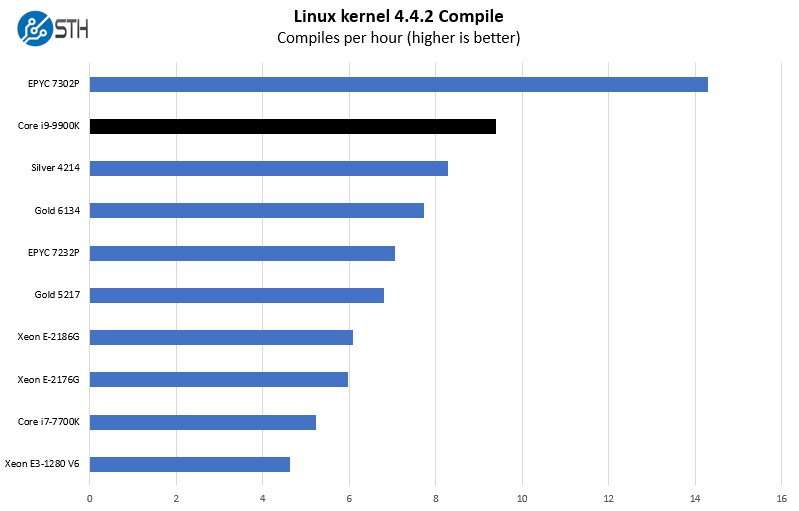

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task was simple: we have a standard configuration file and the Linux 4.4.2 kernel from kernel.org, and we build the standard auto-generated configuration using every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read:

Here you can clearly see the impact of higher clock speeds. The Intel Xeon Silver 4214 has 12 cores, yet it cannot compete with the ICC Vega R-116i’s 8 higher-clocked cores. This benchmark is dominated by multi-threaded performance, so this result from an 8-core system is simply excellent.
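Expressing a build as compiles per hour is simply 3,600 divided by the wall-clock build time in seconds. The Python sketch below shows that conversion plus a hypothetical timing harness. It is not the Linux-Bench script: the `defconfig` target stands in, as an assumption, for our standard configuration file, and one `make` job per logical CPU mirrors "utilizing every thread in the system."

```python
import os
import subprocess
import time


def compiles_per_hour(build_seconds: float) -> float:
    """Convert the wall-clock time of one kernel build into builds per hour."""
    if build_seconds <= 0:
        raise ValueError("build time must be positive")
    return 3600.0 / build_seconds


def time_kernel_build(src_dir: str) -> float:
    """Hypothetical harness: configure and build a kernel tree, one make job per thread.

    `defconfig` is an assumed stand-in for the standard configuration file.
    """
    jobs = os.cpu_count() or 1
    subprocess.run(["make", "-C", src_dir, "mrproper"], check=True)   # clean tree
    subprocess.run(["make", "-C", src_dir, "defconfig"], check=True)  # assumed config step
    start = time.perf_counter()
    subprocess.run(["make", "-C", src_dir, f"-j{jobs}"], check=True)  # timed build
    return time.perf_counter() - start
```

For example, a 120-second build works out to `compiles_per_hour(120) == 30.0`, so higher bars on the chart mean faster systems.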

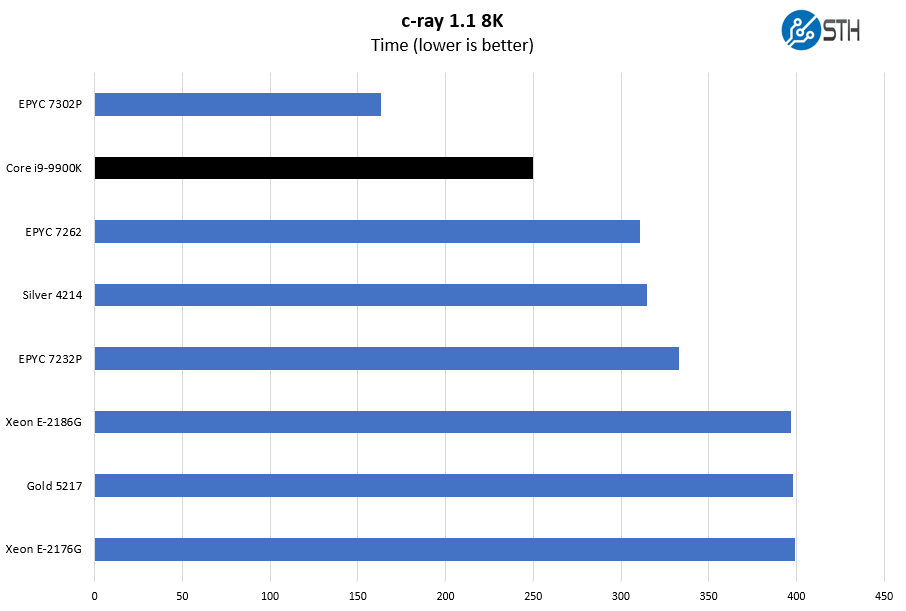

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular for showing differences between processors under multi-threaded workloads. We are going to use our 8K results, which work well at this end of the performance spectrum.

The c-ray 8K benchmark tends to perform very well on AMD architectures due to their large caches. Here, the 8-core AMD EPYC 7262 with 128MB of L3 cache is outperformed by the ICC Vega R-116i’s Core i9-9900K.

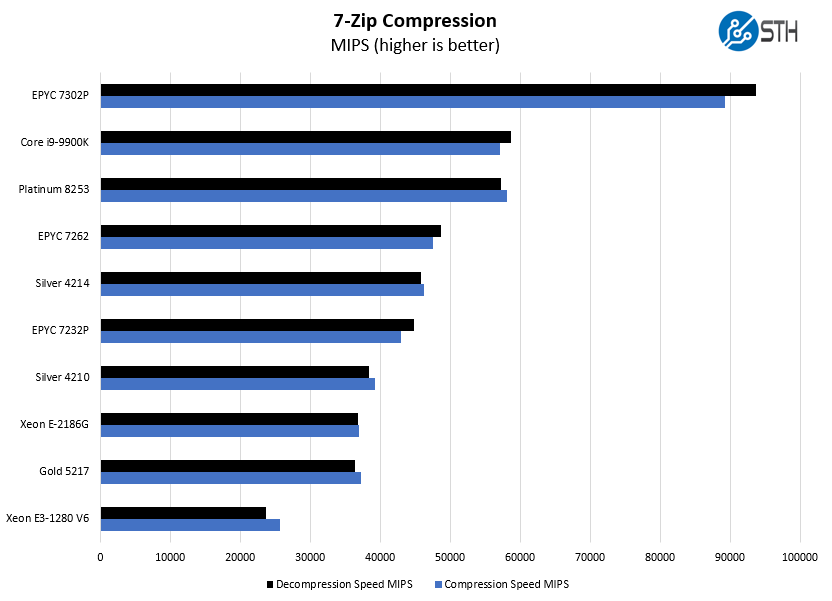

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

Perhaps the most interesting result in this benchmark is the head-to-head with an Intel Xeon Platinum 8253. Again, clock speed makes up for having only half the cores.
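A crude way to see how clock speed can offset core count is to multiply cores by sustained all-core clock. This ignores IPC, SMT, caches, memory bandwidth, and turbo behavior, so treat it strictly as a back-of-the-envelope sketch. Both clock figures below are hypothetical round numbers, not measured clocks for the Vega R-116i or the Xeon Platinum 8253.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuSketch:
    """Hypothetical CPU description for a back-of-the-envelope comparison."""

    label: str
    cores: int
    all_core_ghz: float  # assumed sustained all-core clock, not a measured value

    def core_ghz(self) -> float:
        """Aggregate cores x clock; ignores IPC, SMT, caches, and memory bandwidth."""
        return self.cores * self.all_core_ghz


def clock_needed_to_match(target: CpuSketch, cores: int) -> float:
    """All-core clock a CPU with `cores` cores would need to match `target`'s core-GHz."""
    return target.core_ghz() / cores


high_clock = CpuSketch("8 cores, high clock (hypothetical)", cores=8, all_core_ghz=5.0)
many_core = CpuSketch("16 cores, lower clock (hypothetical)", cores=16, all_core_ghz=2.5)

print(high_clock.core_ghz(), many_core.core_ghz())
print(clock_needed_to_match(high_clock, cores=16))
```

Under these assumptions, eight cores at 5.0 GHz and sixteen cores at 2.5 GHz land at the same 40 core-GHz, which is why a high-clocked 8-core part can trade blows with a 16-core one in throughput tests.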

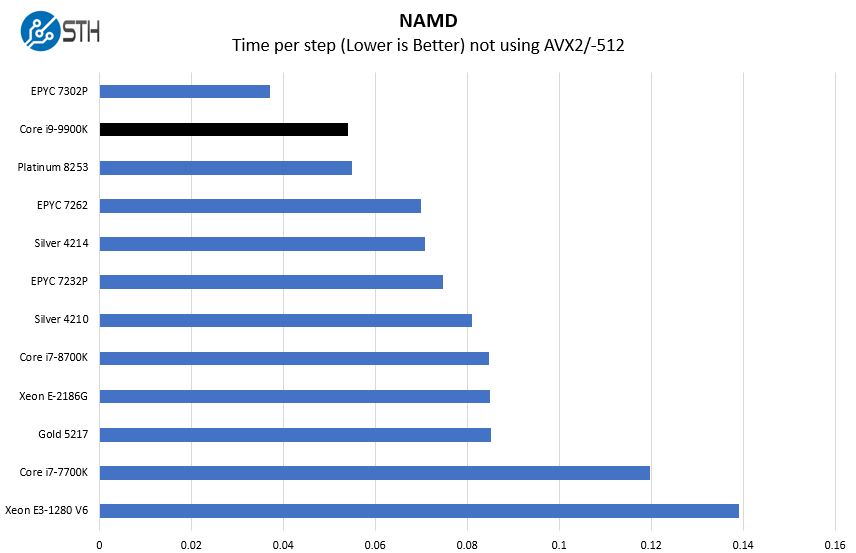

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. Separately, with GROMACS we have been working hard to support both AVX-512 and the AVX2-capable AMD Zen architecture. Here are the comparison results for the legacy data set:

We again see performance roughly on par with a 12-core AMD EPYC 7002 series processor or a moderately clocked 12- to 16-core 2nd generation Intel Xeon Scalable processor.

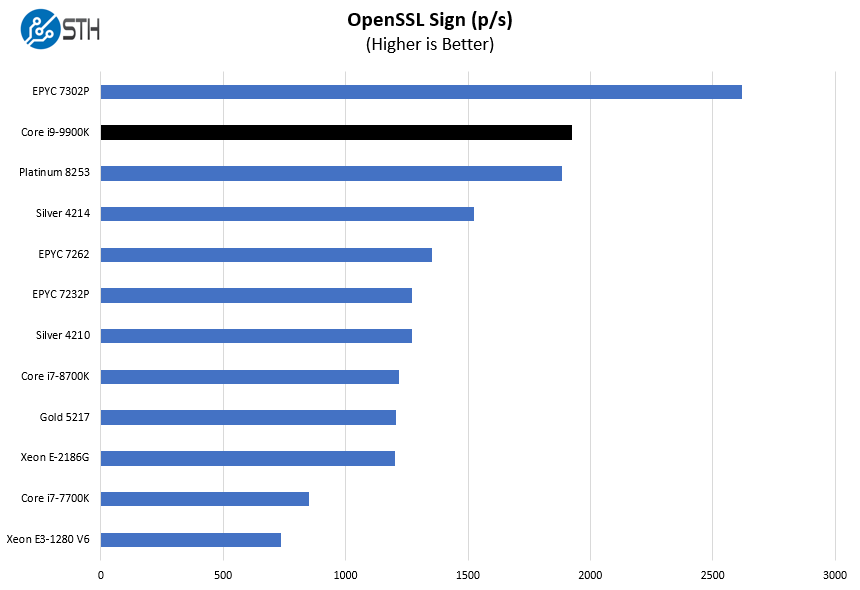

OpenSSL Performance

OpenSSL is widely used to secure communications between servers, and it is an important component in many server stacks. We first look at our sign tests:

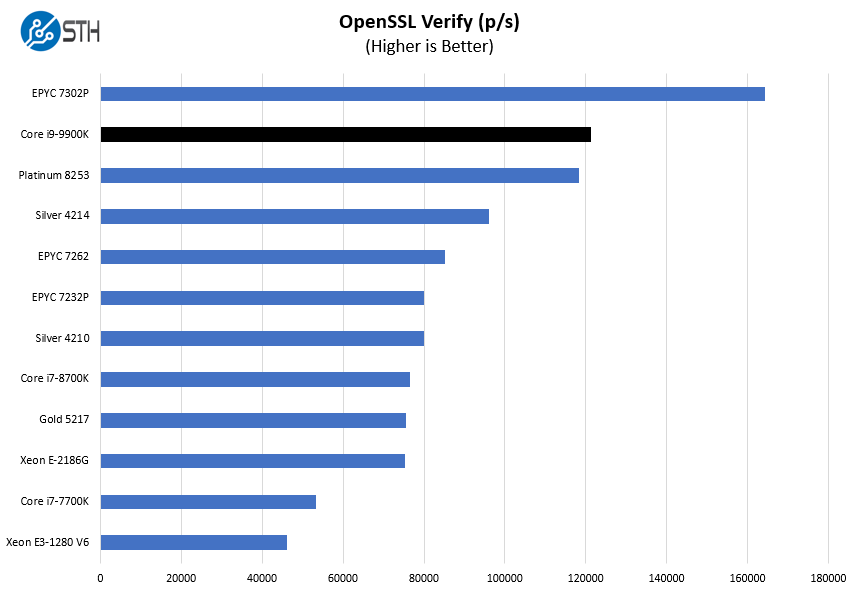

Here are the verify results:

One item we wanted to call out here is the improvement over some of the previous-generation Core i7 and Intel Xeon E parts. We only had the Intel Xeon E-2186G benchmarked in time for this comparison, not the newer E-2288G, which we will have benchmarked soon. The additional cores and clock speed of the Vega R-116i have a noticeable impact.
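`openssl speed` reports both sign and verify rates, so readers can run a similar comparison on their own hardware. The Python sketch below is our illustration, not STH's test script: it runs one `openssl speed` process per logical CPU via `-multi` and parses the summary line, assuming the long-standing `rsa <bits> bits <sign time>s <verify time>s <sign/s> <verify/s>` layout. Newer OpenSSL releases may print extra RSA columns, in which case the regex simply finds no match, so check your version's output first. The 2048-bit default is an assumption; the charts above do not state the key size.

```python
import os
import re
import subprocess

# Assumed summary-line layout: key size, sign time, verify time, sign/s, verify/s.
RSA_LINE = re.compile(
    r"^rsa\s+(\d+)\s+bits\s+([\d.]+)s\s+([\d.]+)s\s+([\d.]+)\s+([\d.]+)\s*$"
)


def parse_rsa_rates(output: str) -> dict[int, tuple[float, float]]:
    """Map RSA key size -> (sign/s, verify/s) from `openssl speed` output."""
    rates: dict[int, tuple[float, float]] = {}
    for line in output.splitlines():
        match = RSA_LINE.match(line.strip())
        if match:
            bits = int(match.group(1))
            rates[bits] = (float(match.group(4)), float(match.group(5)))
    return rates


def run_openssl_speed(bits: int = 2048) -> dict[int, tuple[float, float]]:
    """Run `openssl speed` with one process per logical CPU and parse the rates."""
    procs = os.cpu_count() or 1
    result = subprocess.run(
        ["openssl", "speed", "-multi", str(procs), f"rsa{bits}"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_rsa_rates(result.stdout)
```

Keeping the parser separate from the subprocess call makes it easy to check against saved output before trusting numbers from a live run.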

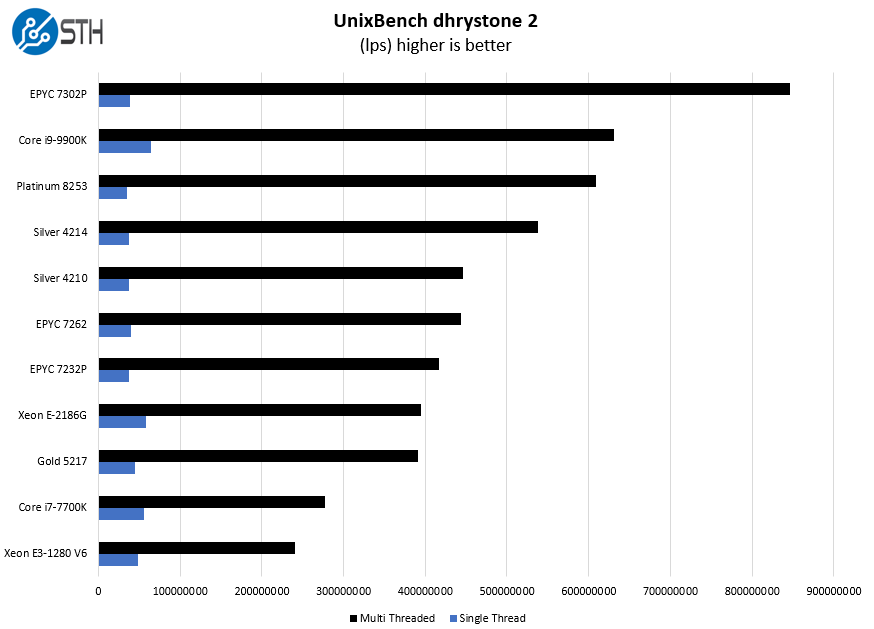

UnixBench Dhrystone 2 and Whetstone Benchmarks

Some of the longest-running tests at STH are the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone results. They are certainly aging; however, we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used, so we are including it in this data set. Here are the Dhrystone 2 results:

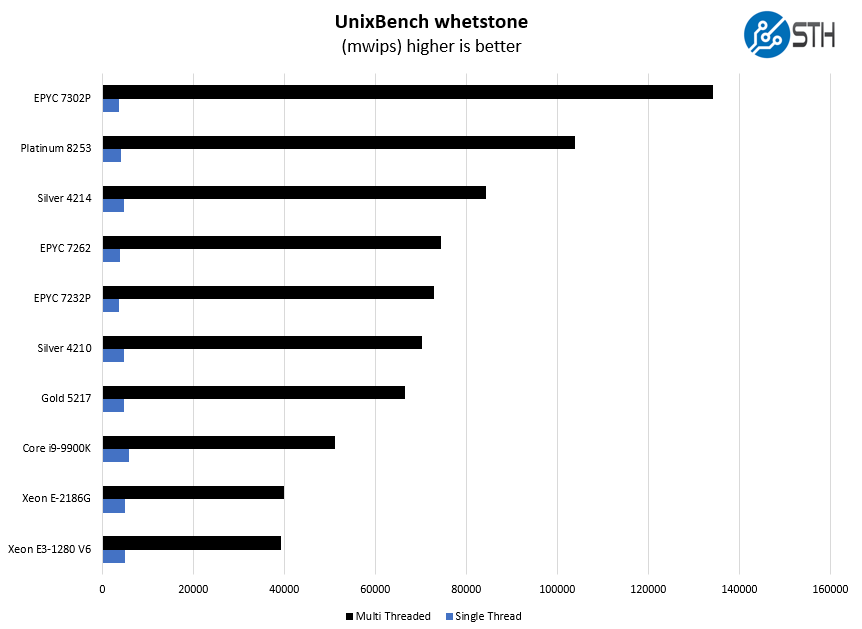

Here are the Whetstone figures:

The Dhrystone 2 figures for the ICC Vega R-116i are strong; however, the Whetstone figures fall a bit below what we see in the other tests. In either case, single-thread performance is very good.

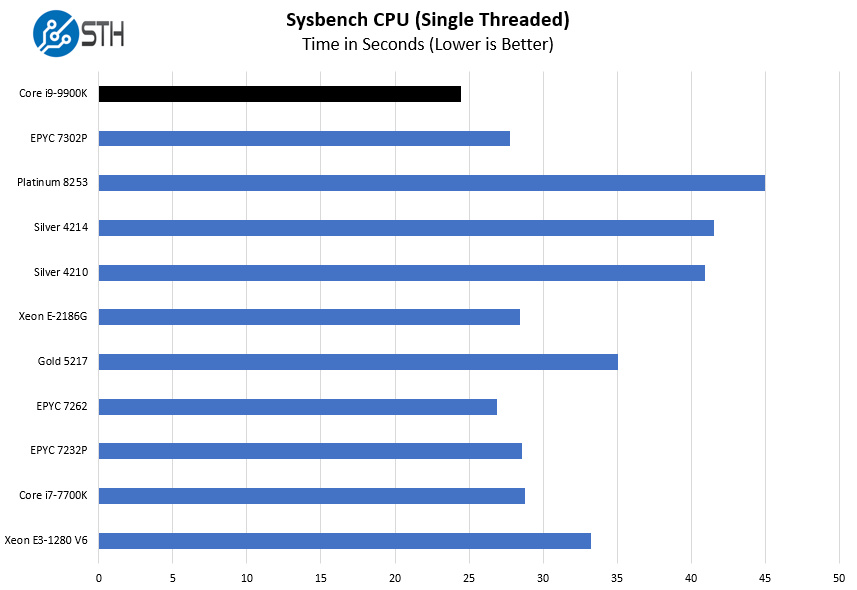

Sysbench CPU test

Sysbench is another one of those widely used Linux benchmarks. We specifically are using the CPU test, not the OLTP test that we use for some storage testing.

Here, we are showing single-threaded results alongside multi-threaded results, sorted from the fastest multi-threaded CPU to the slowest. On this chart, the Vega R-116i tops both sets of numbers.
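Sysbench's CPU test, per its documentation, verifies prime numbers by dividing each candidate by every number from 2 up to its square root, with `--cpu-max-prime` (default 10000) as the upper limit. The Python sketch below re-creates that per-event work and a simple events-per-second loop. It illustrates the technique only; it is not sysbench's own code, the exact loop bounds are our assumption, and a single Python thread will be far slower than sysbench's C implementation.

```python
import math
import time


def sysbench_style_prime_event(max_prime: int = 10000) -> int:
    """One 'event': test each number in [3, max_prime) for primality by trial division.

    Divisors run from 2 up to the integer square root, mirroring the approach
    described in sysbench's documentation; this is not sysbench's own code.
    """
    primes_found = 0
    for candidate in range(3, max_prime):
        limit = math.isqrt(candidate)
        for divisor in range(2, limit + 1):
            if candidate % divisor == 0:
                break  # composite: stop dividing
        else:
            primes_found += 1  # no divisor found up to sqrt(candidate)
    return primes_found


def events_per_second(seconds: float = 1.0, max_prime: int = 10000) -> float:
    """Repeat events for roughly `seconds` and report the single-threaded rate."""
    start = time.perf_counter()
    events = 0
    while time.perf_counter() - start < seconds:
        sysbench_style_prime_event(max_prime)
        events += 1
    return events / (time.perf_counter() - start)
```

Because each event is pure integer division with a tiny working set, this kind of test rewards clock speed and per-core throughput rather than cache size or memory bandwidth.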

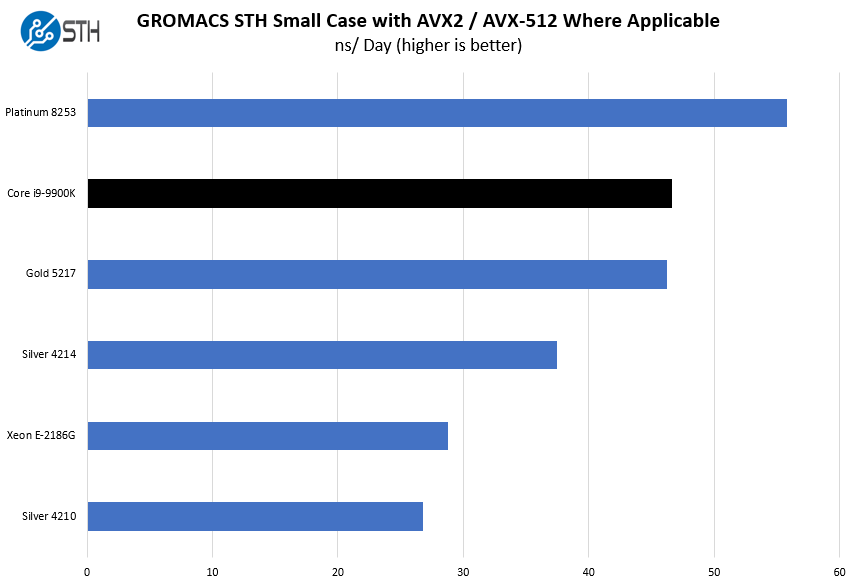

GROMACS STH Small AVX2/AVX-512 Enabled

During our initial benchmarking efforts, we found that our version of GROMACS was taking advantage of AVX-512 on Intel CPUs. We also found that it was not taking proper advantage of the AMD EPYC 7002 architecture. We have had one of the lead developers working on our dual AMD EPYC 7742 machine, and changes have been implemented. This review is coming out before the new dataset is ready. As a result, we are going to show Intel results for an AVX-512 comparison:

We are only showing Intel numbers here. As you can see, the Vega R-116i performs extremely well, handily besting the Intel Xeon Gold results in a test we might expect to favor the Xeon Scalable architecture.
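Whether a CPU can run AVX-512 code paths at all is visible in the `flags` line of `/proc/cpuinfo` on x86 Linux. The sketch below maps those flags to the widest SIMD level present. For reference, the Core i9-9900K in the Vega R-116i does not support AVX-512, so it would report AVX2 here. GROMACS itself selects its SIMD kernels at build time (the `GMX_SIMD` CMake option), so this check is only a quick sanity test of the hardware, not how GROMACS decides.

```python
def widest_simd_level(cpuinfo_text: str) -> str:
    """Return the widest x86 SIMD level listed on the first `flags` line."""
    flags: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            flags = set(value.split())
            break  # flags are identical across cores; the first line is enough
    if "avx512f" in flags:  # AVX-512 Foundation
        return "AVX-512"
    if "avx2" in flags:
        return "AVX2"
    if "avx" in flags:
        return "AVX"
    return "pre-AVX or non-x86"


def local_simd_level(path: str = "/proc/cpuinfo") -> str:
    """Read the local cpuinfo file (x86 Linux only) and classify it."""
    with open(path, encoding="utf-8") as handle:
        return widest_simd_level(handle.read())
```

On Arm systems `/proc/cpuinfo` uses a `Features` line instead, which this sketch deliberately reports as non-x86.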

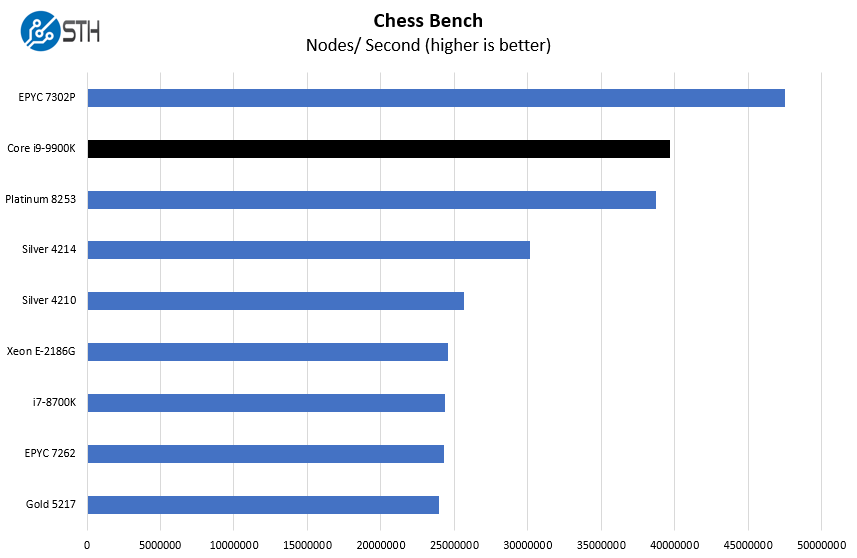

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

Here again, we see the Vega R-116i perform between the lower core count and the 12-core/16-core AMD EPYC CPUs, and still better than the Intel Xeon Platinum 8253.

We also ran Geekbench 5 on this server so you can compare it to other results out there. Hopefully, these examples highlight why the Vega R-116i’s high clock speed across eight cores is truly impressive.

Next, we are going to look at the Vega R-116i power consumption, the STH Server Spider, and our final words.

Yes, I’m sure the buy/sell orders get sent out MUCH quicker than with some loser 4 GHz processor when both are on common 1 GbE networks….

(This is a moronic concept, IMO; catering to stupid traders thinking their orders ‘get thru quicker’? LOL!)

It’s for environments where latency matters, and the winner (i.e. the fastest one) takes all. And as you say, if 1 GbE is “common” then there are certainly other factors that could be differentiators. That 25% clock advantage could shave 20% off decision time, or let you run that much more involved/complex algorithms in the same timeframe as your competitors.
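The arithmetic behind the 25%/20% figures, assuming the work is compute-bound and scales linearly with clock speed (a simplification that ignores memory and network latency):

$$
t_{\text{new}} = \frac{t_{\text{old}}}{1.25} = 0.8\,t_{\text{old}}
$$

So a 25% higher clock cuts decision time by 20%, or equivalently fits 25% more work into the same time window.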

Calling people whose reality you don’t understand stupid reveals quite a bit about yourself.

Does the CPU water cooler blow hot air over the motherboard towards the back of the case?

Mark, this is a very healthy industry that pushes computing. There are firms happy to spend on exotic solutions because they can pay for themselves in a day.

@Marl Dotson – for use in a latency-sensitive situation you would install a fast InfiniBand card in one of the PCIe expansion slots. You certainly would not use the built-in Ethernet.

That cooler looks to be very similar to Dynatron’s, probably an Asetek unit.

https://www.dynatron.co/product-page/l8

@David, I’m not familiar with the specific properties of the rad used here, however the temp of the liquid in the closed loop system should not exceed +4-8°C Delta T.

@Dan. Wow. Okay. At least one 10Gb card would be the basic standard here: 1Gb LAN is a bottleneck as @hoohoo pointed out. Kettle or pot?

Follow up: is this an exciting product? 5 GHz compute on 8 cores is great, but there are several other bottlenecks in the hardware in addition to the NIC.

Hi,

I would like to make a recommendation concerning the CPU charts for the future.

It would be helpful if you could add the core and thread counts next to each CPU name, for example EPYC 7302P (C16/T32). It would make the differences easier to see, since it’s a little hard to keep track of each model’s specs in our heads.

Thank you

A couple of comments here assume that the onboard 1GbE is the main networking. You’ve missed the Solarflare NIC. As shown, it’s a dual SFP NIC in a low-profile PCIe x8 format. That’s at least a 10GbE card according to their current portfolio. It may even be a 10/25GbE card: https://solarflare.com/wp-content/uploads/2019/09/SF-119854-CD-9-XtremeScale-X2522-Product-Brief.pdf

For those commenting on the network interface – this is the entire point of the Solarflare network adapter. This card will leverage RDMA to ensure lowest latency across the fabric. The Intel adapters will either go unused or be there for boot/imaging purposes due to the native guest driver support, which negates the need to inject Solarflare drivers into the image.

It is unlikely you would see anything that doesn’t support RDMA (be it RoCE or some ‘custom’ implementation) used by trading systems. You need the low latency provided by such solutions otherwise all the improvements locally via high clock CPU/RAM etc are lost across the fabric.

For some perspective here, I think HedRat, Alex, and others are spot on. You would expect a specialized NIC to be in a system like this and not an Intel X710 or similar.

The dual 1GbE I actually like a lot. One for an OS NIC to get all of the non-essential services off the primary application adapter. One for a potential provisioning network. That is just an example but I think this has a NIC configuration I would expect.

There is a specialized NIC and even room for another card, be it accelerator or a different, custom NIC. If you have to hook up your system to a broker or an exchange you’ll have to use standard protocols. The onboard Ethernet may still be useful for management or Internet access.

Does the storage support NVMe SSDs?