ICC Vega R-116i Topology

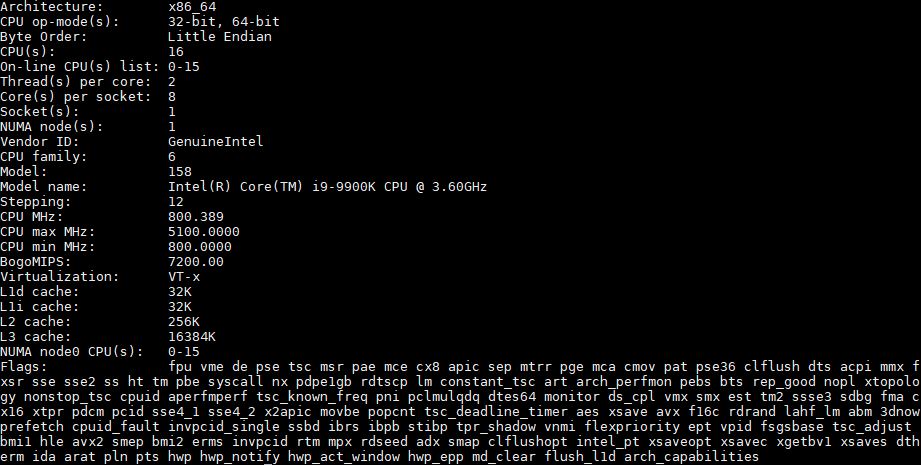

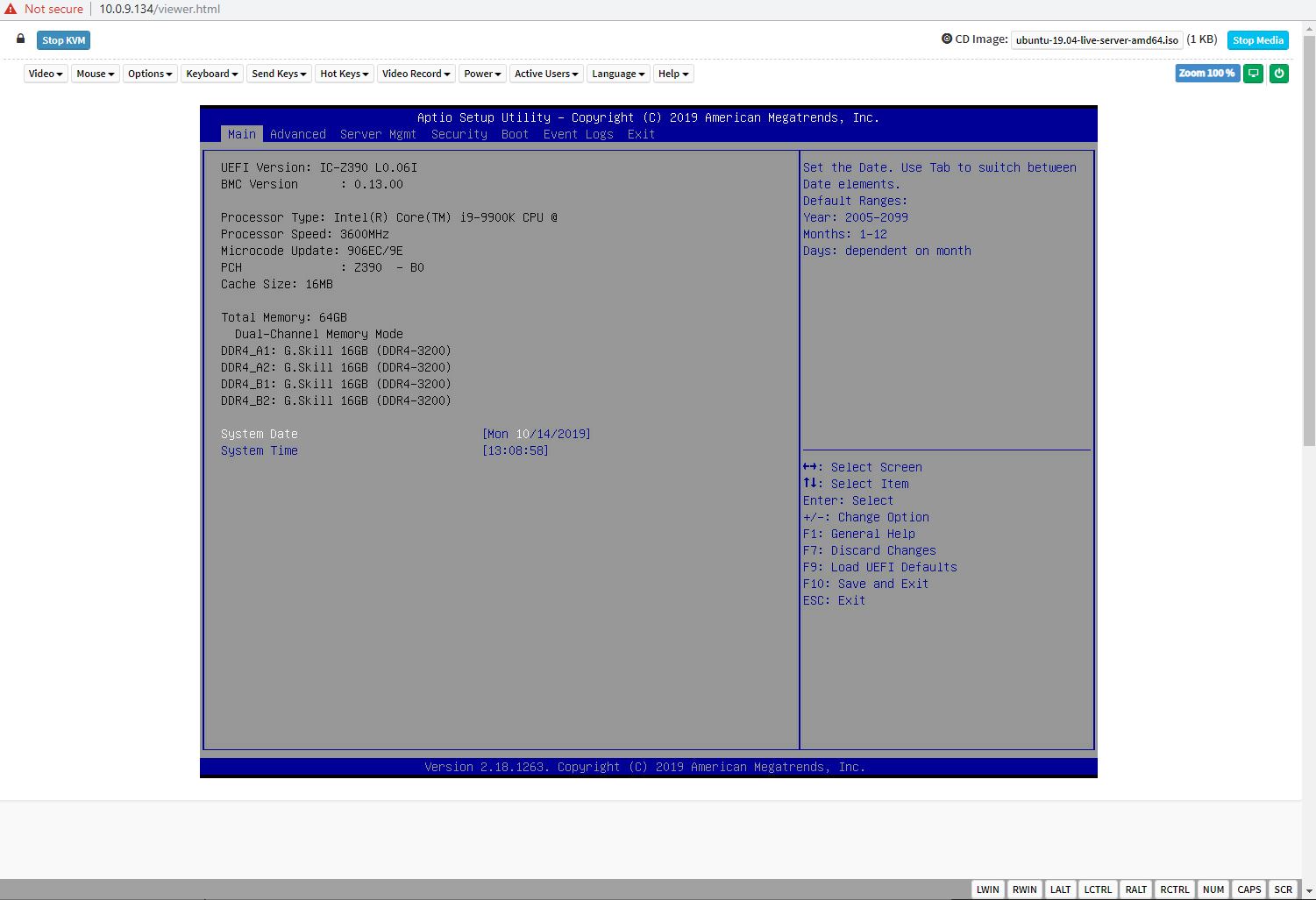

At the heart of the ICC Vega R-116i is an Intel Core i9-9900K processor. This is an 8-core solution. Even in the lscpu output, one can see a 5.1GHz maximum clock speed, although the CPU is rated for only a 5.0GHz maximum. The water cooling allows for higher boost clocks across more cores.
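For readers who want to check boost headroom on a similar system, here is a minimal Python sketch that pulls the `CPU max MHz` field out of lscpu-style text and compares it to the rated figure. The sample text is illustrative and was not captured from our test system, and the helper name `max_mhz_from_lscpu` is our own.

```python
import re
from typing import Optional

# Illustrative excerpt only; not the actual output from the review system.
SAMPLE_LSCPU = """\
Model name:          Intel(R) Core(TM) i9-9900K CPU @ 3.60GHz
CPU(s):              16
CPU max MHz:         5100.0000
CPU min MHz:         800.0000
"""

# Rated maximum clock for the Core i9-9900K, as quoted in the text above.
RATED_MAX_MHZ = 5000.0


def max_mhz_from_lscpu(text: str) -> Optional[float]:
    """Return the 'CPU max MHz' value from lscpu-style text, or None if absent."""
    match = re.search(r"^CPU max MHz:\s*([\d.]+)", text, flags=re.MULTILINE)
    return float(match.group(1)) if match else None


reported = max_mhz_from_lscpu(SAMPLE_LSCPU)
if reported is not None:
    headroom = reported - RATED_MAX_MHZ
    print(f"Reported max: {reported:.0f} MHz ({headroom:+.0f} MHz vs. rated)")
```

On a live Linux system, one could pass in the text from `subprocess.run(["lscpu"], capture_output=True, text=True).stdout` instead of the sample string.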

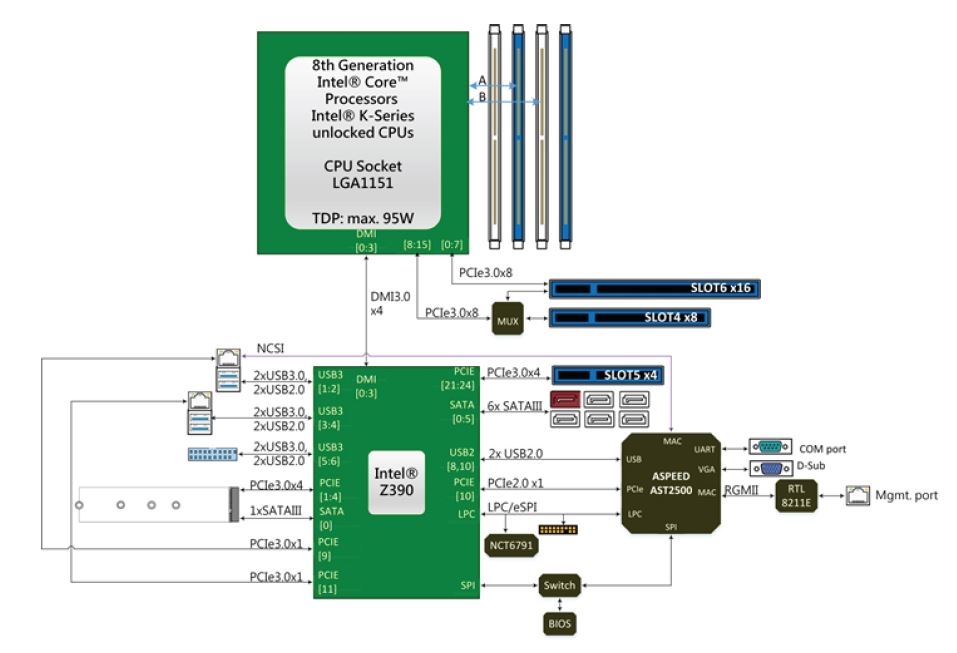

Here is the block diagram of the motherboard. One can see the dual-channel memory, PCIe slots, and even the six SATA III 6.0Gbps ports. An M.2 slot and the Aspeed AST2500 are wired into the PCH as well.

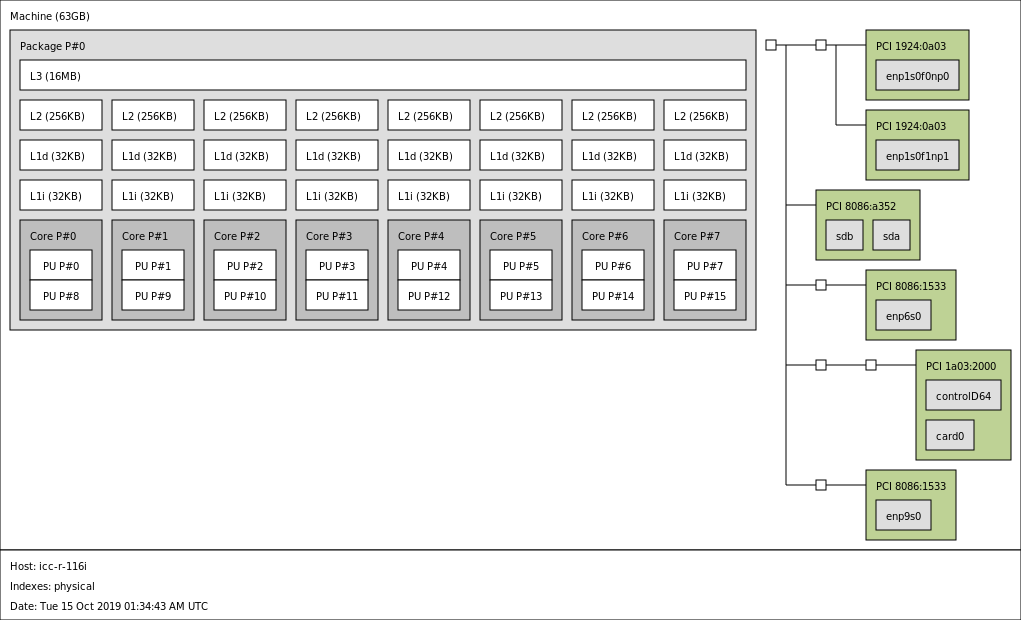

Here is what our test system looked like from a topology standpoint:

Overall, this is a very simple and common system topology in its class. There is nothing exotic to see here, which is what we want in this type of server. A single NUMA node also avoids the cross-socket memory access latency that dual-socket solutions can incur.
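As a quick way to confirm the single NUMA node on a Linux host, one can count the `nodeN` directories that the kernel exposes under sysfs. This is a rough sketch; the function name `count_numa_nodes` is our own, and it assumes the standard `/sys/devices/system/node` layout.

```python
import re
from pathlib import Path


def count_numa_nodes(node_root: str = "/sys/devices/system/node") -> int:
    """Count NUMA nodes by looking for node0, node1, ... directories."""
    root = Path(node_root)
    if not root.is_dir():
        # Not Linux, or sysfs is not mounted where we assumed.
        return 0
    return sum(
        1 for entry in root.iterdir() if re.fullmatch(r"node\d+", entry.name)
    )


if __name__ == "__main__":
    print(f"NUMA nodes detected: {count_numa_nodes()}")
```

A single-socket system like this one would be expected to report one node, while a typical dual-socket server would report two or more.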

Next, we are going to look at the management and BIOS for the system.

ICC Vega R-116i Management and BIOS

Turning on the ICC Vega R-116i, one can see that the little details are done right. The International Computer Concepts logo is displayed on the splash screen. One can also see the BMC’s IP address printed on the splash screen. This is a feature that not many servers had years ago, but one that major OEMs like Dell and HPE have since implemented. It is great to see this on a custom motherboard solution like the one ICC is using.

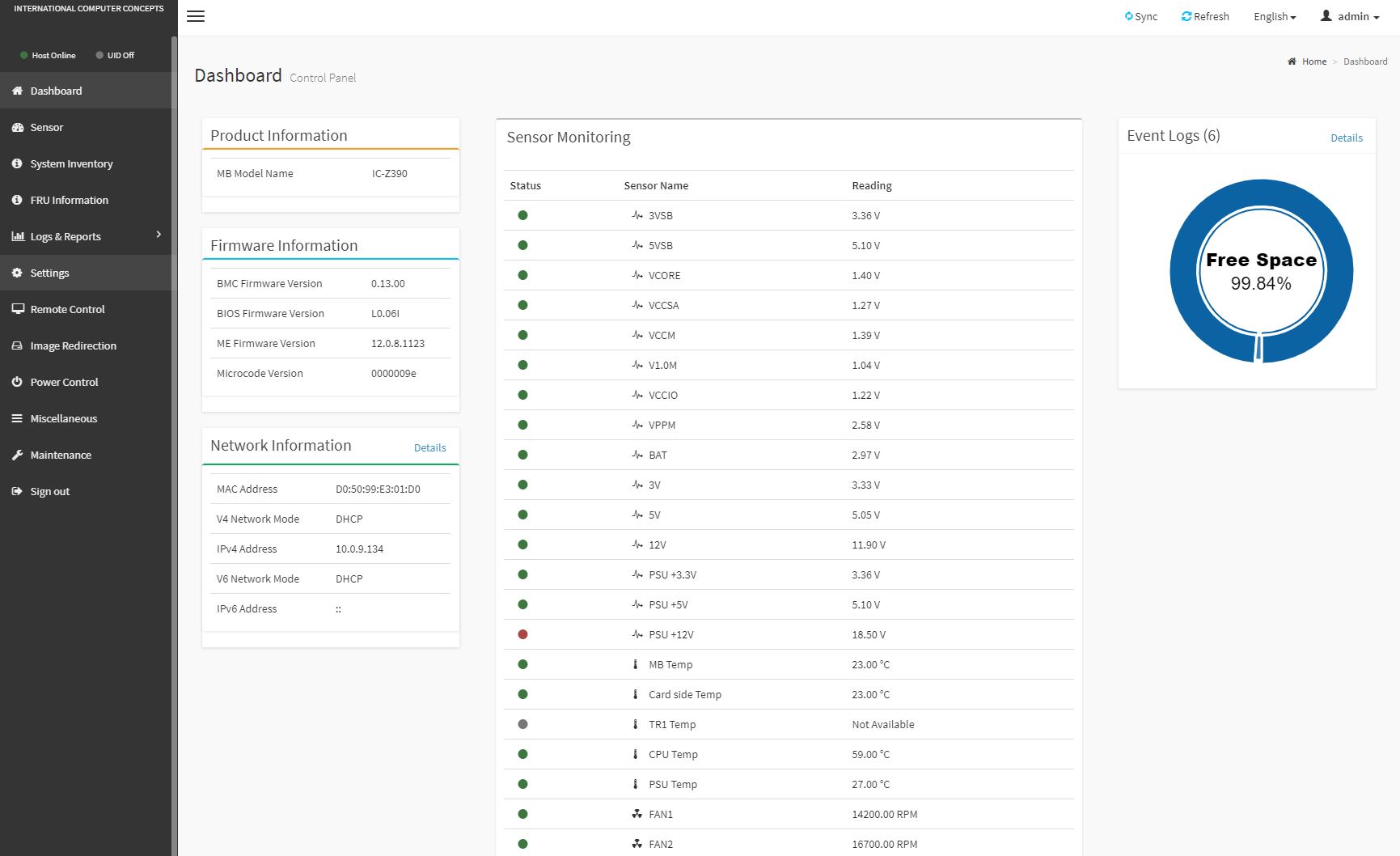

The IPMI management web interface looks like an AMI MegaRAC SP-X solution with an ICC skin. That means one gets a responsive solution that works well on desktops and notebooks as well as mobile devices.

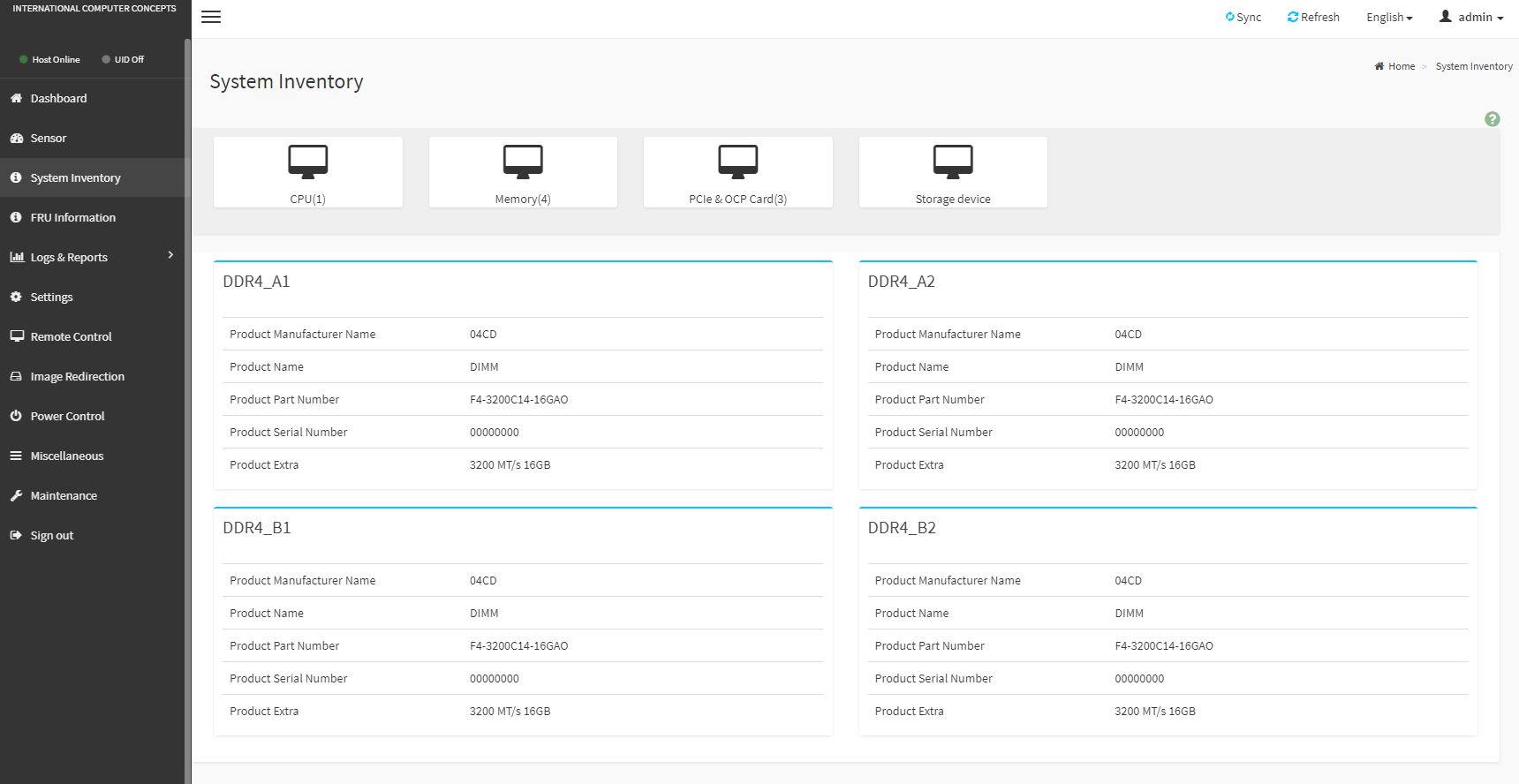

The solution offers a number of sensors as well as features like component inventories. We are looking at the web version, but one can also use standard management tools to get to this data.
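As an example of the standard-tools route, `ipmitool sensor` prints one pipe-separated row per sensor, which is easy to parse. The sketch below is illustrative only: the sensor names and values in the sample are made up rather than taken from this system, and `parse_sensors` is our own helper name.

```python
from typing import Dict, Optional, Tuple

# Made-up sample in the pipe-separated layout that `ipmitool sensor` prints.
SAMPLE_SENSORS = """\
CPU Temp         | 45.000     | degrees C  | ok    | na | na | na | 95.000 | 100.000 | na
FAN1             | 5400.000   | RPM        | ok    | na | 300.000 | na | na | na | na
VBAT             | na         | Volts      | na    | na | na | na | na | na | na
"""

Reading = Tuple[Optional[float], str, str]


def parse_sensors(text: str) -> Dict[str, Reading]:
    """Map sensor name -> (reading or None, unit, status)."""
    readings: Dict[str, Reading] = {}
    for line in text.splitlines():
        fields = [field.strip() for field in line.split("|")]
        if len(fields) < 4:
            continue  # skip blank or malformed rows
        name, value, unit, status = fields[:4]
        try:
            reading: Optional[float] = float(value)
        except ValueError:
            reading = None  # "na" or a discrete (non-numeric) sensor
        readings[name] = (reading, unit, status)
    return readings


for name, (reading, unit, status) in parse_sensors(SAMPLE_SENSORS).items():
    print(f"{name}: {reading} {unit} [{status}]")
```

Only the first four columns (name, reading, unit, status) are used here; the remaining columns carry the sensor thresholds.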

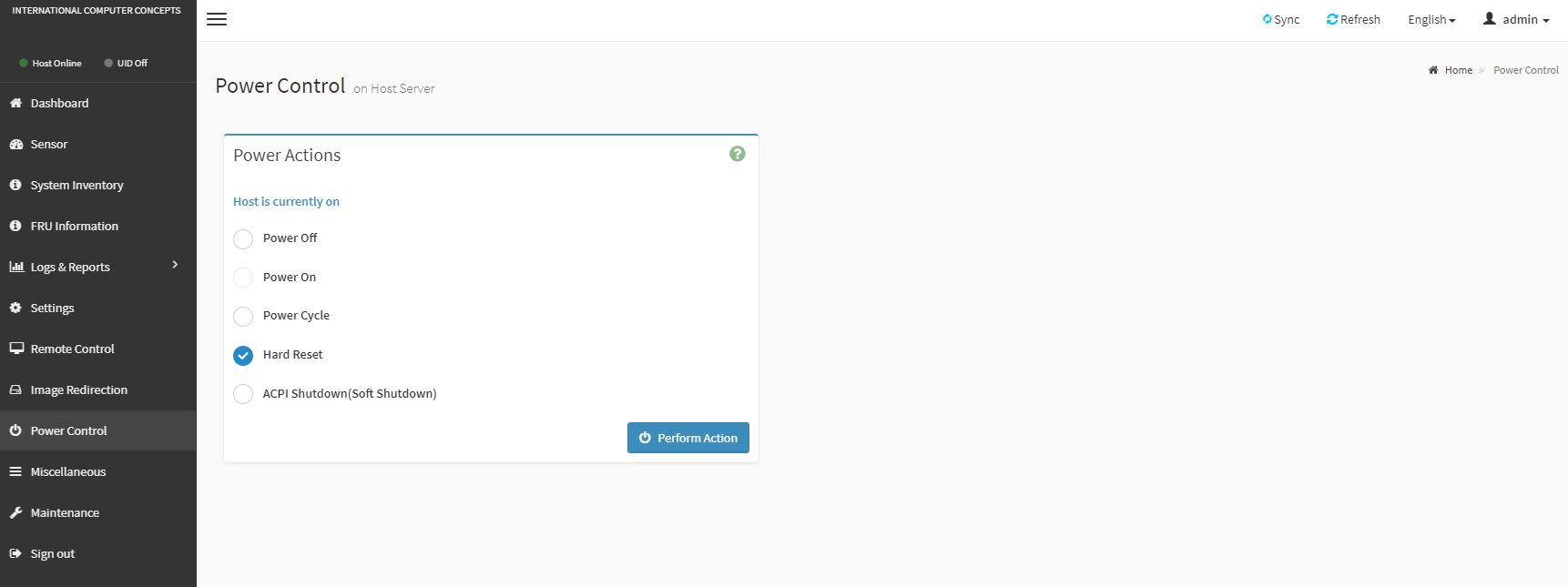

There are also standard IPMI features like power control so one can remotely reboot a system if needed.
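The same power control is available from the command line over IPMI. Here is a hedged sketch that only builds an `ipmitool` command line for a remote power action; it does not run anything. The host address and user name are placeholders, `chassis_power_command` is our own helper, and the `-E` flag tells ipmitool to read the password from the `IPMI_PASSWORD` environment variable so that it stays off the command line.

```python
from typing import List

# Subset of the actions accepted by `ipmitool chassis power`.
VALID_ACTIONS = {"status", "on", "off", "cycle", "reset", "soft"}


def chassis_power_command(host: str, user: str, action: str) -> List[str]:
    """Build (but do not run) an ipmitool command for a remote power action."""
    if action not in VALID_ACTIONS:
        raise ValueError(f"unsupported power action: {action!r}")
    return [
        "ipmitool",
        "-I", "lanplus",  # IPMI v2.0 over LAN
        "-H", host,       # BMC address, e.g. the one shown on the splash screen
        "-U", user,
        "-E",             # take the password from the IPMI_PASSWORD env variable
        "chassis", "power", action,
    ]


# Placeholder address (documentation range) and user name.
print(" ".join(chassis_power_command("192.0.2.10", "admin", "cycle")))
```

To actually issue the command, one could hand the returned list to `subprocess.run(...)` after checking it against the BMC in question.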

ICC also includes iKVM functionality for a remote keyboard/video/mouse. There is a remote media feature as well. Companies like Dell EMC and HPE charge handsomely for the iKVM feature because it is so valuable to their customers for reducing remote hands work in the data center.

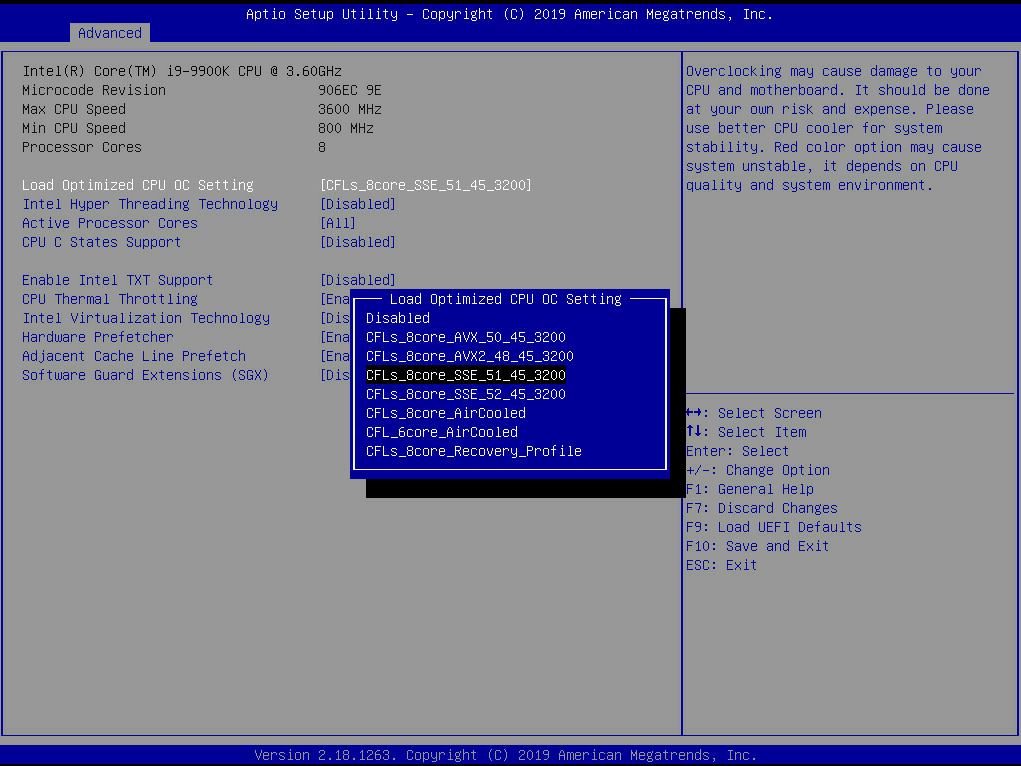

As a quick note here, the solution also has a number of pre-configured overclocking profiles. ICC has custom profiles depending on the different workloads you may want to run on the Vega R-116i.

These settings can be accessed remotely via the BMC, which is useful if one is testing different algorithms before putting them online.

Next, we are going to look at the Vega R-116i performance.

Yes, I’m sure the buy/sell orders get sent out MUCH quicker than with some loser 4 GHz processor when both are on common 1 GbE networks….

(This is a moronic concept, IMO; catering to stupid traders thinking their orders ‘get thru quicker’? LOL!)

It’s for environments where latency matters, and the winner (e.g. the fastest one) takes all. And as you say if 1 GbE is “common” then there are certainly other factors that could be differentiators. That 25% in clock advantage could be to shave 20% off decision time or run that much more involved/complex algorithms in the same timeframe as your competitors.

Calling people whose reality you don’t understand stupid reveals quite a bit about yourself.

Does the CPU water cooler blow hot air over the motherboard towards the back of the case?

Mark, this is a very healthy industry that pushes computing. There are firms happy to spend on exotic solutions because they can pay for themselves in a day.

@Marl Dotson – for use in a latency-sensitive situation you would install a fast InfiniBand card in one of the PCIe expansion slots. You certainly would not use the built-in Ethernet.

That cooler looks to be very similar to Dynatron’s, probably an Asetek unit.

https://www.dynatron.co/product-page/l8

@David, I’m not familiar with the specific properties of the rad used here, however the temp of the liquid in the closed loop system should not exceed +4-8°C Delta T.

@Dan. Wow. Okay. At least one 10Gb card would be the basic standard here: 1Gb LAN is a bottleneck as @hoohoo pointed out. Kettle or pot?

Follow up: is this an exciting product? 5GHz compute on 8 cores is great, but there are several other bottlenecks in the hardware, in addition to the NIC.

Hi,

I would like to make a recommendation concerning the CPU charts for the future.

It would be helpful if you could add the core and thread count next to the name of the CPU. For example, EPYC 7302P (C16/T32). It would be easier for us to see the difference, since it’s a little hard to keep track of each model’s specs in our heads.

Thank you

A couple of comments here assuming that onboard 1GbE is the main networking here. You’ve missed the Solarflare NIC. As shown it’s a dual SFP NIC in a low-profile PCIe x8 format. That’s at least a 10GbE card according to their current portfolio. It may even be a 10/25GbE card; https://solarflare.com/wp-content/uploads/2019/09/SF-119854-CD-9-XtremeScale-X2522-Product-Brief.pdf

For those commenting on the network interface – this is the entire point of the SolarFlare network adapter. This card will leverage RDMA to ensure lowest latency across the fabric. The Intel adapters will either go unused or be there for boot/imaging purposes due to the native guest driver support which negates the need to inject SolarFlare drivers in the image.

It is unlikely you would see anything that doesn’t support RDMA (be it RoCE or some ‘custom’ implementation) used by trading systems. You need the low latency provided by such solutions otherwise all the improvements locally via high clock CPU/RAM etc are lost across the fabric.

For some perspective here, I think HedRat, Alex, and others are spot on. You would expect a specialized NIC to be in a system like this and not an Intel X710 or similar.

The dual 1GbE I actually like a lot. One for an OS NIC to get all of the non-essential services off the primary application adapter. One for a potential provisioning network. That is just an example but I think this has a NIC configuration I would expect.

There is a specialized NIC and even room for another card, be it accelerator or a different, custom NIC. If you have to hook up your system to a broker or an exchange you’ll have to use standard protocols. The onboard Ethernet may still be useful for management or Internet access.

Does the storage support NVMe SSDs?