HPE ProLiant MicroServer Gen10 Storage

Storage on the HPE ProLiant MicroServer Gen10 is SATA III 6.0Gbps. In this class of server, where low power operation and low cost are key, this makes sense. Furthermore, it is highly unlikely anyone would use the dual-port capabilities of SAS devices in a MicroServer Gen10.

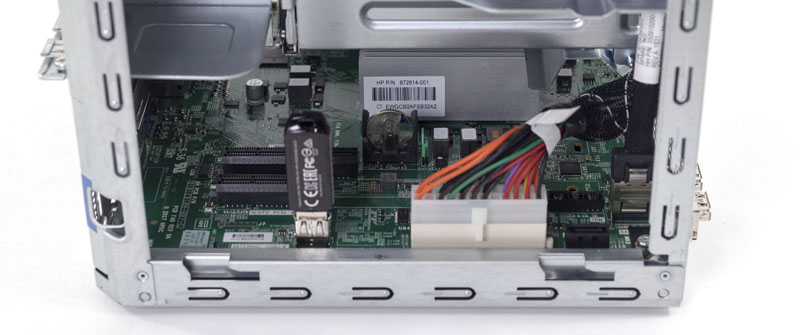

Storage is provided using two headers. The first is a standard 7-pin SATA header. The second is an SFF-8087 header which provides four ports. SFF-8087 is commonly used for SAS, but here it is a SATA-only affair.

The SATA controllers come from AMD and Marvell. The 7-pin SATA connector uses the AMD SATA controller data path. The SFF-8087 header utilizes the Marvell 88SE9230 controller.
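To put those two data paths in perspective, here is a minimal Python sketch of the link arithmetic. It is an illustration, not a measurement from this review. SATA III and PCIe 2.0 both use 8b/10b encoding; the PCIe 2.0 x2 uplink for the Marvell controller is an assumption here, and `payload_mb_per_s` is a helper name made up for this example.

```python
def payload_mb_per_s(line_rate_gbps, lanes=1):
    """Usable MB/s for an 8b/10b-encoded serial link.

    8b/10b puts 10 bits on the wire for every 8 bits of data,
    so only 80% of the raw line rate carries payload.
    """
    data_bits_per_s = line_rate_gbps * 1e9 * lanes * 8 / 10
    return data_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes


sata_port = payload_mb_per_s(6.0)              # one SATA III port
sata_total = 4 * sata_port                     # four ports on the SFF-8087 header
pcie_uplink = payload_mb_per_s(5.0, lanes=2)   # ASSUMED PCIe 2.0 x2 uplink

print(f"per SATA port:      {sata_port:.0f} MB/s")
print(f"four SATA ports:    {sata_total:.0f} MB/s")
print(f"assumed uplink:     {pcie_uplink:.0f} MB/s")
print(f"per drive, 4 busy:  {pcie_uplink / 4:.0f} MB/s")
```

Under that assumption, the uplink rather than the SATA ports would be the ceiling when all four bays are busy at once. That is unlikely to matter much for spinning disks, but it could for four SATA SSDs.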

Advanced Micro Devices, Inc. [AMD] FCH SATA Controller

Marvell Technology Group Ltd. 88SE9230 PCIe SATA 6Gb/s Controller

This Marvell 88SE9230 will support RAID 1, as an example, but it does not have a power loss protected write cache so we would not want to run RAID 5 on it.
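One way to check which drives sit behind which of the two controllers on Linux is to follow each disk's sysfs link back to its PCI device. The following is a minimal sketch, not something taken from the review's tooling; the function names are hypothetical, and it assumes the usual Linux layout where `/sys/block/sdX` resolves to a path containing the PCI addresses of the parent devices.

```python
import os
import re

# Matches PCI addresses such as 0000:01:00.0 inside a sysfs device path.
PCI_ADDR = re.compile(r"[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]")


def controller_for(sysfs_path):
    """Return the PCI address of the controller a block device hangs off.

    The last PCI address in the resolved sysfs path is the PCI device
    closest to the disk, which for a SATA drive is its SATA controller.
    Returns None for virtual devices with no PCI parent.
    """
    matches = PCI_ADDR.findall(sysfs_path)
    return matches[-1] if matches else None


def disks_by_controller(sys_block="/sys/block"):
    """Group sd* block devices by the PCI address of their controller."""
    groups = {}
    for name in sorted(os.listdir(sys_block)):
        if not name.startswith("sd"):
            continue
        resolved = os.path.realpath(os.path.join(sys_block, name))
        groups.setdefault(controller_for(resolved), []).append(name)
    return groups
```

Calling `disks_by_controller()` on the server and comparing the returned addresses with the `lspci` listing should show which drives are on the AMD controller and which are on the Marvell controller.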

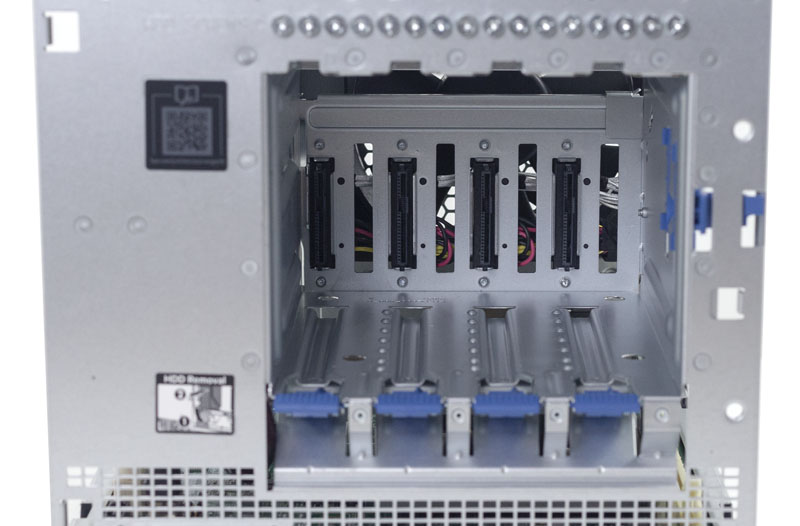

The actual drive bays themselves are unique as well. One removes the front bezel and there are four 3.5″ bays.

Drives slide into place along guide rails and have a latch that secures the drives in place.

These 3.5″ bays utilize guide pegs that screw into hard drives. HPE has a smart system whereby the guide pegs are screwed into the chassis above the hard drive bays. For edge servers, that is a great idea.

Total physical installation time for a drive is generally around a minute. You will want a T15 bit to install the guide pegs. We would have preferred a Phillips head design. In the absence of that, it would have been nice to see “T15” printed next to the “HDD Screws” label.

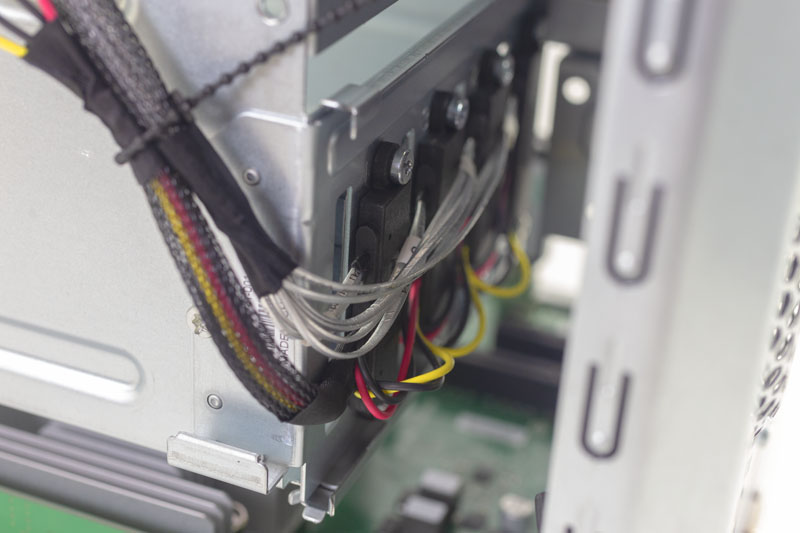

We should note here that the HPE ProLiant MicroServer Gen10 is not a hot-swap system. At the rear of the 3.5″ bays, one can see the drive connectors.

These connectors are the ends of cables with power daisy chained across. Each connector is screwed into the chassis. This must be a marginally less expensive solution than utilizing a standard PCB to deliver power and data connectivity to drives. A PCB backplane still needs power and data connectivity, along with testing to ensure that the power and data pins are the correct lengths for hot swap. Still, hot swap is a feature we would have liked to have seen in the HPE ProLiant MicroServer Gen10.

Test Configuration

Our HPE ProLiant MicroServer Gen10 featured the AMD Opteron X3421 APU. It is the higher-end part in this APU series and in the MicroServer Gen10 lineup, and we are not aware of other easy-to-order systems with these processors.

- System: HPE ProLiant MicroServer Gen10

- APU: AMD Opteron X3421

- RAM: 2x 8GB DDR4-2400

- Networking PCIe: Intel X520-DA2 dual SFP+ 10GbE

- OS SSD: Intel DC S3700 400GB

- Storage HDDs: 4x Western Digital Red 10TB

- OS: Ubuntu 16.04 LTS (HWE Kernel), Ubuntu 18.04 LTS, CentOS 7.5-1804, Proxmox VE 5.3
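With four 10TB drives in the bays, usable capacity depends heavily on the redundancy scheme chosen. The sketch below is a rough illustration using the textbook capacity formulas, assuming equal-sized drives and ignoring filesystem and metadata overhead. The `usable_tb` helper is hypothetical, and the layouts listed are not configurations that were benchmarked here.

```python
def usable_tb(level, drives, size_tb):
    """Usable capacity in TB for equal-sized drives (no filesystem overhead)."""
    if level == "raid0":
        return drives * size_tb              # striping, no redundancy
    if level == "raid1":
        return size_tb                       # every drive holds a full copy
    if level == "raid10":
        if drives < 4 or drives % 2:
            raise ValueError("raid10 needs an even number of drives, at least 4")
        return drives // 2 * size_tb         # striped mirror pairs
    if level == "raid5":
        if drives < 3:
            raise ValueError("raid5 needs at least 3 drives")
        return (drives - 1) * size_tb        # one drive's worth of parity
    if level == "raid6":
        if drives < 4:
            raise ValueError("raid6 needs at least 4 drives")
        return (drives - 2) * size_tb        # two drives' worth of parity
    raise ValueError(f"unknown level: {level}")


for level in ("raid0", "raid10", "raid5", "raid6"):
    print(f"{level:>6}: {usable_tb(level, 4, 10)} TB usable from 4x 10TB")
```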

Unlike previous MicroServer generations, this is not a socketed part. Instead, you need to order the HPE ProLiant MicroServer Gen10 with the APU you want to use.

We added a 2.5″ SSD where the optical drive would go. This required adding a power cable and a SATA cable. We wish that HPE would pre-wire this. Luckily, we have a lab full of parts so this was easy to do.

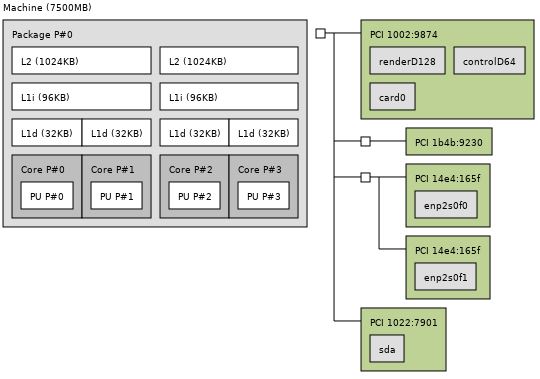

HPE ProLiant MicroServer Gen10 Topology

As part of our review, we show the topology of servers. This is more pertinent on the larger server side. Here is what our system came configured with:

As one can see, this is a fairly simple SoC design. AMD is blazing ahead with multi-chip packages in higher-end parts above the Opteron X3000 and AMD EPYC 3251 segment. Here, things are relatively simple.

Next, we wanted to talk management and BIOS for the HPE ProLiant MicroServer Gen10.

That conclusion is really insightful. If you want multiple low cost servers with hard drives for clustered storage, this is a really good platform. If you just want more power, I keep buying up into the ML110 G10 because that’s so much more powerful. It’s also larger so sometimes you can’t afford the space.

Finally, an honest review that actually shows cons as well as what’s good.

We use MSG10s at our branch offices. They’re great, but servicing them sucks. We’ve been training finance people to do the physical service on the MSG10s.

One issue you didn’t bring up is that there’s a lock for the front to secure all the drives. We had one where it wasn’t locked, an employee flipped the lock and ended up breaking the bezel.

I hope you guys do more ProLiant reviews. They need a site with technical depth and business sense reviewing their products not just getting lame 5 paragraph “it’s great” reviews.

To anyone not using the MSG10, they do have downsides, but they’re cheap so you have to expect that it isn’t a Rolls Royce at a KIA price tag

It’s too bad this doesn’t have dual x8 PCIe. It also needs 10G. This is 2019. To get 10G or 25G you’ve gotta burn the x8 which leaves you with only a x1 slot left. Can’t do much with that.

What about the virtualization capabilities? In your article on the APU, your lscpu output reported AMD-V. It would be nice to know if it’s fully implemented in BIOS and if there are reasonable IOMMU groups. Also, Intel consumer/entry level processors lack Access Control Services support on the processors PCIe root ports, so would be nice to see what this AMD APU offers.

Why do I care? Because it would be nice to have a dedicated VM running less trusted graphical applications for digital signage. In a home office use case, one could use virtualization to combine a Linux NAS and a Kodi HTPC on the same hardware. Exposing the Kodi VM instead of the server OS to your family might have advantages.

Like the other small HPE ProLiant MicroServers in the past, an attractive looking little box. I wish it were possible to install an externally accessible SFF x8 mobile rack such as a Supermicro CSE-M28SAB and the motherboard had x2 SFF 8087 ports or better M28SACB and 8643, thus could have x2 RAID 10 volumes.

Well, I suppose you could put a ZOTAC GT 710 1GB graphics card in the spare slot, as it is a purpose-built PCIe x1 card. Marginally better than the iGPU if you have the need, and handy to keep around if your onboard graphics quits working on a server and a small x1 slot is all you have left to work with.

Is the idle power measurement with disks spinning?

It is a very good system, I got two with the dual core APU and 16G memory. It is possible to use the PCIe slot for an SSD card as well, I have an Intel i750 that fits inside and works fine. I reckon that a couple of modifications to a future design could really make it quite good.

Running latest version of Solaris on this works a treat hosting files over NFS.

The price of these just doesn’t justify the performance. I have an older model I was able to pick up for a great price (NEW) and it works great for very small stuff, but it choked when I attempted a large ZFS FreeNAS setup.

Under Test Configuration, you have 4x 10TB HDDs and a 400GB SSD. I thought that this machine could only handle a maximum of 16TB overall, is this not true?

Does anybody know if the hard disks can be used in JBOD mode? I intend to run a Linux-based software RAID-5, because I do not want to rely on the controller hardware and RAID-5 isn’t supported anyway. Power outage is no concern to me as I am using a UPS. Thanks for any experience shared.

I cannot find anywhere if this little box does support hardware passthrough like VT-d for Intel. Can anybody tell?

Got it as micro server. Noise and energy focused. thoughts:

– louder than my strict requirements: 30dB, good for office, not good for audiophiles and bedroom. Need to sync it with drives (Seagate 2TB – 20dB) by 12cm fan replacement. Can’t really hear the tiny fan, usually opposite is the case.

– accessories: only electric cable, no optical drive as pictured everywhere

– annoying connection to optical drive / ssd, what a colossal waste of time for the customer straight away… need to search for very specific floppy cable hack, with very low availability on the market. Overall manhours very high, just to make HP save 1$

– annoying connection to fan by some generic 6pin port, replacement will be difficult as they shuffle pins and PWM management. Another monster expense to count on.

– paper limit of 16TB doesn’t apply in real life of course. My config is 3x2TB RAID0 + 10TB (nightly scrubber), all 4 is 20dB (the max for any of my setups), even the big one from the brand called “WD” (first time trying this brand after 20years with Seagate only, they now offer 10-12TB drives with idle 20dB!)

– BIOS is from AMI, triggers Marvell and Broadcom first, you can set them up to set up hardware RAID etc which you won’t do, but be sure boot time will be very long thanks to this. You can set TDP to 12(!)W to 35W.

– PCIx4 (unfortunate just x1) can be used for 10to8gbit (capped by PCI) Aquantia card which is now very widely used in appliances. I have direct link to QNAP 5to3gbit (capped by manufacturer) USB3 adapter, again Aquantia, this way i can use this connection for 3gbit locally, while server will connect aggregated 2gbit to switch. Use case: video editing..

– PCIx8 can then stay free for NVME, Gfx etc

– one use case is NAS showcase, with synology btrfs encryptfs not-so-cool implementation, getting 240MB/s on samba. I thought there’s much more potential as the raw aes256 benchmark throws 700MB/s. Now I noticed the 1/4 CPU utilization, guessing 1 core is used, once i ran dd if=/dev/null of=/volume1/test bs=8k in 2 shells in parallel, the speed hit 400MB/s, still not challenging RAID0 cap of spinning drives, good enough for this whacky file encryption. Machine loves multiple users.

– I assume Freenas ZFS will give better numbers. Will continue tests with more use cases (different NAS systems, VPN gateway role, VM).

– without encryption getting 350MB/s, which is a limit of 5to3gbit USB QNAP card

– dont have 2 SSDs there to check 10gbit saturation, but prehistoric marvell controller is connected via PCIe2x2, guaranteeing 10gbit and nothing more, which is good to save energy

– system is so cold during testing, that i think of just turning off the fan or use fixed low noise low rpm one

– no vibrations are transferred from hard drives, this time i have no use for pads

– overall performance for price is good, and performance for energy is fantastic.

1)you want to compares commercial NASes, ok, the first model of same performance is qnap 472xt, 1200$, 33W (bleh) on idle…wait they told us commercial NASes always save more energy if not money? here you pay 2x for 2x energy waste

2)you want to compare embeds like top routers Nighthawk, WRT32, ok, they eat just a bit less energy but 4 to 10x slower performance on all ciphers – can’t serve more than 1 user

3)you wanna compare xeon anything, you scale up performance with cores, but in each case, consumption will be horrid

4)finally you wanna compare with Gen8, seriously, i dont get this moaning about how gen10 sucks, you really love noisy 35w “micro”servers with half performance?

I’m thinking of getting one of these, can i use SSD in this unit, and does Windows Server 2016/2019 have any issues on this hardware?

Makes a nice Avigilon DVR… all decked out and still under $2k and much more peppy than the Gen8.

buy.hpe.com website had this listed as $109.99, but they canceled all the orders claiming a pricing error in their automated system. Course, they didn’t take the price off the website until after several complaints.

I’ve just bought an HPE ProLiant MicroServer Gen10 and now I want to install an NVMe SSD (Transcend 220S 512GB) into the PCIe x8 slot. I want to install it via a SilverStone ECM24 adapter (M.2 to PCIe x4).

Does anybody know if the SSD can be used as a boot drive for Windows 10 or Windows Server 2016? Do I need to configure the BIOS in this case?

Thanks for any help.

Of course you can boot anything from any drive. Windows, FreeBSD, Linux, Proxmox from USB, HDD, SSD, NVMe. I’ve got all of these drives inside and booted from all of them.

the only thing i dislike is the stupid fan there, it’s a very tough job to trick the system to believe it’s running its lousy fan. Wiring is completely different and i shorted one server and returned (later the seller, a biggest retailer, quit on selling them). If you use voltage reducer or wiring tricks, the motherboard will compensate (run faster). Really annoying to hear the fan go up and down all day.

Hi,

What do I need to do to use SAS disks in the MicroServer Gen10 (not Plus)? Can I use SAS disks in this bay? Is it possible to use SAS disks and connect the disk bay cable to a hardware RAID controller with a cache battery?

Best regards

I can confirm.

SAS disks work with the original enclosure and cables.

You only need a SAS HBA or RAID controller (6/12Gbit).