HPE ProLiant MicroServer Gen10 Plus Internal Hardware Overview

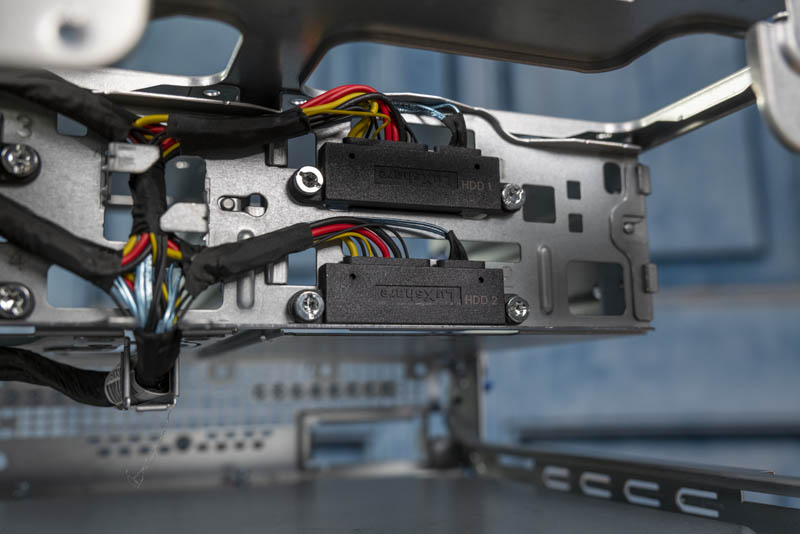

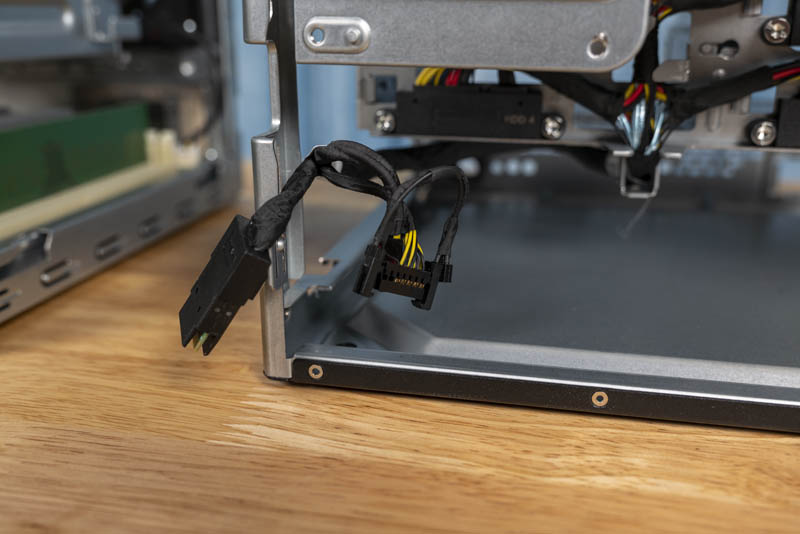

One area where HPE is saving costs in the MicroServer Gen10 Plus is the backplane. Instead of a traditional backplane, the drive bays are direct-cabled. HPE screws in the cable ends to create a functional pseudo-backplane. There is one major drawback: these are not hot-swap bays, and hot-plug functionality is not enabled. If one wants to install a new SATA drive, one needs to power off the unit, install the drive, then power it back on. This is one feature that we wish HPE provided, especially for edge virtualization, since servicing drives means downtime.

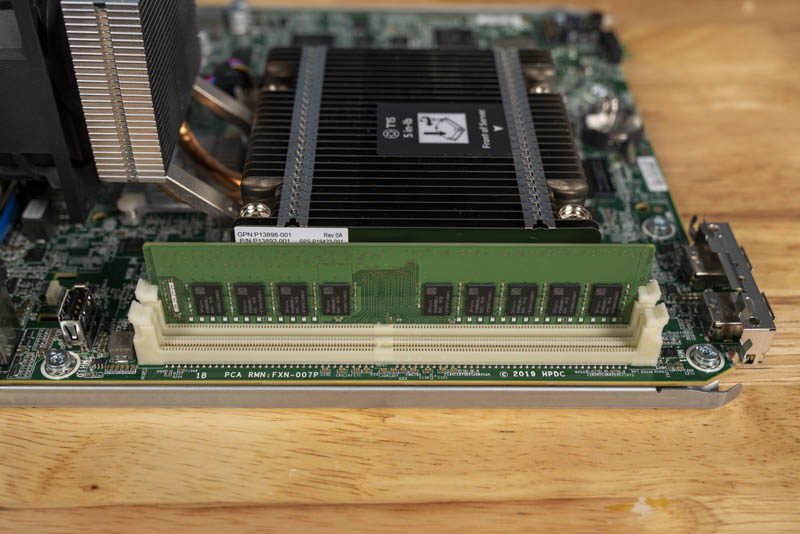

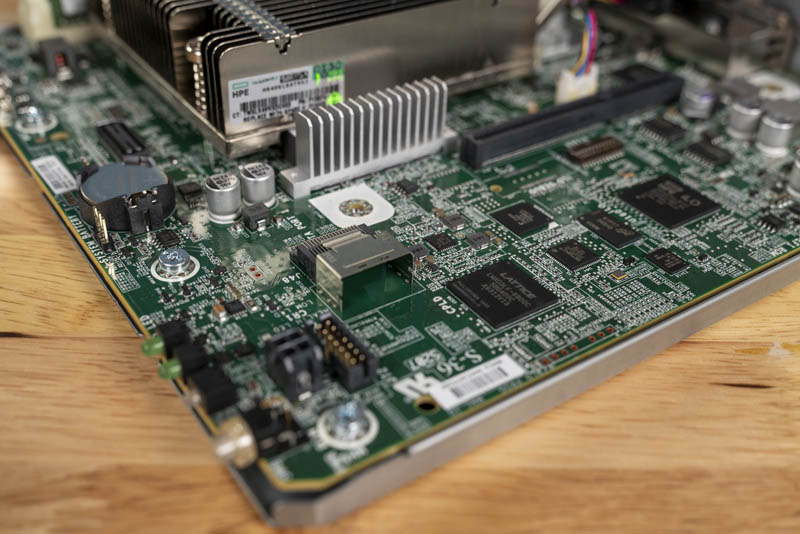

On the motherboard, we de-populated the risers and CPU heatsink to get a look at the system. One can see this is a very compact and dense motherboard. A key feature is the Intel LGA1151 CPU socket, which allows HPE to create one motherboard and support both the lower-cost Pentium CPU and the performance-optimized Xeon E-2224 CPU. We are not going into alternative CPU options here, but that is the focus of our subsequent piece. We will cover the performance of the two HPE SKUs later in this review.

There are two DDR4 DIMM sockets. These can take ECC UDIMMs, and HPE officially supports up to 2x 16GB for 32GB of memory. With the Xeon E-2224 SKU, we get DDR4-2666 operation. With the Pentium Gold G5420, we get DDR4-2400 operation. Both SKUs support two DIMMs and ECC memory. The CPU socket itself supports up to four DIMMs in dual-channel memory mode, but HPE does not have the space for that in such a compact form factor.
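To put the two memory speeds in perspective, here is a minimal sketch of the theoretical peak bandwidth at each speed. It assumes dual-channel operation with one 64-bit DIMM per channel, which is our assumption about this board's layout rather than something measured here. The function and dictionary names are illustrative only.

```python
# Theoretical peak DDR4 bandwidth for the two SKUs' memory speeds.
# Assumption: dual-channel operation, one 64-bit (8-byte) DIMM per channel.

BYTES_PER_TRANSFER = 8   # a DDR4 channel is 64 bits wide
CHANNELS = 2             # two DIMM sockets, assumed one per channel


def peak_bandwidth_gbs(mega_transfers_per_s: int, channels: int = CHANNELS) -> float:
    """Return theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    return mega_transfers_per_s * BYTES_PER_TRANSFER * channels / 1000


# Memory speeds per SKU, as stated in the review.
SKU_MEMORY_SPEED = {
    "Xeon E-2224": 2666,
    "Pentium Gold G5420": 2400,
}

for sku, speed in SKU_MEMORY_SPEED.items():
    print(f"{sku}: DDR4-{speed} -> {peak_bandwidth_gbs(speed):.1f} GB/s peak")
```

These are ceiling figures only. Real-world throughput will be lower and depends on the workload.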

There is still one USB 2.0 port on the platform, and this is an internal USB Type-A header. It would have been nice if this were USB 3.0, as that would make it more practical for internal boot media.

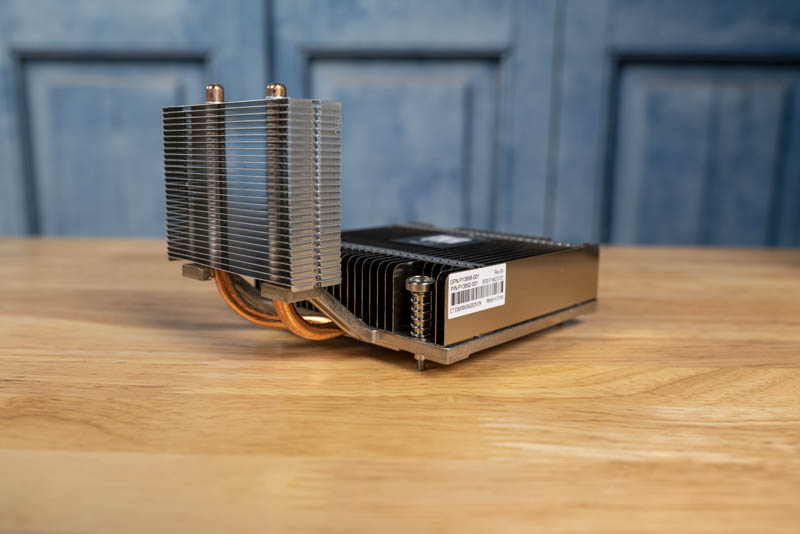

The heatsink you are seeing may look substantial, and it is. It is a passively cooled unit with a heat pipe that extends the heatsink into the airflow of the chassis fan. This is a very nice design.

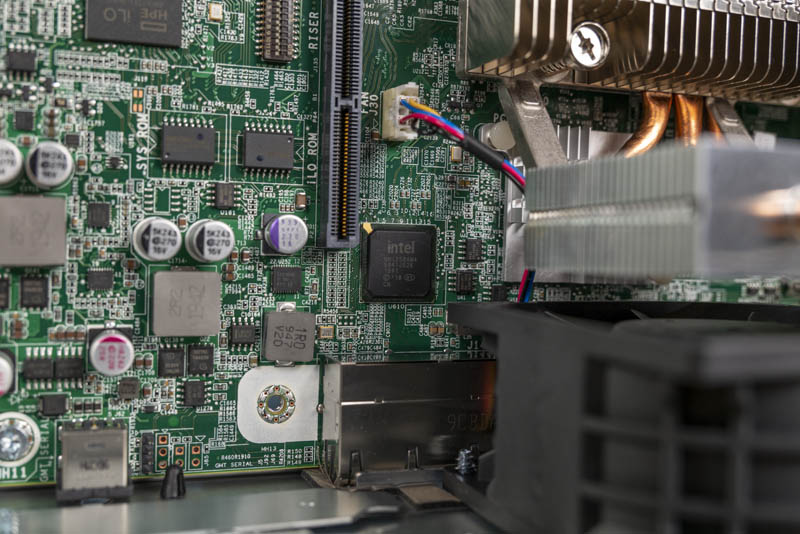

We mentioned the four 1GbE ports previously. These are powered by the Intel i350-am4 NIC chip. This is a high-end 1GbE NIC that has been around since 2011. It is expected to be supported through 2029, making it a very long-life part. Here is the NIC on Intel Ark. You will note the $36.37 list price. For some context, the Intel i210-at, a lower-end single-port NIC, is $3.20 each. Inexpensive consumer Realtek NICs cost well under a dollar. As you can see, this is an area where HPE chose a premium network controller where there was ample opportunity to cut costs. At the same time, by using the i350-am4, HPE gets excellent OS support that we will see in the OS testing section of this review.
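As a quick illustration of the price gap, the sketch below works out cost per port from the two list prices quoted above and compares the i350-am4 against four single-port i210-at chips. The helper names are ours, and the comparison ignores board area, PCIe lanes, and any volume pricing.

```python
# Per-port cost comparison using the list prices quoted in the review (USD).
NICS = {
    "Intel i350-am4": {"price": 36.37, "ports": 4},
    "Intel i210-at": {"price": 3.20, "ports": 1},
}


def cost_per_port(name: str) -> float:
    """List price divided by the number of ports on the chip."""
    nic = NICS[name]
    return nic["price"] / nic["ports"]


def cost_for_ports(name: str, ports_needed: int) -> float:
    """Total list price for enough chips to provide ports_needed ports."""
    nic = NICS[name]
    chips = -(-ports_needed // nic["ports"])  # ceiling division
    return chips * nic["price"]


for name in NICS:
    print(f"{name}: ${cost_per_port(name):.2f} per port")

premium = cost_for_ports("Intel i350-am4", 4) - cost_for_ports("Intel i210-at", 4)
print(f"Premium for one i350-am4 over four i210-at chips: ${premium:.2f}")
```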

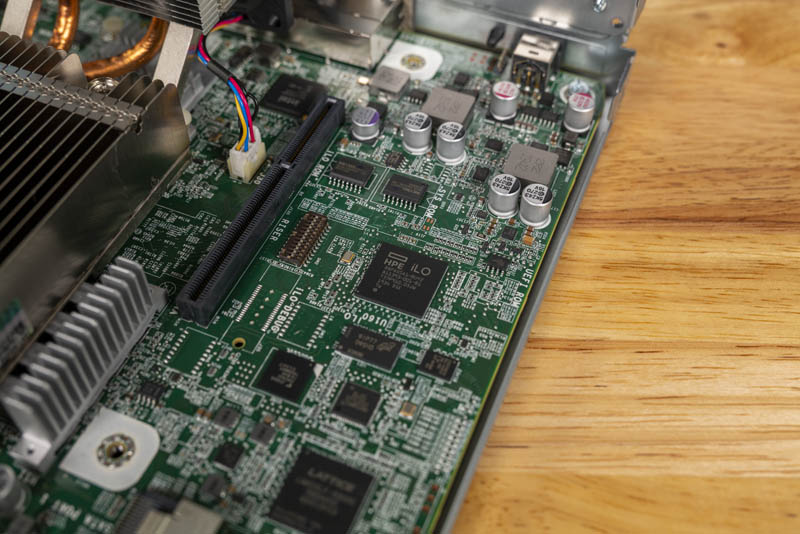

Another big feature is the addition of the HPE iLO 5 BMC. This adds cost to the unit, but also makes this system a first-class iLO-manageable server, just like the HPE ProLiant ML110 Gen10 and HPE ProLiant ML350 Gen10 tower servers and the HPE ProLiant DL20 Gen10 and HPE ProLiant DL325 Gen10 rack servers. Competitive systems, such as the Dell EMC PowerEdge T40 (review almost complete), omit the BMC to save costs at the expense of manageability.

PCIe expansion is via a PCIe Gen3 x16 slot. Given the power and cooling of this system, which we will discuss on the final page of this review, we do not consider this slot suitable for higher-end SmartNICs and GPUs, even the NVIDIA Tesla T4. HPE has a low-power AMD Radeon GPU option for those who need display outputs.

The top slot may look like a PCIe x1 slot at first, but it is designed to be used with the iLO Enablement Kit option. For STH readers, we highly recommend equipping this option and we will go into more depth on that later in this review.

The SATA connectivity is powered by the Intel PCH. The connector may look like a SFF-8087 SAS connector, but it is being used here for four SATA ports. There are no additional SATA ports or SATADOM headers onboard, which means there is no extra SATA port for boot drives. We wish there were a powered SATADOM header onboard, as that would allow all four drive bays to be used solely for storage.

The motherboard connectors are greatly reduced from previous versions. HPE is using custom connectors to deliver power and data to the rest of the chassis except for the SFF-8087 cable.

Overall, the HPE ProLiant MicroServer Gen10 Plus is a great hardware package. There are a few areas where HPE has the opportunity to innovate and make a category-killing product. We like the hardware direction HPE took.

Next, we are going to look at the system topology then the management stack before getting to our OS and performance testing.

I’ve skimmed this and wow. This is another STH Magnum Opus. I’ll read the full thing later today and pass it along to our IT team that manages branch offices.

I made it to page 4 before I ordered one. That iLO enablement kit isn’t stocked in the channel so watch out. I’m now excited beyond compare for this.

excellent review – would love to see one with Intel Xeon D Processor based model as well!

A really nice review, thanks a lot. Impressed with the Xeon performance in this kind of low-power system. I should/really want to get one, replacing my old gen 7 microserver home server.

Too bad they didn’t go EPYC 3000

I like seeing bloggers and other guys review stuff, but STH ya’ll are in a different league. It’s like someone who understands both the technical and market aspects doing reviews. I think this format is even better than the GPU server review you did earlier this week.

I’d like to know your thoughts about two or three of these versus a single ML110 or ML350. Is it worth going smaller and getting HA even if you’ve got 3 servers? I know that’s not part of this review. Maybe it’s a future guide.

@Teddy1974 Can you let me know more about the iLO enablement kit comment please so I can investigate? This is a shipping product.

I would use this for backup repository and perhaps as an SVN repository too

Your Windows 10 testing is genius but you missed why. What you’ve created is a Windows 10 Pro remote desktop system that can be managed using iLO, is small and compact and it’s got 4 internal 3.5″ bays.

If you plug RDP in, it’s a high-storage compact desktop when others this small in the market have shunned 3.5″.

gentle suggestion: perhaps when taking photos of “small” items like this, have another human hold a ruler to give perspective of size (more helpful than a banana :)

Thanks for mentioning the price within the article. Good info all around.

Not impressed by this product nor this review; need more infos on thermal performances.

Review lacks any discussion of thermal performance other than showing us the pretty picture of the iLO page and a brief mention of thermal limits on the PCIe Gen3 slot with certain add-in cards.

Complete lack of discussion of thermal performance of horizontally mounted HDD in this device where the review already admits to possible thermal issues with the design.

For me this review looks like a Youtube “unboxing” article for HPE products and not a serious product performance review.

Patrick, you can do better than this. Srsly.

Sleepy – we used up to 7.2k RPM 10TB WD/HGST HDDs and did not see an issue. We also discussed maximum headroom for drives + PCIe + USB powered devices is around 70W given the 180W PSU and how the fan ramps at around 10min at ~110W.

In the next piece, we have more on adding CPUs/ PCIe cards and we have touched the 180W PSU limit without thermal issues. Having done that, the thermal performance/ issue you mention is not present. If the unit can handle thermals up to the PSU’s maximum power rating, then it is essentially a non-issue.
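The headroom figure in the reply above reads as simple subtraction: a 180W PSU minus roughly 110W of load leaves about 70W for drives, PCIe cards, and USB-powered devices. A minimal sketch of that budget check follows. The per-device wattages are hypothetical placeholders for illustration, not measurements from this review.

```python
# Power headroom sketch using the figures quoted in the reply above.
PSU_WATTS = 180         # PSU rating stated in the review
BASE_LOAD_WATTS = 110   # approximate load quoted in the reply


def headroom_watts(psu: float = PSU_WATTS, base: float = BASE_LOAD_WATTS) -> float:
    """Watts left over for drives, PCIe cards, and USB devices."""
    return psu - base


def fits_budget(device_watts: dict, budget: float) -> tuple:
    """Return (fits, total) for a mapping of device name to wattage."""
    total = sum(device_watts.values())
    return total <= budget, total


# Hypothetical device draws, for illustration only (not measured values).
planned = {
    "4x 3.5in HDD": 4 * 8.0,
    "PCIe NIC": 15.0,
    "USB boot drive": 2.5,
}

budget = headroom_watts()
ok, total = fits_budget(planned, budget)
print(f"Budget {budget:.0f}W, planned {total:.1f}W, fits: {ok}")
```

Swapping in the actual power draw of the planned drives and add-in card gives a quick sanity check before ordering parts.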

A random question, if I may : will the Gen10Plus physically stack on top of / below a Gen10 or Gen8 Microserver cleanly? It looks like it should but confirmation would be appreciated :)

In the “comparison” article (between the MSG10 and the MSG10+), you wrote about the “missing” extra fifth internal SATA port: “[…] I think we have a solution that we will show in the full review we will publish for the MicroServer Gen10+.”

I really had hoped to read about this solution! Or did I just miss it?

Also, I’d like to know more about the integrated graphics: If I’m understanding it correctly, the display connectors on the back (VGA and DisplayPort, both marked blue) are for management only; meaning that even if you have a CPU with integrated GPU, that is not going to do much for you. (This is in line with the Gen8, but a definite difference with respect to the MSG10!) So … what GPU is it? A Matrox G200 like on the Gen8? Or something with a little more oomph?

Personally, I’m saddened to see that HPE skimped on making the iGPU unusable. :-(

TomH – the Gen10 Plus is slightly wider if you look at dimensions. You can probably stack a Gen10 atop a Gen10 Plus but not the other way around.

Nic – great point. As mentioned in the article, we ended up splitting this piece into a review of the unit for sale, and some of the customizations you can do beyond HPE’s offerings. It was already over 6K words. For this, we ended up buying 2 more MSG10+ units to test in parallel and get the next article out faster.

Patrick – Thanks for the update: I’ll be eagerly awaiting the follow-up article! :-)

Thanks Patrick – had hoped the “indent” on the top might be the same size as previous models, despite the overall dimensional differences, but guess not!

Patrick – when we could expect second part of article? I am curious to order this machine just now!

In the next 2 weeks. It is about half-written. We have 2 more inbound MSG10+ units to help get testing more parallel.

Patrick – sounds great! Btw, next to the cmos battery, there is undocumented 60pin connector. Do you have any idea what is this for?

HCX – not yet.

On the win10 setup was the embed RAID controller used?

run24josh – we did not install on a RAID array in this instance, but HPE has great documentation on how to use the S100i with Windows such as https://support.hpe.com/hpesc/public/docDisplay?docId=a00036381en_us

Does the iLO Enablement Kit allow you to use the server after OS boot, or is this the same as big servers where an iLO Advanced license is needed?

Disk temperatures when loaded with say 4* WD Red would be interesting =)

Nikolas Skytter -> 4* WD40EFRX -> About 32C in idle (ambient around 20-21C), max 36C when all disks testing with badblocks. Fan speed 8% (idle).

Patrick – I have found that undocumented connector exists on several supermicro motherboards as well.. and guess what.. undocumented in manual as well. Starting to be really curious..

OK, sadly it looks its really only debug connector, at least on other boards.

Lucky you, how were you able to install the latest Proxmox VE 6.1 on this server?

As soon as the OS loads, the Intel Ethernet Controller I350-AM4 turns off completely :\

Hi, could you please test if this unit can boot from nvme/m.2 disk in pcie slot without problem? There are some settings in bios that points to it, even there is no m.2 slot. Thanks!

This boots NVMe no problem.

Wonder if there would be a clever way to power this thing with redundant external power supplies

Having skipped the GEN10 and still owning a GEN7 and GEN8 Microserver this Plus version looks like a worthy replacement. Although I would have liked to see that HPE switched to an internal PSU, ditched the 3.5 HDD bays for 6 or 8 2.5 SSD bays (the controller can handle 12 lanes) and used 4x SODIMMS sockets to give 4 memory lanes. I also agree with Kennedy that 10Gbit would be a nice option (for at least 2 ports).

How did you manage to connect to the iLO interface? My enablement board did not have the usual tag with the factory-set password on it. Is there some default password for those models?

@Raphaël PWD is on bottom side of case, together with some other tags.

Has anyone else had / having issues when running VM’s? I have the E2224 Xeon model 16Gb RAM, but keep having performance issues. Namely storage.

Current setup 1x Evo 850 500Gb SSD 2x Seagate Barracuda 7.2k 2Tb Spindle disks.

Installing the Hypervisor works fine. Tried ESXi 6.5,6.7 and 7 and used the HPE images. All installed to USB and then tried to SSD all install and run ok, but when setting up a VM, it becomes slow – 1.5hrs to install a windows 10 image, then the image is unuseable.

Installed Windows Server 2019 Eval on to bare metal, Installs ok, but then goes super sluggish when running Hyper-V to the point of being unusable. Updated to the latest BIOS etc using the SPP iso.

Example. Copy 38Gb file from my Nas to local storage under 2k19, get full 1Gbps, start a hyper-v vm, it slows to a few kbps, even copying from USB on the Windows 2019 server, not VM, Mouse becomes jumpy and unresponsive.

Dropped the VM vCPU to 2, then one, still no difference.

Tried 2 other SSD’s.

BIOS settings were set to General Compute performance, and Virtualization Max performance.

Beginning to think I have a faulty unit.

Hi! Do you think that it could be possible to add a SAS raid controller on the PCI express and use it with the provided sas connector?

It would look a little frankenstein but with a NVME on the minipcie and a proper raid controller this would be a perfect microserver for ESXI

Hi Patrick, thx for the amazing review!

After reading this review I decided to buy this amazing device with the Xeon CPU and 2x Crucial 32GB. So-far-so-good. But I have a strange problem. The Sata disk (1Tb) is performing very bad (10-20 MB/s) in Vmware, Xen and HyperV. After replacing them, i still had the same problem. SSD is performing better, 200-300 MB/s, but still not optimal.

After connecting an 6TB Sata disk via RDM in VMWare it was performing better and when I install clean Ubuntu it works as suppose to be. But Virtualization is a disaster. I hope I do not have a faulty device.

@Chris: You are describing exactly the same problem I have!

Nice to see half microserver. It’d be a tease if I had choice at the time buying full microserver. Especially for the noise. Full microserver just can’t be tweaked for noise and would spin non-standard fan on non-standard connector with non-standard pwm up and down.

There’s even 4 aggregable NICs and 1 slot for 10gbit.

The only issue is CPU choice this time, 3226 passmark / 54W or 7651 passmark / 71W. That’s far above from i’d expect for a microserver. In the era of almost zero watt i7 NUCs. Otherwise, nice build.

Hi, I was googling HPE Microserver Gen 10 plus Windows 10 and got to this article. I have been trying to install Windows 10 Pro on it. First it would not install when the S100i SW RAID Array was enabled and two drives set up as RAID 1. W10 did not recognize the array. I reset the controller to SATA and was able to finish the installation. However, the display driver was not installed (it uses the Microsoft basic display adapter driver) and device manager shows unknown devices: 2 x Base System Device and PCI simple communications controller.

I updated the BIOS to the latest one U48 2.16. The Windows updates did not update any driver. I’ve check the HPE support site and found no driver for Windows 10 (unlike Gen 10). I am wondering how you have installed Windows 10 on this machine. Thank you for any feedback.

It´s possible to remove this riser and add another one to add a nvme and a GPU?

@Jonh Peng: Hi John, I found this article due to the same reason as you. Have you solved the troubles with Windows 10 yet? Have you tried to install drivers which are available for HPE MicroServer Gen 10 (not plus)?

btw the price difference between pentium and xeon in europe is massive. it’s like almost double.

what is VGA “management”? can it connect to display or not? confused about the secretivness about it.. i don’t care about transcoding, but i assume i can log in to console via VGA/DP port.

VGA is connected to iLO so it is more like a traditional server console display output.

Ouch. The price has gone up by over $100 since March! HP Site lists the P16006-001 at $676.99 now.

Thomas – check resellers. They are often less expensive.

Hi there. Thanks for posting this article, there’s lots of great information.

One thing I am trying to find out is what is the maximum resolution from either the VGA port or the Display port, under Windows Server 2016.

We would like to run them at 1920×1080

HPE were unable to help (not sure why, they make them but ???)

Any help would be appreciated.

Has anyone tested 64 GB RAM?

Can you please disclose which reseller? Cannot find iLO Enablement Kit below $90. Thanks

Do the USB3.2 Gen 2 ports have Displayport 1.4a support?

i.e., can they emit 4K 60hz HDR?

Any BIOS updates needed if I need to get an E-2236 for better performance?

My i5-6500 won’t work on it.

I was wondering if I could get more specs on the power supply? I’ve just ordered a few microservers but I’d like to have a spare PS on hand. Looking up the official part number has turned up nothing relevant.

I am wondering if anyone has had issues with the fan noise when running Debian or Proxmox? I’ve found that even when they are idle, the fans after a while will spike to 100% and sit there indefinitely till I restart the system.

Just curious if there is a solution to the problem, perhaps additional tools to install or compatible drivers, or something I can change in the BIOS to stop this happening?

@Jonh Peng & @Michal, did you have any success with Windows 10 in the end?

Can I install and run Windows Server 2019 from an M.2 drive in a PCIe board without need for additional drivers? If so, do I need a specific type of card or M.2 SSD?

@SomebodyInGNV, I have W10 running using a Silverstone ECM22 PCIe Expansion Card with a WD Black 500GB SN750 M.2 2280 NVME PCI-E Gen3 Solid State Drive (WDS500G3X0C).

Hi there,

Do you think a RAID card (LSI 9267-8i for ex.) could be used keeping power connector on the MB and using the card’s data port ?

Thx

Very useful and enjoyable review, one the best I’ve read in years :-)

I wonder if I could seek your thoughts…. I have an Adaptec 3405 which I’ve used for years. I know it’s an old card now (purchased about 2007) but works great and is super solid with every SATA and SAS drive configuration I’ve used with it. It has never let me down and I know how it ticks.

I appreciate the drives can be SATA only in the HPE Gen 10+, because of the back-plane to the drives, but would you say there is a reasonable chance this card will work with this HP’s motherboard as long as I continue to use SATA drives only? I have a low profile card bracket for it and it’s not a particularly wide card (so I’m pretty sure it will physically fit) but I’m most concerned the HP’s firmware won’t like it. The server it is currently in was built only in 2019 and works fine. Do you have any experience of using older RAID cards that are from non-HP vendors?

Many thanks in advance for even just your thoughts – alternatively whether you can recommend any hardware RAID cards that are suitable for this unit if you think my Adaptec re-use might be unwise would also be appreciated :-)

Great Review! I actually bought a MicroServer Gen10 plus on the recommendation from this article. Unfortunately, when I received it, I could not access the BIOS. It seems like the F9-F11 keys do not work. iLO 5 is disabled (per the msg in the boot screen). I am at a loss. I know the keyboard works (I can navigate an Ubuntu installation with it and Ctrl+Alt+Del works). I’ve tried to clear the NVRAM using the #6 jumper, to no avail.

Any ideas on how I can get into the BIOS? I’ve tried every USB port including the internal one. Any help would be greatly appreciated.

Hi, I’ve stumbled on this guide while looking for ways to properly install Windows 10 Pro on the Gen10 Plus. So far, while Windows runs, the driver situation is just horrible. So many unrecognized drivers, and the display is basically locked in a single resolution. No drivers are available anywhere on HP’s site and Windows Update doesn’t pull in any either. Just wondering if anyone has been able to resolve the driver situation. Thanks

Jati Indrapramasto, it is interesting to see someone with the problem of not being able to find desktop OS (Windows 10) drivers for a box instead of the other way around of being able to find all of the desktop drivers you could need but no server OS (Server 2019) drivers.

I guess this nice little box is a server at heart.

It is a real pleasure not having to manipulate driver files to work with Server 2019 on this sweet little server.

Jati Indrapramasto, I forgot to wish you luck with finding drivers for Windows 10. Good luck hope you are successful.

Seems that, at the moment at least, the G5420 model is fairly hard to find. Given that this box is now nearly 3 years old, do we have any idea if HPE are going be replacing this soon, or is this purely a victim of the semiconductor shortage?

Hi, will there be a review/update to the new v2 version of the Microserver Gen10 Plus? The new one seems to have among other things PCIe Gen4, built in TPM, different USB port layout. Thank you!

I tried to get a proper answer from HPE about a potential thermal issue, but got no answer. One of the sensors (if not mistaken, BMC 07 or so) is in the range of around 75 – 80C and the allowable value is 105C.

All other sensors show much lower temperatures and they are in the very middle of the allowed range.

Even though this is still in the “normal” range, it is not good to have the temperature of one component close to the limit all the time while everything else is mid-range. It suggests that this component will fail sooner than it would at a lower temperature.

Really nice in-depth articles! – Would be really nice if you would also review the new “HPE ProLiant MicroServer Gen11” and compare it to the “Gen10+ v2”. – I look forward to it. :)