This is a review I have wanted to do for a long time. At STH we have a ton of Intel Xeon D coverage. As a result, we have been trying to get one to review ever since we first saw the new HPE ProLiant EC200a Xeon D Server for SMB Hybrid Cloud. Now that it is very late in the product lifecycle, we were able to grab one and show you around. This was one vision of the edge that HPE attempted, but one you may not have heard of. We wanted to explore what was offered and provide some market perspective.

HPE EC200a Video

If you want to listen to a review rather than read, you can check the accompanying video.

We have more detail in the review here, but there is a bit more color in the video version. We suggest opening it on YouTube and playing it while you read the review, or saving it to watch later.

HPE EC200a Hardware Overview

The unit is relatively small in stature and designed to sit on a desk. HPE has a number of mounting options for the ProLiant EC200a including a stand and wall mount. The system itself is designed to be light (excluding hard drive weight), a characteristic that helps with versatile mounting.

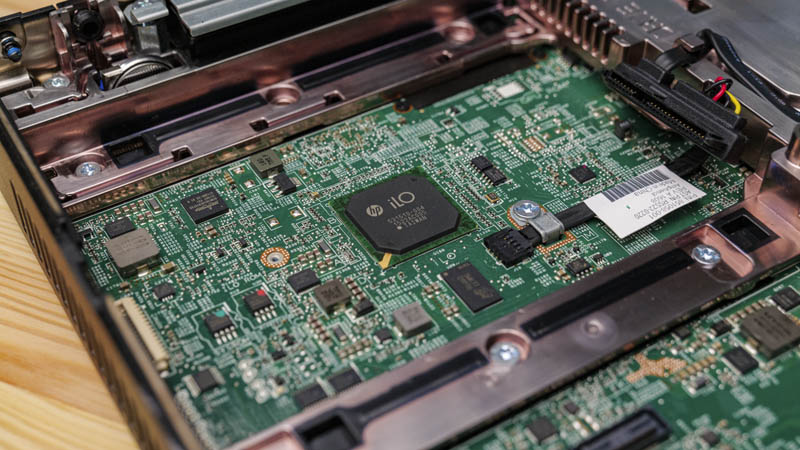

On the front of the system we get one USB 3.0 port and a single USB 2.0 port. Moving to the rear, we get two USB 3.0 ports and a legacy VGA port. The main feature is perhaps the three NIC ports. One is an HPE iLO4 out-of-band management port. The other two are Intel i366 Ethernet ports labeled WAN and LAN. Above these three ports is a blank for an optional LAN expansion module, which added four more ports using a non-standard, proprietary, system-specific NIC form factor that is now hard to obtain.

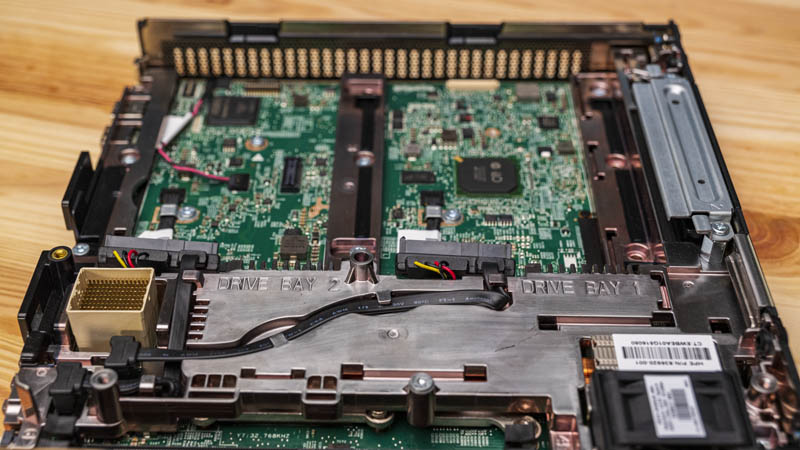

Inside the system, we can see a solution that is designed with the motherboard on the bottom, with space for two 3.5″ hard drives above the main PCB.

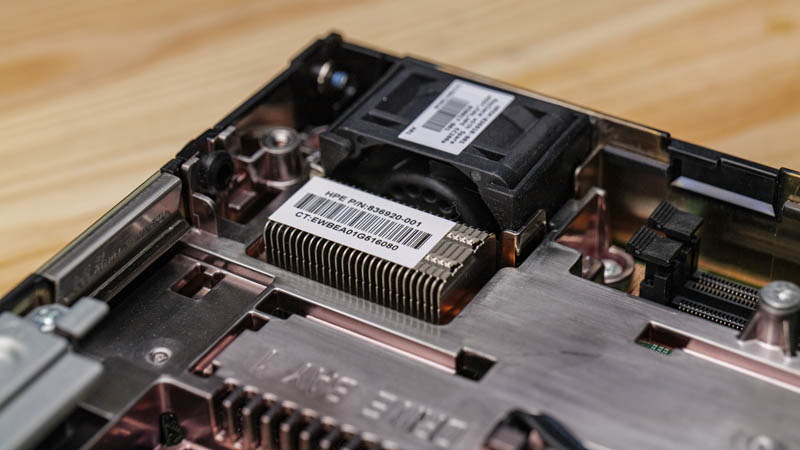

Cooling is provided via a single 40mm fan. There is also a heatsink that is connected via a heat pipe to the Intel Xeon D-1518 4-core/8-thread processor that is built into this unit.

The heat pipe assembly is required since the Xeon D-1518 CPU sits under a metallic-looking, yet plastic, piece next to the two DDR4 DIMM slots. These two slots each take up to a 32GB DDR4 RDIMM for a total of 64GB of memory. Given DDR4 pricing and technology today, these are very inexpensive to obtain compared to when the system was first launched.

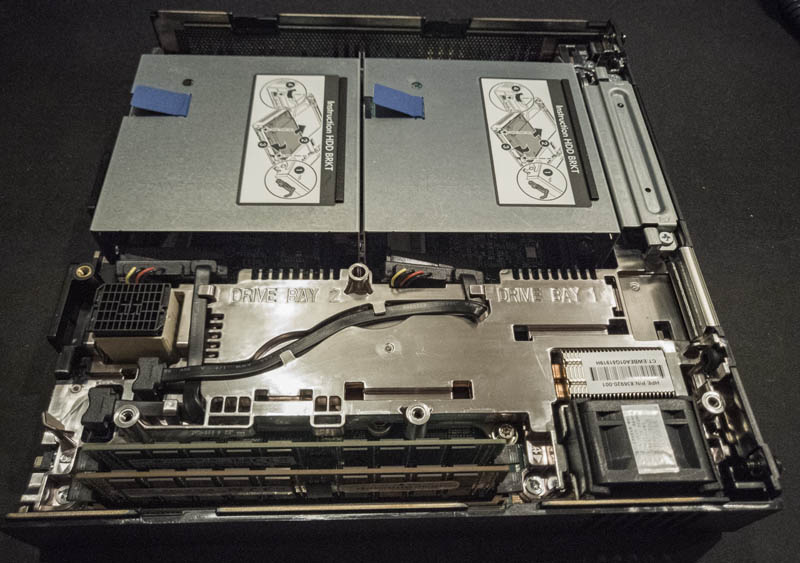

HPE has a very nicely cabled solution to get data and power to two SATA drives. The system is designed for 3.5″ hard drives with mounting brackets that our unit did not come with.

This is what the system looks like with the 3.5″ drive holders, thanks to our STH forum member mmo who sent these in.

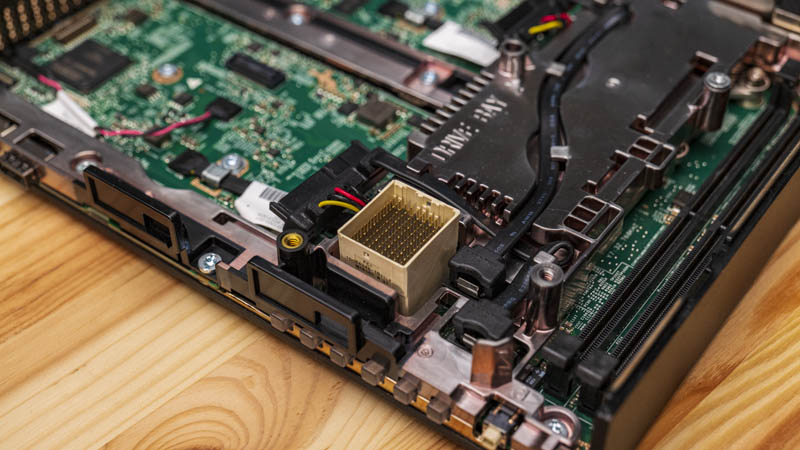

That high-density connector is actually used for the non-standard NIC that was optional in these systems. The NIC started at the front right of the chassis here and proceeded over the plastic cover internally to the opposite side rear of the chassis. We would have preferred a more standard NIC form factor, whether that is low-profile PCIe, FlexLOM, OCP NIC 2.0 / 3.0, or something similar, since that would have extended the usefulness of the system. In contrast, many of the Supermicro Xeon D-1500 quad-core solutions had either 4x 1GbE built-in or 10GbE networking built-in plus standard PCIe slots at a lower cost. Also, the HPE ProLiant MicroServer Gen10 Plus and the more contemporaneous MicroServer Gen10 both used standard PCIe slot form factors for expansion.

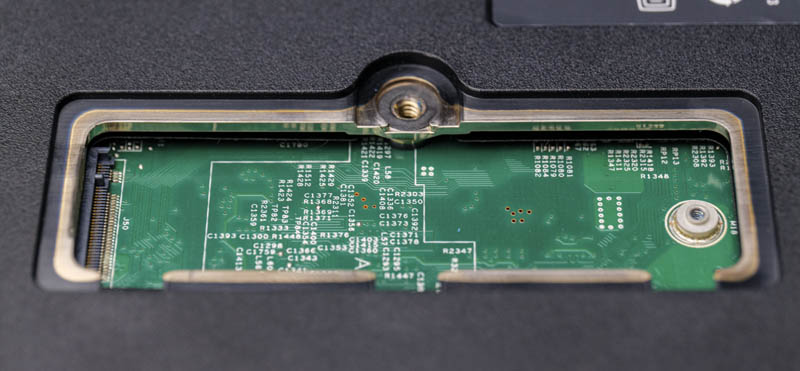

There is another area of expansion. On the bottom of the system, we have an M.2 2280 NVMe drive slot so one can add an SSD to this system along with the hard drives.

Another feature of the bottom of the system is the storage connector. This is normally covered by a small rubber cover (shown in the video) and it allows for external storage expansion.

We did not have the expansion unit, but it hooked into the bottom of the chassis and utilized this connector to provide an additional 4x SATA drive bays. These drive bays used more standard hot-swap trays and were commonly sold with 4TB drives. With the two internal bays, that would increase the total system capacity to 6x SATA III drives.

Another feature we wanted to talk about is management. If you have seen our Introducing Project TinyMiniMicro series, there is some overlap here. One of the major markets for the EC200a is retail, and other companies have taken Project TMM nodes and adapted them for retail use as well. While Project TMM nodes typically utilize Intel vPro or AMD DASH for management, the EC200a utilizes the more feature-rich iLO4 solution. We are going to discuss this more in the management section.

One item we wanted to close our hardware overview with was the look and feel. HPE did a great job of making the outside of the system look interesting and similar to other ProLiant branding. The inside of the system looks like it has metal reinforcement everywhere. In reality, most of this system is adorned with plastic, including the outer body and these internal metallic-looking surfaces. The engineering is mostly excellent internally; however, the unit certainly feels like a much less expensive product compared with the Project TinyMiniMicro nodes.

Next, let us get to our test configuration and management before getting to the performance, power consumption, noise, and our final thoughts.

Now that it’s been a few years, what does everyone seem to be using this for? It’s almost like a mini/micro pc in a larger form factor so it can take bigger physical drives.