Today we are going to take a look at one of HPE’s new network adapters. For many years, HPE utilized the FlexLOM. Now, it is following the industry trend and using an OCP NIC 3.0 form factor, mostly. In our HPE 25GbE NIC review, we are going to see what HPE offers, and then compare it with its competition.

HPE Ethernet 25Gb NVIDIA ConnectX-4 OCP Adapter Overview

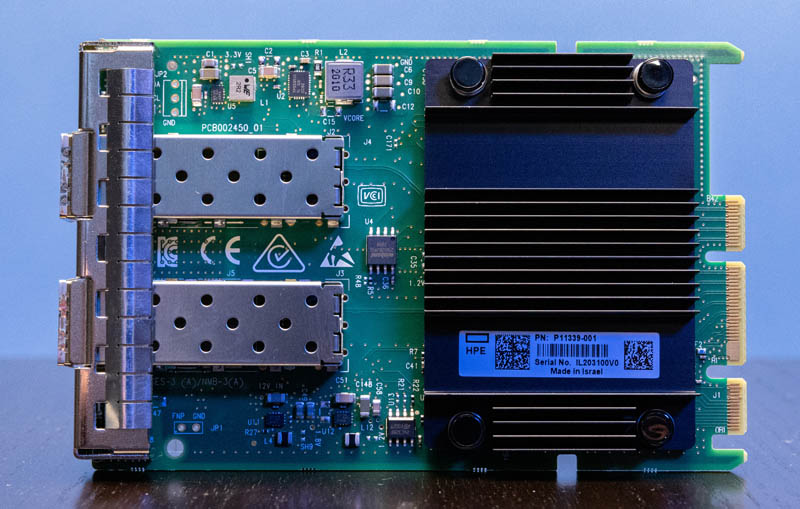

First off, let us get to the big point. Here we have two SFP28 cages on the faceplate. These SFP28 cages are the 25GbE upgrade over the SFP+ 10GbE generation.

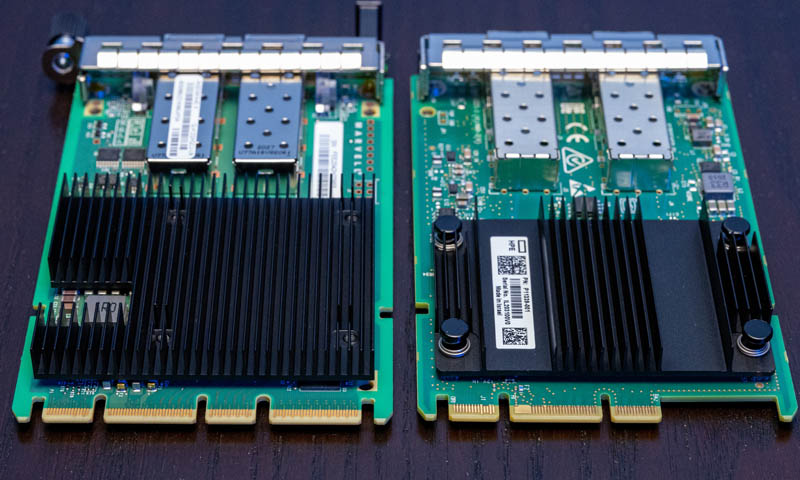

Something pertinent to this portion is how HPE constructs its faceplate. Most vendors utilizing the OCP NIC 3.0 form factor have a handle and external retention in the form of a thumbscrew. That is commonly called the “SFF with Pull Tab” retention mechanism (there is also a larger LFF OCP NIC 3.0 form factor, but most NICs you see today are SFF). This makes servicing NICs much easier than in previous LOM/mezzanine generations because one can quickly swap NICs from the rear of the chassis. Below, we have a Lenovo Marvell OCP NIC 3.0 and the HPE NVIDIA version, and one can clearly see the difference. HPE utilizes the “SFF with Internal Lock” design, which means someone maintaining the server has to open the chassis to service the NIC.

One other, less standardized item where we wish HPE had followed Lenovo’s lead is larger LED status lights. The LED lights on the Lenovo unit are absolutely huge.

Looking at the card from overhead, one can see the cages and then the heatsink. The notches near the center of the heatsink (top and bottom below) are there for internal retention latching. The NIC is built around the Mellanox (now NVIDIA) ConnectX-4 Lx dual 25GbE NIC chip. These chips are relatively low power and have been around for a long time. Mellanox was early to the 25GbE generation, which helped make the ConnectX-4 Lx a very common NIC.

Something we will quickly note here is that the edge connector is not the full 4C+OCP x16 connector. Instead, this is the 2C+OCP connector for a PCIe Gen3 x8 link. A dual 25GbE NIC does not need a full x16 lane configuration for only 50Gbps of network bandwidth.
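As a rough sanity check on why x8 is enough, the sketch below computes raw per-direction PCIe Gen3 bandwidth from the 8 GT/s per-lane signaling rate and 128b/130b line encoding. It deliberately ignores TLP/DLLP protocol overhead, so real usable throughput is somewhat lower; the function name is our own illustration.

```python
# Rough per-direction PCIe Gen3 bandwidth, counting only line encoding.
# Assumption: packet/protocol overhead (TLP and DLLP headers, flow control)
# is ignored, so usable throughput in practice is lower than these figures.

GEN3_GT_PER_LANE = 8.0      # PCIe Gen3 signaling rate per lane, in GT/s
GEN3_ENCODING = 128 / 130   # Gen3 uses 128b/130b line encoding


def pcie_gen3_gbps(lanes: int) -> float:
    """Per-direction Gen3 bandwidth in Gbps after line-encoding overhead."""
    return GEN3_GT_PER_LANE * GEN3_ENCODING * lanes


NIC_GBPS = 2 * 25  # two SFP28 ports at 25GbE each, per direction

for lanes in (8, 16):
    link = pcie_gen3_gbps(lanes)
    print(f"Gen3 x{lanes}: {link:.1f} Gbps per direction "
          f"({link - NIC_GBPS:.1f} Gbps above {NIC_GBPS} Gbps of Ethernet)")
```

A Gen3 x8 link works out to roughly 63Gbps per direction, which covers 2x 25GbE. Protocol overhead narrows that margin in practice, but doubling to x16 would mostly add unused lanes.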

Here is a picture of Lenovo’s adapter utilizing the 4C+OCP connector and HPE’s using the 2C+OCP.

Underneath the card, there are a few components, and HPE places a sticker with its information on a protective shroud.

Overall, this is fairly normal for a 25GbE NIC and looks a lot like the Mellanox/NVIDIA card. For your reference, this is the P11339-001 and the CX4621A. You will also see this NIC in one of our upcoming reviews.

Performance

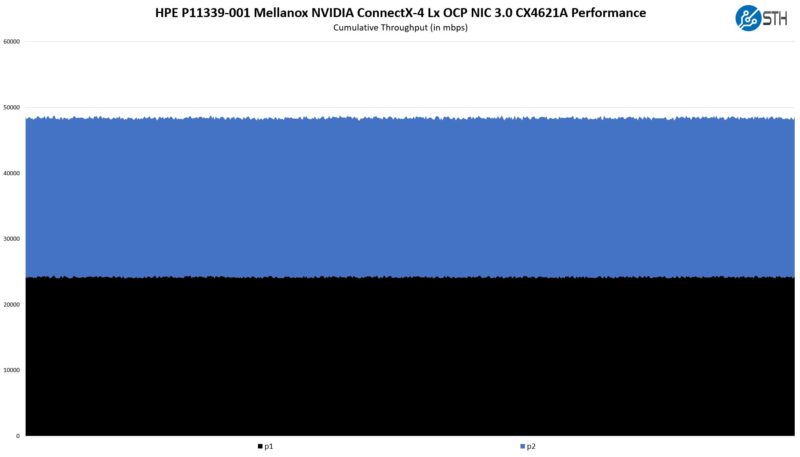

We quickly tested that we could pass traffic on the NICs.

As one would expect, we were successful. We also tested at 10Gbps. The speed we did not get a chance to test, and one that may be important for some, is 1Gbps. Officially, the ConnectX-4 Lx supports 1GbE speeds. For those running HPE servers where one port may be used on a 1GbE/10GbE management network and the other on a 25GbE network, this may be important.
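For checking which rate each port actually negotiated (for example, one port on a 1GbE/10GbE management network and the other at 25GbE), Linux exposes the link speed in Mb/s at `/sys/class/net/<iface>/speed`. The sketch below reads that file; it assumes a Linux host with the NIC driver loaded, the interface names are hypothetical placeholders, and it is an illustration rather than our test methodology.

```python
from pathlib import Path
from typing import Optional

# Standard Linux sysfs location for network interfaces.
SYSFS_NET = Path("/sys/class/net")

# Rates discussed above, keyed by Mb/s as sysfs reports them.
SPEED_LABELS = {1000: "1GbE", 10000: "10GbE", 25000: "25GbE"}


def link_speed_mbps(iface: str, sysfs_root: Path = SYSFS_NET) -> Optional[int]:
    """Return the negotiated link speed in Mb/s, or None if down/unknown."""
    try:
        raw = (sysfs_root / iface / "speed").read_text().strip()
        speed = int(raw)
    except (OSError, ValueError):
        # Missing interface, unreadable file (some drivers refuse the read
        # when the link is down), or unexpected contents.
        return None
    # Some drivers report -1 when the speed is unknown.
    return speed if speed > 0 else None


def describe_speed(speed_mbps: Optional[int]) -> str:
    """Map a speed in Mb/s to a friendly label."""
    if speed_mbps is None:
        return "link down or unknown"
    return SPEED_LABELS.get(speed_mbps, f"{speed_mbps} Mb/s")


if __name__ == "__main__":
    # Hypothetical interface names; substitute the ones on your system.
    for iface in ("ens1f0np0", "ens1f1np1"):
        print(iface, describe_speed(link_speed_mbps(iface)))
```

The `sysfs_root` parameter exists only so the function can be pointed at a fake directory tree for testing; on a real system the default is what you want.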

Final Words

Overall, this is one of those cards that is not overly exciting. 25GbE is widely deployed these days, and the ConnectX-4 Lx has been around for some time. Our Mellanox ConnectX-4 Lx Mini-Review was from 2019, before NVIDIA acquired Mellanox. Still, it is always interesting to look at these NICs and see how HPE’s approach differs from others. It is slightly disappointing that HPE went with the internal latch rather than the pull tab option, since it means that NIC replacement, installation, or removal will be much faster on a Lenovo server than on an HPE server. Still, compared to the older FlexLOM, this is a move towards an industry standard that we have to approve of.
