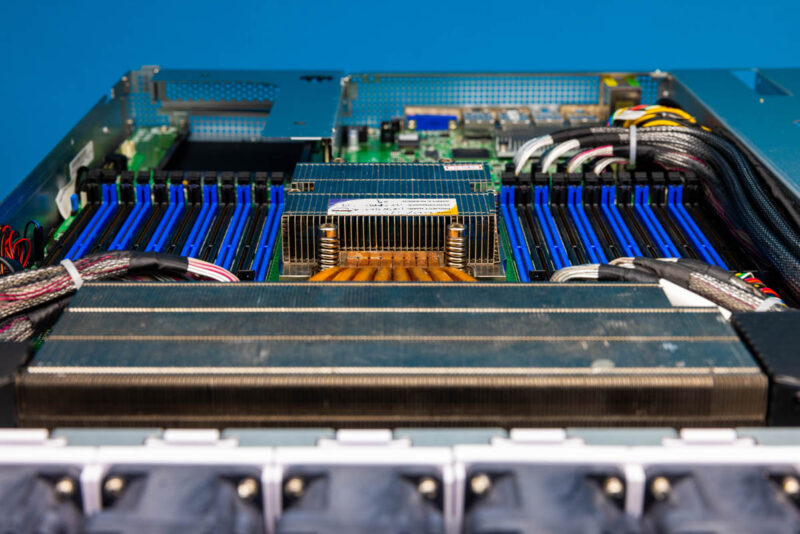

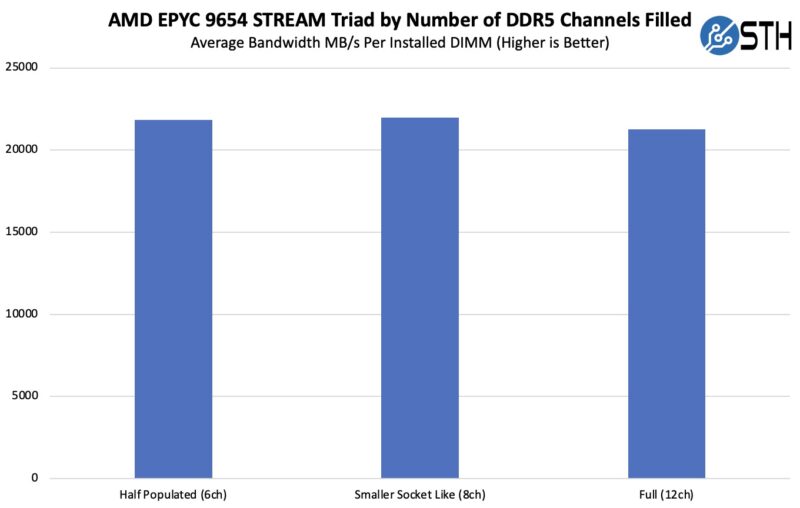

Patrick recently had a conversation that he asked me to generate some data for. During a consultation, the client asked whether they should fully populate the memory channels in an AMD EPYC 9004 server deployment. A big factor was that the organization in question was replacing some of its AMD EPYC 7002 “Rome” and Intel Cascade Lake generation servers on a 5-year refresh cycle, so it thought it could save money and power by populating fewer DIMM slots.

Testing Different Memory Configurations with AMD EPYC Genoa

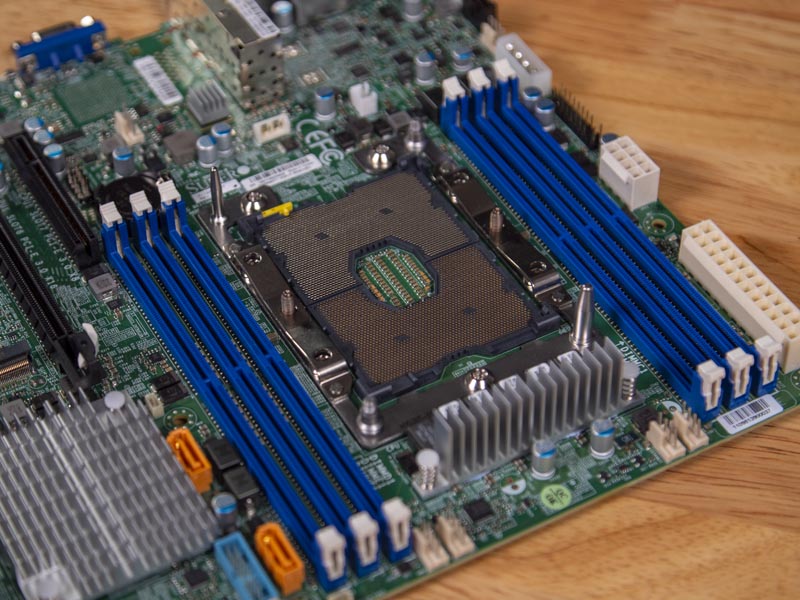

We tested three quick configurations. The first was half populated, with six channels per CPU, which aligns with the six-channel memory of the Skylake/Cascade Lake Xeon generations (1st Gen and 2nd Gen Intel Xeon Scalable).

The second was an 8-channel configuration that matched the eight channels of Ice Lake (3rd Gen Xeon Scalable) and AMD EPYC 7002/7003 (Rome/Milan). It is also similar to the 4th Gen and 5th Gen Intel Xeon Scalable (Sapphire Rapids and Emerald Rapids).

Our final configuration was a full 12-channel configuration with 1 DIMM per channel, which we would expect to maximize memory bandwidth.
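For a sense of what each of these configurations offers on paper, the theoretical peak bandwidth per socket can be estimated from the channel count. This is a minimal sketch, not the benchmark behind the charts. It assumes DDR5-4800 (the 1DPC speed Genoa launched with) and a 64-bit data path per channel, and `peak_bandwidth_gbs` is a name made up for illustration.

```python
# Theoretical peak DDR5 bandwidth per socket for a given channel population.
# Assumptions (not from the article's test data): DDR5-4800 and 8 bytes
# transferred per channel per transfer (64-bit data path, ECC bits excluded).

BYTES_PER_TRANSFER = 8


def peak_bandwidth_gbs(channels: int, mt_per_s: int = 4800) -> float:
    """Return theoretical peak bandwidth in decimal GB/s for one socket."""
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000


if __name__ == "__main__":
    # The three populations tested: 6, 8, and 12 channels per CPU.
    for channels in (6, 8, 12):
        print(f"{channels:>2} channels: {peak_bandwidth_gbs(channels):.1f} GB/s")
```

Under those assumptions the three configurations work out to 230.4, 307.2, and 460.8 GB/s per socket. Measured bandwidth will land below these ceilings, which is why the scaling curve is worth testing rather than assuming.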

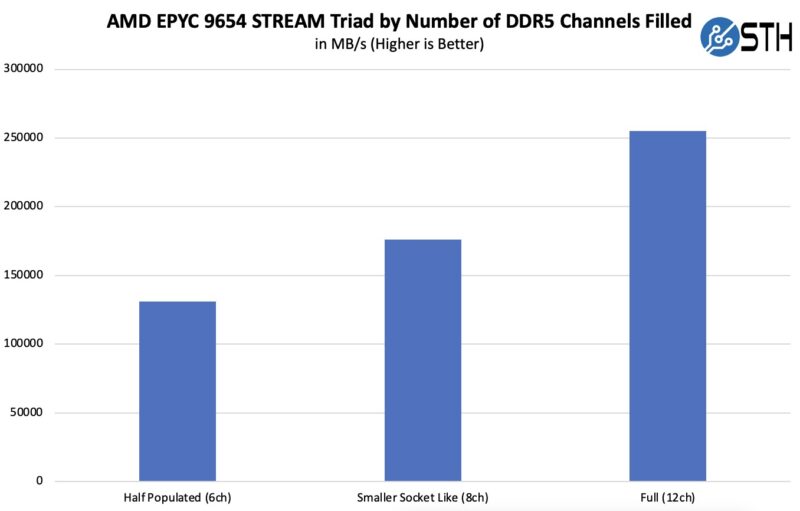

Here is what we got when scaling the number of DIMMs.

Many would argue that we are losing efficiency by adding more memory channels and that each incremental DIMM is not adding as much. That is true, but this is still a 95%+ scaling curve, not a case of poor scaling. Here is a look at that kind of scaling.

That is pretty good. While it is below the figures for a socket like the NVIDIA GH200 480GB Grace Hopper, the AMD EPYC setup also has more memory capacity, and it is using 2022-era DDR5 speeds, since that is when Genoa was first released. We fully expect Turin with 12 channels of DDR5 to surpass those figures simply with the increase in DDR5 speeds. Intel Granite Rapids-AP with MCR DIMMs should be close to the lower capacity GH200 120GB/240GB in terms of memory bandwidth.
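If you want to check a scaling claim like the 95%+ figure against your own measurements, one common way is to divide the observed speedup by the ideal speedup. The sketch below assumes that definition. The function name and the bandwidth numbers in the example are placeholders for illustration, not results from our testing.

```python
# Scaling efficiency: how close measured bandwidth comes to ideal linear
# scaling when channels are added. 1.0 means perfectly linear.

def scaling_efficiency(base_channels: int, base_bw: float,
                       channels: int, bw: float) -> float:
    """Return (observed speedup) / (ideal speedup) relative to a baseline."""
    observed_speedup = bw / base_bw
    ideal_speedup = channels / base_channels
    return observed_speedup / ideal_speedup


if __name__ == "__main__":
    # PLACEHOLDER numbers in arbitrary units, NOT measured data:
    # baseline of 100.0 at 6 channels, 192.0 at 12 channels.
    eff = scaling_efficiency(6, 100.0, 12, 192.0)
    print(f"Scaling efficiency: {eff:.0%}")
```

Plugging in measured bandwidth for each population in place of the placeholders gives one efficiency figure per step up the curve.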

Final Words

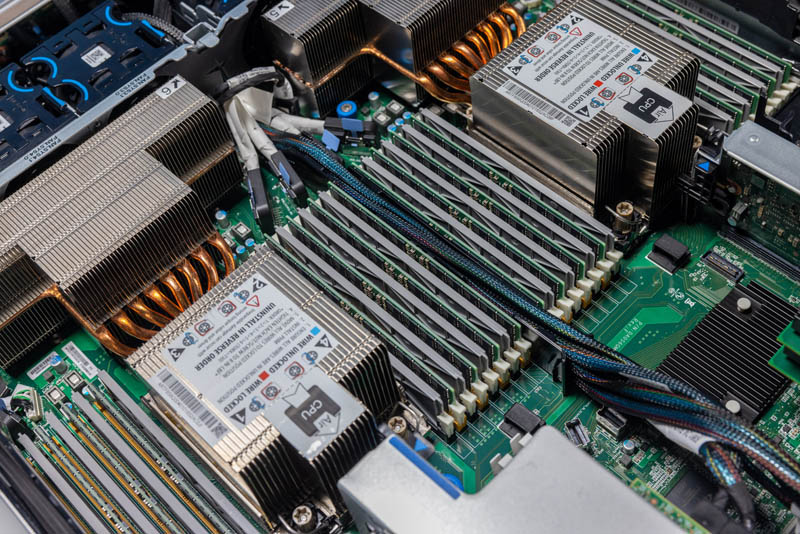

This is just a quick reminder to folks that populating every memory channel is a good idea on modern server platforms, especially up to 1 DIMM per channel (1DPC). Going to 2DPC means one can get more capacity, but at the cost of the speed of each DIMM. That is why we usually test servers with full 1DPC configurations. Of course, if you just need more memory capacity, then doubling the number of DIMMs and losing some memory frequency is often worthwhile. If you just want to learn more about DDR5, we have a great guide in Why DDR5 is Absolutely Necessary in Modern Servers.
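The 1DPC versus 2DPC trade-off can be sketched as a simple capacity and peak-bandwidth comparison. Everything specific here is an assumption for illustration: the `MemoryConfig` class, the 64GB DIMM size, and especially the DDR5-3600 speed at 2DPC, which varies by platform and DIMM type, so check your server vendor's population guide.

```python
from dataclasses import dataclass


@dataclass
class MemoryConfig:
    """Hypothetical per-socket memory population (illustrative only)."""
    channels: int
    dimms_per_channel: int
    dimm_gb: int
    mt_per_s: int

    @property
    def capacity_gb(self) -> int:
        # Total capacity is simply the DIMM count times the DIMM size.
        return self.channels * self.dimms_per_channel * self.dimm_gb

    @property
    def peak_bandwidth_gbs(self) -> float:
        # Bandwidth depends on channels and speed, not on DIMMs per channel:
        # 8 bytes per transfer per channel, reported in decimal GB/s.
        return self.channels * self.mt_per_s * 8 / 1000


if __name__ == "__main__":
    # ASSUMED speeds: DDR5-4800 at 1DPC, DDR5-3600 at 2DPC.
    one_dpc = MemoryConfig(channels=12, dimms_per_channel=1, dimm_gb=64, mt_per_s=4800)
    two_dpc = MemoryConfig(channels=12, dimms_per_channel=2, dimm_gb=64, mt_per_s=3600)
    for name, cfg in (("1DPC", one_dpc), ("2DPC", two_dpc)):
        print(f"{name}: {cfg.capacity_gb} GB, {cfg.peak_bandwidth_gbs:.1f} GB/s peak")
```

With these assumed inputs, 2DPC doubles capacity from 768GB to 1536GB while theoretical peak bandwidth drops from 460.8 to 345.6 GB/s, which is the trade described above.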

The message is clear: Fill up those memory channels, STH!

So fill up the individual channels, but if you have a server with 2DPC, you should only fill one DIMM per channel because otherwise you gain capacity at the cost of speed?

This completely depends on things like your use case and your budget. Maximize what you have to.

In general, the only good way to size your hardware is to perform tests using your software and then calculate the total lifecycle cost including power, maintenance, mid-cycle upgrades, etc. I’d expect 1 DPC to be a win over <1 DPC in almost every case, just because it makes the (expensive!) CPU more efficient for a very small additional cost (given the same amount of total RAM).

Going from 1 DPC to 2 DPC depends on the amount of RAM that your use case needs. If you need tons of RAM, then you need tons of RAM, and 2 DPC will be more efficient than buying hyper-expensive maximum-size DIMMs, buying 2x as many servers, or swapping data to/from disk.

Empty DIMM slots present less cross section to air flow.

So I would like to also know about CPU temperature vs. DIMM slot utilization.

Michael’s article, “DDR5 Memory Channel Scaling Performance With AMD EPYC 9004 Series,” found that, on the geometric mean, using only 10 channels costs 10% memory performance with a potential savings of several hundred dollars or more. For some workloads the performance was equal.

@emerth

Server OEMs put dummy DIMMs (blocks of plastic) in the slots if they are not populated. That ensures that the airflow profile is the same regardless of the number of DIMMs.

Thanks, @Chris S!

So more memory channels means more memory bandwidth? Okay, but the pertinent question isn’t “How much does this increase the available memory bandwidth?”, it’s “How does this impact performance (in our use case)?” This entire article is saying nothing of value.

Wasn’t this always a question of how the motherboard/memory controller is designed?

Whether it’s a dual-, quad-, hexa-, or octa-channel design.

So the first question to me would be what the maximum number of channels is that the particular mainboard supports. If that matches the number of memory slots on the board, then it is a good idea to use them all.

I gotta be honest, I second what Vincele said. It depends on the budget and apps you’re using.

In my experience, the difference for rendering tasks is practically within run-to-run variance, so it might not be worth the extra money to populate all the channels.