This week we were at the Xilinx Developer Forum, where one of the keynote surprises was an AMD EPYC-powered server that allegedly set an AI inferencing record. Since this is STH, we tracked down the server to show what the AMD EPYC-based Xilinx Alveo BOXX is made of. It turns out that the physical server was quite unique in its design and implementation.

Introducing the AMD EPYC and Xilinx Alveo BOXX

On stage, AMD CTO Mark Papermaster stood alongside Xilinx CEO Victor Peng and discussed their shared belief that the industry needs a faster interconnect: CCIX. Although the companies did not announce specific products, we could see future AMD EPYC designs and Xilinx FPGAs mated via CCIX.

Xilinx and AMD talking the CCIX future and announcing an AMD EPYC + Xilinx Alveo FPGA box @XilinxInc @AMDServer @AMD #XDF2018 pic.twitter.com/B4SzV6A1J5

— STH (@ServeTheHome) October 2, 2018

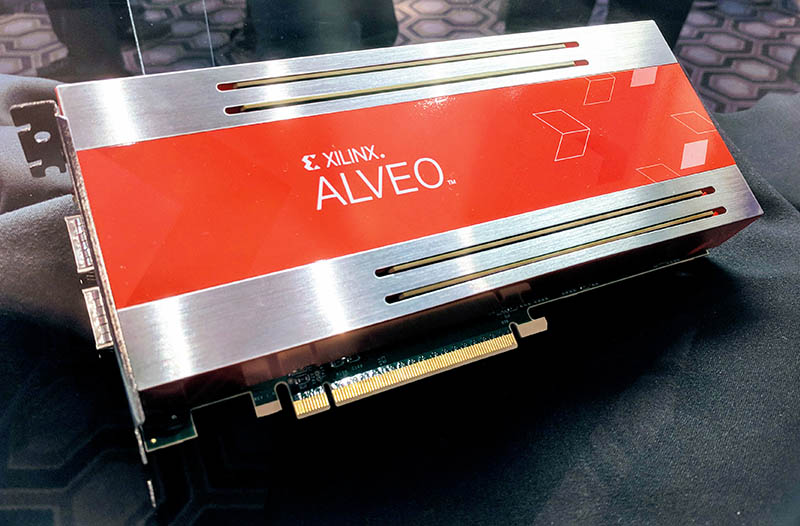

Shown off during this keynote segment was a server with eight Xilinx Alveo FPGAs. Xilinx Alveo, announced at XDF 2018, is a 16nm Xilinx UltraScale+ FPGA on a PCIe card. Xilinx has heard that its ecosystem needs to be easier to work with. With Alveo, instead of only providing chips for others to integrate into PCIe products, Xilinx is now offering its own PCIe cards.

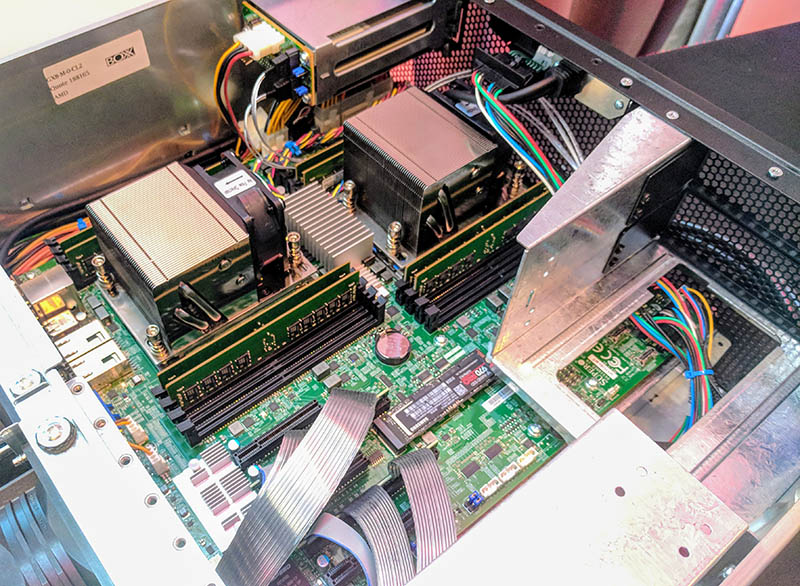

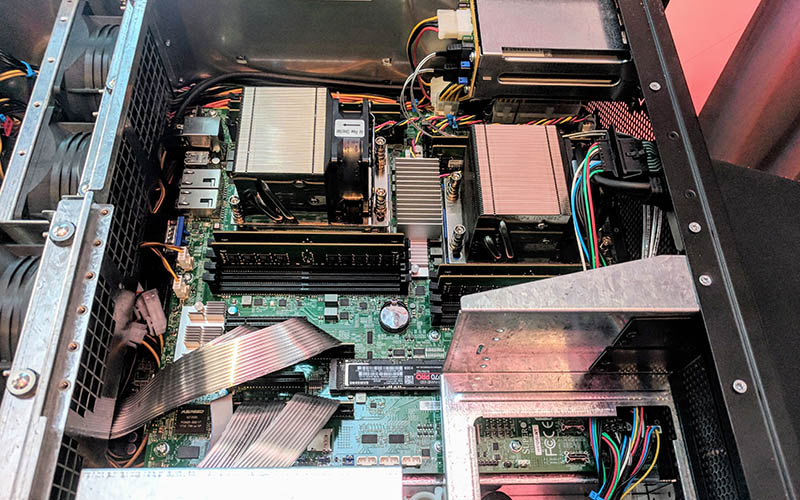

At STH, we review a lot of servers, but something about this particular machine looked a bit different. The two groups of four PCIe cards are at different chassis elevations. The storage array looks paltry compared to what we have seen in other solutions. Something was different. After the show, we found the server tucked neatly in a corner with lots of people looking at it.

A Look at the AMD EPYC and Xilinx Alveo BOXX

Here is the glamor shot with eight Xilinx Alveo FPGA cards present.

Here is a cool fact for the STH networking crowd. Each of these Xilinx Alveo cards has dual QSFP28 100GbE ports onboard. That means there is a total of 1.6Tbps of networking bandwidth available in this server from the FPGAs alone. You can also see four 16GB DDR4-2400 DIMMs sticking out from the metal shroud.
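The 1.6Tbps figure is simple port arithmetic. Here is a minimal Python sketch of that tally, assuming every QSFP28 port is counted at its nominal 100GbE line rate (real throughput after Ethernet framing overhead will be somewhat lower). The constant and function names are ours, purely for illustration.

```python
# Aggregate FPGA-attached network bandwidth in the demo box.
# Assumption: every QSFP28 port is counted at its nominal 100GbE line rate.
CARDS = 8            # Xilinx Alveo cards in the chassis
PORTS_PER_CARD = 2   # dual QSFP28 ports per card
GBPS_PER_PORT = 100  # 100GbE per port


def aggregate_network_tbps(cards: int, ports_per_card: int, gbps_per_port: int) -> float:
    """Return the total nominal line rate in Tbps (1 Tbps = 1000 Gbps)."""
    return cards * ports_per_card * gbps_per_port / 1000


print(aggregate_network_tbps(CARDS, PORTS_PER_CARD, GBPS_PER_PORT))  # 1.6
```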

Each group of four Alveo cards sits on its own PCB. These are not leveraging AMD EPYC's high PCIe lane count. Instead, each PCB is connected back to the main server via a single PCIe 3.0 x16 ribbon cable.
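To put that single ribbon cable in perspective, here is a rough Python comparison of one PCIe 3.0 x16 uplink against the network ports on the four cards it feeds. It assumes the PCIe 3.0 signaling rate of 8 GT/s per lane with 128b/130b line coding, and it ignores PCIe packet overhead and Ethernet framing, so both numbers are optimistic upper bounds rather than measurements from this box.

```python
# Compare one PCIe 3.0 x16 uplink with the network ports behind it.
# Assumptions: 8 GT/s per lane with 128b/130b coding; PCIe TLP/DLLP overhead
# and Ethernet framing overhead are ignored (upper bounds only).
PCIE3_GT_PER_LANE = 8.0     # PCIe 3.0 signaling rate, GT/s per lane
PCIE3_ENCODING = 128 / 130  # 128b/130b line coding efficiency


def pcie3_gbps(lanes: int) -> float:
    """One-direction PCIe 3.0 link bandwidth in Gbps, before protocol overhead."""
    return PCIE3_GT_PER_LANE * lanes * PCIE3_ENCODING


uplink = pcie3_gbps(16)        # one ribbon cable per four-card PCB
network_per_pcb = 4 * 2 * 100  # 4 cards x 2 QSFP28 ports x 100GbE

print(f"x16 uplink: {uplink:.1f} Gbps ({uplink / 8:.2f} GB/s)")
print(f"network per PCB: {network_per_pcb} Gbps")
print(f"network/uplink ratio: {network_per_pcb / uplink:.1f}x")
```

The uplink works out to roughly 126 Gbps (about 15.75 GB/s) per direction against 800 Gbps of network ports per PCB. Our inference is that the design expects most traffic to be processed on the FPGAs rather than shipped to the host.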

For a brief moment, I thought about removing the Alveo cards to see whether the system was using PCIe switches or bifurcating the PCIe 3.0 x16 link into an x4/x4/x4/x4 configuration. Without a screwdriver, and with someone watching over me with a look that said "you are not taking this apart," we are going to leave that one for another day.
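The two possibilities behave differently from each card's point of view. Here is a hypothetical Python model of best-case per-card host bandwidth under each topology. We did not confirm which one BOXX uses. The switch case assumes each card gets an x16 downstream link behind a shared x16 upstream. Both cases use raw PCIe 3.0 rates and ignore protocol overhead.

```python
# Best-case host bandwidth per card under the two topologies we could not rule out.
# Assumptions: PCIe 3.0 (8 GT/s, 128b/130b), no protocol overhead; for the switch
# case, x16 downstream links share one x16 upstream link to the host.
def pcie3_gbps(lanes: int) -> float:
    """One-direction PCIe 3.0 link bandwidth in Gbps."""
    return 8.0 * lanes * 128 / 130


def per_card_gbps(topology: str, active_cards: int, cards: int = 4) -> float:
    """Best-case host bandwidth available to each active card."""
    if not 1 <= active_cards <= cards:
        raise ValueError("active_cards must be between 1 and cards")
    if topology == "bifurcation":
        # x16 split into x4/x4/x4/x4: each card owns a fixed x4 link,
        # no matter how many of the other cards are busy.
        return pcie3_gbps(16 // cards)
    if topology == "switch":
        # A single active card can use the whole x16 upstream; otherwise
        # the upstream is divided evenly among the active cards.
        return pcie3_gbps(16) / active_cards
    raise ValueError(f"unknown topology: {topology}")


for topo in ("bifurcation", "switch"):
    print(topo, [round(per_card_gbps(topo, n), 1) for n in (1, 4)])
```

With all four cards busy, both topologies land at about 31.5 Gbps per card; a switch only helps when host traffic is bursty or uneven across cards.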

The AMD EPYC motherboard was a Supermicro H11DSi-NT. You can read our Supermicro H11DSi-NT Dual AMD EPYC Motherboard Review for more information on the platform. We noticed the NVMe storage was based on Samsung 970 Pro M.2 drives. We usually recommend not using Samsung consumer drives in Supermicro servers. Each AMD EPYC CPU socket was flanked by four DIMMs instead of the full eight possible. If you are configuring a server, this is really the minimum configuration we would recommend with Naples, as you can read about in our piece: AMD EPYC Naples Memory Population Performance Impact.
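The reasoning behind four DIMMs as a floor comes from the Naples layout: each socket has four dies, each with two DDR4 channels. Here is a rough Python sketch of theoretical peak bandwidth and die coverage. It assumes DDR4-2666 (the article does not state the host DIMM speed), one DIMM per channel, and DIMMs spread one per die before doubling up. The figures are theoretical peaks, not measurements of this system.

```python
# Theoretical peak DRAM bandwidth per EPYC 7001 ("Naples") socket.
# Assumptions: DDR4-2666 by default (host DIMM speed not stated in the article),
# one DIMM per channel, 64-bit (8-byte) channels, no efficiency losses.
DIES_PER_SOCKET = 4
CHANNELS_PER_DIE = 2
BYTES_PER_TRANSFER = 8


def peak_gb_per_s(dimms: int, mts: int = 2666) -> float:
    """Peak bandwidth in GB/s when `dimms` channels each hold one DIMM."""
    max_channels = DIES_PER_SOCKET * CHANNELS_PER_DIE
    if not 1 <= dimms <= max_channels:
        raise ValueError(f"expected 1..{max_channels} DIMMs per socket")
    return dimms * mts * BYTES_PER_TRANSFER / 1000


def dies_without_local_memory(dimms: int) -> int:
    """Dies left with no local DIMM, assuming one DIMM per die is placed first."""
    return max(0, DIES_PER_SOCKET - dimms)


for n in (2, 4, 8):
    print(n, "DIMMs:", round(peak_gb_per_s(n), 1), "GB/s,",
          dies_without_local_memory(n), "die(s) without local memory")
```

Under these assumptions, four DIMMs is the smallest count that gives every die local memory, while eight doubles peak bandwidth.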

The motherboard was also oriented the opposite way from what we would have expected. Since its "rear" I/O faces the middle of the chassis, cables run from the onboard network ports to external headers. Likewise, other I/O is cabled to the chassis exterior.

The system itself seems to be a BOXX GX8-M rackmount server shown off at SC17.

BOXX GX8-M Rackmount Server with 8 #RadeonInstinct MI25 Accelerators at #SC17. pic.twitter.com/xPFfJXxAo0

— Radeon Instinct (@RadeonInstinct) November 14, 2017

At SC17, the BOXX GX8-M was announced and shown off with AMD Radeon Instinct MI25 accelerators.

Final Words

This was a really fun box to see. Normally these on-stage demo boxes are ordinary eight, ten, or sixteen PCIe GPU affairs. Instead, we saw a bespoke server from BOXX designed primarily to use the 1.6Tbps of network bandwidth available from the Xilinx Alveo FPGA accelerator cards themselves rather than the host CPUs, memory, and networking.

This demo has broader implications. With the new Arm Cortex cores and the ACAP design, we know that Xilinx is positioning its next generation as a solution that may not need a traditional host computer.
