A 2024 New Year’s resolution is to publish some of the cool content that we have on the shelf but never got to publish for one reason or another. Today, we are going to show the Pure Storage FlashBlade//S. This is Pure Storage’s all-flash array that goes well beyond plugging SSDs into an off-the-shelf x86 server from a server vendor and adding a fancy bezel. Instead, Pure Storage has an architecture more akin to how some larger cloud providers manage flash, and brings that to the enterprise.

The Mea Culpa

We took these photos in early September 2022. That was a bit after the Pure Storage FlashBlade//S launch and while I was in town filming something else. Pure Storage’s Castro Street location was about a 15-minute walk from my old home in Mountain View, so I knew the area well.

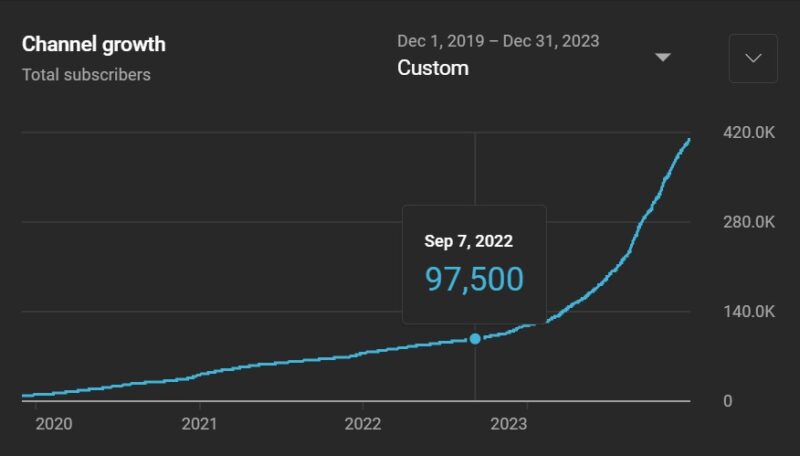

In late Q3 2022, the STH YouTube channel was growing at around 2500 subscribers per month, and there was a question of whether we could close the remaining 2500-subscriber gap to 100K by the end of Q3 in just three weeks. We did, but at a cost. This was one of the pieces I had planned to do a fun video on, and we sacrificed it in the name of growth. In hindsight, it was probably the right move for STH.

Just after that visit, we hit the launch cycle for the AMD EPYC 9004 “Genoa” in November and then the 4th Gen Intel Xeon Scalable “Sapphire Rapids” in January 2023. Since our coverage of those reaches several million readers, we start working on content months ahead, both for STH and to help companies like Supermicro launch their platforms.

This piece got put on the shelf until this 2024 New Year’s resolution. Luckily, storage hardware platforms move at a slower pace, so this is still very relevant, albeit later in the cycle than we had planned. With that, it is time to get into the hardware.

Hands-on With Pure Storage FlashBlade//S Hardware

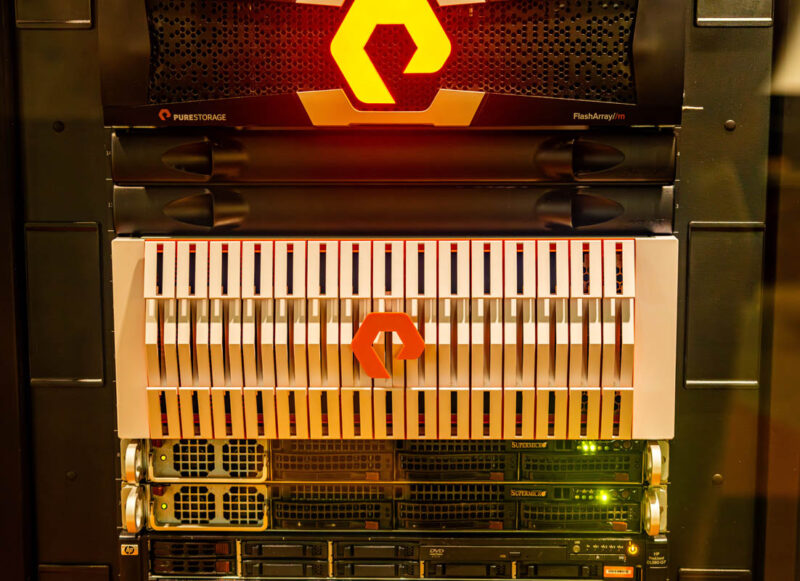

When I arrived at Pure Storage, I was ushered past the glass-encased rack of running systems and followed a power cable to the conference room across the hallway.

Sitting on a wire rack was a Pure Storage FlashBlade//S with its attractive bezel.

The FlashBlade//S uses QLC NAND, compression, and orchestrated data placement to bridge the gap between flash arrays and hard drives. While this is not going to take over for cold archival storage in most cases, flash continues to push further into the hybrid array space. That is the trend that Pure Storage is riding with the FlashBlade//S. Still, this is not just a simple system with an attractive bezel. Instead, it has a lot of engineering behind it.

More specifically, behind the bezel is an array of up to ten blades.

This chassis was configured with the FlashBlade//S //200 modules, which are designed for higher compression levels to make better use of the flash.

The company also has an option for the //500 nodes with more compute and more performance per blade.

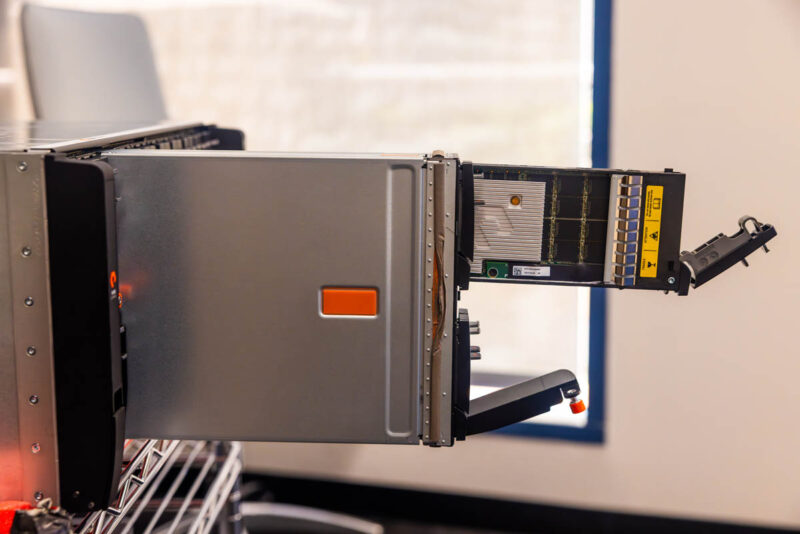

We were allowed to start pulling components out before we turned the array on, so that is exactly what we did.

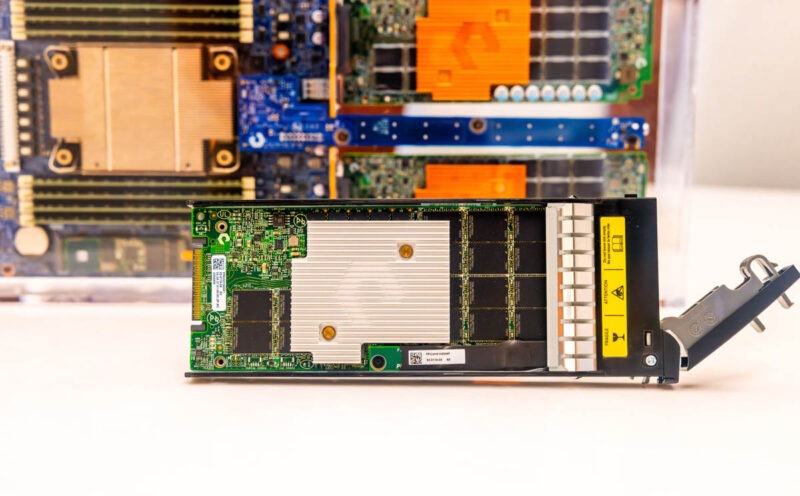

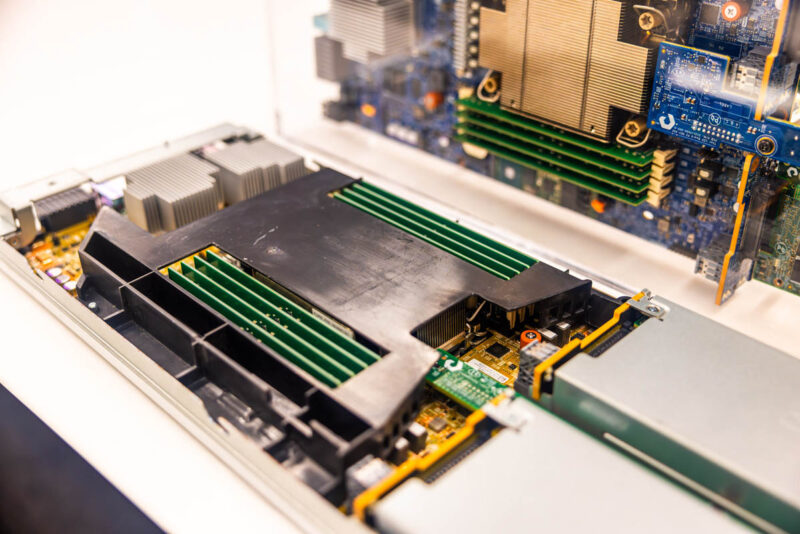

The blades each have up to four Direct Flash Modules, or DFMs. While these may look like off-the-shelf SSDs that forgot their casings, they are custom modules because Pure Storage manages data placement to flash across its arrays instead of relying on drives to do that individually.

Starting with the DFMs, these are 24TB to 48TB each. With four modules, that is up to 192TB per blade. In 2022, this was a huge amount. Now that we have drives like the Solidigm 61.44TB SSDs in the lab, 48TB is not as large. The difference, however, is that the FlashBlade//S is managing data compression, placement, hot spots, wear leveling, and so forth at the blade level.
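As a quick sanity check on those figures, here is the arithmetic as a small Python sketch. The constants come from the numbers in this piece (24TB to 48TB DFMs, up to four per blade, up to ten blades per chassis). The function name is ours, and the results are raw capacity before any compression or data protection overhead.

```python
# Raw capacity arithmetic using the figures quoted in this article.
# raw_capacity_tb is an illustrative helper, not anything from Pure Storage.
DFM_SIZES_TB = (24, 48)    # smallest and largest DFM mentioned
DFMS_PER_BLADE = 4         # up to four DFMs per blade
BLADES_PER_CHASSIS = 10    # up to ten blades behind the bezel


def raw_capacity_tb(dfm_tb, dfms=DFMS_PER_BLADE, blades=BLADES_PER_CHASSIS):
    """Return raw flash capacity in TB for a given DFM size."""
    return dfm_tb * dfms * blades


if __name__ == "__main__":
    for size in DFM_SIZES_TB:
        per_blade = raw_capacity_tb(size, blades=1)
        per_chassis = raw_capacity_tb(size)
        print(f"{size}TB DFMs: {per_blade}TB per blade, {per_chassis}TB per chassis")
```

A fully populated chassis with 48TB DFMs works out to 1920TB of raw flash, which is why the per-module size matters less here than the per-chassis total.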

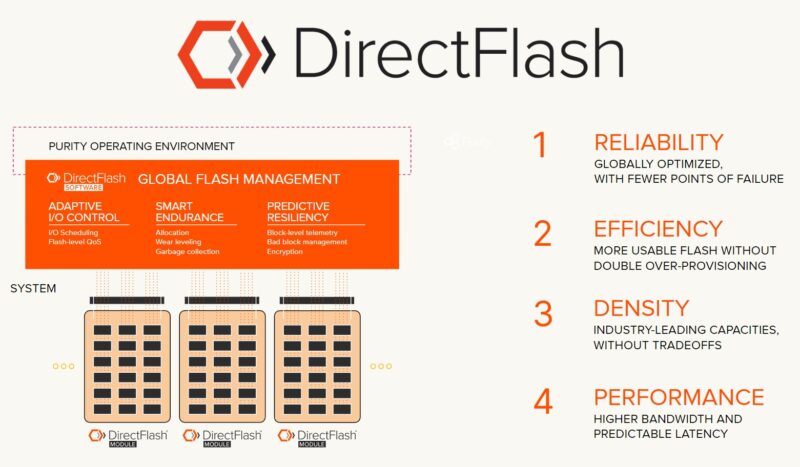

This global flash management is called DirectFlash. The high-level idea behind what Pure Storage is doing is managing all of the NAND flash plugged into systems at the CPU/OS level instead of at the SSD controller. Instead of having a specific NAND package or SSD get hammered, reducing reliability and performance, especially with QLC, DirectFlash allows the array to identify the hot spot and adjust data placement accordingly. That is just one example of how this works.
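To make the hot spot idea concrete, here is a toy Python sketch of placing writes with a global view of every module. This is not Pure Storage's algorithm, and DirectFlash is far more involved. The `FlashModule` and `GlobalPlacer` names, the "least bytes written" policy, and the `simulate` helper are all our assumptions for illustration.

```python
# Toy model of array-level write placement (hypothetical, for illustration only).
from dataclasses import dataclass


@dataclass
class FlashModule:
    name: str
    bytes_written: int = 0  # crude stand-in for accumulated wear


class GlobalPlacer:
    """Picks a target module using a view of all modules, not one drive."""

    def __init__(self, modules):
        self.modules = list(modules)

    def place(self, size):
        # Send the write to the least-worn module so no single one gets hammered.
        target = min(self.modules, key=lambda m: m.bytes_written)
        target.bytes_written += size
        return target.name


def simulate(num_modules=4, writes=1000, size=1):
    """Issue equal-sized writes and return bytes written per module."""
    placer = GlobalPlacer(FlashModule(f"dfm{i}") for i in range(num_modules))
    for _ in range(writes):
        placer.place(size)
    return [m.bytes_written for m in placer.modules]


if __name__ == "__main__":
    print(simulate())
```

With equal-sized writes, this policy spreads load evenly across modules, and a module that starts out more worn is skipped until the others catch up. A per-drive controller cannot make that decision because it only sees its own NAND.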

For some context, cloud providers have been looking to manage their SSDs similarly. SSDs have not just NAND flash but also DRAM onboard. Managing flash in software means that extra DRAM is unnecessary, and one can get better reliability and fault tolerance from flash storage. Pure Storage says that handling garbage collection and wear leveling at this level makes its DFM-based arrays significantly more reliable.

Of course, this is STH, so we opened up some of the blades to see what was inside.

Inside a Pure Storage FlashBlade//S Blade

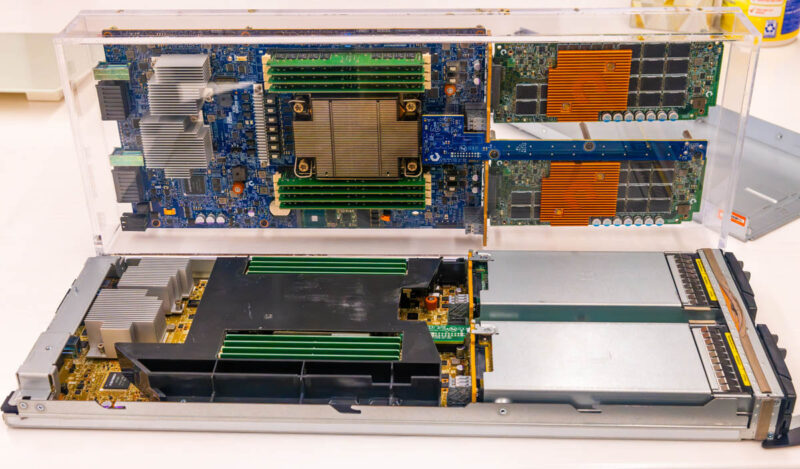

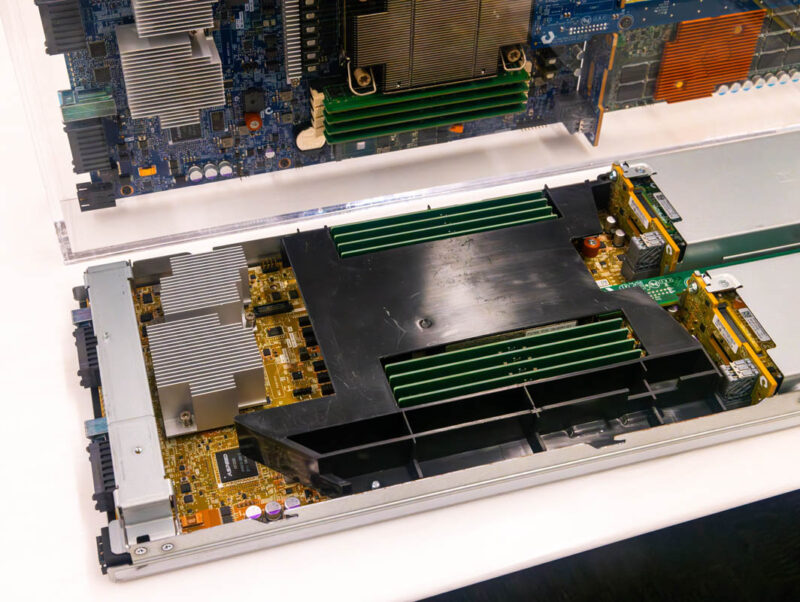

We were able to pull some of the blades out, and the company also had a version in a case.

Inside, we can see the DFM area with a custom backplane for the blade. Then behind that, we can see the CPU.

The CPU is an Ice Lake-era (3rd Gen Intel Xeon Scalable) design in a single-socket node. That means each node has eight channels of DDR4 memory and is a PCIe Gen4 server node.

For loyal STH readers, I did ask why Pure Storage was using Ice Lake Xeon instead of Sapphire Rapids. Our Hands-on Benchmarking with Intel Sapphire Rapids Xeon Accelerators piece was published shortly after this visit and was already in progress at the time. An 800Gbps QAT crypto and compression accelerator could be useful in an array like this. The reason I was given was that the validation work was happening well before Sapphire Rapids was available since this array launched roughly 7 months before the 4th Gen Xeons.

Each node also needs a Lewisburg Refresh Intel PCH to function as well as its 100GbE networking, explaining the two big heatsinks we see near the rear of the node. Management is provided via an industry-standard ASPEED AST2500 BMC. While customers are not expected to get to that level of hardware management, that is a path to collect telemetry data for Purity to use.

At the rear of the blades, we have connectors for the mid-plane.

Since this is a blade architecture, we need to have I/O modules and power supplies, and for those, we need to get to the rear of the chassis.

Hi Patrick,

which protocols are supported NFS,SMB or is directly RAW? I look for a Netapp replacement but the most are struggling with deduplication. ZFS/IX was not an option, I talked with IX so far.

FlashBlade supports NFS, SMB, and even S3 through Ethernet and IP connectivity.

Comparison to Vast storage options would be relevant.

I have spoken to the VAST folks about this. Timing is rough. They actually have a cool architecture using BlueField 1 (not 2) and AIC chassis that we have shown on STH.

Can you do Pure’s other arrays? I’m in love with your teardowns on gear like this. Pure’s so into the software, they need STH to satisfy everyone who just wants to know about the hardware. STH is the only place for that.

I have a FlashBlade S, actually like it. The hardware is far better than the original FlashBlade, and their support is fantastic as always. It won’t blow your socks off on single session transfer speeds (it’s still fast but limited to a single blade), but really shines in parallel performance. If you’re doing object storage these are great.

Is there a video for this content you made? Really like to see it more from performance perspective

I’ve been basically obsessed with pure since before flashblade despite having never touched one

there’s some awesome architecture talks recorded on YT from tech field day including some about design considerations for these, would recommend

I have always been impressed by Pure my former employer bought several back in 2013 or so on some of their early designs. At the time we were amazed that we could run benchmarks on 14 blades at once using 8Gb FC and that we still had difficulty maxing out the storage back then something like maybe 6GB/S I cant really remember and like 175K IOPS and it was killing our Netapp and other storage in every way…. course by todays standards that can be beat by a single NVME drive.. nuts to think about.

Just an update on dfm sizes. Pure has shipped 75tb dfms for almost 6 months and 150tb dfms will ship soon.