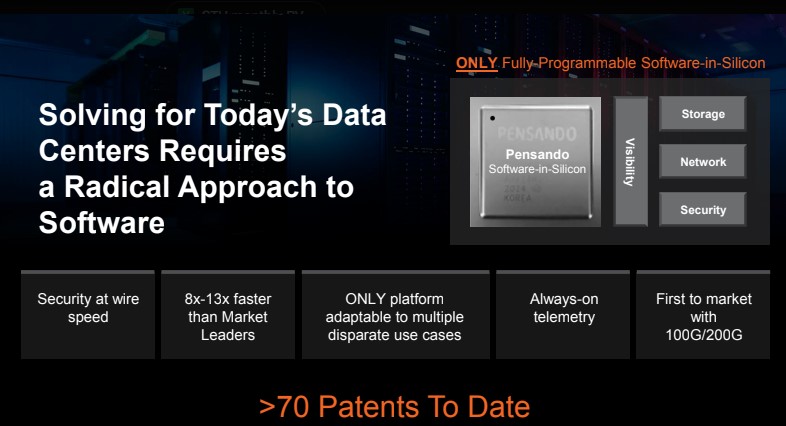

AMD Pensando DPU Running VMware ESXio

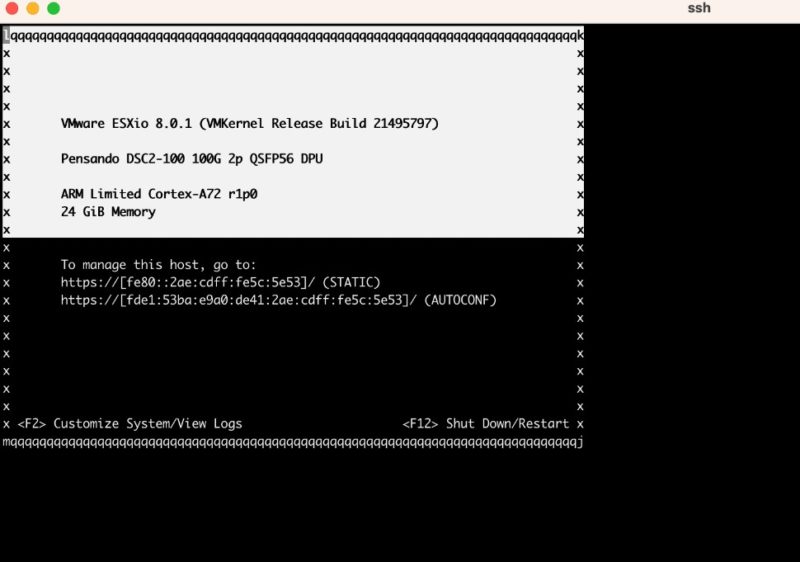

To show this a bit more clearly, we can log into the Pensando DSC2-100 (dual 100GbE) DPU via a console. Once logged in, we can see the Arm Cortex-A72 cores, 24GB of memory, and the ESXio splash screen. VMware needs to run its own OS and software on the DPU, and that is ESXio.

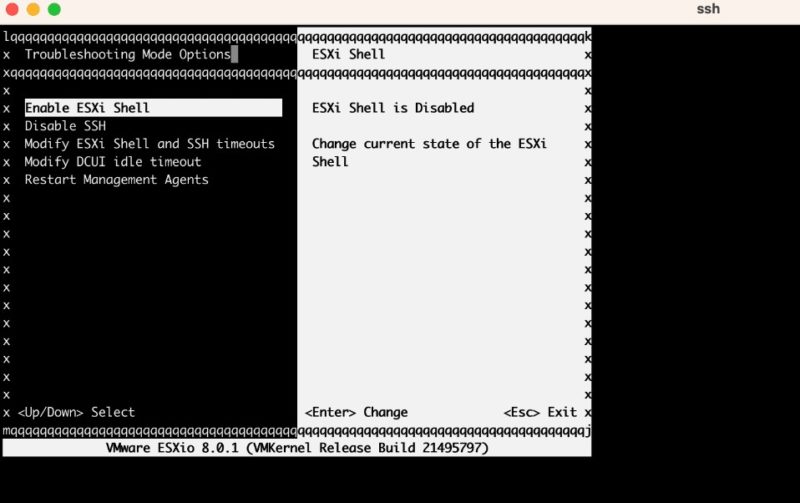

By default, the ESXi Shell is disabled in ESXio 8. Since we are doing this in a lab (do NOT do this at home), we can enable the shell.

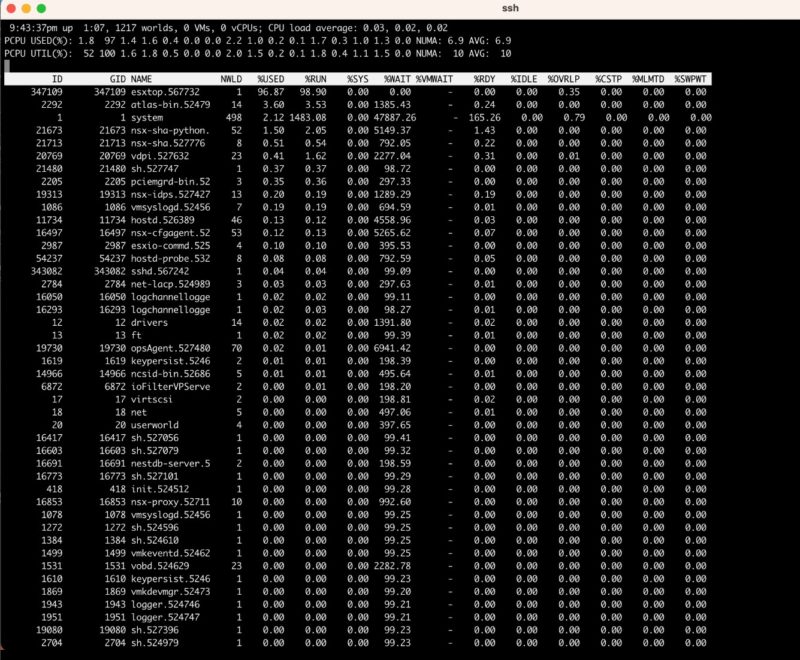

Here is a quick esxtop of the card running.

With VMware, very few users will ever venture into these depths. Instead, VMware has a really interesting program. It is doing the work to bring features like UPT, then other networking, and hopefully storage and bare metal management (future capabilities) to customers. VMware’s teams are responsible for figuring out the AMD Pensando P4 engines and making the functionality work in the VMware ecosystem. To underscore how much VMware is doing to homogenize DPUs from different vendors, the out-of-band management ports on these DPUs are disabled by default.

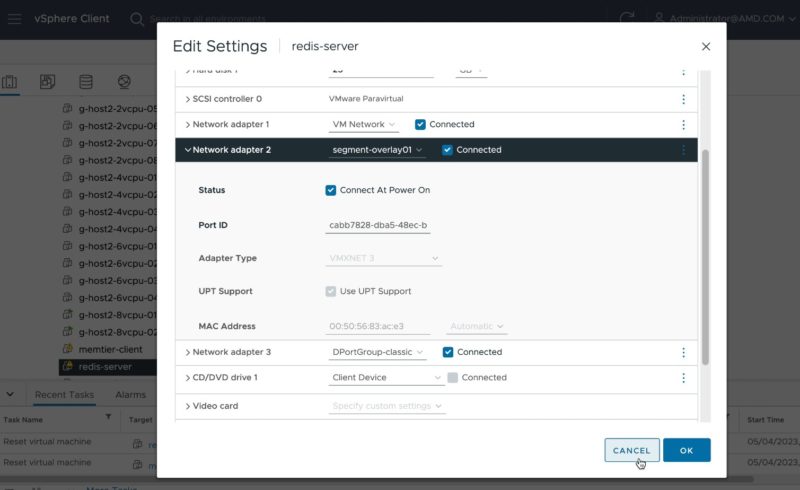

Now that I have used both the NVIDIA and AMD DPUs with VMware, the difference comes down to the port speeds (for now) and a single drop-down box. For most users, the experience mostly comes down to ensuring UPT is enabled.

VMware administrators are familiar with one of the biggest basic networking challenges in a virtualized environment. One can use the vmxnet3 driver for networking and get all of the benefits of having the hypervisor manage the network stack. That provides the ability to do things like live migrations, but at the cost of performance. For performance-oriented networking, many use pass-through NICs that provide performance but present challenges to migration. With UPT (Uniform Passthrough), we get the ability to do migrations while keeping performance close to pass-through levels.

The challenge with UPT is that we need the hardware and software stack to support it, but since AMD Pensando DPUs are supported, we finally have that.

Since this is STH, let us get to the hardware.

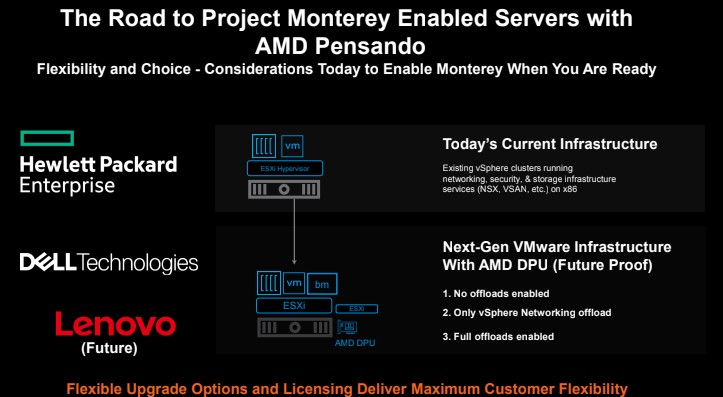

AMD Pensando DPU Test Lab

Testing the Pensando DPU, or any DPU, with VMware is a bit tricky. Currently, you need to order a server from a traditional server vendor that has the DPU already installed. As we will see, as it stands today, you need a special DPU, whether it is from NVIDIA or AMD, plus a corresponding part in the server for it to work with VMware. This is so that the DPU can be managed via the server’s BMC rather than having to use the out-of-band management solution of each DPU.

For this, we are going to use Dell PowerEdge R7615 systems. These are 2U single-socket AMD EPYC Genoa systems. One can see a “Titanite” QCT system below our two main test servers.

On the back of the system, we can see the AMD Pensando DSC2-100G installed in a PCIe riser.

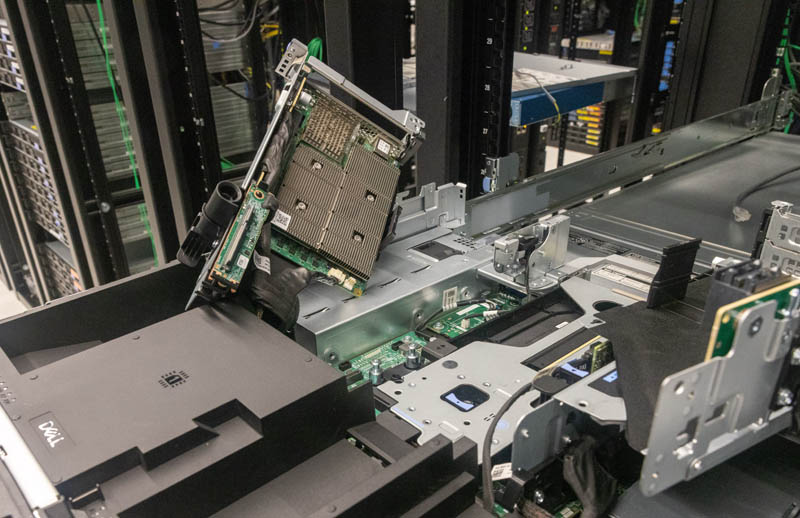

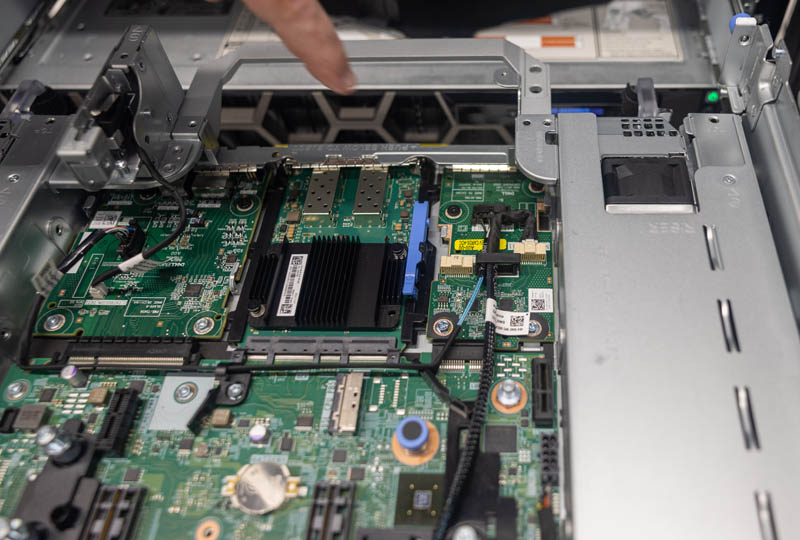

Here is a look at the card in the riser from inside the server. That cable is the management interface between the server’s BMC and the DPU.

Here is the card in its riser being pulled out of the server:

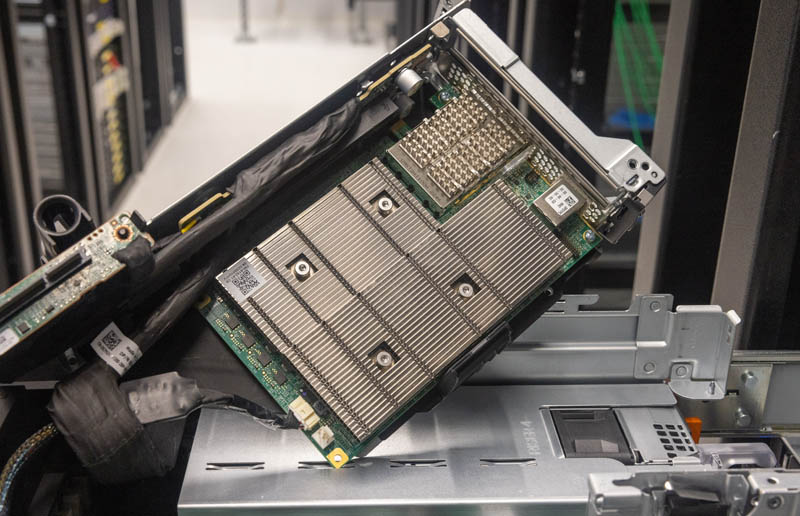

Here is a closer shot of the card in the riser.

That small cable goes to a little board next to the OCP NIC slot and is one of the most controversial parts of the current DPU kits.

If you saw our Dell PowerEdge R760 review, you will recognize this slot as a low-bandwidth LOM slot. Instead of our Broadcom-based dual 1GbE solution, we have the management interface card that sits between the server’s iDRAC management and the Pensando DPU. Rumor on the street is that the companies are working to make this a retrofit item, since one can probably see it is easy to install. For now, this board is why you need to purchase a VMware DPU-enabled system directly from a vendor.

Losing our 1GbE networking is not a huge deal. We still have the OCP NIC 3.0 slot for low-speed networking in the PowerEdge R7615. Having the management interface board also means the system has high-speed DPUs with plenty of networking, so the 1GbE NICs are unlikely to be missed.

At this point, we have shown a DPU in the server, but what is this DPU? That is next.

STH is the SpaceX of DPU coverage. Everyone else is Boeing on their best day.

Killer hands on! We’ll look at those for sure.

This is unparalleled work in the DC industry. We’re so lucky STH is doing DPUs otherwise nobody would know what they are. Vendor marketing says they’re pixie dust. Talking heads online clearly have never used them and are imbued with the marketing pixie dust for a few paragraphs. Then there’s this style of taking the solution apart and probing.

It’ll be interesting to see if AMD indeed also ships more Xilinx FPGA smartnic or no?

They released their Nanotubes ebpf->fpga compiler pretty recently to help exactly this. And with fpga being embedded in more chips, it’d be an obvious path forward. Somewhat expecting to see it make an appearance in tomorrow’s MI300 release.

You had me at they’ve got 100Gb and nVidia’s at 25Gb

Does this work with Open Source virtualization as well?

Why does it say QSFP56? That’d be a 200G card, or 100G on only 2 lanes.

@Nils there’s no cards out in the wild and no open source virtualization project that currently has support built in for P4 or similar. It’s a matter of time until either or both become available but until then it’s all proprietary.

I also don’t expect hyperscalers to open up their in-house stuff like AWS’ Nitro or Google’s FPGA accelerated KVM