Alongside the Dell EMC DSS 8440 GPU server launched at Dell Technologies World in Las Vegas, the company had a Graphcore IPU PCIe card in the booth. For those who do not know, Graphcore is one of, if not the, most hyped AI hardware companies in the industry these days. Dell is an investor in Graphcore, and thus we see an IPU at DTW. At the show, we saw person after person walk by this IPU and instead peer into the DSS 8440. We took some video footage and photos to show off the card since we cover the industry and this is the first live single-card engineering sample we have seen in the wild.

We also made a video, which you can see on YouTube, since there is very little information there about these cards.

Here is a quick account of what we saw at DTW and the architecture driving the Graphcore C2 IPU PCIe card.

Hands-on With a Graphcore C2 IPU PCIe Card at Dell Tech World

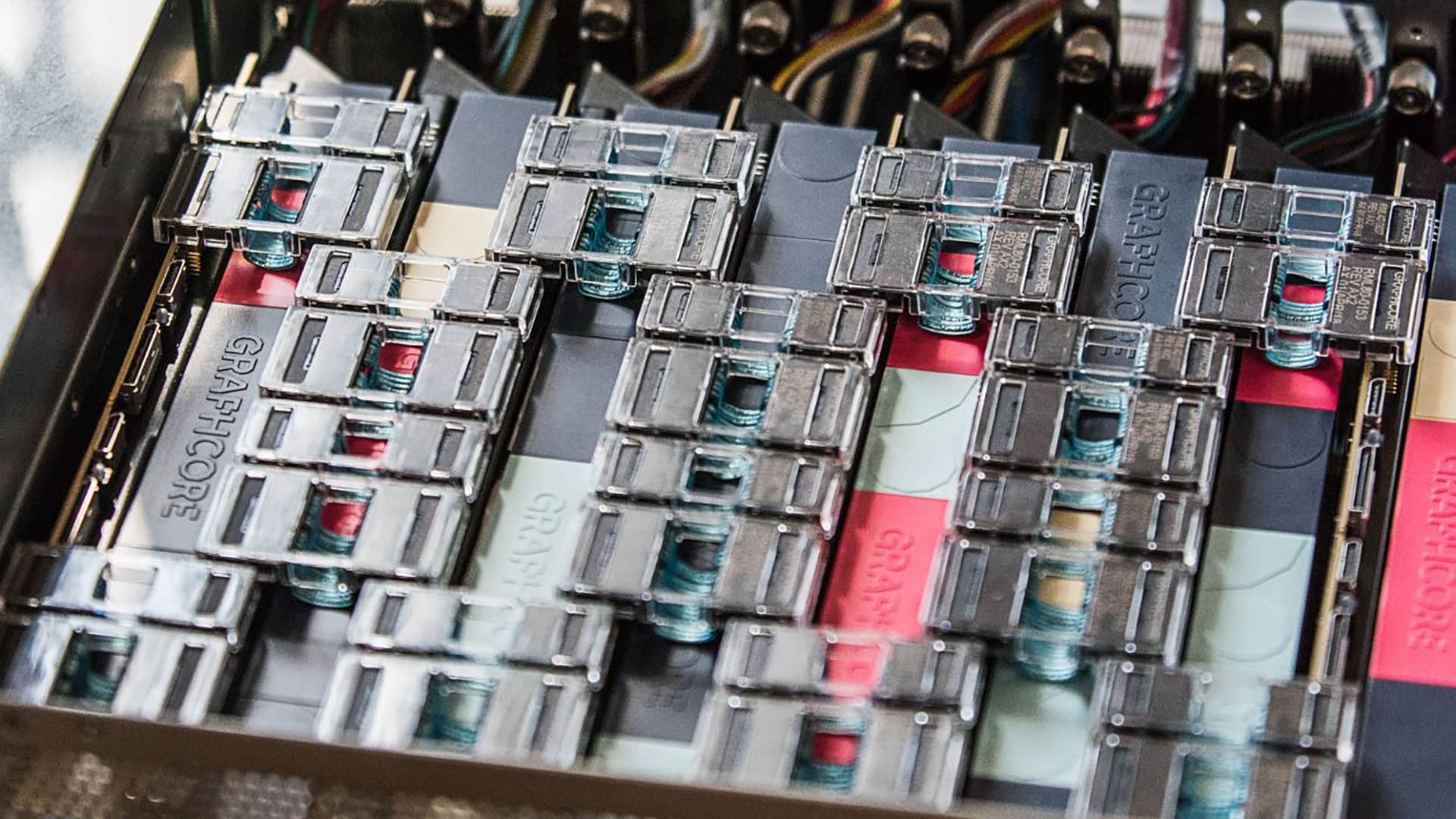

Here is the Graphcore C2 IPU PCIe card sitting on a small cardboard box at Dell Tech World 2019.

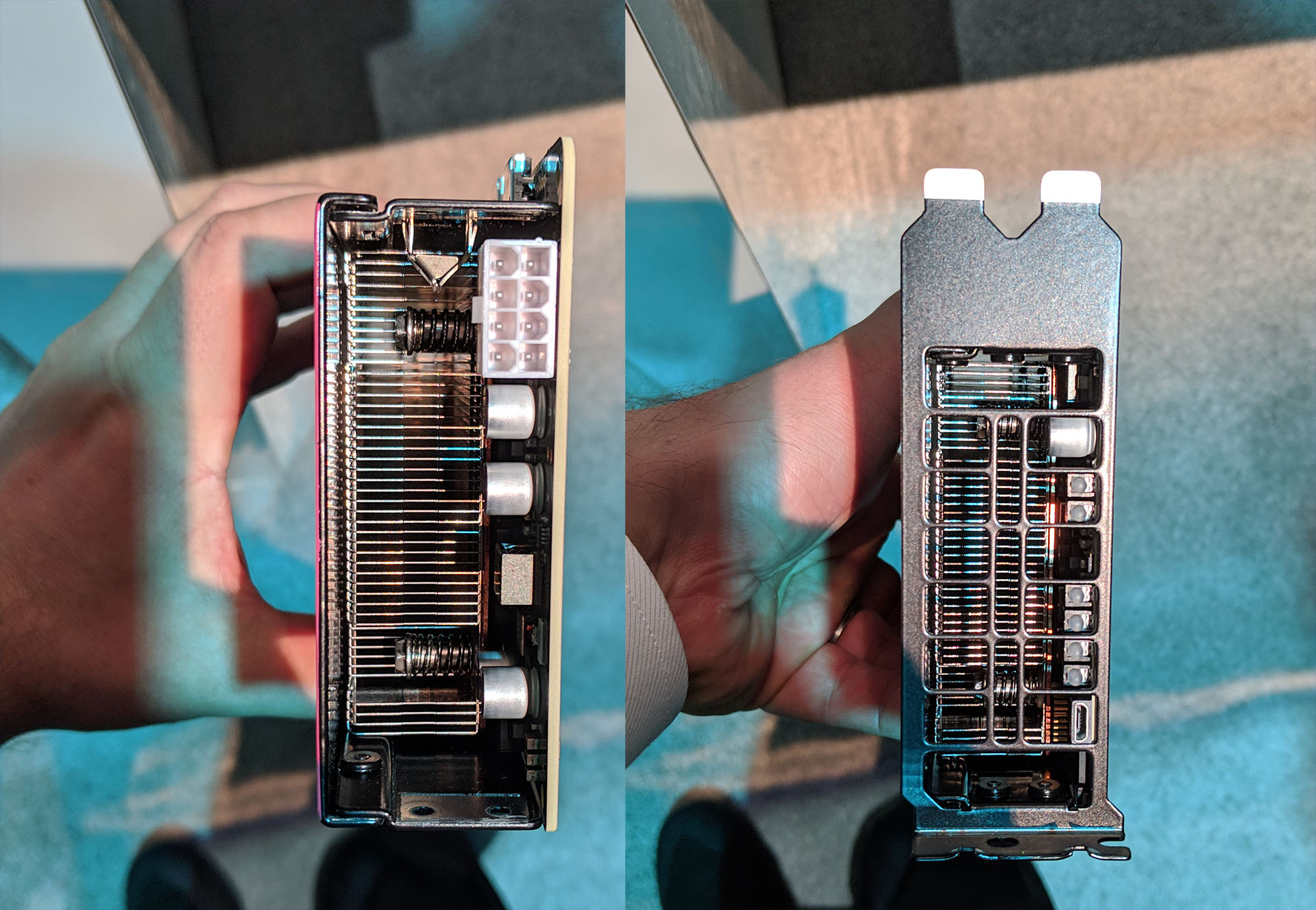

One can see the top-edge connectors, which the company can use to chain cards together using IPU-Links. This provides a scalable platform with higher bandwidth and lower latency than going back over PCIe.

The back of the card had a nice backplate that holds the heatsinks on and also provides some structural rigidity to the card. One will quickly notice that this looks like a dual processor design. That is because it is.

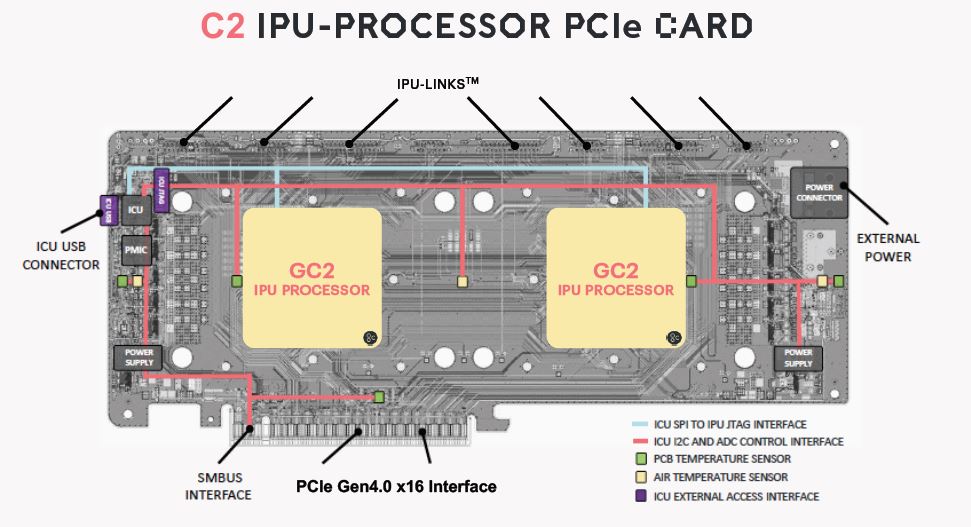

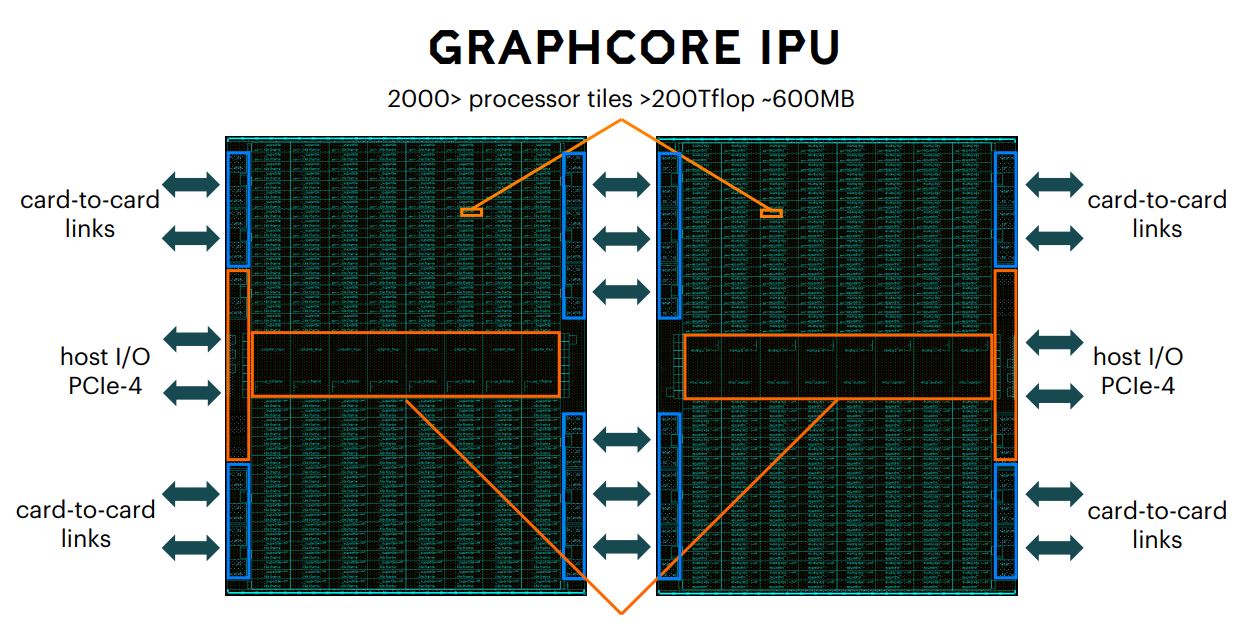

We are told that each C2 IPU-Processor PCIe card has two Colossus GC2 IPU-Processors onboard. It is also a PCIe Gen4 device which will be important for tomorrow’s AMD EPYC “Rome” platforms, future Intel Xeon platforms, as well as several Arm designs that will be coming out later this year.

Each Graphcore C2 IPU PCIe card has IPU-Links both between the two on-card chips and out to external cards. The chips also include the PCIe Gen4 host I/O.

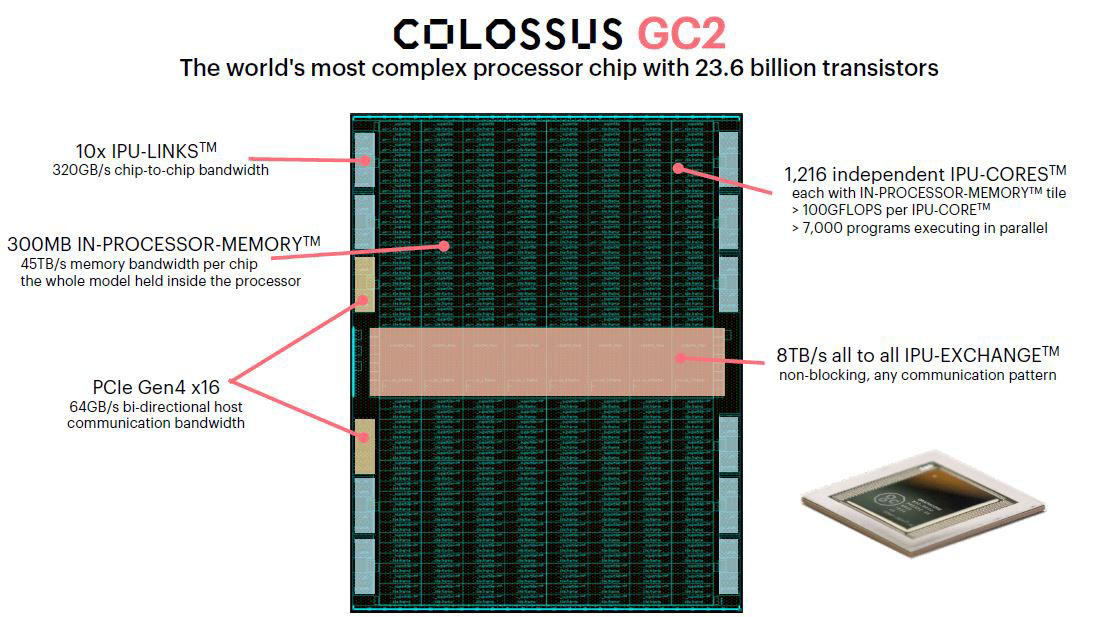

There are a total of 10x IPU-Links for chip-to-chip communication, yielding 320GB/s of bandwidth going off the package. For some context, the PCIe Gen4 x16 controllers have 64GB/s of bi-directional host bandwidth. These chips are fairly massive at 23.6 billion transistors, and the majority of those go to two features. First, there are 1,216 IPU-Cores that can each hit over 100 GFLOPS. Second, these cores have In-Processor-Memory amounting to 300MB of memory on the chip itself.
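To put those figures side by side, here is a minimal Python sketch that only does arithmetic on the numbers quoted above. It assumes the 320GB/s is shared evenly across the 10 IPU-Links, treats "over 100 GFLOPS" as a floor of exactly 100, and uses decimal units (1MB = 1,000KB). None of those assumptions were confirmed by Graphcore, and the numeric precision behind the GFLOPS figure was not stated.

```python
# Figures quoted in the article for a single Colossus GC2 chip.
IPU_LINKS = 10
IPU_LINK_TOTAL_GBPS = 320     # GB/s across all IPU-Links
PCIE_GEN4_X16_GBPS = 64       # GB/s, bi-directional host bandwidth
IPU_CORES = 1216
GFLOPS_PER_CORE = 100         # "over 100 GFLOPS", treated here as a floor
IN_PROCESSOR_MEMORY_MB = 300
CHIPS_PER_CARD = 2

# Assumption: bandwidth is split evenly across the links.
per_link_gbps = IPU_LINK_TOTAL_GBPS / IPU_LINKS

# How much more off-package bandwidth the IPU-Links offer than PCIe Gen4 x16.
link_vs_pcie = IPU_LINK_TOTAL_GBPS / PCIE_GEN4_X16_GBPS

# Lower bound on aggregate compute per chip and per two-chip card.
chip_tflops = IPU_CORES * GFLOPS_PER_CORE / 1000
card_tflops = chip_tflops * CHIPS_PER_CARD

# Average In-Processor-Memory per core, in decimal kilobytes.
memory_per_core_kb = IN_PROCESSOR_MEMORY_MB * 1000 / IPU_CORES

print(f"Per IPU-Link:        {per_link_gbps:.0f} GB/s")
print(f"IPU-Links vs PCIe:   {link_vs_pcie:.0f}x")
print(f"Compute per chip:    >{chip_tflops:.1f} TFLOPS")
print(f"Compute per card:    >{card_tflops:.1f} TFLOPS")
print(f"Memory per IPU-Core: ~{memory_per_core_kb:.0f} KB")
```

Under those assumptions, each link works out to 32GB/s, the IPU-Links together offer 5x the PCIe Gen4 x16 host bandwidth, and each core has roughly a quarter of a megabyte of local memory.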

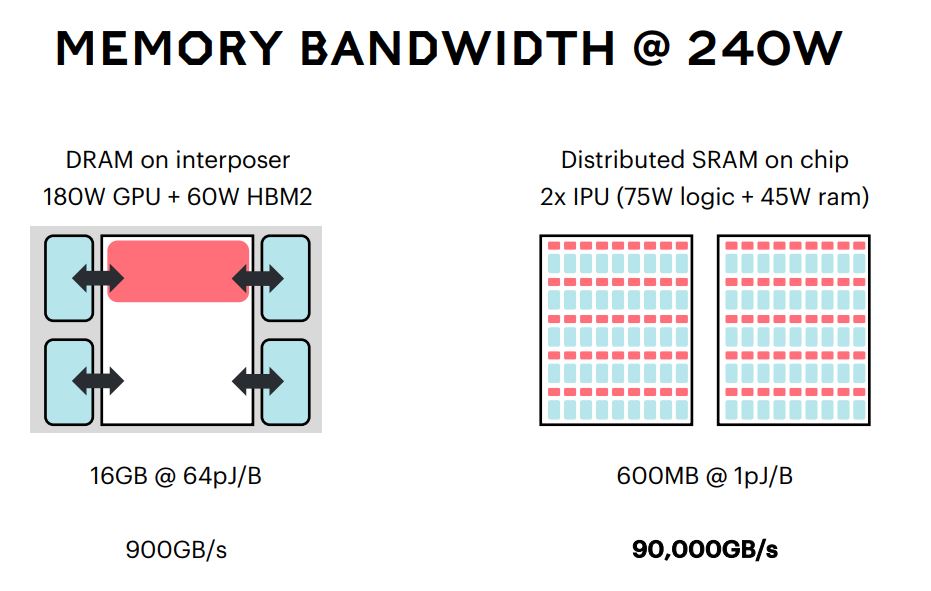

Colocating the memory with the logic cores yields a massive memory bandwidth. There is a total of about 45TB/s of memory bandwidth per chip. With two Graphcore Colossus GC2 chips per Graphcore C2 IPU card that means a total of 90TB/s of memory bandwidth. Graphcore says this is about 100x as much memory bandwidth at lower power than HBM2 commonly found on GPUs.
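As a rough sanity check on those memory bandwidth numbers, the sketch below repeats the arithmetic in Python. The 900GB/s HBM2 figure is our assumption, taken from the NVIDIA Tesla V100 spec sheet rather than from Graphcore, so the resulting ratio is only one way the "about 100x" claim could be reached.

```python
# Figures quoted in the article.
CHIP_MEMORY_BW_TBPS = 45      # TB/s of In-Processor-Memory bandwidth per GC2
CHIPS_PER_CARD = 2
IPU_CORES = 1216

# Assumption (not from Graphcore): Tesla V100 HBM2 bandwidth of 900 GB/s.
V100_HBM2_TBPS = 0.9

# Total memory bandwidth for a two-chip C2 card.
card_memory_bw_tbps = CHIP_MEMORY_BW_TBPS * CHIPS_PER_CARD

# Average memory bandwidth available to each IPU-Core, in GB/s.
per_core_gbps = CHIP_MEMORY_BW_TBPS * 1000 / IPU_CORES

# Ratios against the assumed HBM2 figure, per chip and per card.
chip_vs_hbm2 = CHIP_MEMORY_BW_TBPS / V100_HBM2_TBPS
card_vs_hbm2 = card_memory_bw_tbps / V100_HBM2_TBPS

print(f"Card memory bandwidth: {card_memory_bw_tbps} TB/s")
print(f"Per IPU-Core:          ~{per_core_gbps:.0f} GB/s")
print(f"Chip vs assumed HBM2:  ~{chip_vs_hbm2:.0f}x")
print(f"Card vs assumed HBM2:  ~{card_vs_hbm2:.0f}x")
```

With that assumed HBM2 number, a single chip comes out around 50x and the full two-chip card around 100x, which lines up with the card-level comparison.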

For those wondering how 300MB of memory capacity can work versus the 32GB we are seeing these days on NVIDIA Tesla V100s, as in our Inspur Systems NF5468M5 Review 4U 8x NVIDIA Tesla GPU server, the answer is the company’s Poplar software stack, in which it has invested heavily.

Peering into the Graphcore C2 IPU PCIe card from both ends we can see a fairly straightforward passively cooled design.

On the inner edge, there is a single 8-pin power connector. On the rear I/O edge we can see LEDs and a USB port. Overall, these cards appear to be designed to fit directly into the same types of servers that support NVIDIA Tesla V100 PCIe GPUs today.

Final Words

This quick hardware overview was not sanctioned by Graphcore as the company is in the phase of growth where they are getting their hardware and software stack into large customers evaluating the technology. It was great seeing a Graphcore C2 IPU PCIe card in person. If we get a chance, we will take one apart at a later date to show our readers what is going on inside.

It was, however, absolutely stunning to see this card, which has rarely been seen in public, getting so little attention at Dell Technologies World. In terms of AI hardware startups, Graphcore is one of, if not the, most hyped companies out there. The gravity of the Dell EMC DSS 8440 with 8x NVIDIA Tesla V100 GPUs sitting next to this card drew Dell’s customers and channel partners, but this was a gem from the show. It is surprising it did not get a lot more coverage, since this was one of the first public showings we have seen, and we attend the events for major server vendors and review hardware for all of the major chip and server vendors.

We certainly hope to see more from Graphcore in the future. Stay tuned.

Patrick – did they say anything about software and SDK availability?

STH needs to do more with these guys. I watched that NextPlatform video interview which was worthless. Learned nothing. This I learned several things.

We are currently running over 700 GPUs in our cluster. I’d like to know how these scale beyond 8 IPUs

How fast can it access server (or other large) RAM (when 600MB isn’t enough)?

Is there anything known about retail price?

Thank you Patrick for posting, not enough attention is given to this area

I have been desperately looking for the drivers for this. Graphcore has the docker image for their development software available, but not the drivers. Does anyone have the drivers for the GC-2?

The Graphcore Docker image should have Poplar SDK in it, including tools to run on IPUs. Not sure if GC-2 is supported or EOL, though.

```
docker run -it --rm graphcore/pytorch:3.0.0-ubuntu-20.04-20220923
ls /opt/
gc-monitor
```