At SC24, we got to see the newly announced Google Cloud TPU v6e Trillium board without its heatsinks. This is one of the newer chips at Google and one that is part of the ct6e-standard / v6e instances meant for AI workloads.

Google Cloud TPU v6e Trillium Shown at SC24

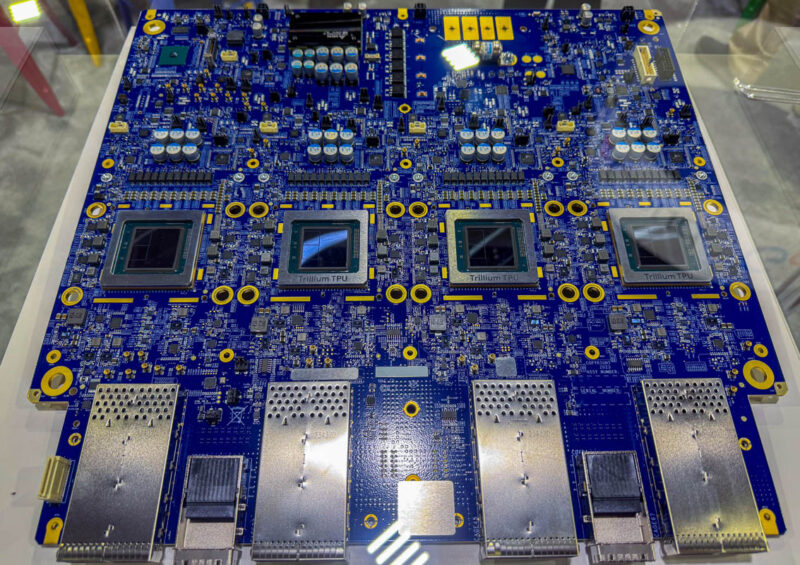

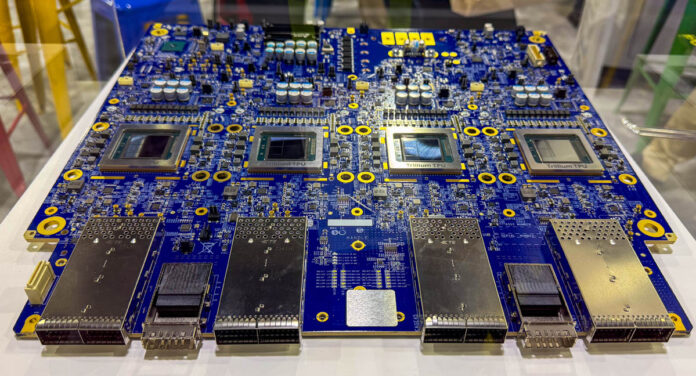

Here is the four TPU v6e Trillium board.

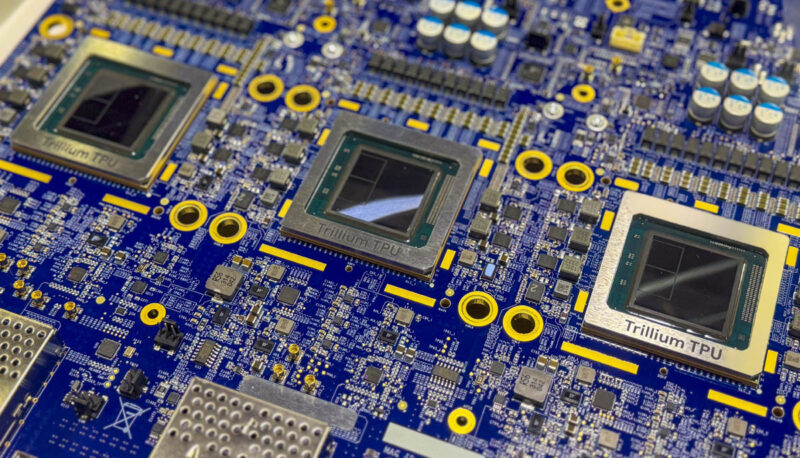

Each Trillium v6e chip is a lot more impressive than the v5e version. Onboard HBM has doubled from 16GB to 32GB with the bandwidth doubling in the new generation. Both INT8 and bfloat16 performance is up around 4.6-4.7x in this generation as well.

Here we can see four of the TPU v6e chips on top of the PCB. These are connected to dual socket CPU instances with four of the TPU v6e chips attached to each processor.

Each pod then gets a 256 accelerator set with a 2D Torus interconnect. In this generation each of the hosts also quadruples its interconnect bandwidth from two 100Gbps links to four 200Gbps links. The inter-chip interconnect also more than doubled its bandwidth in this generation.

Final Words

It is cool that Google shows off its hardware. Microsoft Azure tends to show the most hardware, by far. Google is probably one step behind Microsoft showing off its new instance hardware. AWS has a relative adversion to showing off its chips like this. Still it is really interesting since we know that the major hyper-scale players are doing their own custom silicon for AI in addition to deploying NVIDIA. Google will charge more per accelerator in this generation, but the idea is that with a faster part, the net impact is a significantly lower cost for the customer than the v5e instances. With NVIDIA aggressively churning its roadmap, Google needs to continually offer new chips with better performance per dollar to keep pace.

Is the Torus interconnect a proprietary protocol of some flavor; or something off the shelf(presumably ethernet unless otherwise specified) just run in an unswitched 2d torus topology rather than switched?