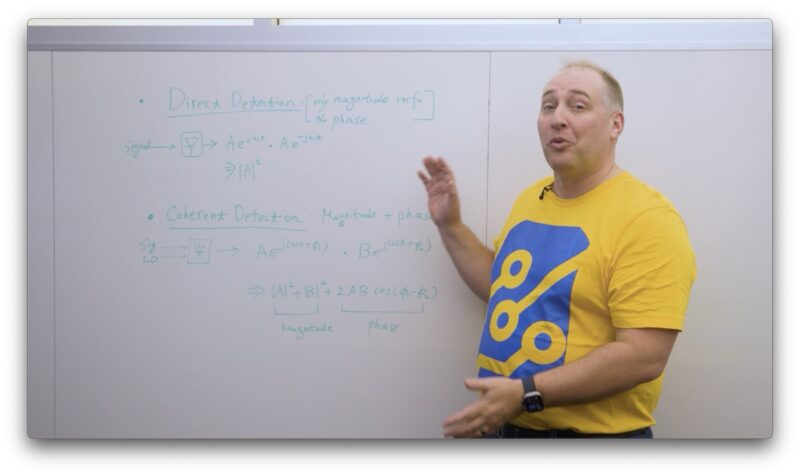

Direct versus Coherent Detection

As part of the video, one idea I had was to get into the difference between the direct detection commonly used in lower-end, low-cost optics and coherent detection. This was the opening segment when we recorded it.

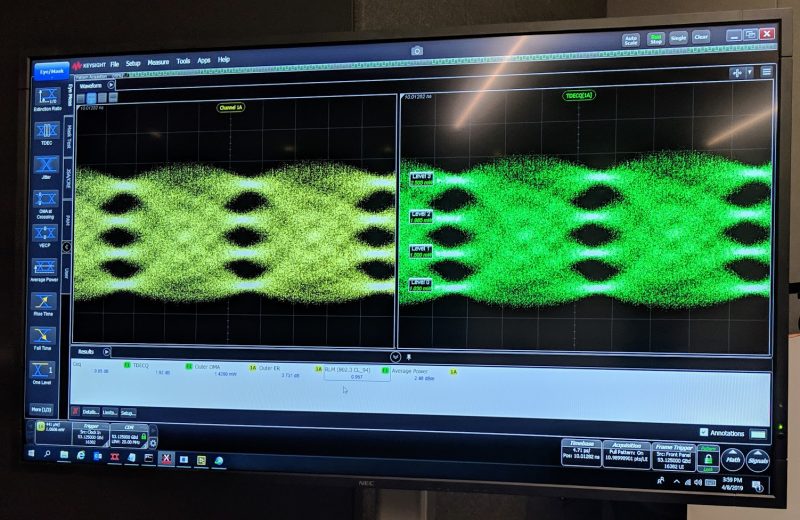

I then realized that the folks who need to go into the math and physics of it probably already know it. A lot of signal detection revolves around a fairly straightforward question: is there a signal or not? In a very simple 10Gbps module, we might think of this as “is there light or is there not light?” There are many fairly simple 2D representations of this, as well as some more complex encoding schemes, and in labs we often see eye diagrams that look like this (from our 2019 Intel Silicon Photonics piece, a business Intel has since sold off).

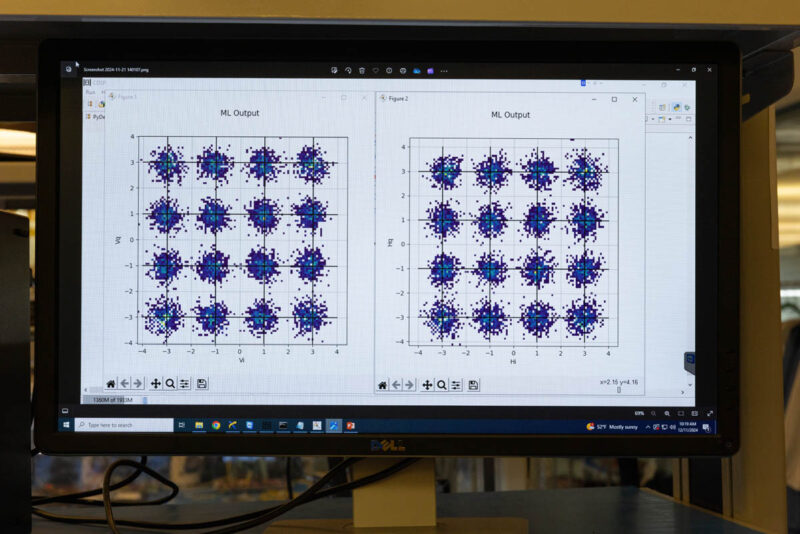
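For readers who want to see the "is there light or not?" idea in code, here is a minimal Python sketch of on-off keying with direct detection. It assumes an ideal photodiode, additive Gaussian noise, and a fixed threshold halfway between the "off" and "on" power levels; the function names are hypothetical and this is not how any particular module's receiver is built.

```python
# Minimal on-off keying (OOK/NRZ) direct-detection sketch.
# Assumptions (illustrative only): ideal photodiode, additive Gaussian noise,
# and a fixed decision threshold halfway between the "off" and "on" power levels.
import random


def transmit(bits, p_on=1.0, p_off=0.0):
    """Map each bit to an optical power level: light on for 1, off for 0."""
    return [p_on if b else p_off for b in bits]


def add_noise(powers, sigma, seed=0):
    """Add Gaussian noise to each received power sample (seeded for repeatability)."""
    rng = random.Random(seed)
    return [p + rng.gauss(0.0, sigma) for p in powers]


def detect(powers, threshold=0.5):
    """Direct detection asks one question per bit: is there light or not?"""
    return [1 if p > threshold else 0 for p in powers]


bits = [1, 0, 1, 1, 0, 0, 1, 0]
clean = detect(add_noise(transmit(bits), sigma=0.05))
noisy = detect(add_noise(transmit(bits), sigma=0.5))
print(clean == bits, noisy)
```

At low noise the threshold decision recovers every bit; as the noise approaches the spacing between the two power levels, bit errors start to appear, which is what a closing eye diagram shows visually.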

Coherent detection is a lot more complex because it can use more exotic properties of light than simple intensity, such as phase and polarization. Instead of eye diagrams, we see “constellations” that show where the received symbols land. Both of these charts show the X and Y polarizations, so we see sixteen constellation points.

That is why having the local oscillator as a reference is important. With a more complex encoding, the receiver needs the phase information that the reference provides, not just the presence or absence of light.

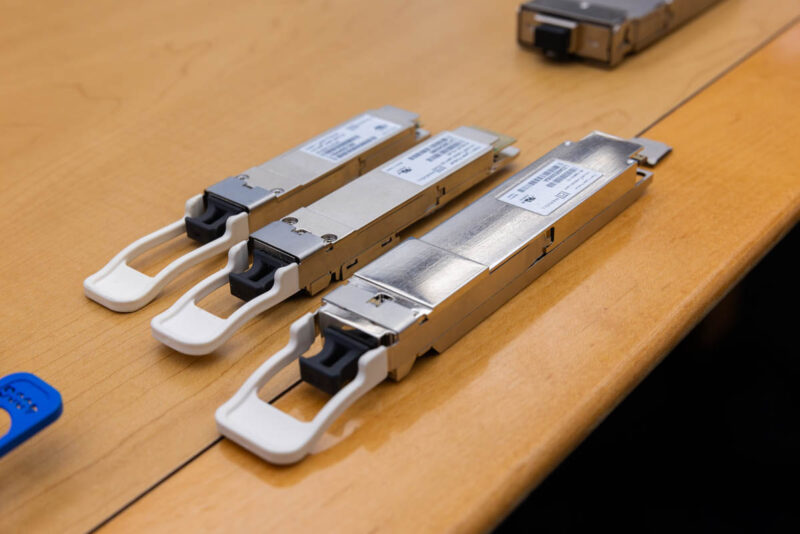

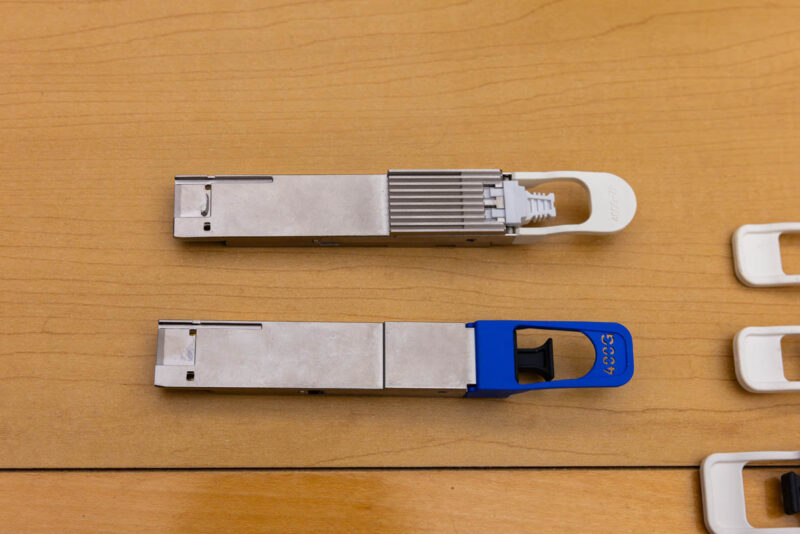
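To make the role of the local oscillator concrete, here is a minimal Python sketch of one polarization of an idealised coherent receiver. It assumes no noise, an LO exactly at the carrier frequency, and a Gray-coded square 16-QAM mapping chosen for illustration rather than taken from any module's DSP; all names are hypothetical. It shows that a bare photodiode collapses sixteen symbols onto three power levels, while mixing with the LO recovers I and Q and therefore the bits.

```python
# Idealised coherent-receiver sketch for one polarization.
# Assumptions (illustrative, not any real module's DSP): noise-free channel,
# LO exactly at the carrier frequency, Gray-coded square 16-QAM.
import cmath
import math
from itertools import product

# Two bits pick one amplitude level per axis; neighbouring levels differ by one bit.
GRAY_LEVELS = {(0, 0): -3, (0, 1): -1, (1, 1): 1, (1, 0): 3}
LEVEL_TO_BITS = {v: k for k, v in GRAY_LEVELS.items()}


def map_16qam(bits):
    """Map 4 bits to a complex symbol: first two bits pick I, last two pick Q."""
    return complex(GRAY_LEVELS[bits[:2]], GRAY_LEVELS[bits[2:]])


def direct_detect(field):
    """A bare photodiode only sees optical power |E|^2; the phase is lost."""
    return abs(field) ** 2


def coherent_detect(field, lo_phase=0.0):
    """Beat the signal against a unit-amplitude local oscillator.
    An ideal 90-degree hybrid with balanced photodiodes yields I and Q
    proportional to Re and Im of E_signal * conj(E_LO)."""
    beat = field * cmath.exp(-1j * lo_phase)
    return beat.real, beat.imag


def slice_level(x):
    """Pick the nearest of the four amplitude levels on one axis."""
    return min(LEVEL_TO_BITS, key=lambda lvl: abs(x - lvl))


def demap_16qam(i, q):
    """Nearest-point decision on a square grid, one axis at a time."""
    return LEVEL_TO_BITS[slice_level(i)] + LEVEL_TO_BITS[slice_level(q)]


all_bits = list(product((0, 1), repeat=4))
symbols = [map_16qam(b) for b in all_bits]

# Direct detection: |I|, |Q| in {1, 3} gives only powers 2, 10 or 18,
# so 16 different symbols cannot be told apart by intensity alone.
print(sorted({round(direct_detect(s), 9) for s in symbols}))

# With the LO as a phase reference, every symbol decodes back to its bits.
print(all(demap_16qam(*coherent_detect(s)) == b for s, b in zip(symbols, all_bits)))

# If the LO phase is off by 90 degrees the constellation rotates and decisions fail,
# which is why the receiver DSP has to track the carrier phase.
rotated = [demap_16qam(*coherent_detect(s, lo_phase=math.pi / 2)) for s in symbols]
print(sum(r != b for r, b in zip(rotated, all_bits)))
```

A real dual-polarization receiver runs this for both X and Y, after DSP steps such as chromatic dispersion compensation, polarization demultiplexing, and carrier recovery that this sketch deliberately leaves out.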

If you are interested in direct versus coherent detection, there are papers that go into a lot of detail. For the STH audience, I think the big takeaway is that even though an 800G ZR+ OSFP module looks like just a slightly bigger 100G SR4 QSFP28 module, what is going on inside is very different.
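One way to see how different the two modules are is a back-of-the-envelope line-rate calculation: symbol rate times bits per symbol times polarizations times lanes. This Python sketch assumes 100G SR4 runs four parallel NRZ lanes at 25.78125 GBd, and uses 118.2 GBd DP-16QAM for the 800G ZR+ case purely as an illustrative symbol rate, not a datasheet value.

```python
# Back-of-the-envelope line rates: symbol rate x bits/symbol x polarizations x lanes.
# Assumptions: 100G SR4 uses 4 parallel NRZ lanes at 25.78125 GBd; the 800G ZR+
# symbol rate below (118.2 GBd) is an illustrative figure, not a datasheet value.
import math


def line_rate_gbps(baud_gbd, constellation_points, polarizations=1, lanes=1):
    """Raw line rate in Gb/s, before removing FEC and framing overhead."""
    bits_per_symbol = math.log2(constellation_points)
    return baud_gbd * bits_per_symbol * polarizations * lanes


# 100G SR4: NRZ (2 levels = 1 bit/symbol), one polarization, 4 lanes per direction.
sr4 = line_rate_gbps(25.78125, constellation_points=2, lanes=4)

# 800G ZR+: DP-16QAM (4 bits per polarization, 2 polarizations) on one wavelength.
zr_plus = line_rate_gbps(118.2, constellation_points=16, polarizations=2)

print(f"100G SR4: {sr4:.3f} Gb/s using 4 lanes of 1 bit/symbol")
print(f"800G ZR+: {zr_plus:.1f} Gb/s using 1 wavelength of 8 bits/symbol")
```

The SR4 module gets its bandwidth from parallel fibers carrying simple on/off symbols, while the coherent module packs eight bits into every symbol on a single wavelength; the raw rate above 800G is what carries FEC and framing overhead, which this sketch does not break down.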

Why This Matters

So the big question here is: “why does this all matter?” One simple reason is that it can sometimes be advantageous to span datacenters across geographies purely for cost and resiliency. Being able to go directly from a switch to another data center 1000km or more away allows an organization to potentially house more infrastructure at lower-cost, stable hosting sites. Also, if you simply have two geographically distant campuses, spanning them with 400Gbps to 800Gbps of bandwidth can be a game-changer.

Realistically though, one of the biggest drivers in pluggable optics today is the AI datacenter build-out. That build-out is power constrained, so we are seeing data center operators focus on accessing power. There are plans for large data centers next to existing power plants, new power sources coming online for data centers, and so forth. Minimizing transmission loss helps maximize the power available for compute. The challenge is that clusters now need so much power that the answer may no longer be a single site.

Instead of bringing all the compute to a single campus, the idea of having multiple data centers located next to power is one that we hear often. As a fun aside, this is what many cryptocurrency miners were working on in the mid-to-late 2010s. The difference is that the mines did not need high-bandwidth, low-latency access to one another. AI clusters do.
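Low latency between sites is ultimately bounded by the speed of light in glass, roughly 5 µs per km of fiber. This Python sketch estimates propagation delay assuming a group index of about 1.468 (typical for single-mode fiber near 1550nm), a fiber route equal to the stated distance, and no DSP, FEC, or switching latency, so real links will be slower.

```python
# Rough one-way and round-trip propagation delay over single-mode fiber.
# Assumptions: group index of about 1.468 (typical for SMF near 1550 nm), a fiber
# route equal to the stated distance, and no DSP, FEC, or switching latency.
C_KM_PER_S = 299_792.458  # speed of light in vacuum


def fiber_delay_ms(distance_km, group_index=1.468):
    """One-way propagation delay in milliseconds."""
    return distance_km * group_index / C_KM_PER_S * 1e3


for km in (10, 100, 1000):
    one_way = fiber_delay_ms(km)
    print(f"{km:>5} km: {one_way:.3f} ms one way, {2 * one_way:.3f} ms round trip")
```

At 1000km that is on the order of 5 ms each way before any equipment is involved, which is a useful figure to keep in mind when deciding which parts of an AI workload can tolerate being split across sites.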

The idea is that building a large-scale cluster across multiple sites next to available power sources not only saves on electrical transmission losses and costs, but may also be easier. Instead of having to permit and build large sources of power, one can light dark fiber or lay additional fiber to bridge clusters at several smaller power sources.

So the easy answer is that these modules let organizations light fast links spanning significant distances relatively inexpensively and easily, using a common pluggable optical module instead of a dedicated DCI (data center interconnect) box. Perhaps more exciting is the idea of large-scale geographic distribution of AI resources.

Final Words

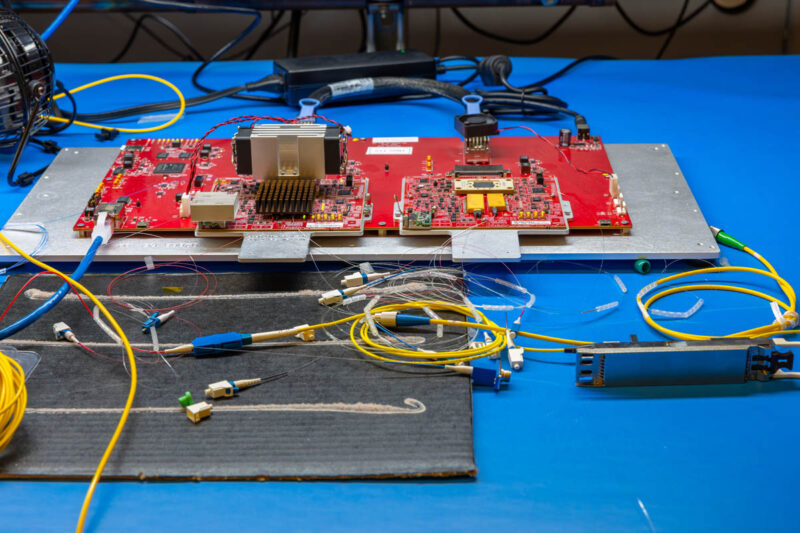

Hopefully you liked this look at a really cool piece of technology. From the outside, pluggable modules all look like metal housings, and it is not easy to tell why one is more complex than another.

Hopefully looking at what is happening inside the higher-end modules gives some sense of just how complex these pluggable optics are. In this piece, we looked inside a simple, low-cost 100G SR4 optical module, and it is vastly different from the 800G ZR+ optical module. Even though they may look similar, the COLORZ III module delivers 8x the data rate at 10,000x the distance. Perhaps that is why I thought this was such a cool demo when I first saw it.

The only things that lab table is missing are:

– DUCT tape

– SuperGlue

– Coffee cup

What a superior Friday read. I could’ve handled more depth, but I’ve been doing this many years.

It’s good to have a Kennedy back in optical networking. You look like I remember your dad when I first met him, back when I was a young buck with a newly minted PhD 20 years ago.

I always wondered if you’d go here.

Cool demo, but I feel that this type of hardware is on the way out in favor of chips that leverage silicon photonics. The advantages in power and potential bandwidth are too great to ignore. Simpler encoding schemes tend to win in the end, and this is a straightforward way to maintain simplicity without the need for highly complex DSPs in the transceivers (complex here is relative).

That constellation diagram looks like QAM 16 encoding, at least visually. Hardware exists to do QAM 4096 (see Wi-Fi 7), but at a significantly lower real clock speed. I don’t think there is enough sensitivity to go that high in modulation, but QAM 16 does appear low resolution for what it is capable of. Perhaps with 1000 km of cable between the modules, the images are not so clean.
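The sensitivity point in this comment can be put into numbers with the textbook result for square M-QAM: at unit average symbol energy, neighbouring points sit d_min = sqrt(6 / (M - 1)) apart. This Python sketch uses an idealised additive-noise view and ignores phase noise, fiber nonlinearity, and FEC, which dominate in real optical links; the function names are hypothetical.

```python
# How constellation spacing shrinks as QAM order grows, at equal average power.
# Uses the standard square M-QAM result d_min = sqrt(6 / (M - 1)) for unit
# average symbol energy; an idealised additive-noise view that ignores phase
# noise, fiber nonlinearity, and FEC, which dominate in real optical links.
import math


def min_distance(m):
    """Minimum distance between neighbouring points of unit-energy square M-QAM."""
    return math.sqrt(6 / (m - 1))


def snr_penalty_db(m, reference_m=16):
    """Extra SNR (dB) needed to keep the same spacing-to-noise ratio as reference_m."""
    return 20 * math.log10(min_distance(reference_m) / min_distance(m))


for m in (4, 16, 64, 256, 1024, 4096):
    print(f"{m:>5}-QAM: {int(math.log2(m)):>2} bits/symbol, "
          f"d_min={min_distance(m):.4f}, {snr_penalty_db(m):+.1f} dB vs 16-QAM")
```

Going from 16-QAM (4 bits/symbol) to 4096-QAM (12 bits/symbol) needs roughly 24 dB more SNR for the same spacing-to-noise ratio, about 3 dB per extra bit per symbol, which is far easier to find over a few meters of Wi-Fi than over hundreds of kilometers of fiber.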

The idea of expanding out a traditional data center using these modules is feasible, but a pair of links for a big GPU cluster isn’t going to cut it: each GPU node nowadays is getting 100 Gbit of networking bandwidth, and 800 Gbit would only be a means of linking up two big coherent frames of gear. An order of magnitude more bandwidth would be necessary to really start attempting this. If a company is willing to put down tens of millions of dollars for a remote DC, then spending a few million to lease or run additional fiber between the locations is a straightforward way to get more bandwidth.

Optics is fun in that signals can be transformed in transit to an extent (polarization filters as an example) to merge multiple lines onto a single cable without interference. That is a way to aggregate more bandwidth over existing lines, but whatever is multiplexed on one end has to be demultiplexed on the other side. This also ignores that such aggregation techniques are already used inside the module (and my simple polarization example likely wouldn’t work with those described in this article). These techniques also incur some signal loss, which directly impacts their range.
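The bandwidth gap described in this comment is easy to put into numbers. This Python sketch assumes the 100 Gb/s per node figure from the comment, hypothetical cluster sizes, and a goal of letting every node on one site send at full rate to the other site at once (no oversubscription).

```python
# Rough count of long-haul links needed to bridge two sites.
# Assumptions: 100 Gb/s per node (from the comment), hypothetical node counts,
# and every node on one site sending at full rate to the other site at once.
import math


def links_needed(nodes, per_node_gbps=100, per_link_gbps=800):
    """Number of point-to-point links required to carry the aggregate node bandwidth."""
    return math.ceil(nodes * per_node_gbps / per_link_gbps)


for nodes in (16, 128, 1024):  # hypothetical cluster sizes
    print(f"{nodes:>5} nodes x 100 Gb/s -> {links_needed(nodes)} x 800G links")
```

A single 800G link covers only eight such nodes at full rate, so bridging a large cluster without oversubscription quickly becomes a question of how many fiber pairs or wavelengths are available.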

This tech is at its own level, and I could see it being a big deal for long-distance (subsea?) cables, where it might be worth investing a lot in gear at each end if it lets you avoid repeaters/retimers along the way. I would welcome explainers on optics!

It’s also *wild* how far commodity fiber stuff has come. This is a bit of a market fluke, but a ton of used Intel 100G 500m transceivers are out there that you can pick up on eBay for $10. New optics still wouldn’t be much of the cost of a 100G deployment. Even higher-end stuff doesn’t look ridiculous in proportion to what it’s doing, and it isn’t single-vendor unobtanium.

DWDM is also incredibly cool! If you’re a giant multi-datacenter operator, maybe it’s simpler to just get 800G everything and be done with it, but conceptually it’s really neat that you can lay a many-strand cable and gradually get to 400G or 1T/strand, one 10 or 25G wavelength at a time.

It was only pretty recently I picked up even a vague awareness of what modern networking is able to do, since work hasn’t dealt in physical hardware for a while. Keep the posts on fun network stuff coming!
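The DWDM idea mentioned above, adding capacity one wavelength at a time, can be sketched with the ITU-T G.694.1 fixed frequency grid, which places channels at 193.1 THz plus an integer multiple of the channel spacing. This Python sketch assumes 100 GHz spacing, illustrative channel indices rather than any vendor's plan, and the 10G and 25G per-wavelength rates from the comment.

```python
# DWDM channel plan on the ITU-T G.694.1 fixed grid (anchored at 193.1 THz).
# Assumptions: 100 GHz spacing and the per-wavelength rates from the comment;
# the channel indices chosen here are illustrative, not a specific vendor plan.
import math

C_M_PER_S = 299_792_458  # speed of light in vacuum
ANCHOR_THZ = 193.1


def channel_thz(n, spacing_ghz=100):
    """Center frequency of grid channel n: 193.1 THz + n x spacing."""
    return ANCHOR_THZ + n * spacing_ghz / 1000


def wavelength_nm(freq_thz):
    """Convert an optical frequency to a vacuum wavelength in nanometres."""
    return C_M_PER_S / (freq_thz * 1e12) * 1e9


def wavelengths_needed(target_gbps, per_wavelength_gbps):
    """How many wavelengths it takes to reach a target per-strand capacity."""
    return math.ceil(target_gbps / per_wavelength_gbps)


for n in range(-2, 3):
    f = channel_thz(n)
    print(f"channel n={n:+d}: {f:.1f} THz, {wavelength_nm(f):.2f} nm")

print(wavelengths_needed(400, 10), wavelengths_needed(1000, 25))
```

Both of the comment's examples, 400G from 10G wavelengths and 1T from 25G wavelengths, work out to 40 channels on a strand.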

I don’t understand the mentions of AI; if you visit the OpenZR+ website, it is very clear that the target market for these products is big telecom providers and their transport networks.

Thanks Patrick! Great stuff as always.

One question I’m asking my pluggable optics vendors these days is:

Will your 800G coherent modules work in my 400G router?

Specifically, I’m *really* interested in the extra-long-reach capabilities of the 112GBaud QPSK 400GE encoding that 800ZR+ opens up, & with the host side running at 56G per lane, it might just fit within the existing 25W QSFP-DD power envelope. The L-band possibilities are also quite interesting.

Even if those particular modes aren’t supported, the economics have changed. If you install more than a handful of these optics in your router, you probably spent more on your optics than your router, & they likely consume more power & put out more heat than the router itself…
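The appeal of the 112 GBd QPSK mode mentioned in this comment comes down to simple arithmetic: dual-polarization QPSK carries 4 bits per symbol, half of what DP-16QAM carries at the same symbol rate, but its points are much farther apart, which is what buys reach. This Python sketch uses the 112 GBd figure from the comment and a lumped headroom number that stands in for FEC and framing without modeling how a real ZR+ mode splits it.

```python
# Line-side arithmetic for a 400GE mode at 112 GBd.
# Assumptions: dual-polarization QPSK (2 bits per polarization), the 112 GBd symbol
# rate quoted in the comment, and a lumped headroom figure standing in for FEC and
# framing without modelling how a real ZR+ mode splits it.
def line_rate_gbps(baud_gbd, bits_per_pol, polarizations=2):
    """Raw line rate in Gb/s for a dual-polarization coherent signal."""
    return baud_gbd * bits_per_pol * polarizations


dp_qpsk = line_rate_gbps(112, bits_per_pol=2)   # QPSK: 4 points -> 2 bits
dp_16qam = line_rate_gbps(112, bits_per_pol=4)  # 16-QAM at the same baud, for comparison
headroom = dp_qpsk / 400 - 1                    # fraction left above a 400G payload

print(f"DP-QPSK:  {dp_qpsk} Gb/s raw, {headroom:.0%} above 400G")
print(f"DP-16QAM: {dp_16qam} Gb/s raw at the same symbol rate")
```

The same optics and symbol rate can therefore trade half the capacity for a much more robust constellation, which is the trade-off behind running a 400GE service over an 800G-class module.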