At Computex 2019 we had the opportunity to visit the Gigabyte server suite, where we saw a lot of new servers. You may notice that we strategically took a photo of only half of the suite. The other half was AMD EPYC, and we were told that we could not take photos since a lot of AMD EPYC “Rome” generation hardware was being shown. We are still a few months away from the Q3 Rome launch, so we agreed. Instead, we are going to share our picks from what we are allowed to show.

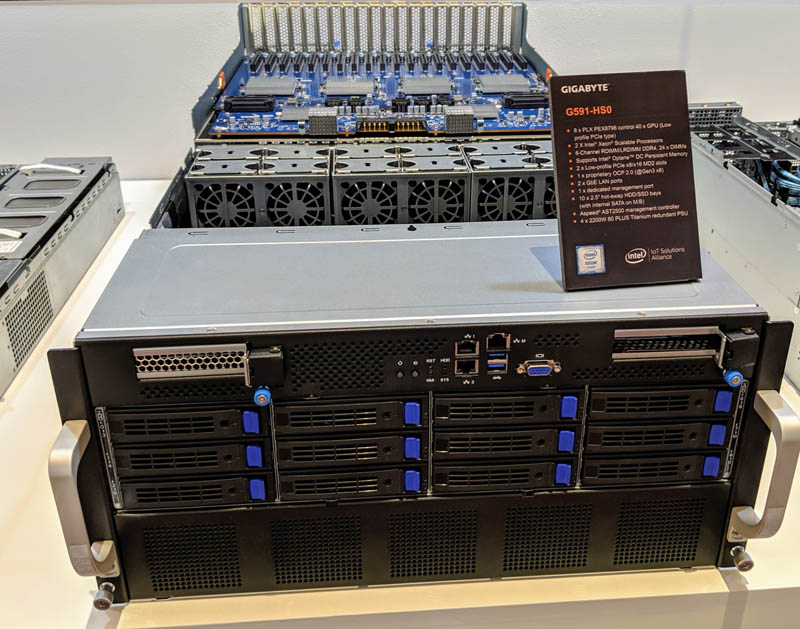

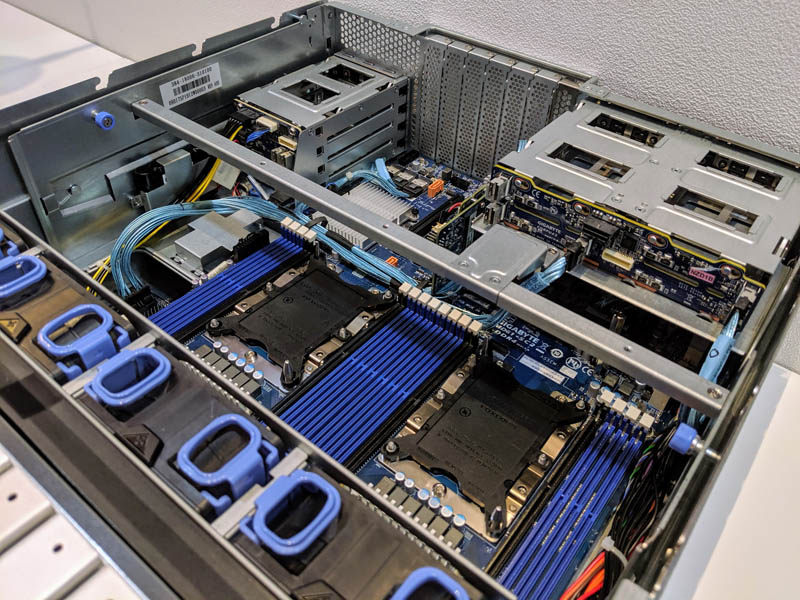

Gigabyte G591-HS0 40x GPU Server

The Gigabyte G591-HS0 is a 5U chassis with an intriguing surprise. Inside, it houses 40 PCIe x16 full length, single slot low profile devices.

Based on Intel Xeon Scalable, this platform uses a large PLX switch array and two 2U 20 slot trays to maximize PCIe I/O connectivity.

We were told that a number of devices, such as FPGAs, inferencing cards, video capture devices, and other accelerators, can be used in 40-card arrays. NVIDIA Tesla T4 cards have a constraint: in some cases CUDA addresses only 32 cards in a system, so this chassis holds more cards than NVIDIA can easily handle at the moment.
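To put the switch array in perspective, here is a rough sketch of the lane fan-out it has to provide. The slot count and x16 width come from the description above. The socket count and the assumption that every CPU lane feeds the switches are ours, not Gigabyte's, so treat the ratio as an upper-bound illustration rather than a spec.

```python
# Rough PCIe lane fan-out estimate for a 40-slot, switch-based chassis.
# Assumptions (NOT from Gigabyte's spec sheet): two CPU sockets, and every
# CPU lane routed to the switch array. Real boards reserve lanes for
# networking and storage, so the true ratio would be higher.

SLOTS = 40            # from the article: 40 PCIe x16 slots
LANES_PER_SLOT = 16   # from the article: x16 slots
CPU_SOCKETS = 2       # assumption for illustration
LANES_PER_CPU = 48    # PCIe Gen3 lanes on an Intel Xeon Scalable CPU


def lane_fanout(slots, lanes_per_slot, sockets, lanes_per_cpu):
    """Return (downstream lanes, upstream lanes, oversubscription ratio)."""
    downstream = slots * lanes_per_slot
    upstream = sockets * lanes_per_cpu
    return downstream, upstream, downstream / upstream


downstream, upstream, ratio = lane_fanout(
    SLOTS, LANES_PER_SLOT, CPU_SOCKETS, LANES_PER_CPU
)
print(f"{downstream} slot lanes share {upstream} CPU lanes ({ratio:.1f}:1)")
```

Under these assumptions the slots expose several times more lanes than the CPUs can supply, which is why a design like this depends on PCIe switches and suits cards that do not all saturate their links to the host at once.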

Gigabyte G291-2G0 2U 16x GPU Server

The Gigabyte G291-2G0 2U server houses sixteen single width full height GPUs that are mounted on either side of the chassis. Powering the server are two Intel Xeon Scalable processors that support Intel Optane DCPMM and full twelve DIMM per CPU configurations. One also gets additional PCIe slots for networking.

Gigabyte uses a similar design for 2U 8x double-width GPU systems. Since the solution uses a flexible design with a PCIe switch, Gigabyte was able to change the design to support twice as many GPUs by simply changing the riser. For companies that want to use a common platform for training and inferencing, this is a useful design.

Gigabyte S461-3T0 60-bay 4U Server

The Gigabyte S461-3T0 is a 60-bay 4U server that is meant for higher capacity storage applications.

There is actually a lot of expansion in the Gigabyte S461-3T0. At the rear, there are six hot swap U.2 NVMe SSD bays along with two SATA/SAS bays. Gigabyte has an immense amount of connectivity here.

Many higher-density 4U storage server nodes sacrifice connectivity to increase 3.5″ drive bay space. Gigabyte has a nice balance of connectivity and NVMe storage in its solution. The platform also has 8 DIMM slots per CPU, which allows for Intel Optane DCPMM support with 2nd Gen Intel Xeon Scalable CPUs.

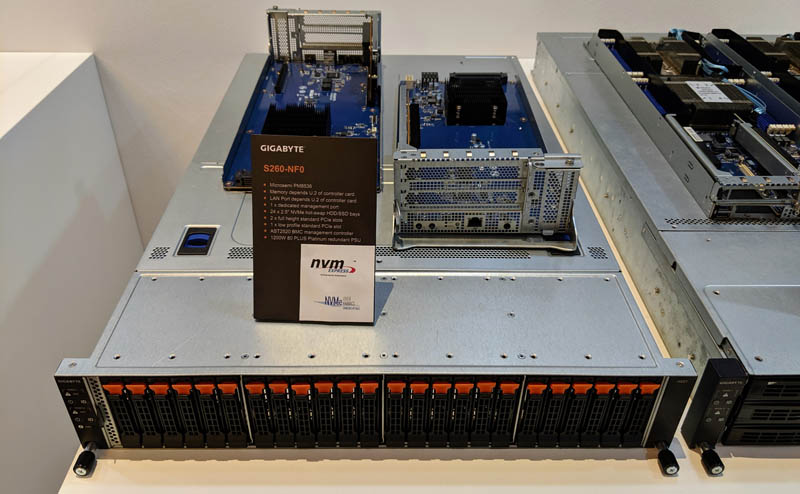

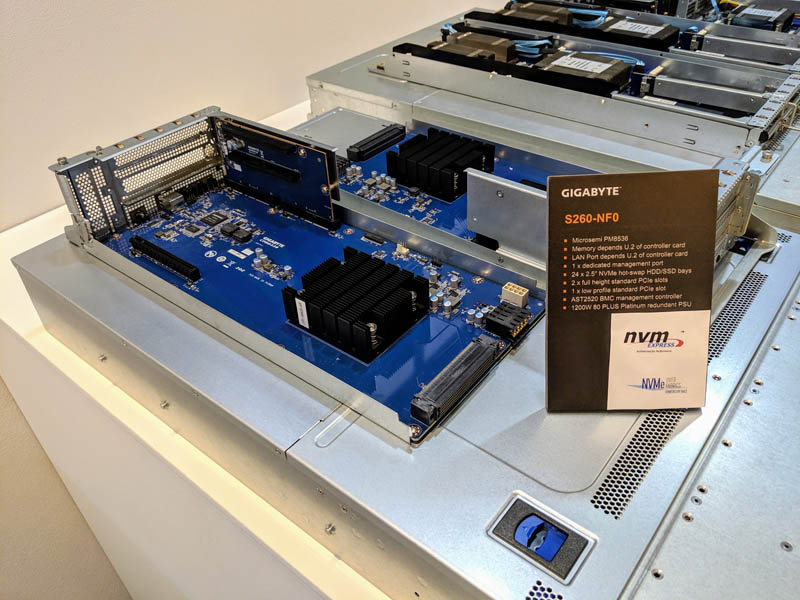

Gigabyte S260-NF0 for NVMeoF

One of the more innovative storage products we saw from Gigabyte was the S260-NF0, a box designed for dual controller NVMeoF implementations.

The front of the chassis has 24x 2.5″ U.2 NVMe SSD bays. Each controller has two full height and one low profile PCIe slot.

The controllers on each node are Microsemi PM8536 PCIe Gen3 switches with 96 lanes and 48 ports, capable of 174GB/s of bandwidth. Each controller sled can take SmartNICs or controllers to handle the fabric and application aspects of NVMeoF deployments.
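As a sanity check on the bandwidth figure quoted for the 96-lane Gen3 switch, here is a minimal sketch of the raw link arithmetic. It uses only the standard PCIe Gen3 signalling rate (8 GT/s per lane) and 128b/130b encoding. It ignores packet and protocol overhead, and we do not know how the 174GB/s figure was actually derived, so this is a rough ceiling rather than a reconstruction of the vendor's number.

```python
# Raw PCIe Gen3 bandwidth ceiling for a 96-lane switch.
# Protocol overhead (TLP headers, flow control) is deliberately ignored,
# so real-world throughput will be lower than these numbers.

GT_PER_S = 8.0         # PCIe Gen3 signalling rate per lane, in GT/s
ENCODING = 128 / 130   # 128b/130b line encoding efficiency
LANES = 96             # from the article: 96-lane switch
QUOTED_GBS = 174.0     # from the article: quoted switch bandwidth


def gen3_bandwidth(lanes):
    """Return (per-direction, bidirectional) raw bandwidth in GB/s."""
    per_lane = GT_PER_S * ENCODING / 8   # bits to bytes, one direction
    per_direction = lanes * per_lane
    return per_direction, 2 * per_direction


per_direction, bidirectional = gen3_bandwidth(LANES)
print(f"Per direction: {per_direction:.1f} GB/s")
print(f"Bidirectional: {bidirectional:.1f} GB/s")
print(f"Quoted figure is {QUOTED_GBS / bidirectional:.0%} of the raw ceiling")
```

The quoted number sits somewhat below the raw bidirectional ceiling, which would be consistent with a figure that accounts for some protocol overhead.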

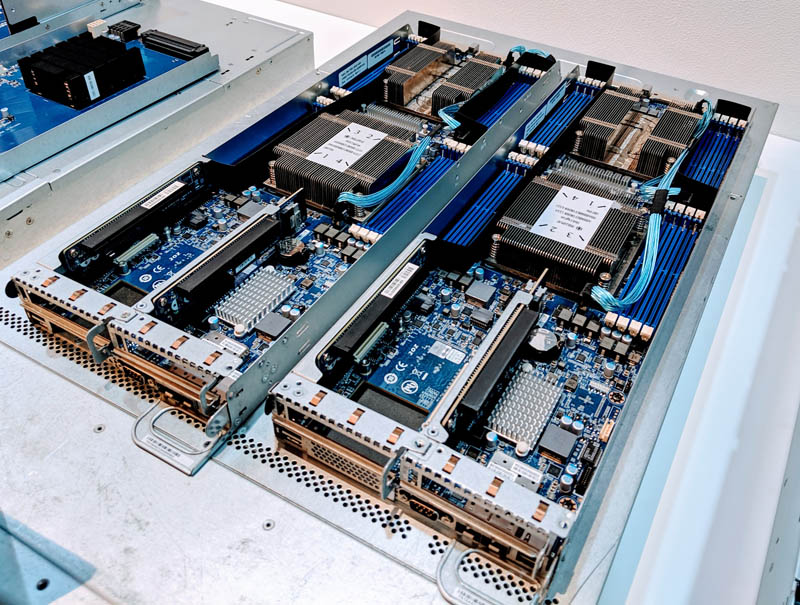

Gigabyte H261-T60 ThunderX2 Nodes Shipping

We previously covered the Gigabyte H261-T60 including at last year’s Computex. This is a 2U4N solution with two 28 core Cavium ThunderX2 chips per node.

We were told that this is finally shipping. What is more, these are 2U4N sleds that share a common infrastructure with what we saw in our Gigabyte H261-Z60 Review and Gigabyte H261-Z61 Review. In theory, the same 2U4N chassis should be able to accept Intel Xeon, AMD EPYC, and Cavium/Marvell ThunderX2 nodes.

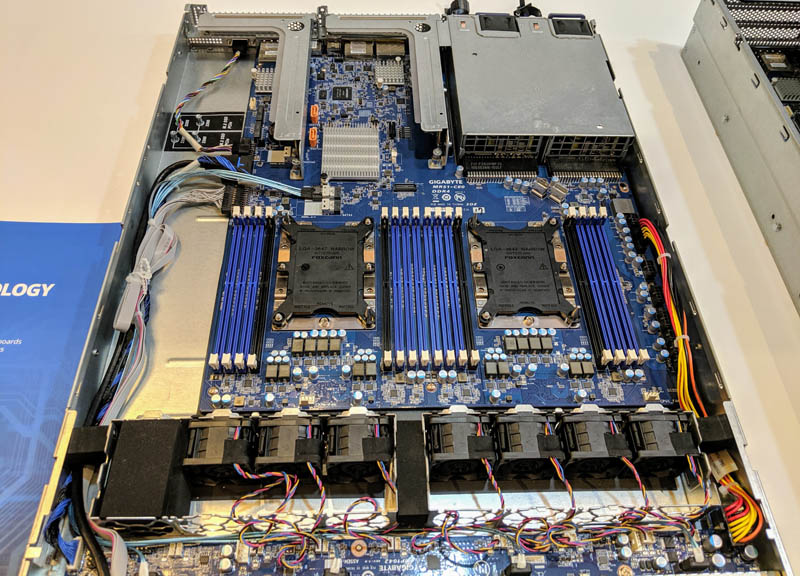

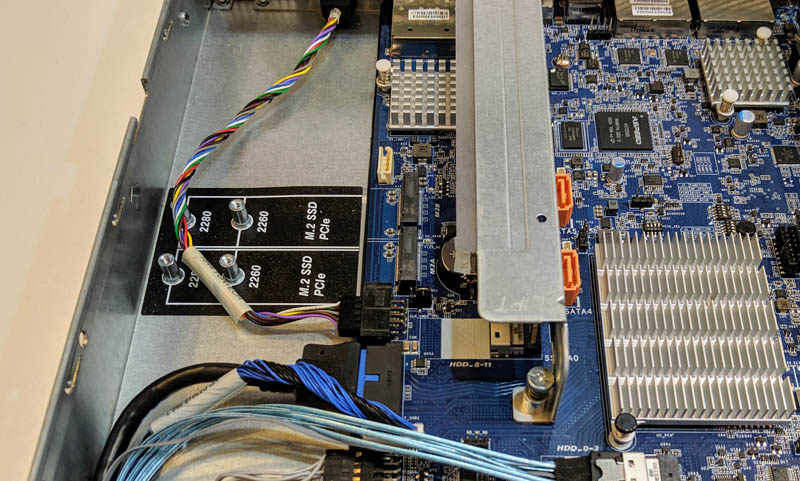

Gigabyte R161-340

We saw over 100 different server platforms today alone. The Gigabyte R161-340 is a 1U server platform based on dual Intel Xeon Scalable CPUs with redundant power supplies and risers for additional I/O. We saw something in this platform we wanted to highlight as a unique design point.

This is one of two servers that Gigabyte had on display sharing the same motherboard. As one can see, there are two M.2 NVMe drives, but Gigabyte did not have room to put the M.2 slots over the main PCB. Instead, these two M.2 slots have their screw-down points directly in the chassis.

That is some seriously interesting engineering from Gigabyte and is not what we normally see in servers.

Final Words

Gigabyte had a ton of new products on display at Computex 2019 and the AMD EPYC “Rome” designs are exciting. We still have a few months until we can share those. Until then, the Intel Xeon Scalable servers, NVMeoF nodes, and ThunderX2 system developments were great to see.

I know this thread is very old. But I would like to deploy NVMeoF in my network using the S260-NF0 (or a similar system). How is this done? How are drives put together as a single entity? What system in the network creates the file system? How is that storage shared on a network? How do I mount it on a Windows workstation?

I can’t find any answer to these questions on the internet (I’m probably asking the wrong question to Google…) so I would appreciate any idea of where to start.

Thanks!