Gigabyte S452-Z30 Performance

For this exercise, we are using our legacy Linux-Bench scripts, which give us the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts.

At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

We are going to show off a few results and highlight a number of interesting data points in this article.

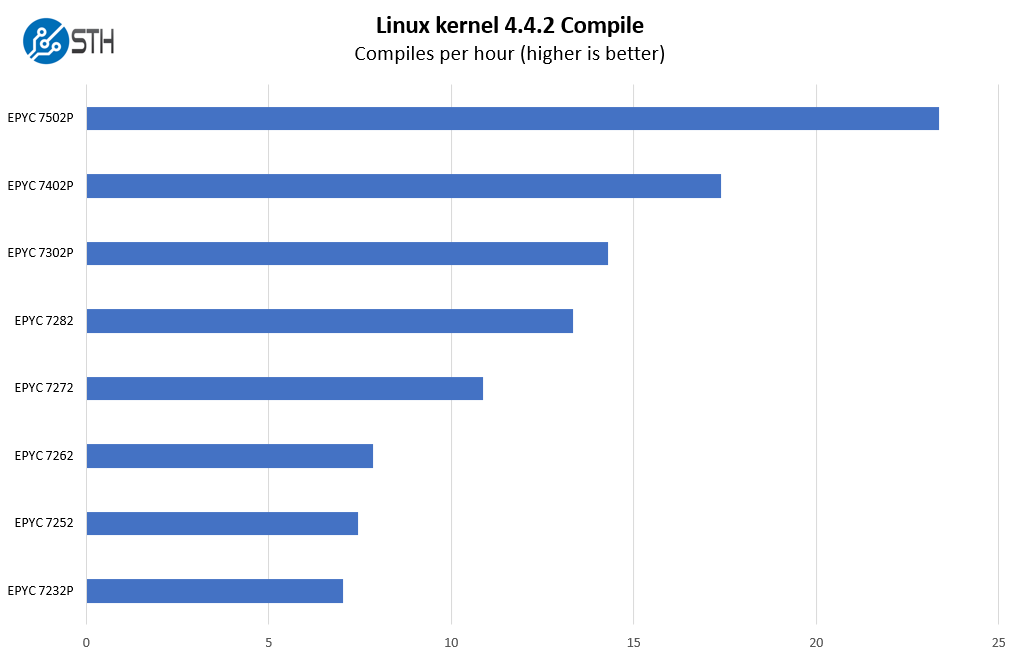

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task is simple: we take the Linux 4.4.2 kernel from kernel.org and a standard auto-generated configuration file, then compile it utilizing every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read.
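As a minimal sketch of the arithmetic behind a compiles-per-hour figure, assuming a single timed build: the wall-clock time of one compile is divided into the 3,600 seconds in an hour. The function names and the 90-second timing below are hypothetical illustrations. They are not taken from the Linux-Bench scripts and are not a measured result from this system.

```python
import os


def make_command(threads=None):
    """Build a `make` invocation that uses every hardware thread.

    Assumption: parallelism is set with make's -j flag. The actual
    Linux-Bench invocation is not shown in the article.
    """
    if threads is None:
        threads = os.cpu_count() or 1
    return ["make", f"-j{threads}"]


def compiles_per_hour(elapsed_seconds):
    """Convert one compile's wall-clock time (seconds) to compiles per hour."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return 3600.0 / elapsed_seconds


# Hypothetical example: a 64-thread system finishing one compile in 90 seconds.
example_command = make_command(64)       # ["make", "-j64"]
example_rate = compiles_per_hour(90.0)   # 40.0 compiles per hour
```

Expressing the result as a rate means a higher bar is better, which matches how the other charts in this section read.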

We tried a number of different CPUs in the system, and AMD has a solid range. One thing we did not do was test the AMD EPYC 7702P at only 180W cTDP. AMD does offer higher core count parts, but we are only showing up to 32 cores at 180W.

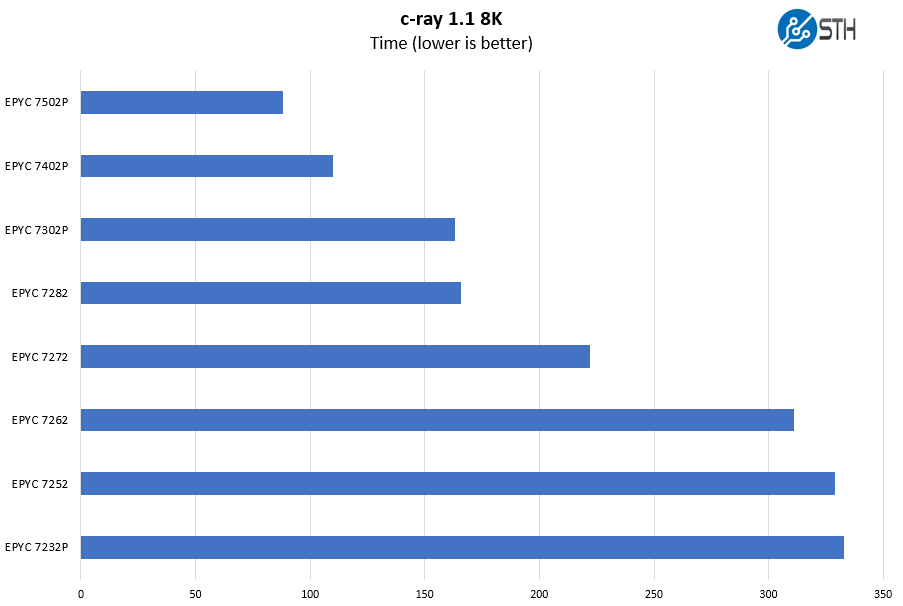

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular for showing differences between processors under multi-threaded workloads. We are going to use our 8K results, which work well at this end of the performance spectrum.

At the lower end, we have the AMD EPYC 7232P. If you are not looking for great performance, but want something more similar to two Intel Xeon E5-2603 V4’s or similar CPUs that were rebranded as the “Bronze” series, then this may be an option.

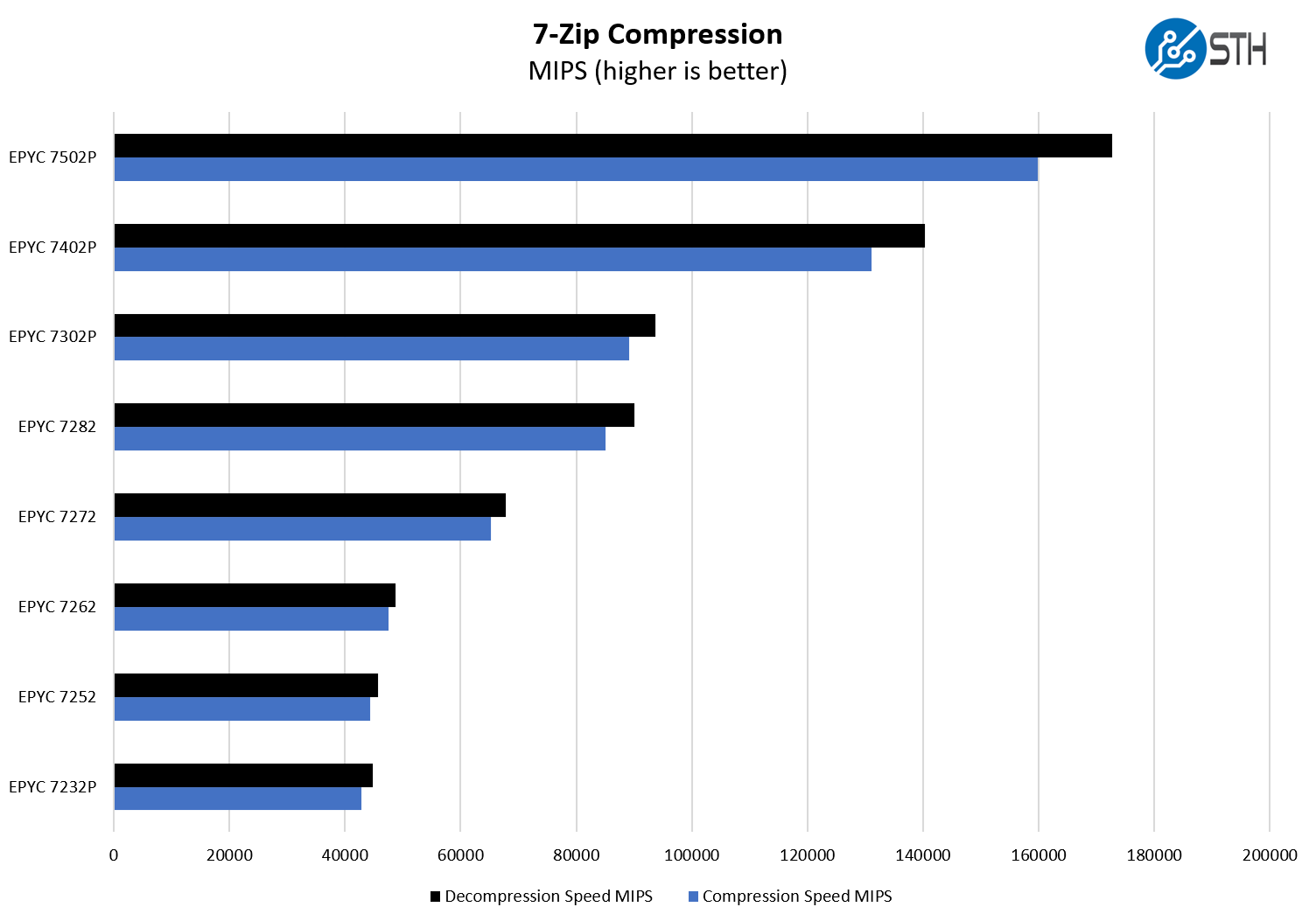

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

The AMD EPYC 7002 line has an extremely compelling option for those looking to get a full 8-channel memory feature set but who also want a lower-cost CPU more akin to two Intel Xeon Silver 4208-4210 CPUs. This is the AMD EPYC 7302P, which is the lowest-end CPU we would recommend in a system like this. A big part of that recommendation comes down to the CPU price relative to the entire system. In this case, the cost of drives will dwarf the few-hundred-dollar premium required to upgrade to the EPYC 7302P from lower-end options.
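To make the "CPU price relative to the entire system" point concrete, here is a minimal sketch of the arithmetic. Every dollar figure and the drive count in it are hypothetical placeholders chosen only for illustration. They are not quotes or prices from this review, so substitute your own numbers.

```python
def cpu_premium_share(cpu_premium, base_system_cost, drive_count, cost_per_drive):
    """Return the CPU upgrade premium as a fraction of total system cost.

    All inputs are in the same currency. Total cost here is simply the
    base system, the CPU premium, and the drives (a simplifying assumption).
    """
    total = base_system_cost + cpu_premium + drive_count * cost_per_drive
    if total <= 0:
        raise ValueError("total system cost must be positive")
    return cpu_premium / total


# Hypothetical placeholder numbers, not pricing from this article.
share = cpu_premium_share(
    cpu_premium=300.0,        # assumed premium for stepping up the CPU
    base_system_cost=3000.0,  # assumed chassis, board, memory, and base CPU
    drive_count=36,           # assumed fully populated drive bays
    cost_per_drive=300.0,     # assumed price per hard drive
)
print(f"CPU premium is {share:.1%} of total system cost")
```

With placeholder numbers like these, the premium works out to a low single-digit percentage of the total, which is the shape of the argument made above.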

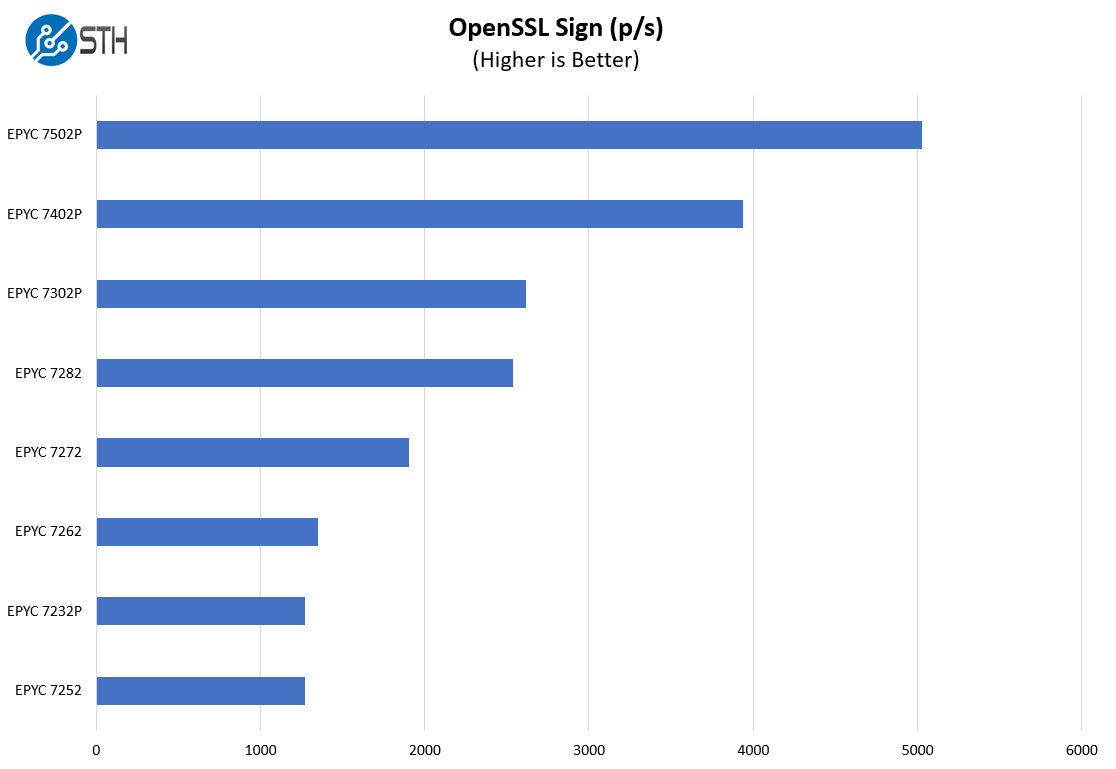

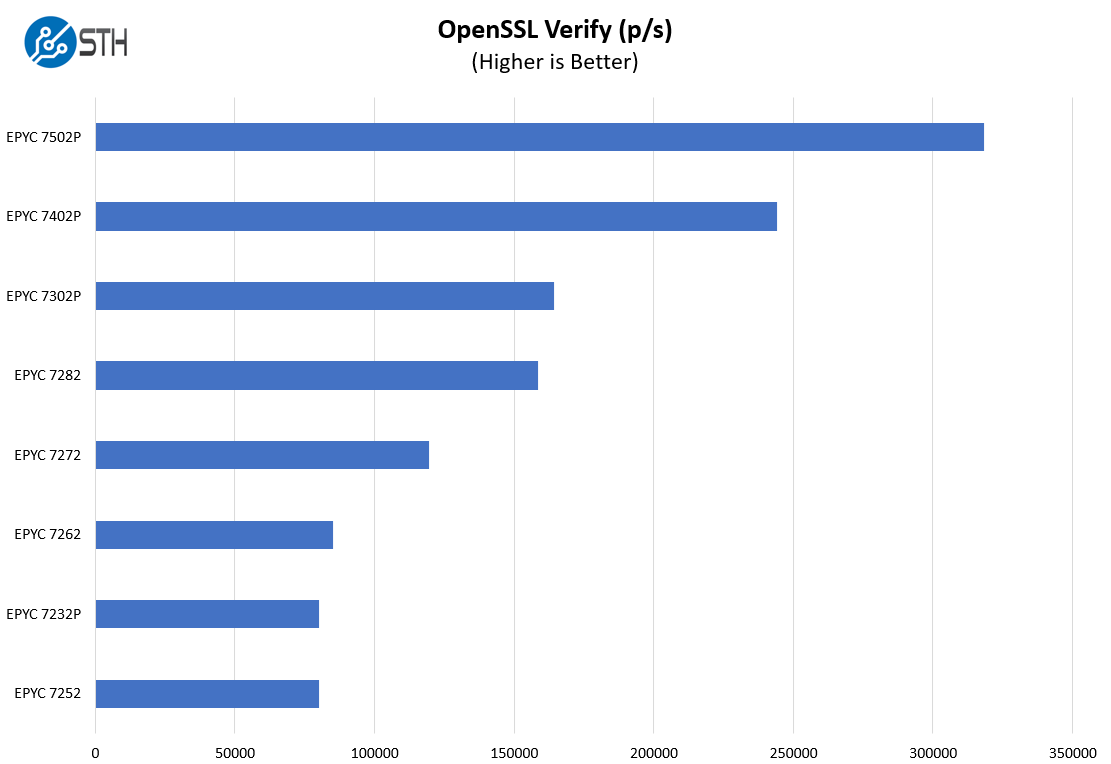

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important component in many server stacks. We first look at our sign tests:

Here are the verify results.

While the AMD EPYC 7402P is perhaps our top choice overall, the AMD EPYC 7502P offers more performance in the 180W TDP range. Having 32 cores makes for a great consolidation case over two older generation Xeon CPUs.

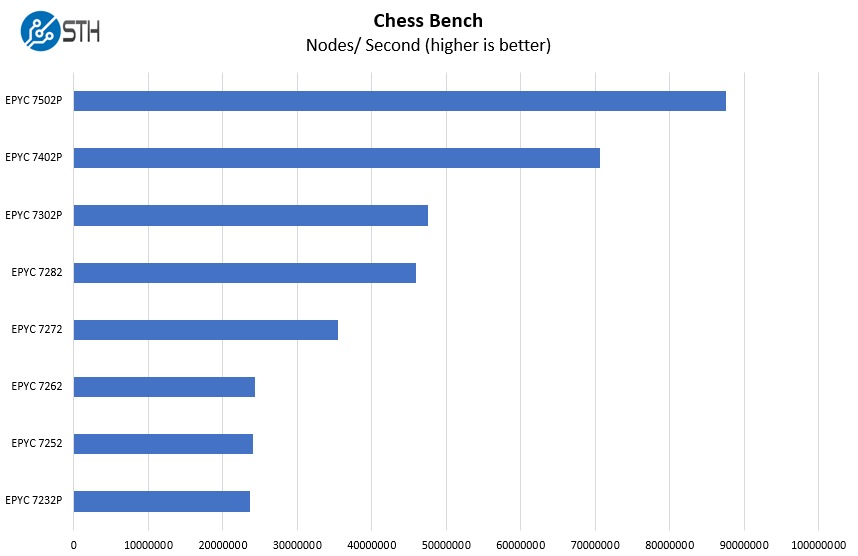

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

Many of the other options on our list in the 8-16 core range are AMD EPYC 7002 Rome CPUs with Half Memory Bandwidth. That is why we originally set up this system with the EPYC 7272, then switched it out when we saw the impacts. This is the type of system that really requires a full 8-channel SKU.

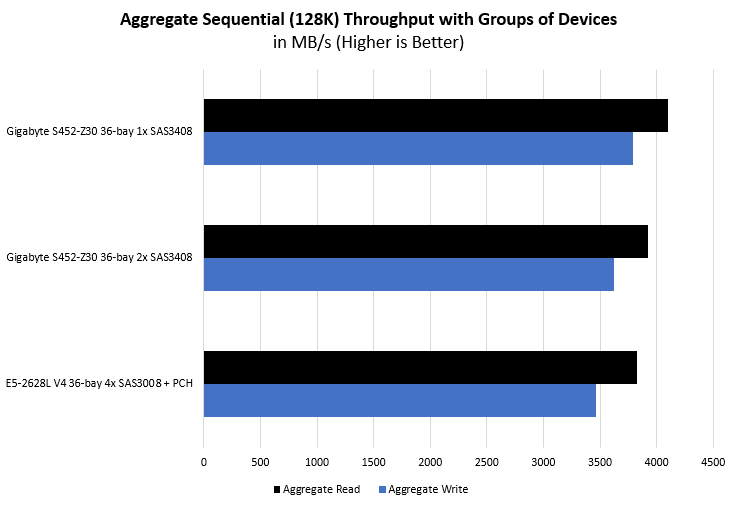

Storage Performance

We wanted to take a look at how this system performed compared to our older generations of 36-bay Xeon E5 based systems. For this test, we are using older 4TB HGST and Seagate SATA hard drives from our older lab system, importing the ZFS array, and then seeing the resulting performance. We wanted to keep the disks constant, even though newer/larger disks should be faster and offer more capacity. Using larger disks on the newer system is more realistic; however, we wanted to test the hypothesis that this was as fast, if not faster, than our older generation Xeon E5 V4 36-bay server.

What is interesting is that we got slightly better performance here than on our previous generation systems. We have a faster and more modern processor with a more modern SAS controller and SAS expander, but we are using half as many controllers (plus no PCH SATA) and half the number of CPUs as in our older generation Xeon E5-2628L V4 systems, with the same number of cores and memory channels. Our hypothesis is that the upgraded SAS infrastructure, as well as the single NUMA node configuration, is helping significantly with this.

When building your own storage servers, there are a lot of snags one can run into from the hardware and software sides. We did not expect to need to change wiring and use two HBAs but we got significantly better performance in that configuration.

Next, we are going to move to our power consumption, server spider, and final words.

Almost ordered 3 of these systems today; unfortunately, it just missed the mark for us. It would have been a home run if someone took out 6 of the 3.5” drive bays from the back, moved the power supplies down to where ~3 drives were, and put between 6 and 8 NVMe drives where the other 3 drives were. That would free up room in the top 2U for a motherboard with 2 sockets and 32 DIMMs. With that you could have tiered storage, or VMs that could include NVR duties, with possible room to add accelerator cards for video AI. Would be a branch office powerhouse, just add networking.

Something I think is an interesting use case is utilizing the PCIe slots for NVMe storage whether that is an internal card or an external chassis.

@Patrick: Thoughts of using this as a high-performance TrueNAS Core appliance with a Gigabyte CSA4648 (Broadcom SAS3008 in IT Mode) HBA?

We did not get to test FreeNAS. Proxmox VE and, soon, TrueNAS Scale are based on Linux.