Gigabyte S452-Z30 Internal Overview

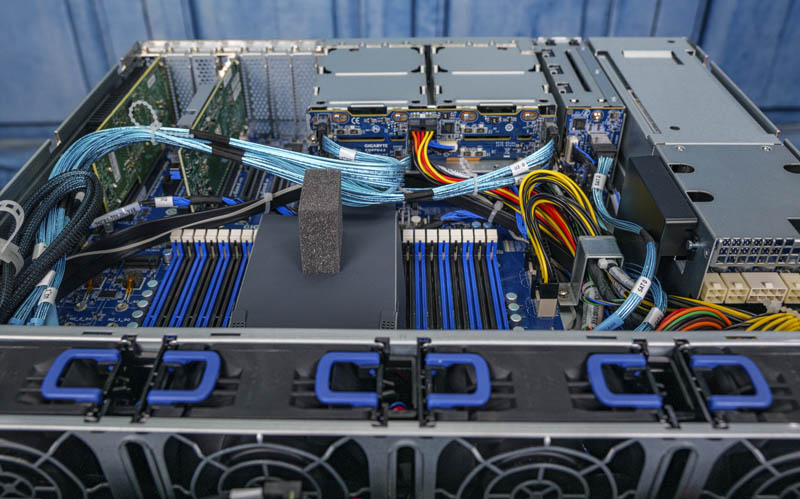

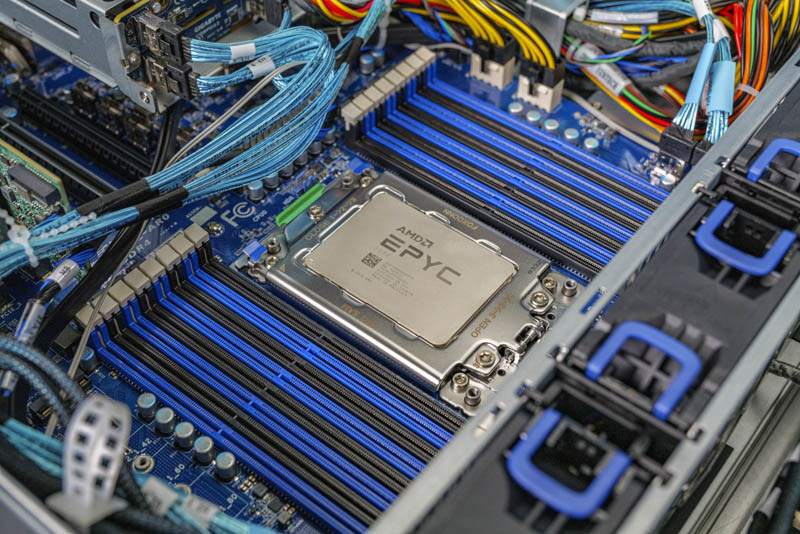

We are going to do this section a bit out of order from our standard front-to-back view. Instead, we wanted to focus on the motherboard in this system, the MZ32-AR0. This is the same motherboard we saw in the Gigabyte R272-Z32 Review. Gigabyte is using this standard single-socket AMD EPYC 7002 motherboard to power a number of different form factors.

With the AMD EPYC 7002 series, we can see the EPYC chips scale to 280W. Given the number of components in the system, the S452-Z30 can only handle CPUs with up to 180W cTDP. Still, that provides up to 64 cores of compute capacity. One can augment the AMD EPYC CPU with up to 16x DIMMs. Gigabyte supports up to 128GB RDIMMs/LRDIMMs, which means there is a maximum of 2TB of memory capacity in this system. For those upgrading from dual Intel Xeon E5 series machines, there are still 8 channels of memory, and the new AMD EPYC chips can consolidate any Xeon E5-era dual-socket core count into a single-socket platform.
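As a quick sanity check on the memory figures above, here is a minimal sketch of the arithmetic. The constant and function names are ours, purely for illustration; the numbers (16 DIMM slots, 128GB modules, 8 channels) come from the paragraph above.

```python
# Figures quoted in the text; the names are illustrative, not from any vendor tool.
DIMM_SLOTS = 16        # DIMM slots on the MZ32-AR0
MAX_DIMM_GB = 128      # largest supported RDIMM/LRDIMM
MEMORY_CHANNELS = 8    # memory channels on the single EPYC socket


def max_memory_gb(slots: int = DIMM_SLOTS, dimm_gb: int = MAX_DIMM_GB) -> int:
    """Total capacity with every slot filled with the largest module."""
    return slots * dimm_gb


def dimms_per_channel(slots: int = DIMM_SLOTS, channels: int = MEMORY_CHANNELS) -> int:
    """How many DIMMs share each memory channel when all slots are used."""
    return slots // channels


if __name__ == "__main__":
    total = max_memory_gb()
    print(f"{total} GB = {total / 1024:g} TB, {dimms_per_channel()} DIMMs per channel")
```

Filling all 16 slots means running two DIMMs per channel, which is how the platform gets to 2TB on a single socket.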

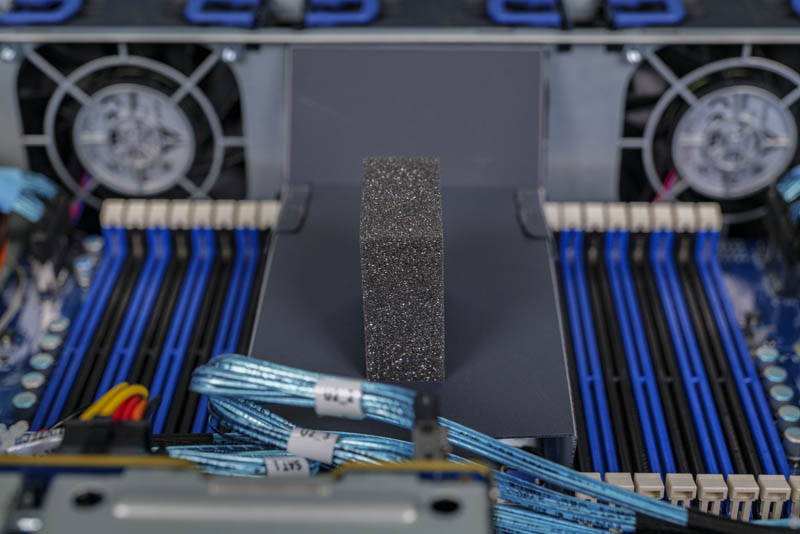

Something that is interesting in the 4U S452-Z30 is that the system is using a 1U heatsink. This is actually a copper base plus vapor chamber solution which is higher-end than many of the aluminum block heatsinks we have seen.

Since the server section is a 2U segment, Gigabyte must duct air over the CPU heatsink. That duct has to narrow from 2U at the fan partition to 1U at the heatsink. As a result, there is a lot of empty space above the duct. To prevent flapping, Gigabyte has given the duct a “mohawk” of foam to keep it in place. We generally prefer just using more robust ducting, but this was a unique solution.

The Gigabyte MZ32-AR0 motherboard is exciting beyond the single AMD EPYC CPU socket. We get three PCIe Gen4 x16 slots and a Gen4 x8 slot. There are also two switched PCIe Gen3 slots: one is a x16 slot that can run in x16 mode or be bifurcated to enable the second slot in a x8/x8 configuration. In the Gigabyte R272-Z32, these PCIe slots were mostly occupied. In this system, since we have hard drives, we can use them for expansion.

In terms of expansion, one of these slots is going to be used by a SAS3 controller. The 36 SAS bays eventually terminate in a pair of SFF-8643 cable ends. As a result, one can use a SAS controller in the PCIe Gen3 x8 slot and get a second slot for either a second card or another device.

Doing so leaves the PCIe Gen4 slots open. In the 2020s, it likely makes sense to just use a 100GbE adapter for this type of system. A dual 25GbE adapter or dual 50GbE adapter can handle the hard drive bandwidth. However, NVIDIA (Mellanox), Broadcom, and others have been in production with multiple generations of 100GbE NICs, and Intel’s Columbiaville (Intel Ethernet 800 Series) 100GbE NICs are now in production as well (we have a Supermicro dual 100GbE PCIe Gen4 Intel Ethernet 800 series card installed here). Given 100GbE NIC price trends, it makes sense to simply move to 100GbE with these systems.
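To put rough numbers on the NIC sizing question, here is a back-of-the-envelope sketch. The per-drive throughput is an assumption on our part (150MB/s is a hypothetical sustained average, not a measured figure from this review), and the function names are illustrative. Swap in numbers for your own drives and workload.

```python
# NIC options discussed in the text, as aggregate line rate in Gbps.
NIC_OPTIONS_GBPS = {
    "dual 25GbE": 50,
    "dual 50GbE": 100,
    "100GbE": 100,
}


def aggregate_hdd_gbps(drives: int, mb_per_s_per_drive: float) -> float:
    """Aggregate drive throughput in Gbps (decimal units, no protocol overhead)."""
    return drives * mb_per_s_per_drive * 8 / 1000


def nics_that_fit(required_gbps: float) -> list[str]:
    """NIC options whose raw line rate covers the required bandwidth."""
    return [name for name, cap in NIC_OPTIONS_GBPS.items() if cap >= required_gbps]


if __name__ == "__main__":
    # ASSUMPTION: 150 MB/s per drive is a placeholder, not a tested value.
    need = aggregate_hdd_gbps(drives=36, mb_per_s_per_drive=150)
    print(f"36 drives need about {need:.1f} Gbps: {nics_that_fit(need)}")
```

Under that assumed drive speed the 36 bays land a bit under dual 25GbE line rate. Faster drives or NVMe cache traffic push the requirement up quickly, which is part of why simply fitting 100GbE is attractive.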

Beyond these cards, under the four NVMe drive bays, there is an OCP NIC 2.0 slot with a PCIe Gen3 x16 connection. It is great that Gigabyte is including this standard form factor. At the same time, we wish this was an OCP NIC 3.0 slot with PCIe Gen4. The OCP NIC 3.0 slot is not just designed for faster networking cards, but it is more easily serviceable. Getting to the OCP NIC 2.0 slot takes some work in this system.

While that may seem like a lot of expansion, there is actually more. There are also two M.2 NVMe SSD slots. These can take up to M.2 2280 SSDs and are rated for PCIe Gen3 speeds. They are an option if you do not want SATA boot drives or if you simply want to add more NVMe cache devices.

Taking a moment here, one can also imagine using the PCIe Gen4 slots to connect more drive shelves or additional NVMe storage, which makes the expansion story here quite unique.

Moving beyond the motherboard, we have the fans. One can see the four 80mm hot-swap fans. These are easy to service. Underneath these fans, there are also four more fans. Getting to these four, even though they are hot-swap, means disassembly. This is a common design challenge in this type of system and a common solution. Another option we have seen is stacking two fans together in an assembly so they can both be removed from the top. Fans are so reliable these days we have pondered “Are Hot-Swap Fans in Servers Still Required?” At the same time, if one does fail, it can potentially take over half a petabyte of storage offline in this system.

The storage itself connects to the motherboard using the two previously mentioned SFF-8643 cables. These go into the backplane that is responsible for the 24x 3.5″ front drive bays. On that backplane we have a Broadcom SAS35x48 SAS3 expander. The SAS35x48 is a 48-port SAS expander. With 8x SAS3 lanes going from the host to the SAS expander, that leaves 40 lanes for devices. 36 of those lanes are being used for the front and rear drive bays. Another feature of the SAS35x48 is that it has Broadcom DataBolt. DataBolt lets the SAS expander run its 8x SAS3 lanes back to the host at 12Gbps, as is done in the S452-Z30, even when the attached devices are slower. Devices can be 3Gbps or 6Gbps, such as SATA III drives, and still utilize the faster back-haul to the server.
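The lane budget above is simple enough to sketch out. The port, lane, and bay counts come from the text; the names are ours, and the bandwidth figures are raw line rates that ignore encoding and protocol overhead.

```python
# Counts quoted in the text; names are illustrative only.
EXPANDER_PORTS = 48   # Broadcom SAS35x48
HOST_LANES = 8        # two SFF-8643 cables, 4 lanes each
DRIVE_BAYS = 36       # front plus rear 3.5" bays
SAS3_GBPS = 12        # raw line rate per SAS3 lane


def device_lanes_available() -> int:
    """Expander ports left for devices after the host uplink is wired."""
    return EXPANDER_PORTS - HOST_LANES


def spare_device_lanes() -> int:
    """Device-side ports not consumed by drive bays."""
    return device_lanes_available() - DRIVE_BAYS


def host_uplink_gbps() -> int:
    """Raw uplink bandwidth from the expander to the HBA."""
    return HOST_LANES * SAS3_GBPS


def oversubscription(device_gbps: float) -> float:
    """Ratio of total device link rate to host uplink rate."""
    return DRIVE_BAYS * device_gbps / host_uplink_gbps()


if __name__ == "__main__":
    print(f"device lanes: {device_lanes_available()}, spare: {spare_device_lanes()}")
    print(f"host uplink: {host_uplink_gbps()} Gbps raw")
    print(f"oversubscription with 6Gbps drives: {oversubscription(6):.2f}:1")
```

The link-rate oversubscription looks high on paper, but hard drives sustain far less than their 6Gbps link rate, so the 8-lane uplink is a reasonable fit for 36 spinning disks.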

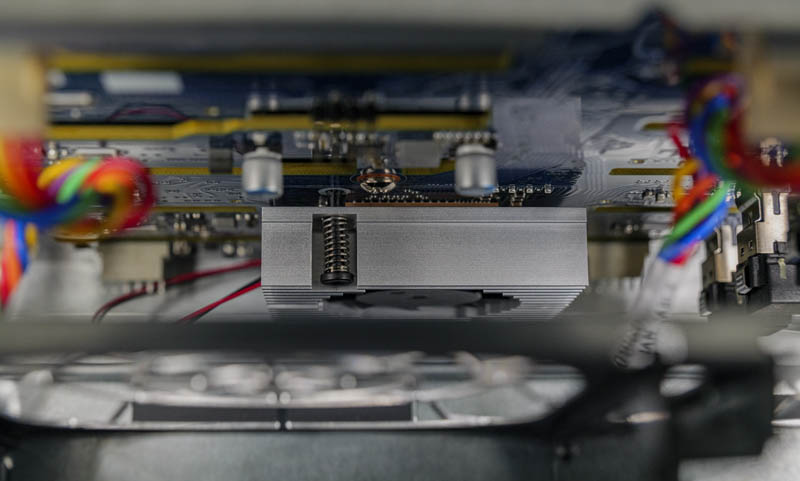

In the S452-Z30, the Broadcom SAS35x48 has a feature we normally would not expect. The SAS expander has an active fan on the heatsink. Again, fans are generally reliable but we normally prefer passive heatsinks that are cooled by chassis fans. Given the proximity to the chassis fans, we are a bit surprised Gigabyte needed an active solution here.

Next, we are going to take a look at our block diagram, test configuration, and management before getting to our performance, power consumption, and final words.

Almost ordered 3 of these systems today, unfortunately it just missed the mark for us. It would have been a home run if someone took out 6 of the 3.5” drive bays from the back, moved the power supplies down to where ~3 drives were and put between 6 to 8 NVMe where the other 3 drives were. That would free up room in the top 2U for a motherboard with 2 sockets and 32 DIMMs. With that you could have tiered storage, or VMs that could include NVR duties with possible room to add accelerator cards for video AI. Would be a branch office powerhouse, just add networking.

Something I think is an interesting use case is utilizing the PCIe slots for NVMe storage whether that is an internal card or an external chassis.

@Patrick: Thoughts of using this as a high-performance TrueNAS Core appliance with a Gigabyte CSA4648 (Broadcom SAS3008 in IT Mode) HBA?

We did not get to test FreeNAS. Proxmox VE and, soon, TrueNAS Scale are based on Linux.