Gigabyte R282-N80 Internal Overview

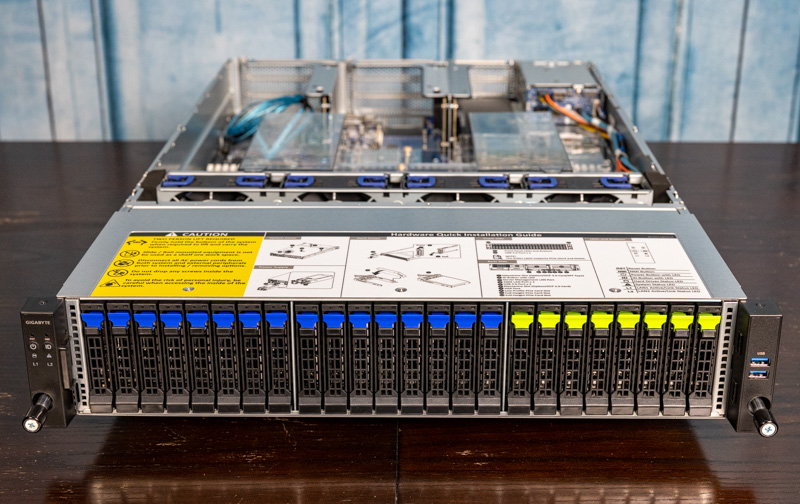

We are just going to show the front view to orient our readers here. We have already discussed the drives and the rear risers, so we are going to start our internal overview from the fan partition and work towards the back of the chassis.

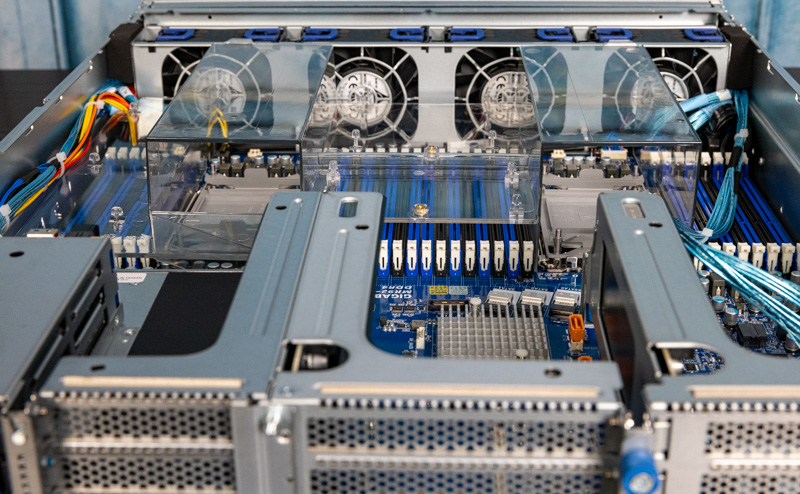

For cooling, we get four 80mm 16,300rpm fans. These are hot-swappable and use Gigabyte’s carriers to help them slot into the chassis.

These fans have airflow directed through the chassis via a clear airflow guide. Two fans cool each CPU but the DIMMs and other components only have one fan cooling them.

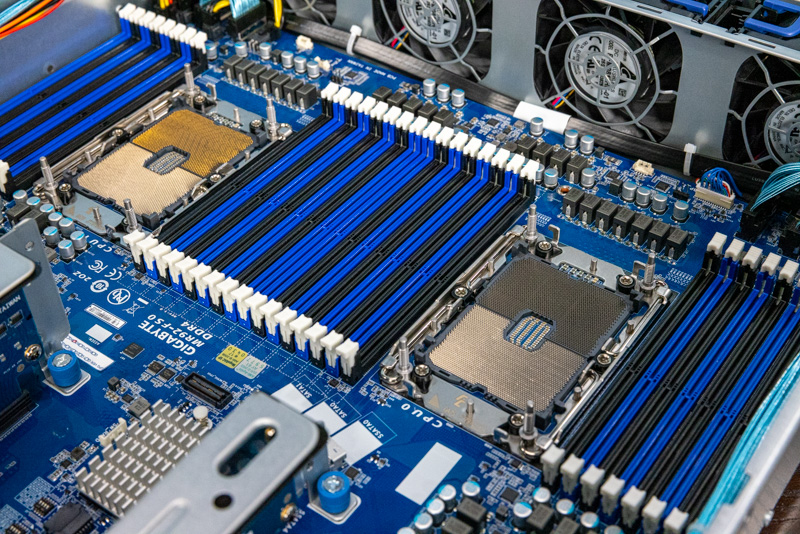

The CPU sockets are LGA4189, specifically for 3rd generation Intel Xeon Scalable Ice Lake processors, not Cooper Lake. Gigabyte supports CPUs up to 270W TDP, such as the Platinum 8380. Each CPU has 8-channel memory, up from 6-channel in the previous generation. With two DIMMs per channel, that gives us 16 DIMMs per CPU, or 32 DIMMs total in this platform, up from 24 in the previous generation. The result is more memory bandwidth, especially with DDR4-3200, as well as more memory capacity.
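To put rough numbers on the channel-count change, here is a minimal back-of-the-envelope sketch. It assumes two DIMMs per channel, a 64-bit (8-byte) data bus per DDR4 channel, and DDR4-2933 for the previous 6-channel generation; the DDR4-2933 figure and the helper names are our assumptions for illustration, not measurements from this system. These are theoretical peaks, so real-world bandwidth will be lower.

```python
# Back-of-the-envelope memory figures for a dual-socket platform.
# Assumptions (illustrative, not measured on this server):
#   - 2 DIMMs per channel
#   - 8 bytes (64 bits) transferred per channel per memory transfer
#   - DDR4-2933 on the previous 6-channel generation


def dimm_slots(channels: int, dimms_per_channel: int = 2, sockets: int = 2) -> int:
    """Total DIMM slots across all sockets."""
    return channels * dimms_per_channel * sockets


def peak_bandwidth_gbs(channels: int, mega_transfers: int, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth per socket in GB/s (MT/s * bytes / 1000)."""
    return channels * mega_transfers * bus_bytes / 1000


ice_lake_slots = dimm_slots(channels=8)   # 8 channels x 2 DIMMs x 2 sockets
previous_slots = dimm_slots(channels=6)   # 6 channels x 2 DIMMs x 2 sockets

ice_lake_bw = peak_bandwidth_gbs(channels=8, mega_transfers=3200)
previous_bw = peak_bandwidth_gbs(channels=6, mega_transfers=2933)

print(f"DIMM slots: {previous_slots} -> {ice_lake_slots}")
print(f"Peak GB/s per socket: {previous_bw:.1f} -> {ice_lake_bw:.1f}")
```

Under those assumptions, the per-socket theoretical peak grows by roughly 45%, with both the extra channels and the faster DIMM speed contributing.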

Each CPU gets more memory capacity in another way as well. Intel does not have the M and L high-memory SKUs in the Ice Lake Xeon generation. That means one can use higher capacity DIMMs and Optane DIMMs without having to spend more for a higher-memory SKU. In this generation, Intel also supports its PMem 200 series. We did a piece on the Glorious Complexity of Intel Optane DIMMs if you want to learn more about how Optane works.

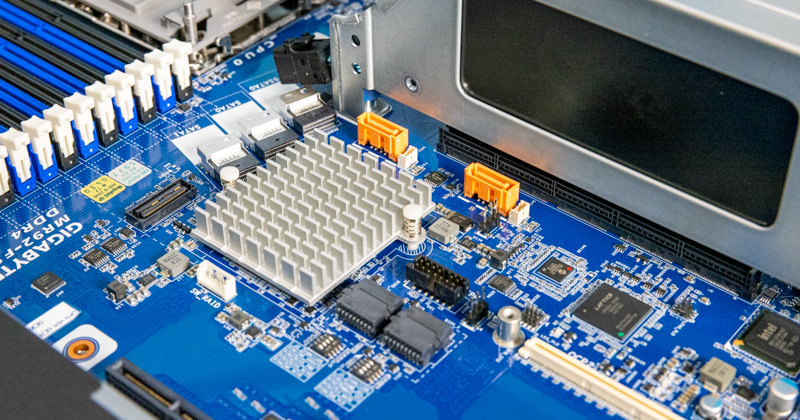

This is the heatsink. It was in our set of photos and looked interesting, so we are including it here.

If you want to learn how to install an LGA4189 CPU and heatsink, we did a video on that using another Gigabyte platform with Cooper Lake, but the methodology is the same.

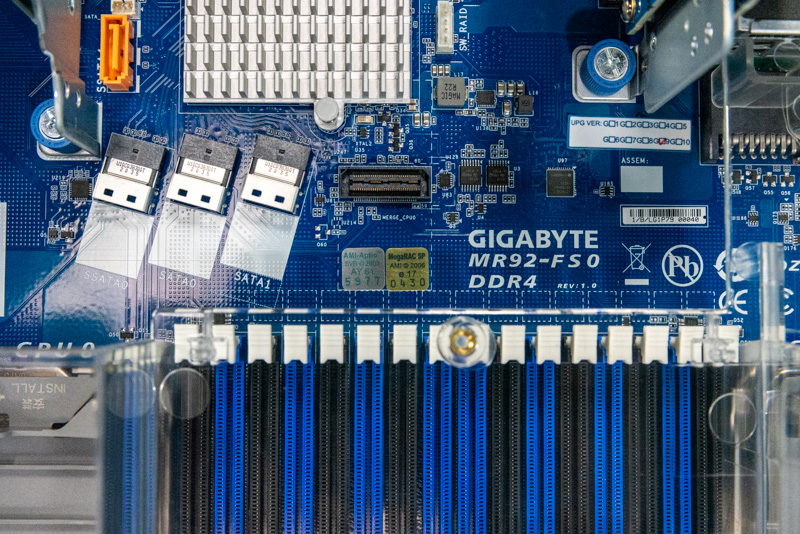

The motherboard itself is the Gigabyte MR92-FS0, if you want to look up its specs.

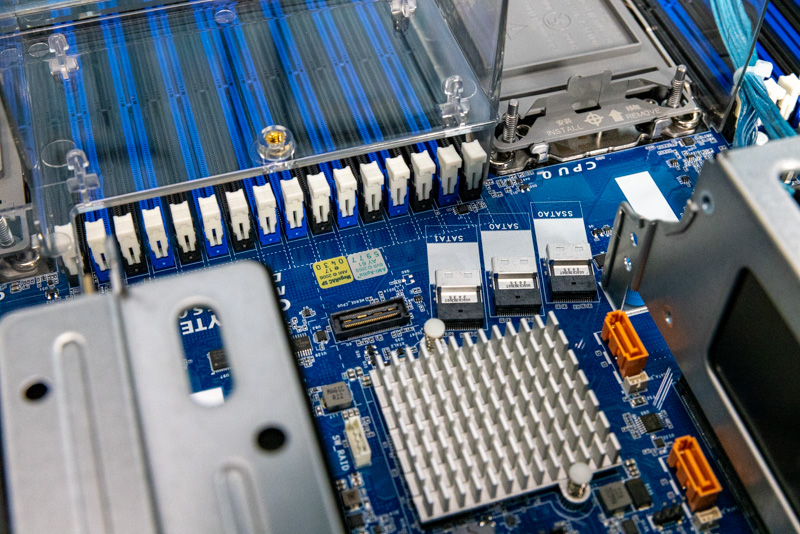

Behind the CPU and memory area, we can see an array of three SlimSAS SATA connectors. Together, these provide twelve SATA III ports (four per connector) when connected.

Behind this, there is a heatsink for the Intel C621A Lewisburg refresh PCH as well as two powered SATA DOM connectors.

Gigabyte also has PCIe I/O on the front of the motherboard that is used for the drive bays.

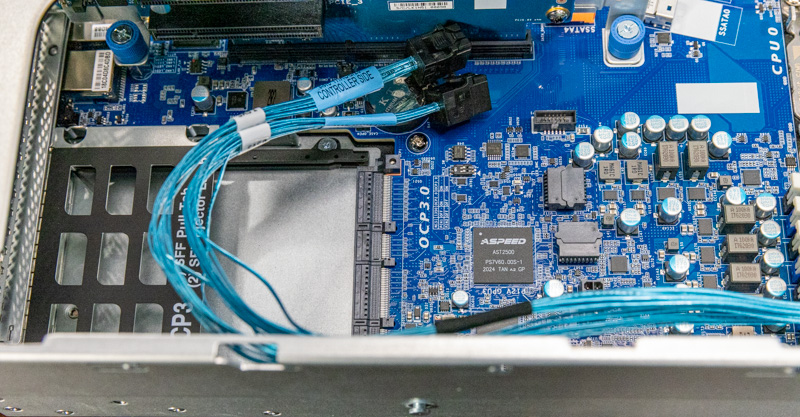

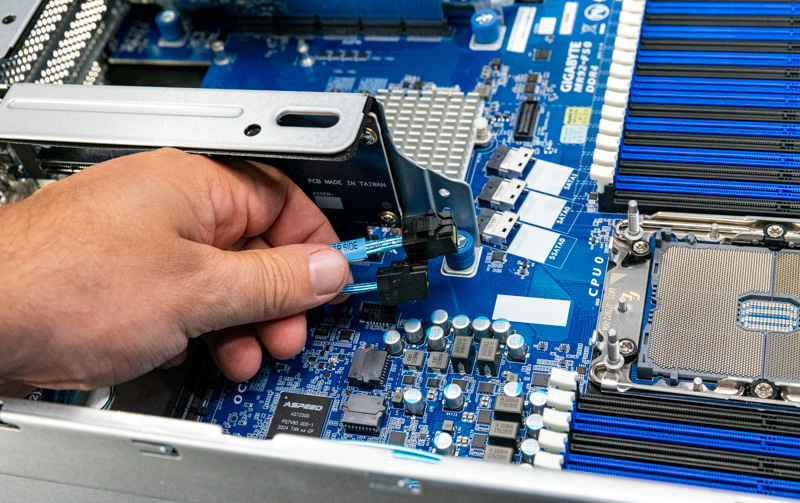

Something that is a bit strange is that the front SAS expander is not connected by default. Instead, we have two SFF-8643 cables that await the installation of a RAID card or SAS HBA.

This server requires a SAS3 RAID controller or HBA because the cables leading to the SAS3 expander use different connectors than the onboard SATA ports. This strands the motherboard's extra SATA capacity, but that is a consequence of this SKU specifically shipping with the SAS expander, so it is a configuration choice. We just wanted to point it out so our readers are aware.

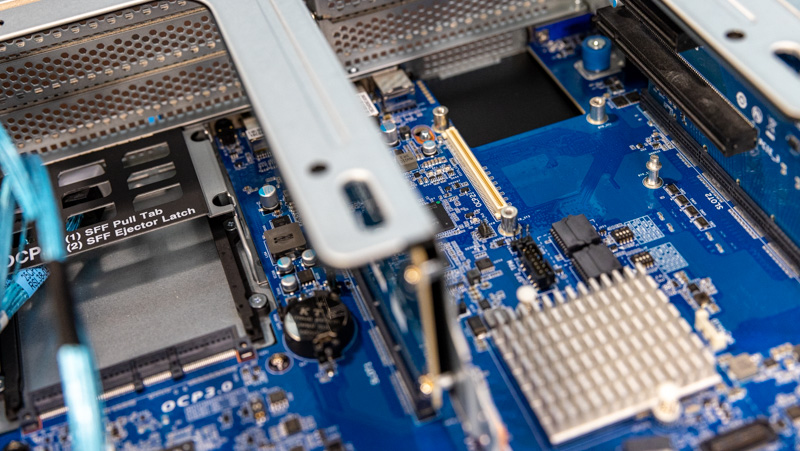

We discussed the risers earlier in this review, but there is also additional connectivity in the rear. Specifically, there is an OCP NIC 2.0 slot in the middle of the motherboard between the VGA and USB ports. This shares its eight lanes with the middle PCIe riser.

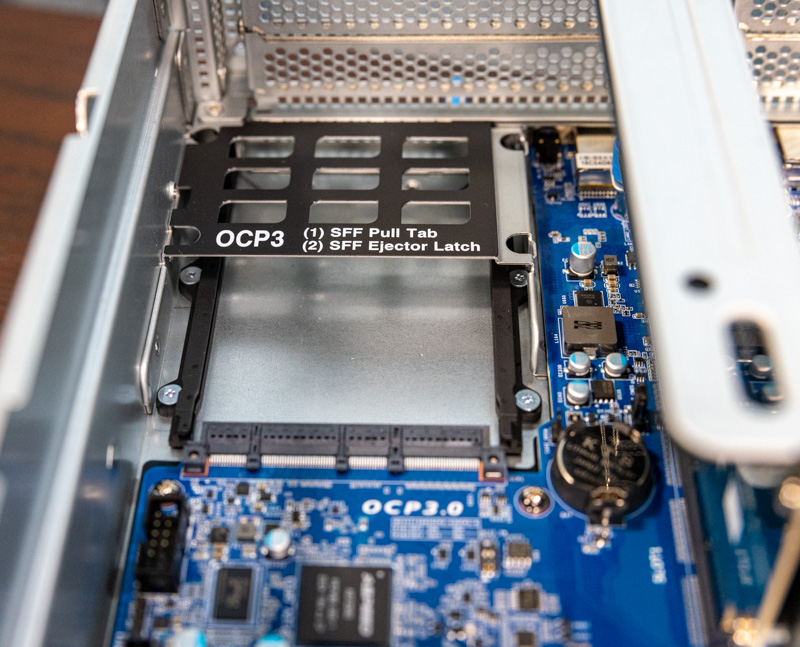

The OCP NIC 3.0 slot at the rear of the system adds another dimension to the rear I/O networking. Gigabyte dedicates a full PCIe Gen4 x16 link to this slot for 100GbE/200GbE NICs. Some servers, even six-figure systems from the top two OEMs, provide only x8 to their OCP slots, which limits their usefulness.
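To illustrate why the x16 link matters for 200GbE, here is a rough sketch of the arithmetic. It uses the PCIe Gen4 signaling rate of 16 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so usable throughput is somewhat lower than shown. The function names are hypothetical, and we assume the x8 slots being compared are also Gen4; a Gen3 x8 slot would have about half of this.

```python
# Rough PCIe Gen4 link-rate check against NIC line rates.
# Ignores TLP/DLLP protocol overhead; figures are per direction.

GEN4_GT_PER_LANE = 16.0           # PCIe Gen4 raw signaling rate per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def pcie_gen4_gbps(lanes: int) -> float:
    """Approximate per-direction link rate in Gb/s after line encoding."""
    return lanes * GEN4_GT_PER_LANE * ENCODING_EFFICIENCY


def link_can_feed(lanes: int, nic_gbps: float) -> bool:
    """True if the link rate is at least the NIC's total line rate."""
    return pcie_gen4_gbps(lanes) >= nic_gbps


for lanes in (8, 16):
    rate = pcie_gen4_gbps(lanes)
    print(
        f"Gen4 x{lanes}: ~{rate:.0f} Gb/s, "
        f"100GbE ok: {link_can_feed(lanes, 100)}, "
        f"200GbE ok: {link_can_feed(lanes, 200)}"
    )
```

By this estimate, a Gen4 x8 link tops out at roughly 126 Gb/s, enough for a single 100GbE port but not for 200GbE, while x16 has headroom for 200Gb/s of total NIC bandwidth.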

Overall, there are a lot of great features in this server.

Let us continue with the block diagram, management, and performance.

It’s interesting to see that passive risers are still possible with Gen 4. Not much of that to see in the consumer space.

I’m guessing they’ve only managed it because they’re using an exotic, low loss, perfect impedance (surface mount by the looks of it?) connector between the riser and the motherboard.