Gigabyte R272-Z32 Performance

For this exercise, we are using our legacy Linux-Bench scripts, which give us the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts. Starting with our 2nd Generation Intel Xeon Scalable benchmarks, we are adding a number of our workload testing features to the mix as the next evolution of our platform.

At this point, our benchmarking sessions take days to run and we are generating well over a thousand data points. We are also running workloads for software companies that want to see how their software works on the latest hardware. As a result, this is a small sample of the data we are collecting and can share publicly. Our position is always that we are happy to provide some free data but we also have services to let companies run their own workloads in our lab, such as with our DemoEval service. What we do provide is an extremely controlled environment where we know every step is exactly the same and each run is done in a real-world data center, not a test bench.

We are going to show off a few results, and highlight a number of interesting data points in this article.

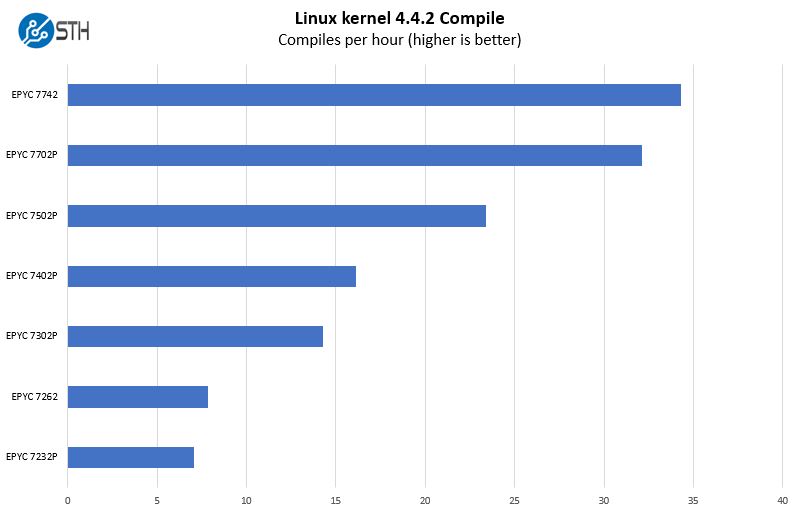

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task was simple: we have a standard configuration file and the Linux 4.4.2 kernel from kernel.org, and we make the standard auto-generated configuration utilizing every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read:
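As a rough illustration of the compiles-per-hour unit, here is a minimal sketch of the conversion from a single compile's wall-clock time. The function name and the sample timings are hypothetical and are not taken from the Linux-Bench scripts or from the results in this review.

```python
def compiles_per_hour(compile_seconds: float) -> float:
    """Convert the wall-clock time of one kernel compile into compiles per hour."""
    if compile_seconds <= 0:
        raise ValueError("compile_seconds must be positive")
    return 3600.0 / compile_seconds


# Hypothetical timings for illustration only, not measured results.
for seconds in (120.0, 60.0, 45.0):
    rate = compiles_per_hour(seconds)
    print(f"{seconds:6.1f} s per compile -> {rate:5.1f} compiles/hour")
```

Expressed this way, a higher number is better, which keeps the chart consistent with throughput-style benchmarks.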

We are seeing some other platforms that are more suited to lower-end AMD EPYC offerings. With a full set of 16 DIMM slots and all of the storage I/O, we think that the AMD EPYC 7702P, EPYC 7502P, and EPYC 7402P will be popular in the Gigabyte R272-Z32. Gigabyte’s platform allows one to fully utilize the feature set of these AMD CPUs to use a lot of cores, RAM, and I/O.

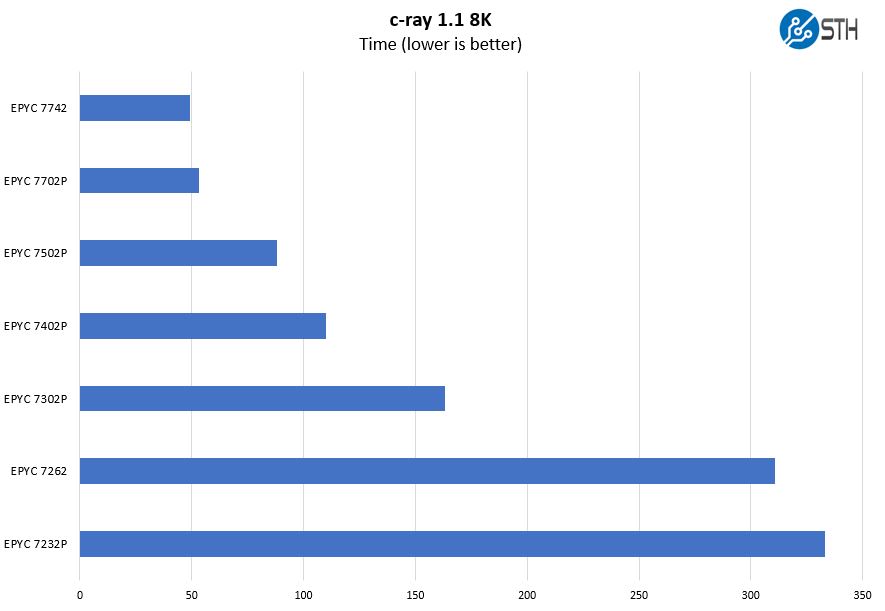

c-ray 1.1 Performance

We have been using c-ray for our performance testing for years now. It is a ray tracing benchmark that is extremely popular to show differences in processors under multi-threaded workloads. We are going to use our 8K results which work well at this end of the performance spectrum.

We wanted to take a quick pause and note the enormous performance delta that these processor options have. They range from an 8 core $450 CPU to a 64 core $7000 CPU.
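To put that range in per-core terms, here is a small sketch using only the approximate prices and core counts quoted above. The helper name is hypothetical, and the prices are the rounded figures from the text rather than exact list prices.

```python
def price_per_core(price_usd: float, cores: int) -> float:
    """Return the approximate price paid per core for a CPU."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return price_usd / cores


# Rounded figures quoted in the text: (label, price in USD, core count).
options = [
    ("8 core, ~$450", 450, 8),
    ("64 core, ~$7000", 7000, 64),
]

for label, price, cores in options:
    print(f"{label}: ${price_per_core(price, cores):.2f} per core")
```

This only compares price per core. It says nothing about per-core performance, clock speeds, or platform cost.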

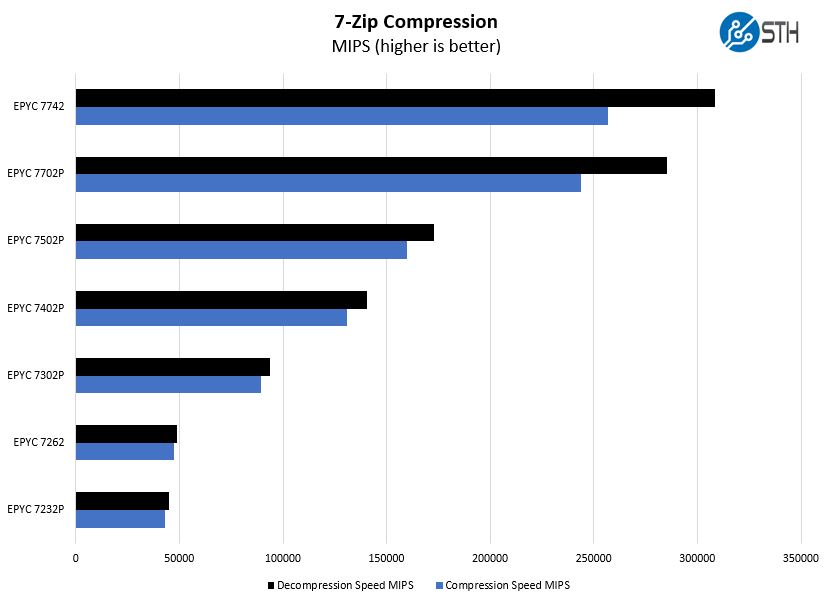

7-zip Compression Performance

7-zip is a widely used compression/decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

As some perspective here, the Intel Xeon Platinum 8280 and Platinum 8276L SKUs we tested will fall between the 24 core AMD EPYC 7402P ($1250) and the AMD EPYC 7502P ($2300). You will need two Intel Xeon CPUs to run a 24-bay NVMe system like the Gigabyte R272-Z32.

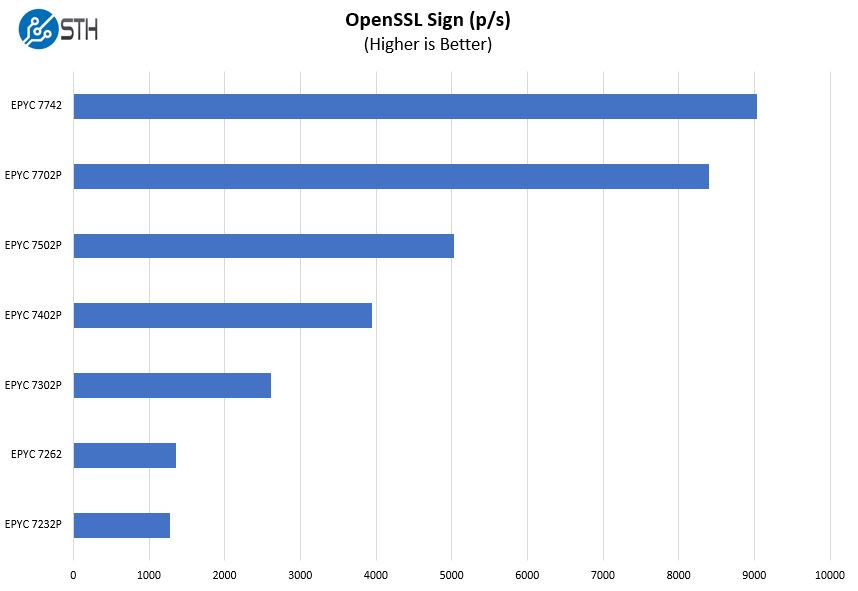

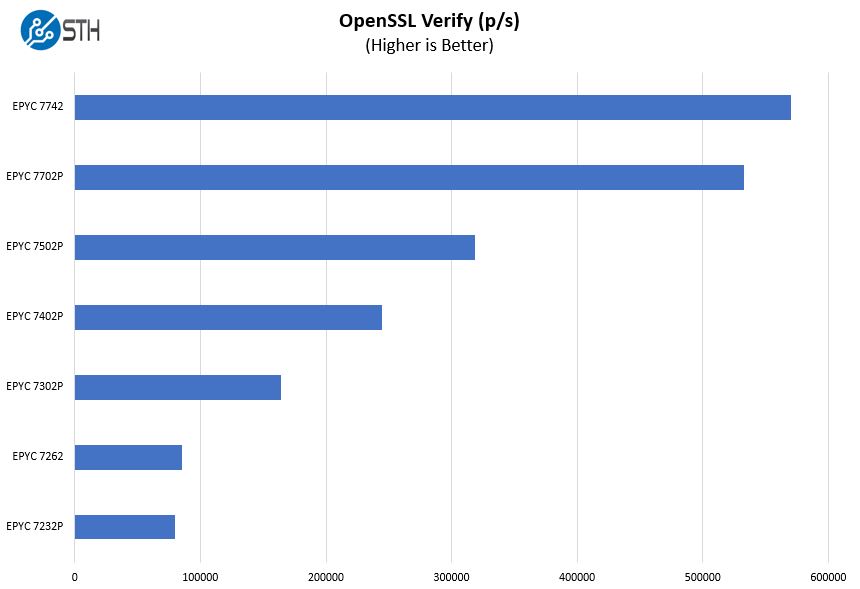

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important protocol in many server stacks. We first look at our sign tests:

Here are the verify results:

With the AMD EPYC 7002 series, AMD again is pushing its single-socket value proposition by offering “P” SKUs. These “P” SKUs offer a substantial discount over non-P parts and as a result, we see the AMD EPYC 7702P being a much more common option in the Gigabyte R272-Z32 than the EPYC 7742.

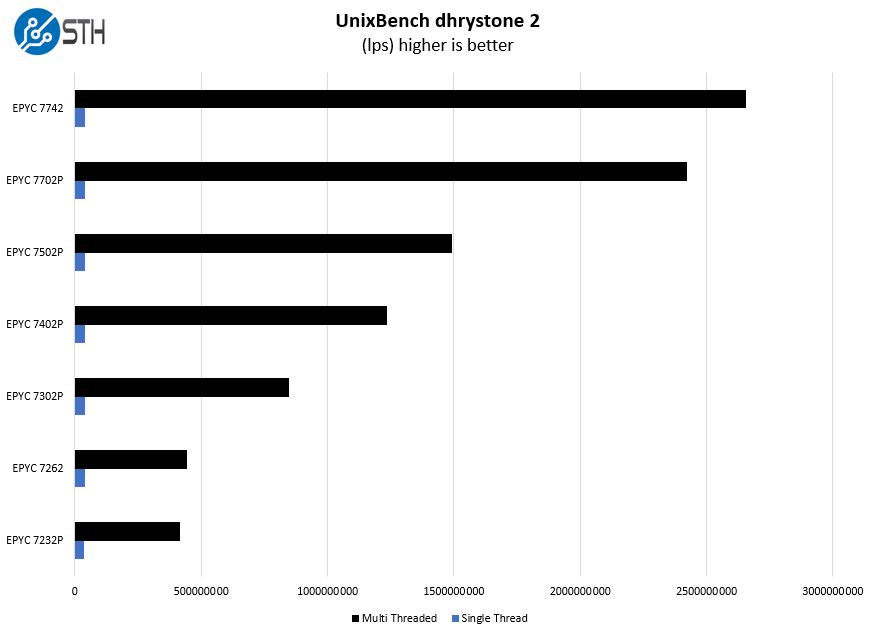

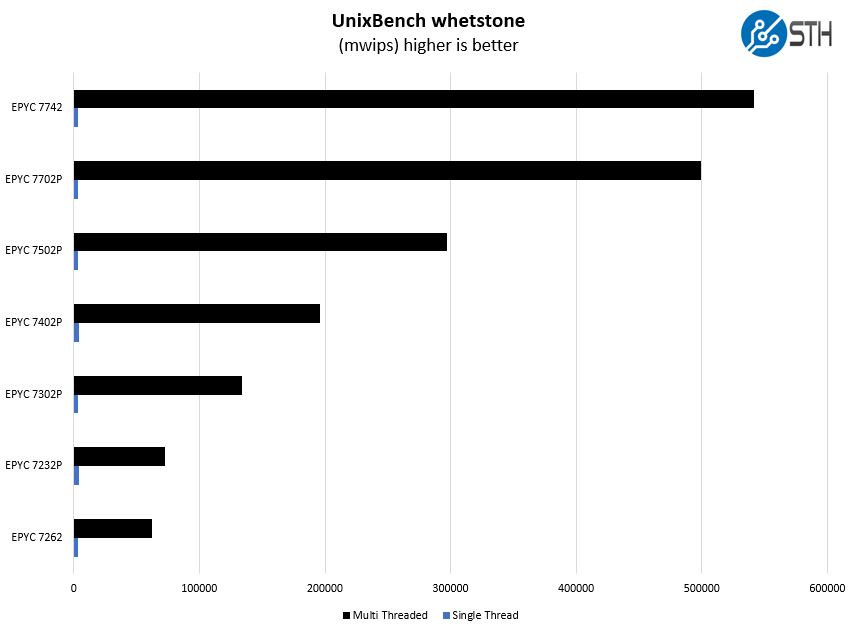

UnixBench Dhrystone 2 and Whetstone Benchmarks

Some of the longest-running tests at STH are the venerable UnixBench 5.1.3 Dhrystone 2 and Whetstone results. They are certainly aging; however, we constantly get requests for them, and many angry notes when we leave them out. UnixBench is widely used so we are including it in this data set. Here are the Dhrystone 2 results:

Here are the Whetstone results:

From a raw CPU performance perspective, the single AMD EPYC 7702P and EPYC 7742 configurations are competitive with dual Intel Xeon Platinum 8280/Platinum 8276 systems.

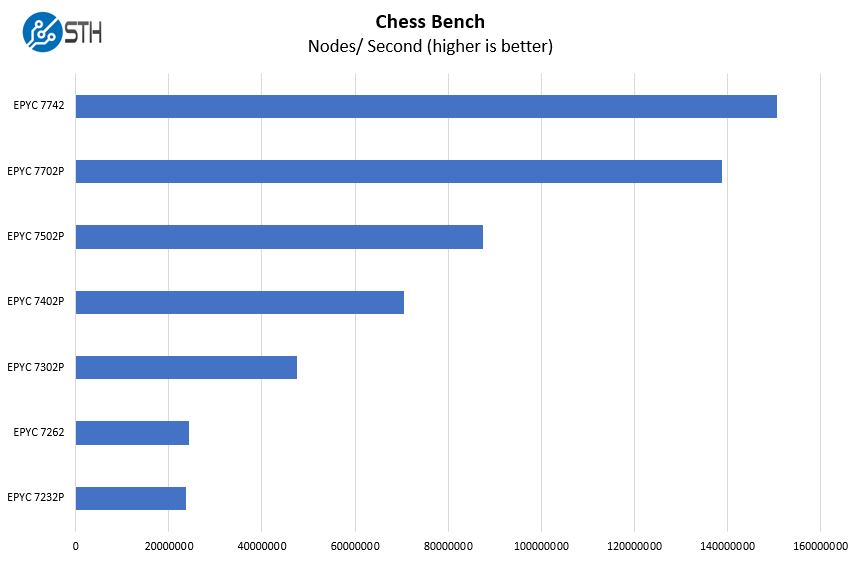

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and are ready to start sharing results:

AMD has a very strong single-socket value proposition. We went over the market impact of their revolutionary architecture in AMD EPYC 7002 Series Rome Delivers a Knockout. The other key item to keep in mind when configuring the Gigabyte R272-Z32 is that the entire platform can run using a single CPU. Depending on your software-defined storage application, NIC being used, and workloads you may want to run on the platform, you have a fairly wide range of CPU options from AMD. We focused on the five AMD EPYC “P” series parts and two others that we think may make sense in a single-socket configuration like the Gigabyte R272-Z32.

Next, we are going to cover storage performance and power consumption before getting to our final words.

isn’t that only 112 pcie lanes total? 96 (front) + 8 (rear PCIe slot) + 8 (2 m.2 slots). did they not have enough space to route the other 16 lanes to the unused PCIe slot?

bob the b – you need to remember SATA III lanes take up HSIO as well.
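The lane arithmetic in the question above can be sketched as follows. The allocation figures are the commenter's own numbers, not a confirmed board layout, and the 128-lane total is the single-socket AMD EPYC 7002 figure. The function name is hypothetical.

```python
# Single-socket AMD EPYC 7002 total; treated here as the budget to split up.
TOTAL_LANES = 128

# Figures as stated in the comment above, not verified against the board manual.
allocations = {
    "front NVMe bays": 96,
    "rear PCIe slot": 8,
    "2x M.2 slots": 8,
}


def unaccounted_lanes(total: int, used: dict) -> int:
    """Return how many lanes remain after summing the listed allocations."""
    return total - sum(used.values())


used_total = sum(allocations.values())
print(f"Listed allocations: {used_total} lanes")
print(f"Unaccounted for: {unaccounted_lanes(TOTAL_LANES, allocations)} lanes")
```

As the reply notes, SATA III ports also draw on the same high-speed I/O, so part of that remainder is not free for another slot.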

M.2 to U.2 converters are pretty cheap.

Use Slot 1 (16x PCIe4) for the network connection 200 gbit/s

Use Slot 5 and the 2xM.2 for the NVMe-drives.

How did you test the aggregated read and write? I assume it was a single RAID 0 over 10 and 14 drives?

We used targets on each drive, not as a big RAID 0. The multiple simultaneous drive access fits our internal use pattern more closely and is likely closer to how most will be deployed.

Wondering if it supports Gen4 NVMe?

CLL – we did not have a U.2 Gen4 NVMe SSD to try. Also, some of the lanes are hung off PCIe Gen3 lanes so at least some drives will be PCIe Gen3 only. For now, we could only test with PCIe Gen3 drives.

Wish I could afford this for the homelab!

Thanks Patrick for the answer. For our application we would like to use one big RAID. Do you know if it is possible to configure this on the Epyc system? With Intel this seems to be possible by spanning disks over VMDs using VROC.

Intel is making life easy for AMD.

“Xeon and Other Intel CPUs Hit by NetCAT Security Vulnerability, AMD Not Impacted”

CVE-2019-11184

Most 24x NVMe installations are using software RAID, erasure coding, or similar methods.

I may be missing something obvious, and if so please let me know. But it seems to me that there is no NVMe drive in existence today that can come near saturating an x4 NVMe connection. So why would you need to make sure that every single one of the drive slots in this design has that much bandwidth? Seems to me you could use x2 connections and get far more drives, or far less cabling, or flexibility for other things. No?

Jeff – PCIe Gen3 has been saturated by NVMe offerings for a few years and generations of drives now. Gen4 will be as soon as drives come out in mass production.
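For reference, the theoretical link ceilings behind this exchange can be sketched from the published PCIe signaling rates (8 GT/s per lane for Gen3, 16 GT/s for Gen4, both with 128b/130b encoding). This is a rough upper bound that ignores protocol overhead, the function name is hypothetical, and it makes no claim about what any specific drive achieves.

```python
# Per-lane signaling rate in GT/s for each PCIe generation covered here.
GT_PER_LANE = {3: 8.0, 4: 16.0}

# Gen3 and Gen4 both use 128b/130b line encoding.
ENCODING_EFFICIENCY = 128 / 130


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in GB/s, before protocol overhead."""
    if gen not in GT_PER_LANE:
        raise ValueError(f"unsupported PCIe generation: {gen}")
    if lanes <= 0:
        raise ValueError("lanes must be positive")
    gigabits_per_second = GT_PER_LANE[gen] * ENCODING_EFFICIENCY * lanes
    return gigabits_per_second / 8  # bits to bytes


for gen, lanes in ((3, 2), (3, 4), (4, 4)):
    print(f"PCIe Gen{gen} x{lanes}: ~{link_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

Halving a Gen3 link from x4 to x2 halves this ceiling, which is the trade-off the question is weighing against drive count and cabling.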

Patrick,

If you like this Gigabyte server, you would love the Lenovo SR635 (1U) and SR655 (2U) systems!

– Universal drive backplane; supporting SATA, SAS and NVMe devices

– Much cleaner drive backplane (no expander cards and drive cabling required)

– Support for up to 16x 2.5″ hot-swap drives (1U) or 32x 2.5″ drives (2U);

– Maximum of 32x NVMe drives with 1:2 connection/over-subscription (2U)

Hi BinkyTo – check out https://www.servethehome.com/lenovo-amd-epyc-7002-servers-make-a-splash/

There are pluses and minuses of each solution. The more important aspect for the industry is that there are more options available on the market for potential buyers.

Thanks for the pointer, it must have skipped my mind.

One comment about that article: It doesn’t really highlight that the front panel drive bays are universal (SATA, SAS and NVMe).

This is a HUGE plus compared to other offerings, the ability to choose the storage interface that suits the need, at the moment, means that units like the Lenovo have much more versatility!

This is what I was looking to see next for EPYC platforms… I always said it has Big Data written all over it… A 20 server rack has 480 drives… 10 of those is 4800 drives… 100 is 48000 drives… and at a peak of ~630W each, that’s an astounding amount of storage at around 70KW…

I can see Twitter and Google going HUGE on these since their business is data… Of course DropBox can consolidate on these even from Naples…

Where can I buy these products?

Olalekan – we added some information on that at the end of the review.

Can such a server have all the drives connected via a RAID controller like Megaraid 9560-16i?

Before commenting about software RAID or ZFS, in my application, the bandwidth provided by this RAID controller is way more than needed, with the comfort of providing worry free RAID 60 and hiding very well the write peak latencies due to the battery backup cache.

Mr. Kennedy, I recently bought an R152-Z31 with an AMD Epyc 7402P, which has the same MZ32-AR0 motherboard as the R272-Z32, to test our apps with the AMD processor. Now I am confused about the difference between ranks in RAM modules. In your review I saw you used a Micron one, and on the Micron web page (https://www.crucial.com/compatible-upgrade-for/gigabyte/mz32-ar0) there are several 16GB models. Could you advise me on a model that will at least work fine? I would appreciate it a lot if you could help me.