Gigabyte R272-Z32 Topology

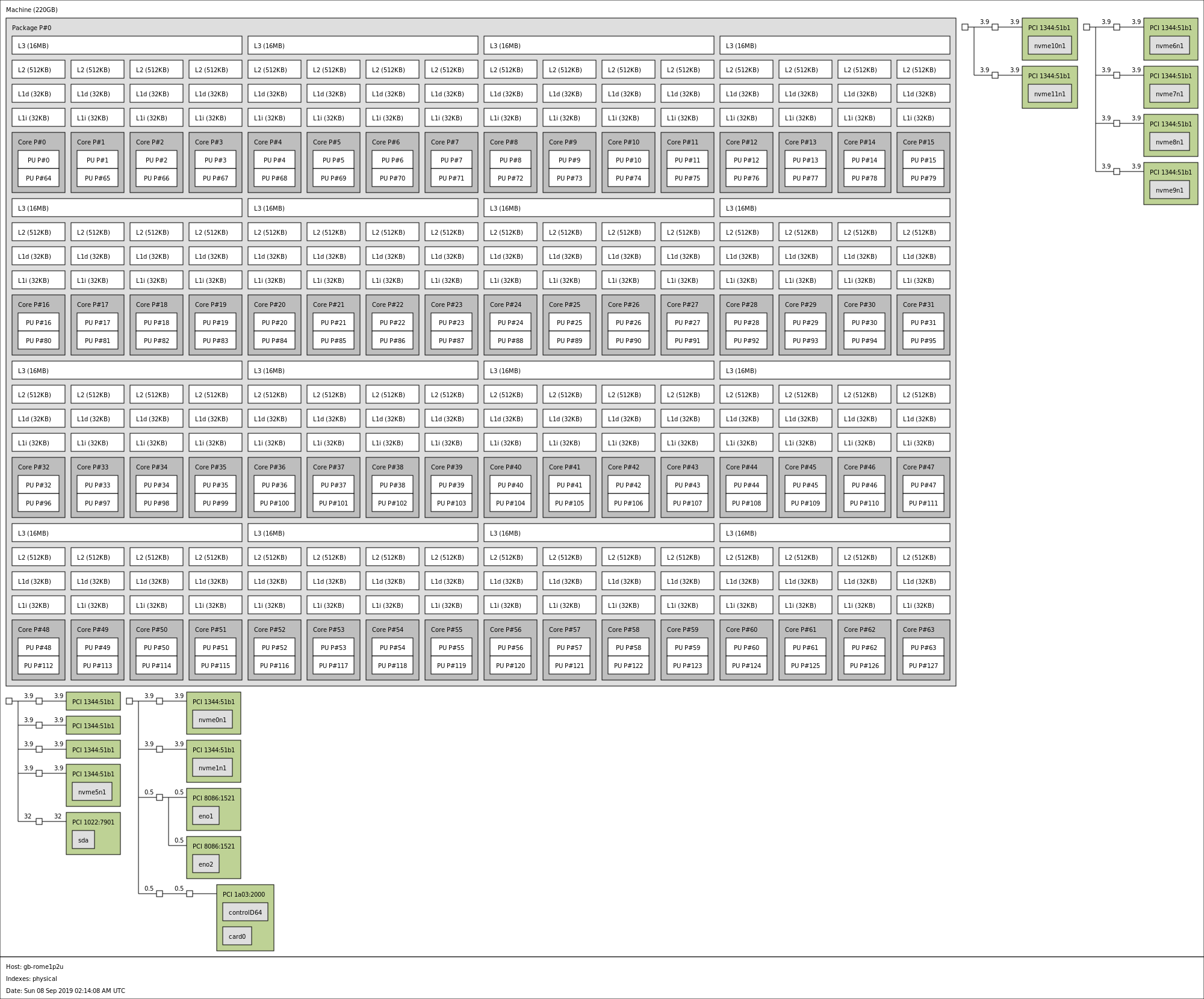

With AMD EPYC 7001 servers, topology was a big deal. Each AMD EPYC 7001 package had four dies and therefore four NUMA nodes per socket, which had performance implications for applications. With the AMD EPYC 7002 series, each socket is a single NUMA node by default.

What you are seeing above are multiple NVMe SSDs and NICs sitting on a single NUMA node. That NUMA node happens to be an AMD EPYC 7702P 64-core CPU. In the first generation, Intel's common retort to the AMD competition was that AMD needed more NUMA nodes to reach its core counts. Now the tables have turned. Intel cannot reach 64 cores in a single- or even dual-socket configuration with second-generation Intel Xeon Scalable CPUs. Instead, it must resort to a quad-socket or quad-NUMA-node design to reach 64 cores. This is the power of the AMD EPYC 7002 series.
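On a running Linux system, one way to check which NUMA node each NVMe SSD and NIC sits on is to read the `class` and `numa_node` attributes that the kernel exposes for every PCIe device under sysfs. The following is a minimal sketch, assuming a Linux host with sysfs mounted at `/sys`; the function name and the short class-code table are our own illustrative choices, not part of any Gigabyte or AMD tool.

```python
import os
from collections import defaultdict

# PCI base class + subclass prefixes for the device types discussed above.
# This table is an illustrative selection, not an exhaustive list.
CLASS_PREFIXES = {
    "0x0108": "NVMe controller",        # non-volatile memory controller
    "0x0200": "Ethernet controller",
    "0x0207": "InfiniBand controller",
}


def pci_devices_by_numa_node(sysfs_root="/sys/bus/pci/devices"):
    """Group NVMe and network PCIe devices by the NUMA node Linux reports.

    A node of -1 means the kernel has no affinity information for that device.
    """
    nodes = defaultdict(list)
    for bdf in sorted(os.listdir(sysfs_root)):
        dev_dir = os.path.join(sysfs_root, bdf)
        try:
            with open(os.path.join(dev_dir, "class")) as f:
                pci_class = f.read().strip()  # e.g. "0x010802" for NVMe
            with open(os.path.join(dev_dir, "numa_node")) as f:
                node = int(f.read().strip())
        except (OSError, ValueError):
            continue  # skip entries without readable class/numa_node files
        kind = CLASS_PREFIXES.get(pci_class[:6])
        if kind is not None:
            nodes[node].append((bdf, kind))
    return dict(nodes)
```

Calling `pci_devices_by_numa_node()` with no arguments on a single-socket EPYC 7002 system in its default configuration would be expected to place every listed device under one node, matching the diagram; the `sysfs_root` parameter exists only so the function can be pointed at a copy of the tree for testing.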

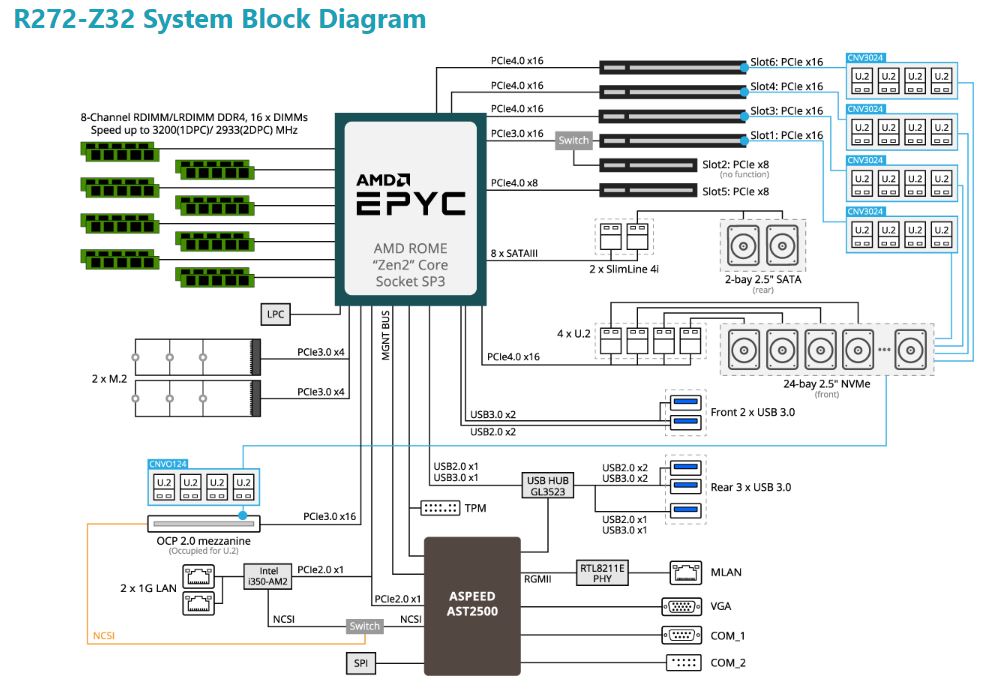

Many will completely miss this, but one of the PCIe x8 slots is not functional in the Gigabyte R272-Z32 design. That is because the PCIe lanes behind that slot are switched over to a PCIe x16 (4×4) connection for four of the front-panel U.2 drive bays. There is actually only a single usable PCIe Gen4 x8 slot in the system, along with two PCIe 3.0 x4 M.2 slots. That still allows the use of a 100GbE NIC, but it must be a PCIe Gen4 NIC: a Gen3 x8 link does not have enough bandwidth for 100GbE. This is one of those strange cases where we actually wish the two M.2 slots had been sacrificed to make this a PCIe Gen4 x16 slot.
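The Gen4-only requirement for 100GbE in that x8 slot comes down to link bandwidth. Here is a back-of-the-envelope sketch that assumes only the standard signaling rates (8 GT/s per lane for Gen3, 16 GT/s for Gen4) and 128b/130b line encoding; it ignores packet and protocol overhead, so real throughput is somewhat lower than these figures.

```python
# Per-lane signaling rate in GT/s; Gen3 and Gen4 both use 128b/130b encoding.
GT_PER_LANE = {3: 8.0, 4: 16.0}


def pcie_link_gbps(gen: int, lanes: int) -> float:
    """Bit rate of a PCIe link after 128b/130b line encoding, in Gb/s.

    TLP/DLLP protocol overhead is ignored, so usable throughput is lower.
    """
    return GT_PER_LANE[gen] * 128 / 130 * lanes


for gen in (3, 4):
    rate = pcie_link_gbps(gen, 8)
    verdict = "enough" if rate >= 100 else "not enough"
    print(f"PCIe Gen{gen} x8: {rate:.1f} Gb/s -> {verdict} for one 100GbE port")
```

A Gen3 x8 link tops out at roughly 63 Gb/s, well short of 100 Gb/s, while a Gen4 x8 link roughly doubles that to about 126 Gb/s.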

Another interesting topology note is that the Intel i350 gigabit LAN controller and the BMC hang off the WAFL PCIe 2.0 link. That means this platform is designed for AMD EPYC 7002 processors, not EPYC 7001 CPUs. That is common for newer platforms, and we expect AMD EPYC 7003 CPUs to work in this platform as well once they launch.

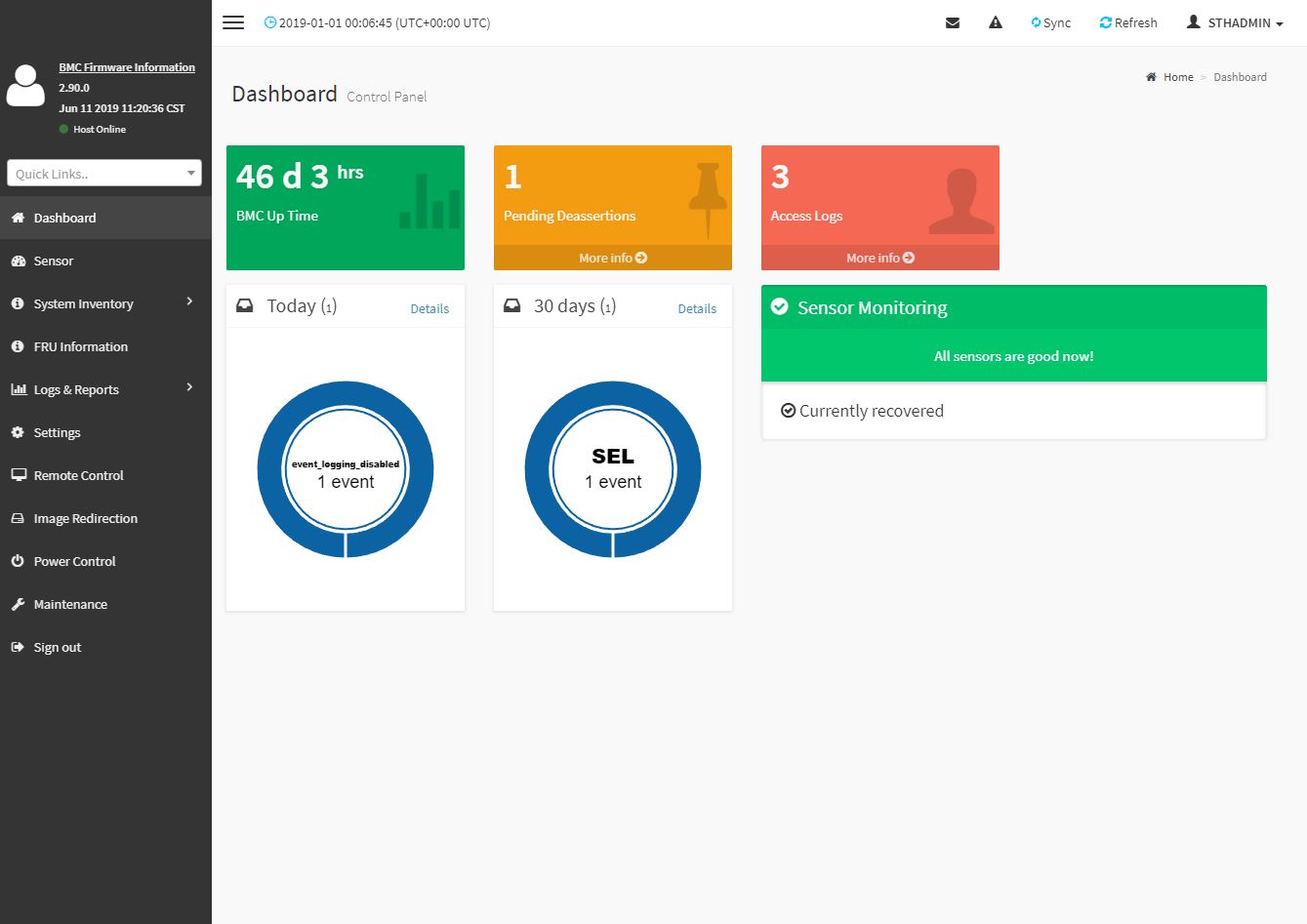

Gigabyte R272-Z32 Management

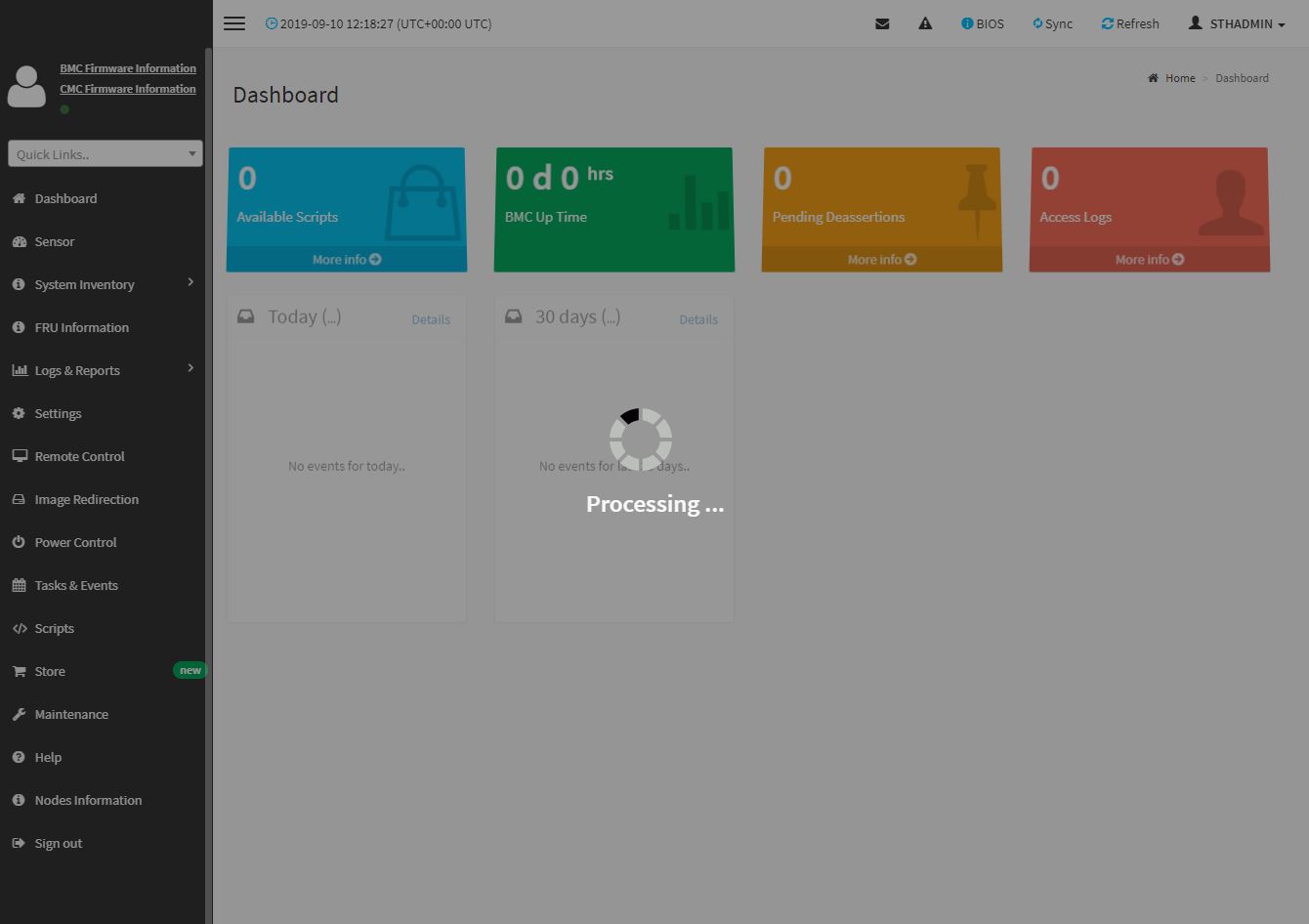

As one can see, the Gigabyte R272-Z32 utilizes a newer MegaRAC SP-X interface. This interface is a more modern HTML5 UI that performs more like today’s web pages and less like pages from a decade ago. We like this change. Here is the dashboard.

One thing we noticed is that this new interface takes a long time to log in. We used a stopwatch to time the interval between the login prompt and the dashboard becoming usable. It took around 26 seconds.

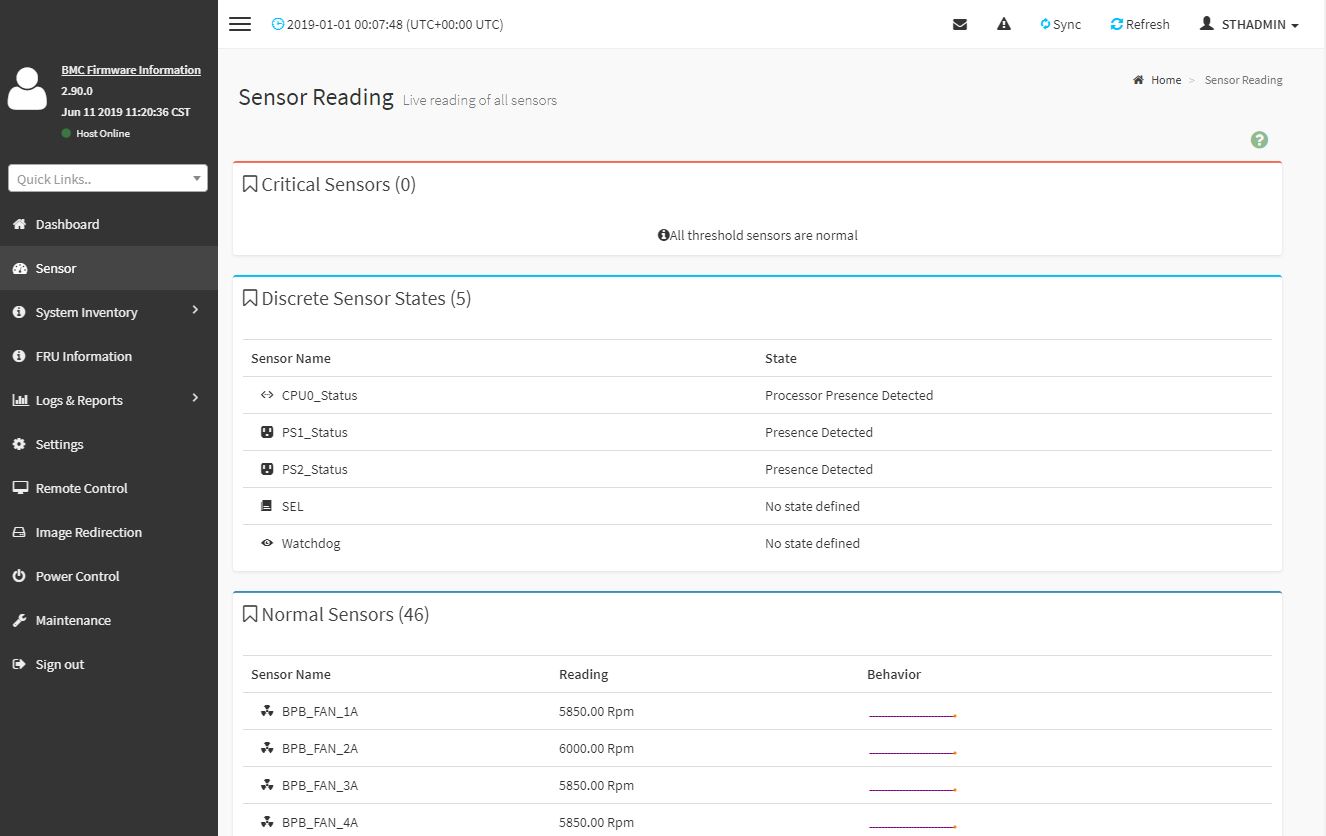

You will find standard BMC IPMI management features here, such as the ability to monitor sensors. Here is an example:

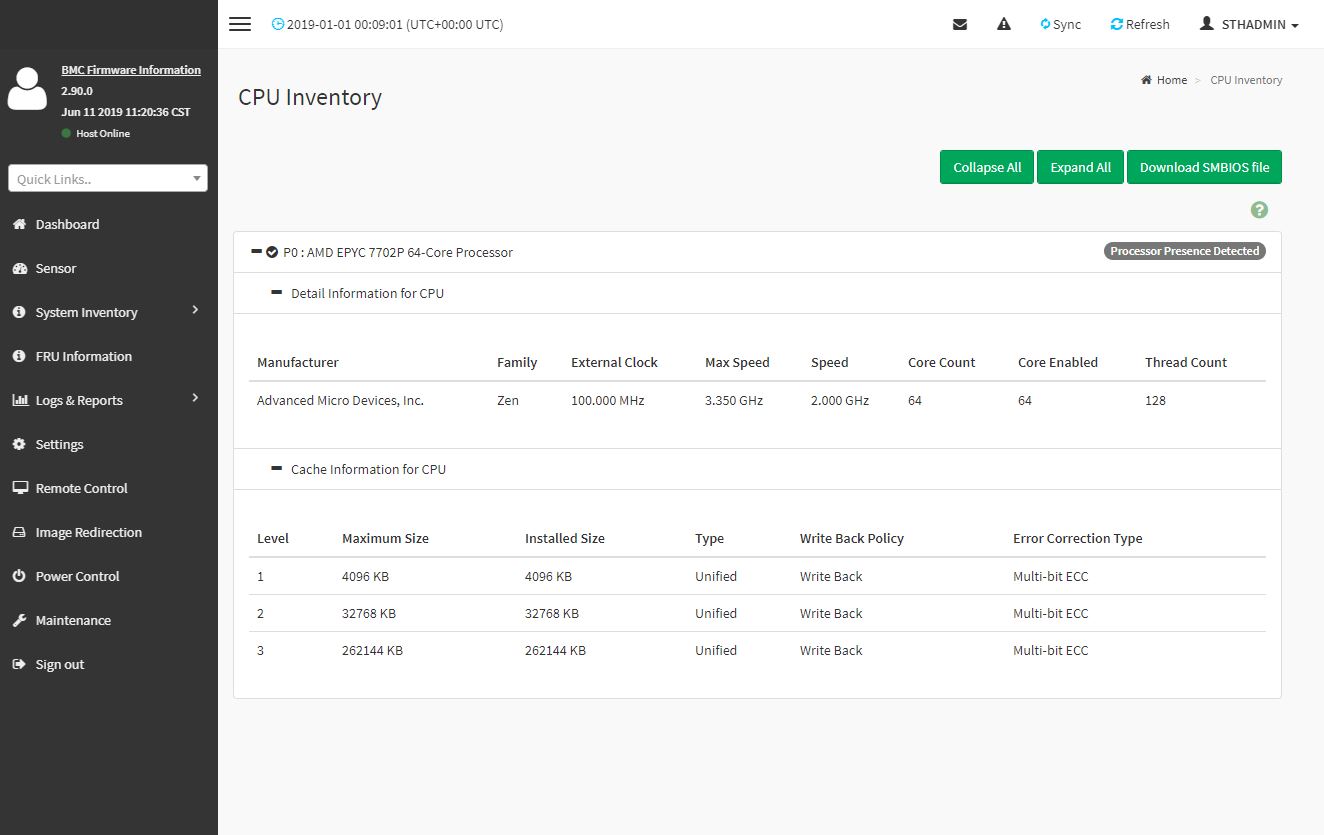

Other features, such as the CPU inventory, are also available. Here one can see that this particular CPU is a new AMD EPYC 7702P, a high-core-count chip with 64 cores in a single socket.

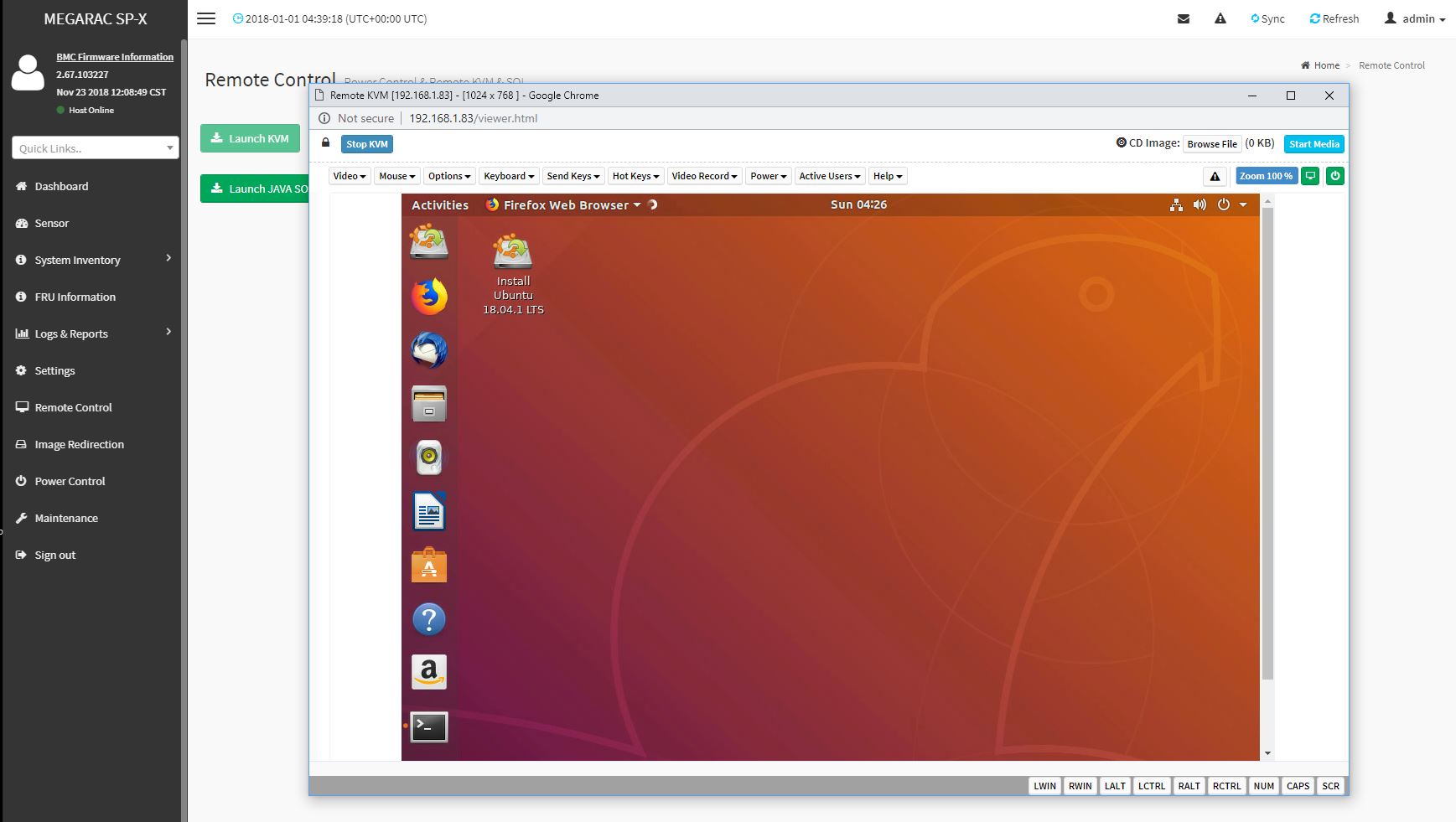

Another feature is the new HTML5 iKVM for remote management. We think this is a great solution. Some other vendors implemented HTML5 iKVM clients but did not include virtual media support in them at the outset. Gigabyte offers virtual media and power control, all from a single browser console.

We want to emphasize that this is a key differentiation point for Gigabyte. Many large system vendors, such as Dell EMC, HPE, and Lenovo, charge for iKVM functionality. This feature is an essential tool for remote system administration these days. Including it as a standard feature is great for Gigabyte's customers, who have one less license to worry about.

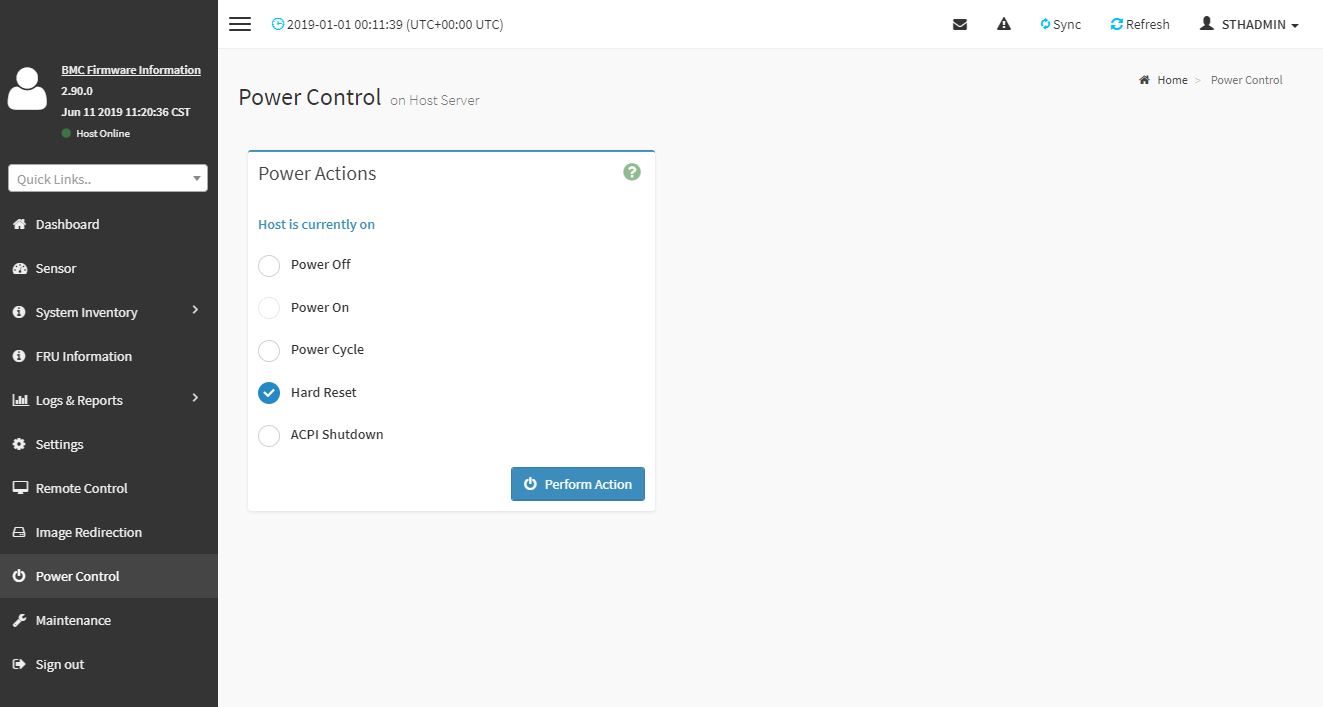

The Power Control feature is fairly standard. We wish it had a reboot or boot-to-BIOS option. Our particular test unit defaulted to SMT=Off every time we installed a new CPU. As a result, every time we rebooted, we had to sit and wait to press the key to enter BIOS so we could turn SMT on and change the other settings we wanted.

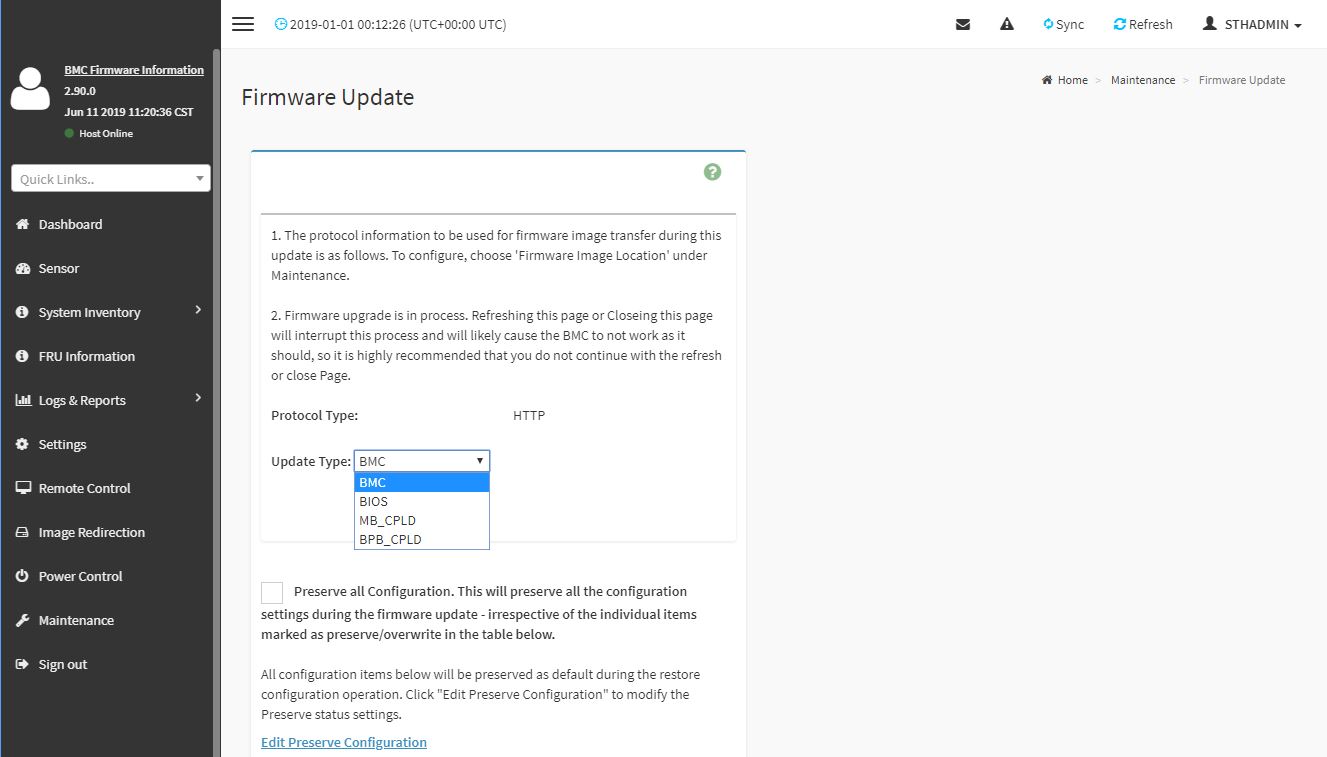

Gigabyte also includes the ability to update both BMC and BIOS firmware from the web interface. That is a feature most modern servers have. By contrast, Supermicro charges for an additional $20 license to update the BIOS via the web interface.

Gigabyte R272-Z32 Test Configuration

Here is the test configuration we used for the Gigabyte R272-Z32:

- System: Gigabyte R272-Z32

- CPUs: AMD EPYC 7702P, 7502P, 7402P, 7302P, 7232P, 7262

- Memory: 8x Micron DDR4-3200 32GB RDIMMs (256GB total)

- Boot SSD: Intel DC S3710 400GB

- Storage SSDs: 10x Micron 9300 3.84TB, 14x Intel DC P3520 2TB

- Networking: Mellanox ConnectX-5 VPI PCIe Gen4 (CX556A) 100GbE

Overall, this gave us a solid foundation to get some performance numbers that we are going to show next.

Isn't that only 112 PCIe lanes total? 96 (front) + 8 (rear PCIe slot) + 8 (2 M.2 slots). Did they not have enough space to route the other 16 lanes to the unused PCIe slot?

bob the b – you need to remember SATA III lanes take up HSIO as well.
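The lane budget in the question above can be tallied directly. This sketch uses only the commenter's per-device figures and the 128 PCIe lanes an EPYC 7002 CPU provides in a single-socket system; it is not taken from a Gigabyte block diagram, and it does not count the separate WAFL link used by the BMC.

```python
# Lane counts per consumer, taken from the comment above (not a vendor diagram).
CPU_PCIE_LANES = 128  # EPYC 7002, single-socket; the BMC's WAFL link is not counted

consumers = {
    "front U.2 bays (24 x4)": 24 * 4,
    "rear PCIe Gen4 x8 slot": 8,
    "two M.2 slots (2 x4)": 2 * 4,
}

used = sum(consumers.values())
remaining = CPU_PCIE_LANES - used

for name, lanes in consumers.items():
    print(f"{name:28s} {lanes:3d}")
print(f"{'total':28s} {used:3d}  ({remaining} of {CPU_PCIE_LANES} lanes not accounted for)")
```

The 16 lanes left over are the ones in question; as the reply points out, SATA shares the same HSIO lanes, so not all of them are necessarily free for a slot.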

M.2 to U.2 converters are pretty cheap.

Use Slot 1 (PCIe 4.0 x16) for a 200 Gbit/s network connection.

Use Slot 5 and the two M.2 slots for the NVMe drives.

How did you test the aggregated read and write? I assume it was a single RAID 0 over the 10 and 14 drives?

We used targets on each drive, not as a big RAID 0. The multiple simultaneous drive access fits our internal use pattern more closely and is likely closer to how most will be deployed.
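For readers who want to reproduce the per-drive approach, one common way is a fio job file with a separate job section per drive, since fio runs the jobs in a file concurrently by default. This is a hedged sketch: the block size, queue depth, runtime, and device names below are our own placeholder assumptions, not the settings used for this review.

```python
def fio_job_file(devices, rw="read", bs="128k", iodepth=32, runtime_s=60):
    """Build a fio job file with one job section per drive.

    Each drive is an independent target, and all jobs run at the same time
    with no RAID layer in between. Parameter defaults are placeholders.
    WARNING: a write workload (rw=write, randwrite, ...) against raw devices
    destroys their contents.
    """
    lines = [
        "[global]",
        "ioengine=libaio",
        "direct=1",          # bypass the page cache
        f"rw={rw}",
        f"bs={bs}",
        f"iodepth={iodepth}",
        f"runtime={runtime_s}",
        "time_based",
        "group_reporting",   # report the jobs' combined (aggregate) bandwidth
    ]
    for dev in devices:
        name = dev.rsplit("/", 1)[-1]
        lines += ["", f"[{name}]", f"filename={dev}"]
    return "\n".join(lines) + "\n"


drives = [f"/dev/nvme{i}n1" for i in range(10)]  # hypothetical device names
print(fio_job_file(drives))
```

Saving the output to a file and running `fio` on it gives one aggregate figure across all drives; dropping `group_reporting` would show each drive separately.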

Wondering if it supports Gen4 NVMe?

CLL – we did not have a U.2 Gen4 NVMe SSD to try. Also, some of the drive bays hang off PCIe Gen3 lanes, so at least some drives will be PCIe Gen3 only. For now, we could only test with PCIe Gen3 drives.

Wish I could afford this for the homelab!

Thanks, Patrick, for the answer. For our application, we would like to use one big RAID. Do you know if it is possible to configure this on the EPYC system? With Intel, this seems to be possible by spanning disks over VMDs using VROC.

Intel is making life easy for AMD.

“Xeon and Other Intel CPUs Hit by NetCAT Security Vulnerability, AMD Not Impacted”

CVE-2019-11184

Most 24x NVMe installations are using software RAID, erasure coding, or similar methods.
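As one illustration of the software RAID route, Linux `mdadm` can build a single array across every NVMe drive regardless of CPU vendor. This is a minimal sketch under stated assumptions: the RAID 6 level, 128 KiB chunk size, `/dev/md0` target, and device names are all hypothetical choices for illustration, and the helper only builds the command line rather than running it.

```python
import shlex


def mdadm_create_cmd(devices, level=6, md_device="/dev/md0", chunk_kib=128):
    """Return an mdadm argv that creates one software RAID array across all
    given drives. Building the list is harmless; running it (as root)
    wipes every listed drive. Level and chunk size are illustrative defaults.
    """
    return [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(devices)}",
        f"--chunk={chunk_kib}",  # mdadm interprets this value in KiB
        *devices,
    ]


drives = [f"/dev/nvme{i}n1" for i in range(24)]  # hypothetical device names
print(shlex.join(mdadm_create_cmd(drives)))
```

Erasure-coded or ZFS-based setups follow the same idea of aggregating the drives in software, just with different tools.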

I may be missing something obvious, and if so please let me know. But it seems to me that there is no NVMe drive in existence today that can come near saturating an x4 NVMe connection. So why would you need to make sure that every single one of the drive slots in this design has that much bandwidth? Seems to me you could use x2 connections and get far more drives, or far less cabling, or flexibility for other things. No?

Jeff – PCIe Gen3 x4 has been saturated by NVMe drives for a few years and several generations now. Gen4 x4 will be too, as soon as Gen4 drives reach mass production.
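To put numbers on the x4-versus-x2 question above, here is a rough sketch of per-drive link bandwidth. It assumes only the standard Gen3 rate of 8 GT/s per lane with 128b/130b encoding, ignores protocol overhead, and uses an illustrative 3.2 GB/s sequential-read figure rather than any measured drive result.

```python
def pcie_link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Link rate after 128b/130b encoding, in GB/s (protocol overhead ignored)."""
    gt_per_lane = {3: 8.0, 4: 16.0}[gen]
    return gt_per_lane * 128 / 130 * lanes / 8  # bits -> bytes


drive_read_gbytes = 3.2  # illustrative sequential-read figure, not a measurement

for lanes in (2, 4):
    link = pcie_link_gbytes_per_s(3, lanes)
    status = "bottlenecked" if drive_read_gbytes > link else "fits"
    print(f"Gen3 x{lanes}: {link:.2f} GB/s -> a {drive_read_gbytes} GB/s drive is {status}")
```

A Gen3 x2 link works out to just under 2 GB/s, so a drive in that performance class would lose well over a third of its sequential read bandwidth, while a Gen3 x4 link at about 3.94 GB/s leaves only modest headroom.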

Patrick,

If you like this Gigabyte server, you would love the Lenovo SR635 (1U) and SR655 (2U) systems!

– Universal drive backplane supporting SATA, SAS, and NVMe devices

– Much cleaner drive backplane (no expander cards or drive cabling required)

– Support for up to 16x 2.5″ hot-swap drives (1U) or 32x 2.5″ drives (2U)

– Maximum of 32x NVMe drives with 1:2 connection/over-subscription (2U)

Hi BinkyTo – check out https://www.servethehome.com/lenovo-amd-epyc-7002-servers-make-a-splash/

There are pluses and minuses of each solution. The more important aspect for the industry is that there are more options available on the market for potential buyers.

Thanks for the pointer; it must have slipped my mind.

One comment about that article: It doesn’t really highlight that the front panel drive bays are universal (SATA, SAS and NVMe).

This is a HUGE plus compared to other offerings. The ability to choose whichever storage interface suits the need at the moment means that units like the Lenovo have much more versatility!

This is what I was looking to see next for EPYC platforms… I always said it has Big Data written all over it… A 20-server rack has 480 drives… 10 of those is 4800 drives… 100 is 48000 drives… and at a peak of ~630 W each, that's an astounding amount of storage at around 70 kW…

I can see Twitter and Google going HUGE on these since their business is data… Of course, Dropbox can consolidate on these even coming from Naples…

Where can I buy these products?

Olalekan, we added some information on that at the end of the review.

Can such a server have all the drives connected via a RAID controller like the MegaRAID 9560-16i?

Before anyone comments about software RAID or ZFS: in my application, the bandwidth this RAID controller provides is far more than needed, and it gives the comfort of worry-free RAID 60 while its battery-backed cache hides peak write latencies very well.

Mr. Kennedy, I recently bought an R152-Z31 with an AMD EPYC 7402P, which uses the same MZ32-AR0 motherboard as the R272-Z32, to test our apps on the AMD processor. Now I am confused about the differences between ranks in RAM modules. In your review, I saw you used Micron modules, and on the Micron (Crucial) web page (https://www.crucial.com/compatible-upgrade-for/gigabyte/mz32-ar0) there are several 16GB models. Could you advise me on a model that will at least work fine? I would greatly appreciate your help.