Gigabyte R181-NA0 Management

These days, out-of-band management is a standard feature on servers. Gigabyte offers an industry-standard solution for traditional management, including a Web GUI. It is built on the ASPEED AST2500 BMC, and ASPEED is a leader in the BMC field.

With the Gigabyte R181-NA0, one has access to an Avocent MergePoint based solution. This is a popular management suite that allows integration into many systems management frameworks.

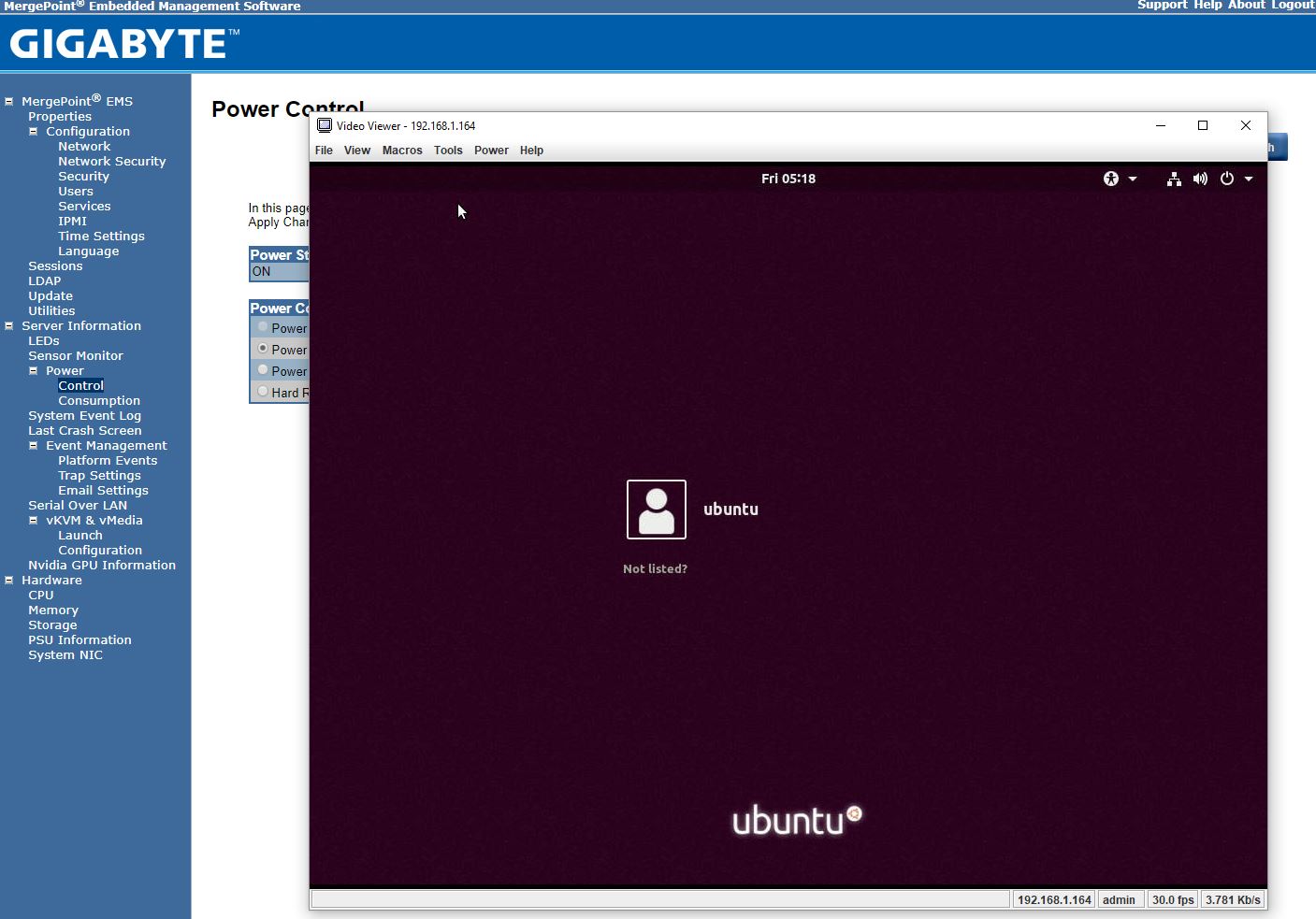

Gigabyte allows users to utilize Serial-over-LAN and iKVM consoles from before a system is turned on, all the way into the OS. Other vendors such as HPE, Dell EMC, and Lenovo charge for an additional license upgrade to unlock this capability (along with other features in their higher license levels). This is an extremely popular feature because it makes remote troubleshooting simple.

At STH, we do all of our testing in remote data centers. Having the ability to remote console into the machines means we do not need to make trips to the data center to service the lab even if BIOS changes or manual OS installs are required.
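As a rough illustration of what a Serial-over-LAN session looks like from the administrator's side, here is a minimal sketch that builds an `ipmitool` command line. It assumes the BMC exposes standard IPMI-over-LAN and that `ipmitool` is installed on the client. The helper name `sol_command`, the address, and the credentials are hypothetical and not taken from the review.

```python
from typing import List


def sol_command(bmc_host: str, user: str, password: str) -> List[str]:
    """Build an ipmitool argv list that opens a Serial-over-LAN session."""
    return [
        "ipmitool",
        "-I", "lanplus",   # IPMI v2.0 (RMCP+) interface, which ipmitool uses for SOL
        "-H", bmc_host,    # BMC hostname or IP address
        "-U", user,
        "-P", password,
        "sol", "activate",
    ]


if __name__ == "__main__":
    # Hypothetical BMC address and credentials, for illustration only.
    print(" ".join(sol_command("192.0.2.10", "admin", "changeme")))
```

The list form can be passed directly to `subprocess.run` without shell quoting. Whether a given firmware build accepts these exact settings is something to confirm against the vendor documentation.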

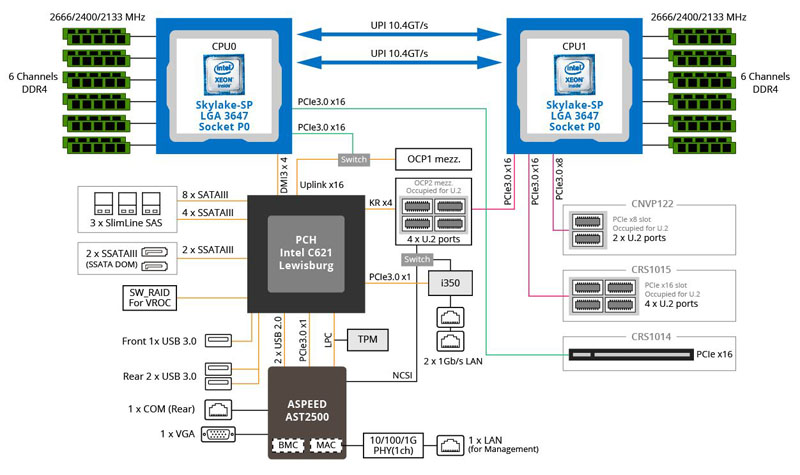

Gigabyte R181-NA0 Block Diagram

With these platforms that are pushing the capabilities of embedded SoCs, the block diagram becomes an important tool to see how everything is connected. Here is a block diagram that shows the topology of the Gigabyte R181-NA0.

There is something that you will see here that is extremely important. While the system will boot with only CPU0 installed, you need to have CPU1 installed as well for full functionality. All ten of the front U.2 NVMe ports are run off of CPU1 PCIe lanes. We, therefore, need to recommend that anyone running this configuration utilize two CPUs.
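To put the CPU1 dependency in numbers, here is a back-of-the-envelope sketch of the lane budget. It assumes each U.2 bay is wired as a direct PCIe 3.0 x4 link with no PCIe switch in between, and it uses the 48 PCIe 3.0 lanes per socket of first-generation Xeon Scalable processors. The function names are illustrative only.

```python
PCIE3_GT_PER_S = 8.0        # PCIe 3.0 raw signalling rate per lane, in GT/s
ENCODING = 128 / 130        # PCIe 3.0 uses 128b/130b line encoding
LANES_PER_U2 = 4            # assumption: each U.2 NVMe bay gets a x4 link
CPU_LANES = 48              # PCIe 3.0 lanes per first-gen Xeon Scalable CPU


def lane_budget(drives: int, cpu_lanes: int = CPU_LANES):
    """Return (lanes used by the drives, lanes left over on that CPU)."""
    used = drives * LANES_PER_U2
    return used, cpu_lanes - used


def usable_gbit_per_s(lanes: int) -> float:
    """Aggregate one-direction bandwidth after line encoding, in Gbit/s."""
    return lanes * PCIE3_GT_PER_S * ENCODING


if __name__ == "__main__":
    used, spare = lane_budget(10)
    print(f"10 U.2 drives use {used} lanes, leaving {spare} on that CPU")
    print(f"Aggregate link bandwidth: {usable_gbit_per_s(used):.1f} Gbit/s")
```

Under these assumptions, the ten front bays consume 40 of CPU1's 48 lanes. That helps explain why the bays cannot simply be moved to CPU0 alongside the other onboard devices.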

Next, we are going to look at the Gigabyte R181-NA0 performance, followed by power consumption and our final words.

Only 2 UPI links? If true, it’s not really made for the Gold 6 series or Platinum, and to be honest, why would you need such an amount of compute power in a storage server?

I’ll stick to a brand that can handle 24 NVMe-drives with just one CPU.

@Misha,

You are obviously referring to a different CPU brand, since there is no single Intel CPU which can support 10x NVMe drives.

@BinkyTO,

10 NVMe’s is only 40 PCIe lanes, so it should be possible with Xeon Scalable; you just don’t have many lanes left for other equipment.

@misha hyperconverged is one of the biggest growing sectors and a multi-billion dollar hardware market. You’d need two CPUs since you can’t do even a single 100G link on an x8.

I’d say this looks nice

@Tommy F

Only two 10.4 GT/s UPI links? You are easily satisfied.

24×4=96 PCIe lanes, so there are 32 left; minus 4 for chipsets etc. and a boot drive, that leaves you with 28 PCIe 3.0 lanes.

28 PCIe lanes x 985 MB/s x 8 bit = 220 Gbit/s, good enough for 2018.

And let’s not forget octa-channel memory (DDR4-2666) on 1 CPU (7551P) for $2,200 vs. 2 x 5119T ($1,555 each) with only 6-channel DDR4-2400.

In 2019 EPYC 2 will be released with PCIe-4, which has double the speed of PCIe-3.

Not taking into account Spectre, Meltdown, Foreshadow, etc.

@Patrick there’s a small error in the legend of the storage performance results. Both colors are labeled with read performance where I expect the black bars to represent write performance instead.

What I don’t see is the audience for this solution. With an effective raw capacity of 20TB maximum (and probably a 1:10 ratio between disk and platform cost), why would anyone buy this platform instead of a dedicated JBOF or other ruler format based platforms? The cost per TB as well as storage density of the server reviewed here seems to be significantly worse.

David, thanks for the catch. It is fixed. We also noted that we tried 8TB drives; we just did not have a set of 10 for the review. 2TB is now on the lower end of the capacity scale for new enterprise drives. There is a large market for these 10x NVMe 1U systems, which is why the form factor is so prevalent.

Misha, although I may personally like EPYC, and we have deployed some EPYC nodes into our hosting cluster, this review was not focused on that as an alternative. Most EPYC systems still crash if you try to hot-swap an NVMe SSD, while that feature just works on Intel systems. We actually use mostly NVMe AICs to avoid remote hands trying to remove/insert NVMe drives on EPYC systems.

Also, your assumption that you will be able to put an EPYC 2nd generation in an existing system and have it run PCIe Gen4 to all of the devices is incorrect. You are then using both CPUs and systems that do not currently exist to compare to a shipping product.

Few things:

1. Such a system with a single EPYC processor would save money for a customer who needs such a system, since you can do everything with a single CPU.

2. 10 NVMe drives with those fans: if you heavily use those CPUs (let’s say 60-90%), then the speed of those NVMe drives will drop rapidly, since those fans will run faster, sucking in more air from outside, and will cool the drives too much, which reduces the SSD read speed. I didn’t see anything mentioned about this in the article.

@Patrick – You can also buy EPYC systems that do NOT crash when hot-swapping an NVMe SSD; you even mentioned it in an earlier thread on STH.

I did not assume that you can swap out the EPYC1 with an EPYC2 and get PCIe-4. When it is just for more compute speed, it should work (same socket), as promised many times by AMD. When you want to make use of PCIe-4, you will need a new motherboard. When you want to upgrade from Xeon to Xeon Scalable, you have no choice: you have to upgrade both the motherboard and the CPU.

Hetz, we have a few hundred dual Xeon E5 and Scalable 10 NVMe 1U’s and have never seen read speeds drop due to the fans.

@Patrick don’t feed the troll. I don’t envy that part of your job.

dell-emc-poweredge-r7415-review 2U 24 U2

aic-fb127-ag-innovative-nf1-amd-epyc-storage-solution 1U 36 NF1

Yes, too bad that the Dell R7415 which STH reviewed earlier this year was not used for comparison of NVMe performance with Epyc.

Also STH themselves reported that the R7415 would support hot-swap without crashing. So I don’t quite understand why crashes are used as an argument to not benchmark against Epyc.

https://www.servethehome.com/dell-emc-poweredge-r7415-nvme-hot-swap-amd-epyc-in-action/