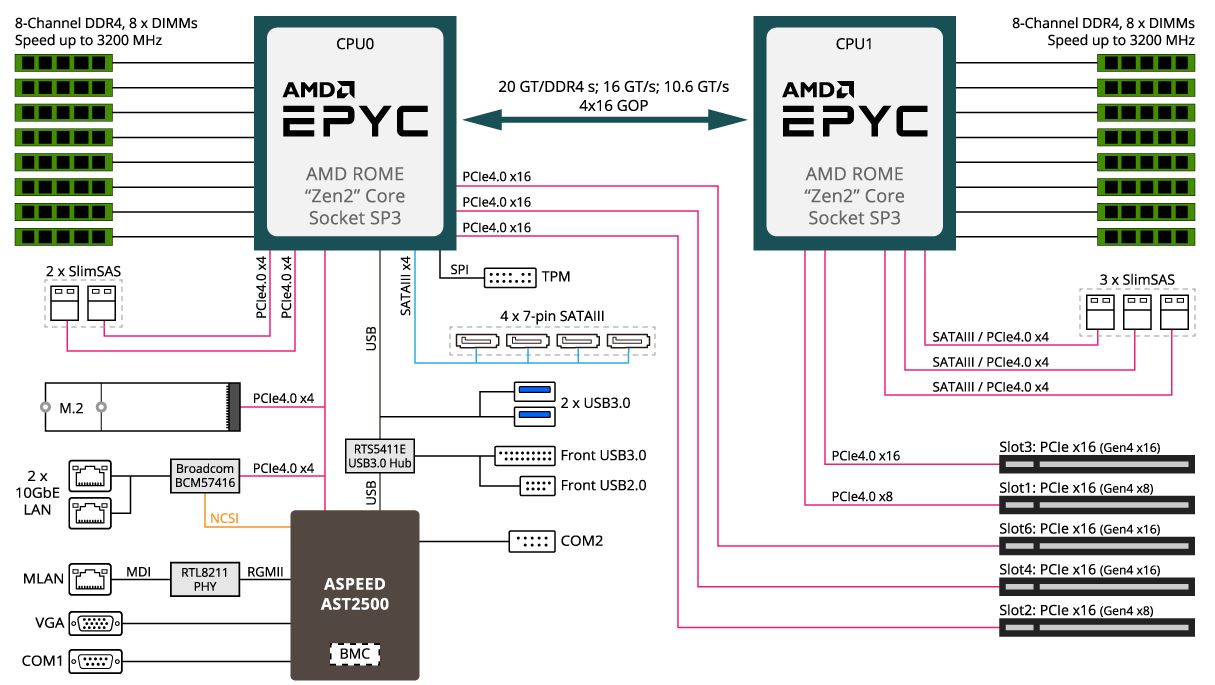

Gigabyte MZ72-HB0 Block Diagram

In dual-socket servers, the block diagram is extremely important. It tells us a lot about the server and how it is designed. Here we can see the MZ72-HB0’s block diagram.

As we saw in our hardware overview, this is a PCIe Gen4 motherboard. Many of the early EPYC 7001/7002 motherboards were Gen3 to save on costs. Now we are getting full-bandwidth versions of these platforms.

The other key item to note is that CPU0 provides effectively all of the main system connectivity, including M.2, NICs, SATA, USB, and the BMC. The second socket adds a second CPU with its 8 DDR4 DIMMs, plus PCIe/SATA III connectivity for its slots and ports, but it does not connect any of the server's base-level I/O.

The final key point we wanted to show here is that the system uses all four links between the CPUs for maximum socket-to-socket bandwidth. We have shown how some AMD servers sacrifice socket-to-socket bandwidth to achieve a 160 PCIe lane design. On a motherboard this small, it would be difficult to fit 160 PCIe lanes, so Gigabyte made a good design decision here.
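The trade-off above comes down to simple lane arithmetic. The sketch below assumes the usual EPYC 7002 figures: 128 configurable lanes per socket, with each socket dedicating 16 of them to every socket-to-socket (xGMI) link. The constant and function names are our own, and the model ignores any extra lanes set aside for the BMC.

```python
LANES_PER_SOCKET = 128    # assumed: configurable PCIe/xGMI lanes per EPYC 7002 socket
LANES_PER_XGMI_LINK = 16  # assumed: lanes each socket gives to one socket-to-socket link


def pcie_lanes_available(xgmi_links: int, sockets: int = 2) -> int:
    """Total lanes left for PCIe/SATA I/O after reserving the inter-socket links.

    Hypothetical helper; ignores any extra lane(s) reserved for the BMC.
    """
    if xgmi_links not in (3, 4):
        raise ValueError("this sketch only models 2P layouts with 3 or 4 links")
    per_socket = LANES_PER_SOCKET - xgmi_links * LANES_PER_XGMI_LINK
    return per_socket * sockets


if __name__ == "__main__":
    for links in (4, 3):
        print(f"{links} links between CPUs -> {pcie_lanes_available(links)} lanes for I/O")
```

With four links, as on the MZ72-HB0, the two CPUs together have 128 lanes left for I/O. Dropping to three links frees 32 more, which is where 160-lane designs come from. How many of those lanes a given board actually routes to slots and ports is a separate, board-specific question.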

Next, we are going to take a look at management.

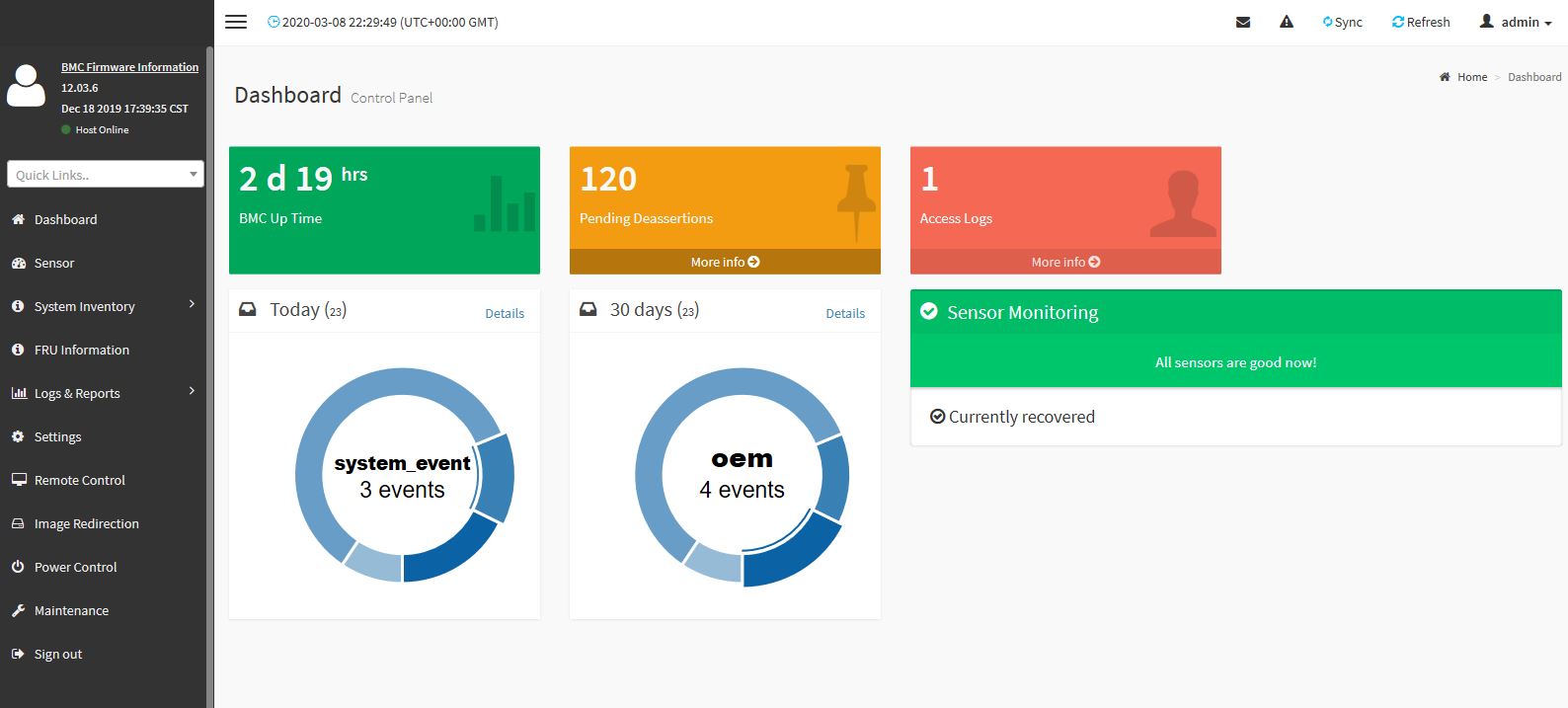

Gigabyte MZ72-HB0 Management

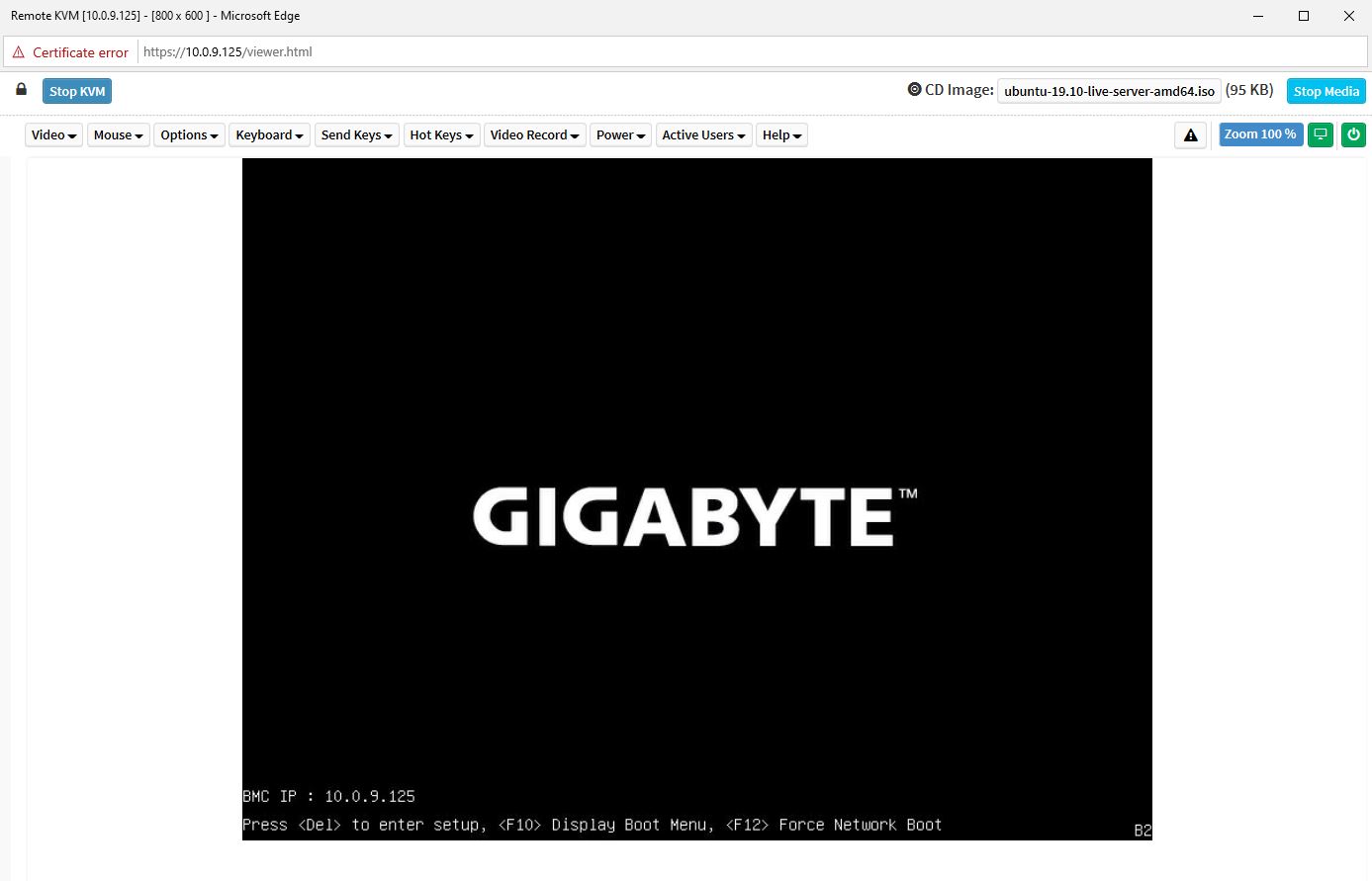

As one can see, the Gigabyte MZ72-HB0 uses the newer MegaRAC SP-X interface that is common on a number of Gigabyte's newer platforms. This interface is a more modern HTML5 UI that behaves more like today's web pages and less like pages from a decade ago. We like this change. Here is the dashboard.

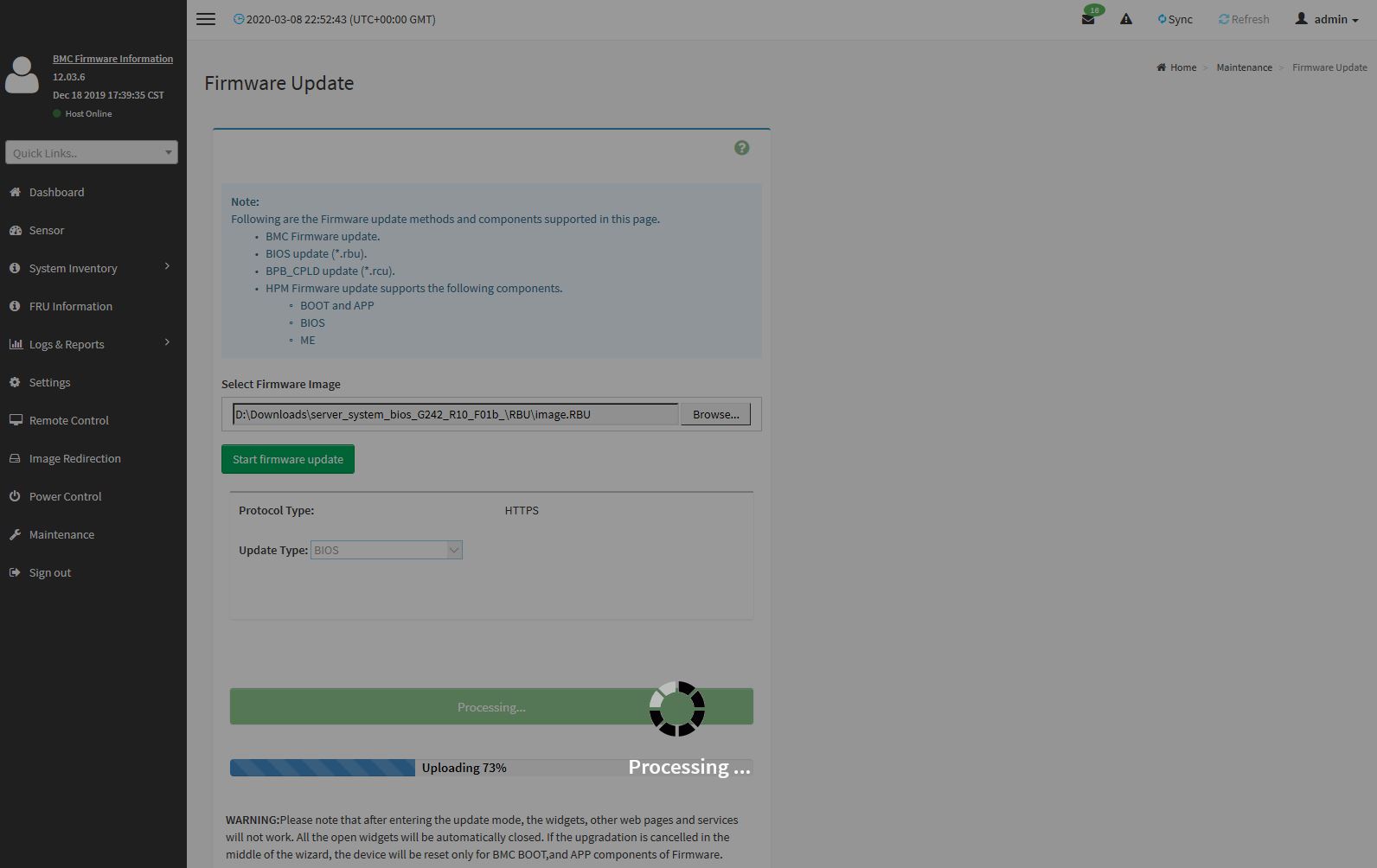

You will find standard BMC IPMI management features here, such as the ability to monitor sensors. One can also perform functions such as updating BIOS and IPMI firmware directly from the web interface. Companies like Supermicro charge extra for this functionality, but it is included with Gigabyte’s solution.
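The sensors shown in the web UI can typically also be read over standard IPMI from a management host. As a hedged sketch, assuming the common `ipmitool` CLI and its pipe-separated `name | reading | status` output from `ipmitool sdr`, the parser below flags any sensor whose status is not `ok`. The sensor names in `SAMPLE` are hypothetical and not taken from this board, and `parse_sdr`/`problem_sensors` are illustrative helper names.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SensorReading:
    name: str
    reading: str
    status: str


def parse_sdr(text: str) -> list[SensorReading]:
    """Parse 'name | reading | status' lines as printed by `ipmitool sdr`."""
    readings = []
    for line in text.splitlines():
        parts = [part.strip() for part in line.split("|")]
        if len(parts) != 3:
            continue  # skip blank or unexpected lines
        readings.append(SensorReading(*parts))
    return readings


def problem_sensors(readings: list[SensorReading]) -> list[SensorReading]:
    """Return sensors whose status is anything other than 'ok' (e.g. 'ns')."""
    return [r for r in readings if r.status != "ok"]


# Hypothetical sample output; real sensor names differ by board and BMC firmware.
SAMPLE = """\
CPU0_TEMP        | 52 degrees C      | ok
CPU1_TEMP        | 58 degrees C      | ok
SYS_FAN1         | no reading        | ns
"""

if __name__ == "__main__":
    for r in problem_sensors(parse_sdr(SAMPLE)):
        print(f"{r.name}: {r.reading} ({r.status})")
```

In practice, the text would come from running something like `ipmitool -I lanplus -H <bmc-address> -U <user> -P <password> sdr` against the BMC rather than from a hard-coded sample.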

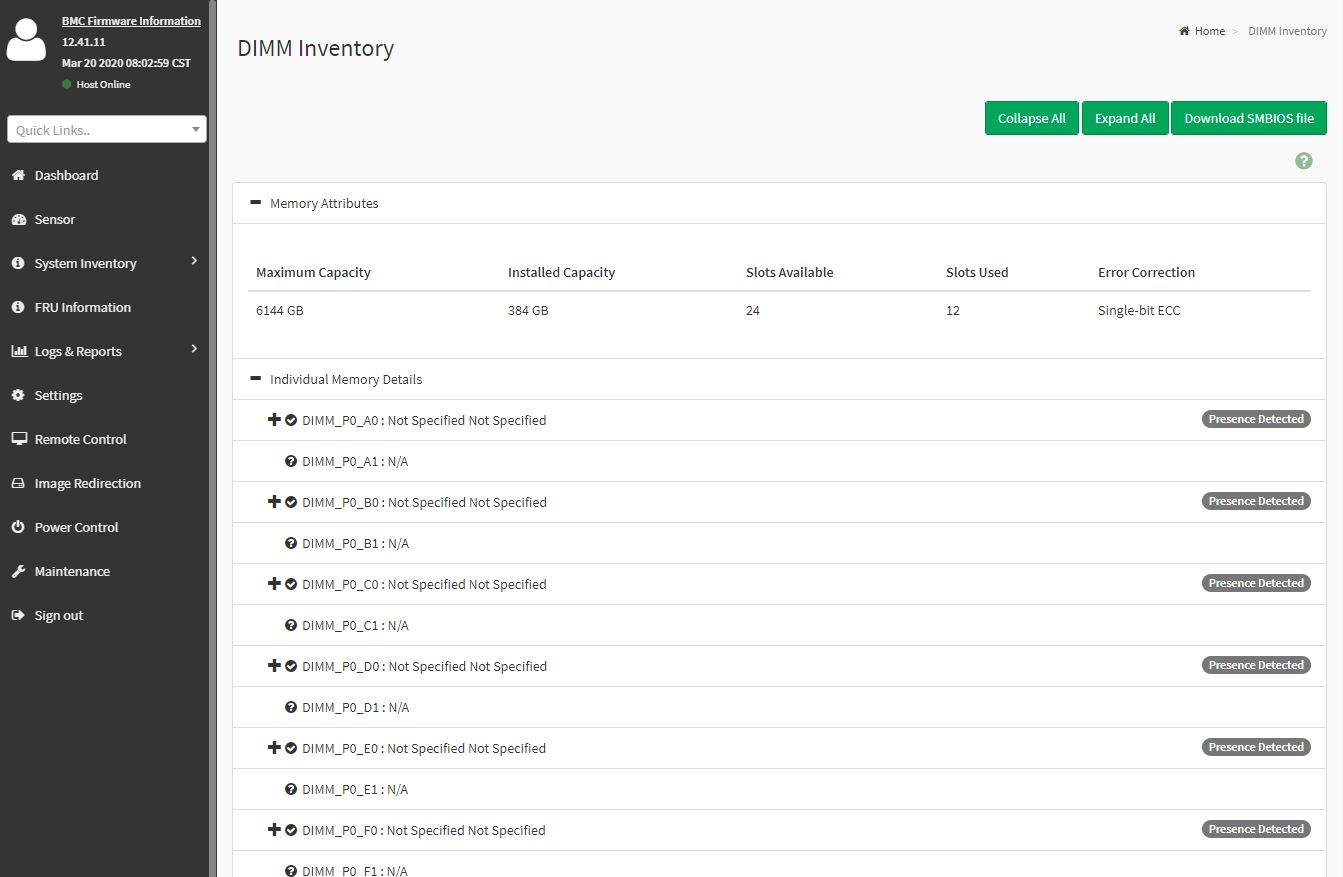

Other tasks, such as viewing the node inventory, are also available. Here one can see that this particular node is populated with generic TSV memory.

Another feature is the new HTML5 iKVM for remote management. We think this is a great solution. Some other vendors implemented HTML5 iKVM clients but initially left out virtual media support. Gigabyte's client offers virtual media and power control, all from a single browser console.

We want to emphasize that this is a key point of differentiation for Gigabyte. Many large system vendors such as Dell EMC, HPE, and Lenovo charge for iKVM functionality. This feature is an essential tool for remote system administration these days. Gigabyte's inclusion of the functionality as a standard feature is great for customers, who have one less license to worry about.

The web GUI is perhaps the easiest part to show online. However, the system also has a Redfish API for automation. This is in line with what other server vendors are doing, as Redfish has become the industry-standard API for server management.
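To illustrate the kind of automation Redfish enables, here is a minimal sketch using only the Python standard library. It assumes the standard DMTF Redfish layout: a Systems collection at `/redfish/v1/Systems` whose `Members` carry `@odata.id` links, and a `#ComputerSystem.Reset` action advertised on each system. The `Self` system ID, the BMC address `192.0.2.10`, and the credentials are hypothetical placeholders, not values confirmed for this board. The code builds the power-control request but does not send it.

```python
from __future__ import annotations

import base64
import json
import urllib.request

# Hypothetical payloads in the standard Redfish shape; read real IDs from your BMC.
SYSTEMS_COLLECTION = {
    "@odata.id": "/redfish/v1/Systems",
    "Members": [{"@odata.id": "/redfish/v1/Systems/Self"}],
}
SYSTEM = {
    "@odata.id": "/redfish/v1/Systems/Self",
    "Actions": {
        "#ComputerSystem.Reset": {
            "target": "/redfish/v1/Systems/Self/Actions/ComputerSystem.Reset",
            "ResetType@Redfish.AllowableValues": ["On", "ForceOff", "GracefulShutdown", "ForceRestart"],
        }
    },
}


def system_paths(collection: dict) -> list[str]:
    """Return the @odata.id of every member of a Redfish Systems collection."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


def reset_request(bmc_host: str, system: dict, reset_type: str,
                  user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST to the system's ComputerSystem.Reset action."""
    action = system["Actions"]["#ComputerSystem.Reset"]
    allowed = action.get("ResetType@Redfish.AllowableValues")
    if allowed is not None and reset_type not in allowed:
        raise ValueError(f"{reset_type!r} is not one of {allowed}")
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"https://{bmc_host}{action['target']}",
        data=json.dumps({"ResetType": reset_type}).encode(),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


if __name__ == "__main__":
    print(system_paths(SYSTEMS_COLLECTION))
    req = reset_request("192.0.2.10", SYSTEM, "ForceRestart", "admin", "example")
    print(req.get_method(), req.full_url)
```

Discovering the action `target` from the system resource, rather than hard-coding the path, keeps the sketch portable across Redfish implementations. To actually send the request, pass it to `urllib.request.urlopen()`. Many BMCs ship with self-signed certificates, so you may need to supply a suitable SSL context.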

As a quick note, this was our first Gigabyte system with the unique BMC password feature enabled. Going forward, these systems will ship with unique passwords because a California law mandates the practice.

If you are looking for it, we found the unique BMC password on the CPU cover.

Our advice, regardless of vendor, is to have a plan for recording each system's default password, or for replacing it with your own, before deployment. It would be an extremely rough day if you installed twenty of these servers in a rack only to realize you had piled the CPU covers together and forgotten which password goes with which machine.

Test Configuration

For this test, we used the following setup:

- Motherboard: Gigabyte MZ72-HB0

- CPU: 2x AMD EPYC 7742

- RAM: 16x 32GB DDR4-3200 ECC RDIMMs

- Storage: 1x Kioxia CD6 7.68TB, 1x Samsung PM1733 3.84TB (both PCIe Gen4)

- NIC: Supermicro 100GbE (Intel 800 series based)
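For reference, the memory configuration above works out as follows. This is a back-of-the-envelope sketch that assumes one DIMM per channel across the 8 memory channels of each EPYC 7742 and a 64-bit (8-byte) data path per DDR4 channel. The results are theoretical peaks, not measured bandwidth, and `peak_bandwidth_gbs` is an illustrative helper name.

```python
def peak_bandwidth_gbs(mt_per_s: int, channels: int, bus_bytes: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal).

    transfers per second x bytes per transfer x number of channels
    """
    return mt_per_s * bus_bytes * channels / 1000


DIMMS, DIMM_GB = 16, 32                  # 16x 32GB DDR4-3200 RDIMMs
CHANNELS_PER_SOCKET, SOCKETS = 8, 2      # assumed: one DIMM per channel

if __name__ == "__main__":
    print(f"capacity:   {DIMMS * DIMM_GB} GB")
    print(f"per socket: {peak_bandwidth_gbs(3200, CHANNELS_PER_SOCKET):.1f} GB/s")
    print(f"system:     {peak_bandwidth_gbs(3200, CHANNELS_PER_SOCKET * SOCKETS):.1f} GB/s")
```

Under these assumptions, the system has 512GB of memory and roughly 204.8 GB/s of theoretical bandwidth per socket, or 409.6 GB/s across both. Populating only half the channels halves the bandwidth figure.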

On the CPU side, this platform can take CPUs of up to 280W TDP, such as the AMD EPYC 7H12. We are instead using the more mainstream EPYC 7742. On these chips, one can configure TDP up to 240W in the Gigabyte platform.

With the Xeon E5 generations of servers, dual 8-12 core CPUs were common for this type of EATX motherboard. If one needs lower core counts and lower memory bandwidth in exchange for lower power consumption and costs, there are a number of 4-channel memory SKUs, such as the AMD EPYC 7272, that can be a great fit.

Let us move on to performance in our review.

This is fantastic. With water-cooled solutions, you can easily run three high-end GPUs and enough memory for local machine learning and video editing applications. Also, with RAID controller cards, you can have plenty of storage, with 48 drives on the accessory cards alone. I look forward to this hitting the market.

Great job!

One thing to notice: the CPUs are positioned directly behind each other to leave room for a long PCIe card in the first slot. This will hurt cooling for CPU1, since hot air passing over CPU0 will be blowing on it. I think that is why they assigned 56 PCIe lanes to CPU0 vs. only 36 PCIe lanes to CPU1.

In general, it is a great design.

Free HBO! Nice

Nice review, but I am missing the component temperatures during the test, so we could compare with the Supermicro H11DSi.

Hi Erik, we typically do not publish temperatures the way you would see on a consumer site. Most of these boards rely on chassis cooling and PWM fan control, so temperatures are not as much of an issue as they are on the consumer side.

Frankly, temps are an almost irrelevant point of differentiation with the H11DSi since the H11DSi is a PCIe Gen3 motherboard while this is a PCIe Gen4 board. To most, the ability to support PCIe Gen4 v. Gen3 is the significantly more important point of differentiation.

I am interested in building a computer for CFD. Would it be possible to start with one CPU and one set of memory and have it run, then add a second CPU later?

I can never find a retailer for the newer AMD Gigabyte server boards. Any suggestions?

Sona.de has the board in Europe. I got mine there a few months ago and have been using it as a workstation board, and it's been very good.

I'd like to see some more workstation-related benchmarks. There seem to be quite a few people in the forums using EPYC/Rome boards for workstations.

Small error in the review: there are 100 lanes available, not 88, and 128 are used between the CPUs, for a total of 228/256 used (according to AnandTech there are actually 129 for each CPU, four extra lanes for the BMC). It would be interesting to see a comparison if you review the upcoming ASRock ROME2D16-2T board, which only uses 92 lanes between the CPUs, to see if there is much of a performance difference. That board has a few more PCIe lanes available, five x16 slots, and two M.2 slots, so it may be even better as a workstation board.

I bought this board. Everything is fine except for a boot loop. If I reset, reboot, or power cycle, the motherboard powers on for 8 seconds and then cuts the power. Then it tries to boot again for another 8 seconds. This happens over and over, and it won't boot into the OS.

However, if I shut it down and unplug the power cable or switch off the PSU for 5-10 minutes, it can boot up properly again.

Has anyone experienced this issue? I have tried adjusting BIOS settings and have upgraded the BMC and BIOS to the latest versions.