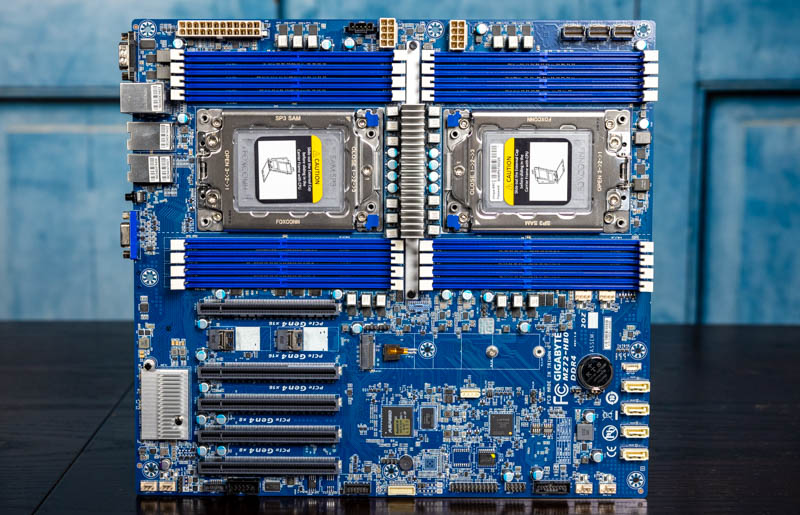

While much of the server industry has migrated to custom form factors over the past few years, there are still a large number of organizations and individuals that want to take advantage of the plethora of custom chassis available. Using a custom chassis offers the flexibility to create a tailored solution for a specific purpose. In our Gigabyte MZ72-HB0 review, we are going to see how this EATX motherboard is built to offer server builders a unique set of capabilities to create AMD EPYC 7002/7003-based systems with PCIe Gen4 support.

Gigabyte MZ72-HB0 Overview

The MZ72-HB0 uses the EATX form factor. That means that the motherboard is 305x330mm or about 12×13 inches. That is actually relatively small compared to many dual-socket AMD EPYC motherboards on the market today, and it will also be small looking ahead to the Ice Lake generation of Intel Xeon CPUs, simply due to the size of the sockets and memory. Better said, even though to some this may seem like a large motherboard, it is relatively compact for this class of dual-socket motherboard.

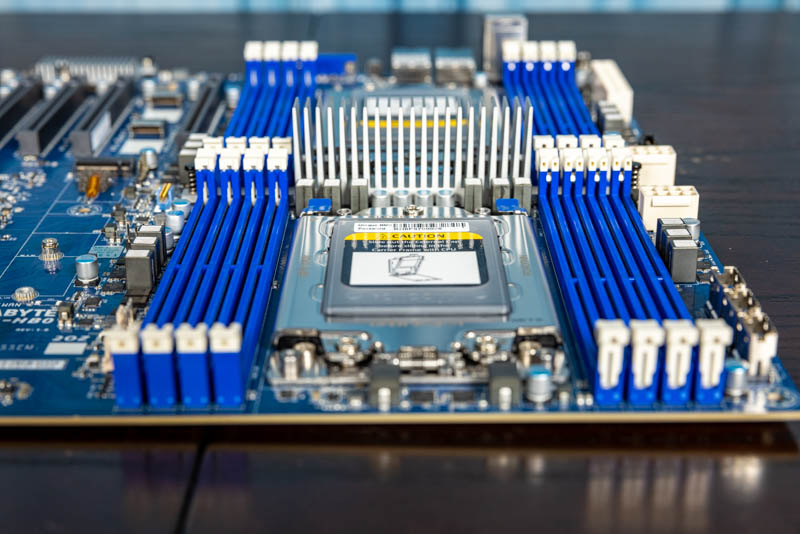

The airflow is a key differentiator of this platform. It follows traditional front-to-back server airflow. Since the motherboard is designed to fit in an EATX chassis, one needs to ensure the chassis provides proper airflow. Many server chassis do, but some workstation chassis do not. One also has to be mindful of clearances. Standard server heatsinks work fine, and liquid cooling can work too, but one may need to be careful if larger tower coolers are used given the memory layout and chassis clearances.

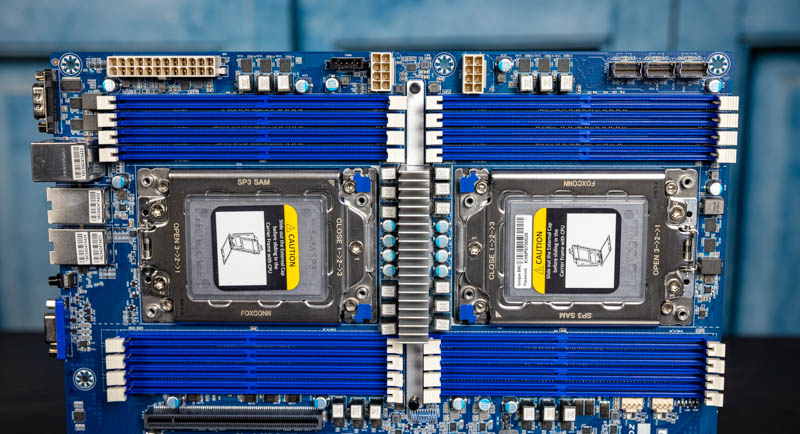

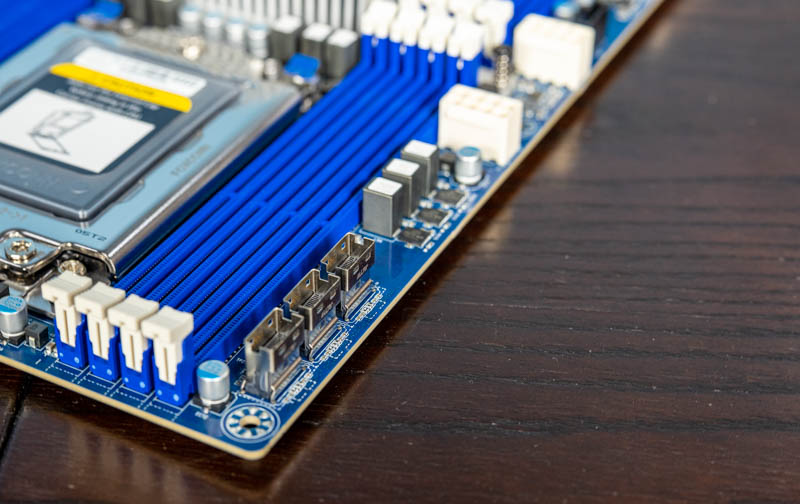

Looking at the top of the motherboard, we can see the dual AMD SP3 sockets. These sockets fit AMD EPYC 7002 “Rome” CPUs and should support EPYC 7003 “Milan” parts as those arrive more broadly in 2021. Each CPU socket has eight DIMM slots, or one per memory channel. EPYC CPUs can handle two DIMMs per channel (2DPC), but this is a 1DPC design, meaning that to fit EATX one gives up half of the DIMM slots found on some larger server motherboards. Again, this is a compact motherboard, and one can see how much space these DDR4-3200 DIMM slots take up. We will quickly note that these DIMM slots have one latching and one fixed side. We generally prefer two latching sides; however, that is a preference.
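As a rough sketch of what the 1DPC layout means in numbers, the Python snippet below counts DIMM slots and estimates theoretical peak memory bandwidth. It assumes each DDR4 channel is 64 bits (8 bytes) wide and ignores real-world efficiency. The constant and function names are illustrative and do not come from Gigabyte or AMD.

```python
# Assumptions: 8 memory channels per EPYC socket (as described above),
# a 64-bit (8-byte) data path per DDR4 channel, and DDR4-3200 speeds.
SOCKETS = 2
CHANNELS_PER_SOCKET = 8
TRANSFER_RATE_MT_S = 3200   # DDR4-3200: mega-transfers per second
BYTES_PER_TRANSFER = 8      # 64-bit channel width


def dimm_slots(sockets, channels, dimms_per_channel):
    """Total DIMM slots for a given DIMMs-per-channel (DPC) design."""
    return sockets * channels * dimms_per_channel


def peak_bandwidth_gb_s(channels, rate_mt_s=TRANSFER_RATE_MT_S):
    """Theoretical peak bandwidth in GB/s across the given channels."""
    return channels * rate_mt_s * BYTES_PER_TRANSFER / 1000


if __name__ == "__main__":
    print("1DPC slots:", dimm_slots(SOCKETS, CHANNELS_PER_SOCKET, 1))
    print("2DPC slots:", dimm_slots(SOCKETS, CHANNELS_PER_SOCKET, 2))
    print("Peak GB/s per socket:", peak_bandwidth_gb_s(CHANNELS_PER_SOCKET))
```

Under these assumptions, the 1DPC design halves the slot count (16 instead of 32) but not the channel count, so the theoretical peak of about 204.8 GB/s per socket is unaffected as long as every slot is populated.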

Along the top of the motherboard, we can see the 24-pin ATX power connector along with two 8-pin CPU power connectors. Most EATX server chassis are designed for these to be in the top right of the motherboard so you may need an ATX power extension cable to reach on this motherboard, but that is chassis and power supply dependent.

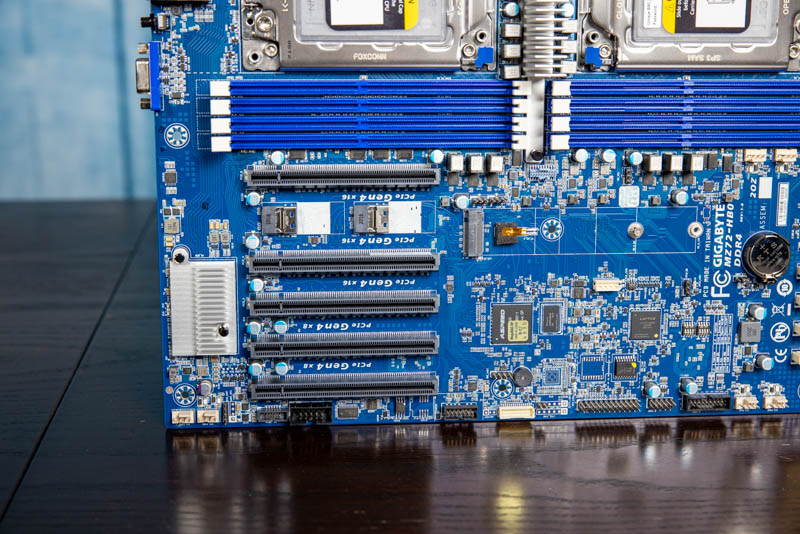

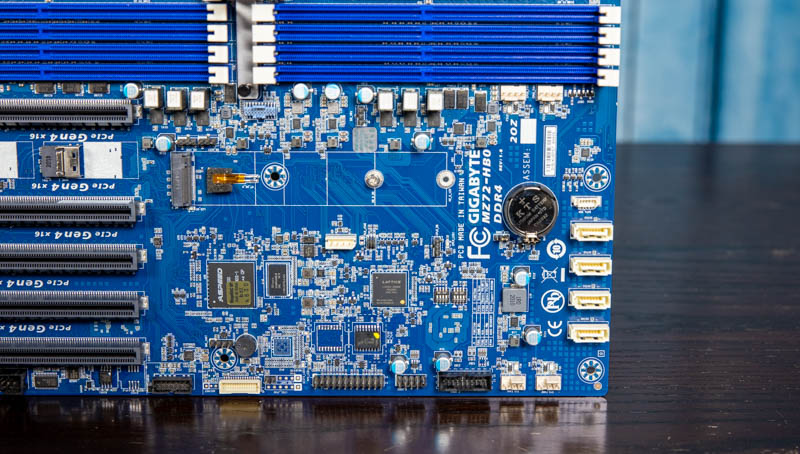

Moving to I/O, the MZ72-HB0 is a PCIe Gen4 system. There are a total of five PCIe slots that are all x16 physical. Two are PCIe Gen4 x8 electrical and three are x16 electrical, including the slot closest to the CPUs, which has room for a double-width cooler. Instead of placing another PCIe slot in that space, Gigabyte adds two PCIe Gen4 x4 links via SlimSAS headers. One can use these for cabled peripherals, with the most common being PCIe Gen4 NVMe SSDs such as the Kioxia CD6 and Kioxia CM6 we reviewed. This is an excellent way to get more PCIe density from the system instead of having a blank spot or a spot where a slot would be covered by a dual-width GPU, FPGA, or AI accelerator.

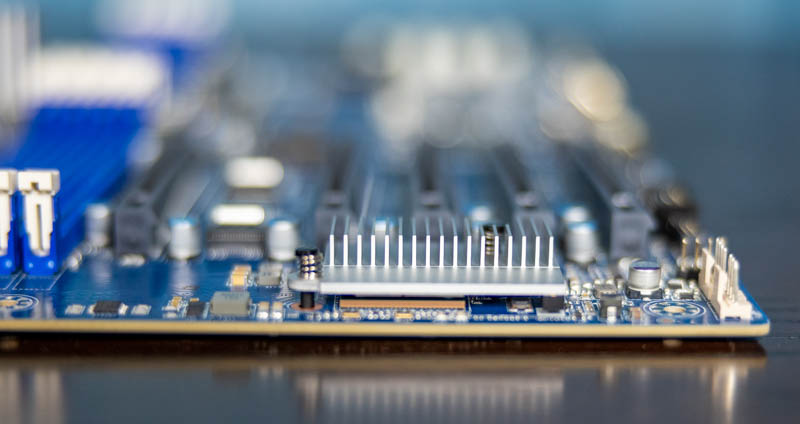

Next to the slots, we find a lone M.2 PCIe Gen4 x4 NVMe SSD slot. This can take M.2 2280 (80mm) and 22110 (110mm) NVMe SSDs. We would have liked to see Gigabyte find a way to add a second slot on a future revision, but again space is tight so this makes sense.

As we continue along on our hardware tour, take a look at the 4-pin PWM fan headers. There are six total. Two are under the DIMM slots for the right CPU. The other four are along the bottom edge. Many chassis assume that these are relatively close to the right side of the motherboard, so one needs to ensure that the two bottom-left PWM fan headers work with the cable lengths one has. If cables are too short, one can use extension cables, so this is not a major factor. We just want readers to be aware of it.

For traditional storage, we get four SATA III 7-pin ports. To be frank here, we would have preferred more high-density SlimSAS over these headers, especially if we could get more SATA/PCIe lane ports from that space. In modern motherboard designs, the reason we see these is that both the connectors and the 7-pin SATA cables are very inexpensive. Some customers building systems from motherboards like this are cost-sensitive enough to demand these 7-pin SATA connections. One day we hope that if SATA connectivity is still included, it is modernized as we see with the other SATA/PCIe ports on the motherboard.

There are three SlimSAS ports at the top right of the MZ72-HB0. Each of these small ports carries four PCIe Gen4 lanes or four SATA III lanes. We really like this design since it gives one the option to connect legacy hard drives or more modern PCIe Gen4 NVMe storage while retaining the same motherboard design.
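To put the two modes of a SlimSAS port in perspective, here is a minimal Python sketch comparing theoretical line rates. It assumes the standard signaling figures (PCIe Gen4 at 16 GT/s per lane with 128b/130b encoding, SATA III at 6 Gb/s per port with 8b/10b encoding) and ignores protocol overhead, so real drives will deliver less. The function names are illustrative.

```python
def pcie_gen4_gb_s(lanes):
    """Theoretical PCIe Gen4 throughput in GB/s for a link of `lanes` lanes.

    Assumes 16 GT/s per lane and 128b/130b line encoding.
    """
    return 16.0 * lanes * (128 / 130) / 8


def sata3_gb_s(ports):
    """Theoretical SATA III throughput in GB/s across `ports` ports.

    Assumes 6 Gb/s per port and 8b/10b line encoding.
    """
    return 6.0 * ports * (8 / 10) / 8


if __name__ == "__main__":
    # One SlimSAS port: either a x4 PCIe Gen4 link or four SATA III ports.
    print(f"PCIe Gen4 x4: {pcie_gen4_gb_s(4):.2f} GB/s")
    print(f"4x SATA III:  {sata3_gb_s(4):.2f} GB/s")
```

On these assumptions a single port offers roughly 7.9 GB/s in PCIe mode versus 2.4 GB/s spread over four SATA drives, which illustrates why the dual-purpose ports are attractive for NVMe storage.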

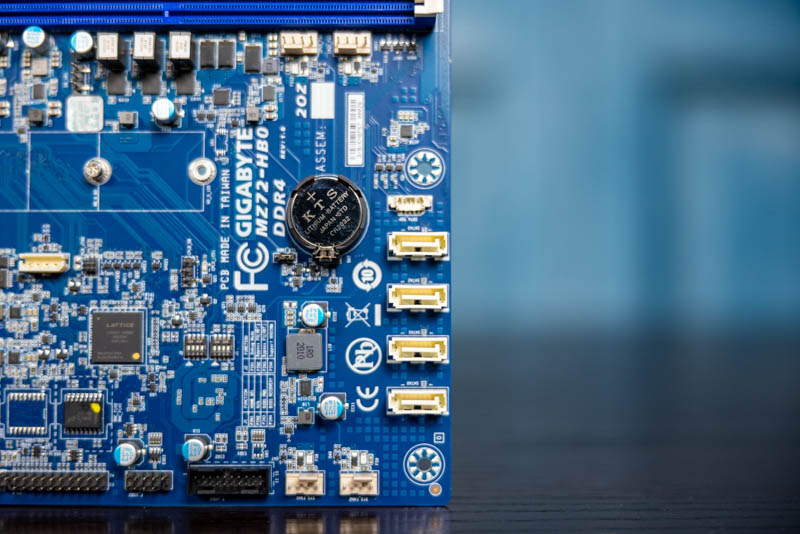

On the rear of the unit, we have a fairly standard I/O format. We have legacy serial and VGA ports on either side. We also have two USB 3 ports along with an out-of-band management port.

The two primary networking RJ45 ports are something a bit different. These are actually 10GBase-T ports based on the Broadcom BCM57416 NIC. This NIC sits under a small heatsink on the edge of the motherboard near the PCIe slots.

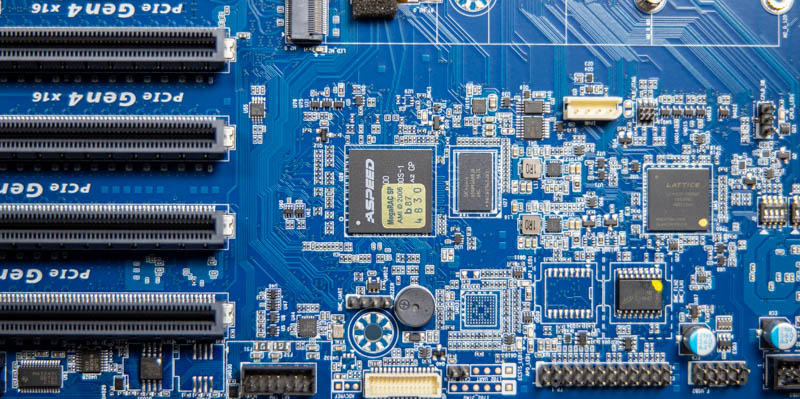

In terms of management, we are going to get to that functionality shortly. This is an industry standard AST2500 solution.

Overall, there are compromises made to achieve a compact EATX size. We only have half of the maximum DIMM capacity. We also only have 88 exposed PCIe lanes instead of 128. We do also get some connectivity for the four SATA III ports and the Broadcom NIC, but there are a few lanes on the platform not being used. Still, at some point, there are limits to what can fit on an EATX motherboard while maintaining PCIe Gen4 speeds and reasonable PCB costs.
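As a quick sanity check on the lane count, the sketch below tallies only the connectors described in this overview, using the electrical widths quoted in the text. The labels and the `total_lanes` helper are illustrative, and this assumes the 88-lane figure counts exactly these slots, headers, and ports.

```python
# Each entry: (description, electrical lanes per connector, connector count).
# Widths and counts are taken from the overview text; labels are illustrative.
EXPOSED_LINKS = [
    ("PCIe slot, x16 electrical", 16, 3),
    ("PCIe slot, x8 electrical", 8, 2),
    ("SlimSAS header near the slots, x4", 4, 2),
    ("M.2 slot, x4", 4, 1),
    ("SlimSAS port at top right, x4", 4, 3),
]

PLATFORM_LANES = 128  # figure the review compares against


def total_lanes(links):
    """Sum lanes across all connectors in the list."""
    return sum(width * count for _, width, count in links)


if __name__ == "__main__":
    exposed = total_lanes(EXPOSED_LINKS)
    print(f"Exposed lanes: {exposed} of {PLATFORM_LANES}")
```

Counted this way, the total comes to 88 lanes. The SATA III ports and the onboard Broadcom NIC are left out because the text does not state how many lanes they use.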

Let us take a look at the PCIe configuration via the block diagram next.

This is fantastic, and with water-cooled solutions you can easily run 3x high-end GPUs and enough memory for local machine learning and video editing applications. Also, with RAID controller cards, you can have plenty of storage with 48 drives in the accessory cards alone. I look forward to this hitting the market.

Great JOB

One thing to notice: the CPUs are positioned exactly behind each other to give room for long PCIe cards at the first slot. This will have a negative effect on cooling CPU1, since hot air passing through CPU0 will be blowing on it. I think that is why they assigned 56 PCIe lanes on CPU0 vs only 36 PCIe lanes on CPU1.

In general it is a great design

Free HBO! Nice

nice review, but i am missing the temps of the components during the test so we can compare with supermicro h11dsi

Hi erik – We typically do not publish temps as you would see on a consumer site. Most of these boards utilize chassis cooling and PWM fan control so there is not as much of an issue as we see on the consumer side.

Frankly, temps are an almost irrelevant point of differentiation with the H11DSi since the H11DSi is a PCIe Gen3 motherboard while this is a PCIe Gen4 board. To most, the ability to support PCIe Gen4 v. Gen3 is the significantly more important point of differentiation.

Interested in building a computer for CFD. Would it be possible to start off with one cpu and set of memory and have it run, then upgrade with a second cpu later?

I can never find a retailer for the newer AMD Gigabyte server boards. Any suggestions?

Sona.de has the board in europe, got mine there a few months ago and have been using it as a workstation board and its been very good.

I'd like to see some more workstation-related benchmarks. There seem to be quite a few people using EPYC/Rome boards for workstations in the forums.

Small error in the review: there are 100 lanes available, not 88, and 128 used between CPUs, for a total of 228/256 used (according to AnandTech there are actually 129 for each CPU, four extra lanes for the BMC). It would be interesting to see a comparison if you review the coming ASRock ROME2D16-2T board that only uses 92 lanes between CPUs, to see if there is much performance difference. That board has a few more PCIe lanes available, 5 x16 slots, and two M.2 slots, so it may be even better as a workstation board.

I bought this board. Everything is fine except the boot loop. If I reset, reboot, or power cycle, the mobo boots up for 8 seconds and then cuts off the power. Then it tries to boot up again for another 8 seconds. This keeps happening over and over again and it won't boot up the OS.

However if I shut it down and remove the power cable / switch off the PSU for 5-10 minutes, then it can boot up properly again.

Has anyone experienced this issue? I have tried adjusting the BIOS, upgraded BMC and BIOS to latest version.