Addressing the Elephant in the Room: Is the Gigabyte MZ01-CE1 a Workstation Motherboard?

We have grown less comfortable calling the Gigabyte MZ01-CE1 a “workstation” motherboard. Gigabyte does market the motherboard as a “server/workstation” product.

Typically with a workstation product, at the time of this review, we would see some large differences such as:

- Onboard audio

- Additional USB 3.0 Ports

- USB Type-C / USB 3.1

- Onboard 7-pin SATA (note there are Slim SAS SATA ports)

- Multiple onboard M.2 slots (not just one)

- Excellent fan control for quiet operation

Most of these are easily attainable via PCIe add-in card slots. At the same time, doing so means one is utilizing the very slots that make this motherboard so unique. This is realistically a limitation of the ATX form factor and the ever-enlarging sockets.

Finally, as of this writing, one can utilize high-core-count EPYC processors, like the AMD EPYC 7551P. With that said, the AMD EPYC 7371 is a better workstation fit due to higher clock speeds. Its clocks are not quite in the 4.5-5.0GHz range common on many consumer and higher-end workstation parts, but they come close to 4.0GHz, which at least makes the inevitable single-threaded tasks bearable. The processor selection is still not optimal for workstations, but it is usable.

Fan control can be done via IPMI, but that is a different level of involvement than having a nice GUI. If you are using multiple GPUs, cooling noise will become considerable. With four GPUs using around 1kW, plus storage, the CPU, and RAM, the system will draw almost 1.4kW as a workstation. It will also be extremely loud, as we will cover with the W291-Z00. For US 15A 120V circuits, that is very near the 80% sustained usage circuit rating (120V × 15A × 0.8 = 1.44kW).
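The circuit arithmetic above can be checked with a few lines of Python. This is only a sketch: the function names are our own for illustration, and the 1.4kW load is the rough estimate from the text rather than a measured figure.

```python
def sustained_limit_watts(volts: float, amps: float, derate: float = 0.8) -> float:
    """Continuous-load budget for a circuit, using the 80% sustained-usage figure."""
    return volts * amps * derate


def headroom_watts(load_watts: float, volts: float = 120.0, amps: float = 15.0) -> float:
    """Watts left before the sustained budget is reached (negative means over budget)."""
    return sustained_limit_watts(volts, amps) - load_watts


if __name__ == "__main__":
    limit = sustained_limit_watts(120.0, 15.0)  # US 15A 120V circuit
    load = 1400.0                               # rough four-GPU estimate from the text
    print(f"Sustained limit: {limit:.0f} W")
    print(f"Headroom at {load:.0f} W: {headroom_watts(load):.0f} W")
    print(f"Utilization of sustained limit: {load / limit:.1%}")
```

Anything else plugged into the same circuit, such as a monitor, would eat into that small remaining margin.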

If you are using one or two GPUs, the Gigabyte MZ01-CE1 is more manageable. Power usage is more acceptable for a standard workstation, as are noise levels. One can also get add-in cards for additional functionality one may miss in this server platform. At the same time, using fewer than three or four GPUs means that there are other platforms that will compete in the space.

The Gigabyte MZ01-CE1 can be used as a workstation. However, we want our readers to go into that fully knowing that there are going to be a few challenges involved with using a server motherboard as a workstation part. To decree this a workstation motherboard without noting those challenges would be disingenuous. If you are thinking of using the MZ01-CE1 for your own or your customers’ workstations, there are a few integration challenges, even when using an off-the-shelf tower server solution like the Gigabyte W291-Z00.

Gigabyte MZ01-CE1 Topology and Block Diagram

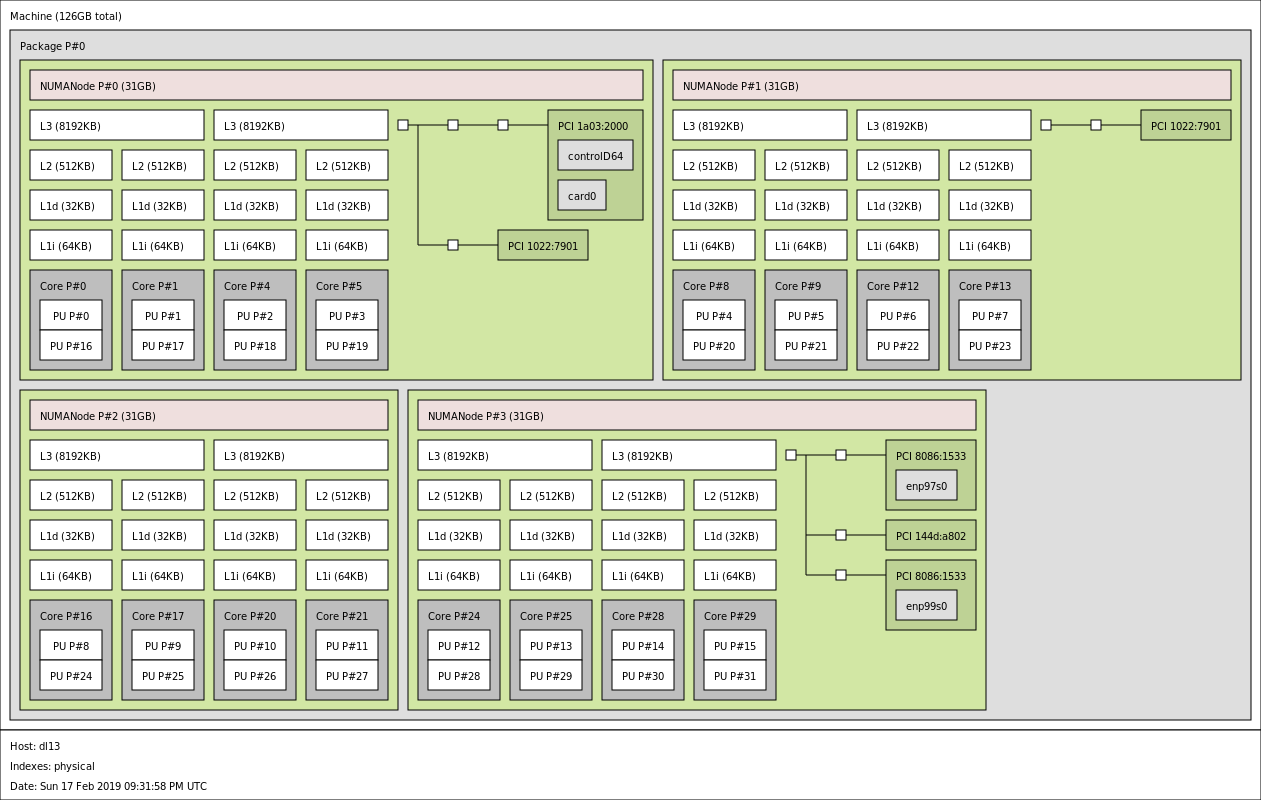

With the AMD EPYC 7001 “Naples” generation, system topology is more important in a single socket form factor than it has been for some time. We have started showing topology maps for our system and motherboard reviews.

Here we had an M.2 NVMe installed on the motherboard and wanted to show what this looks like:

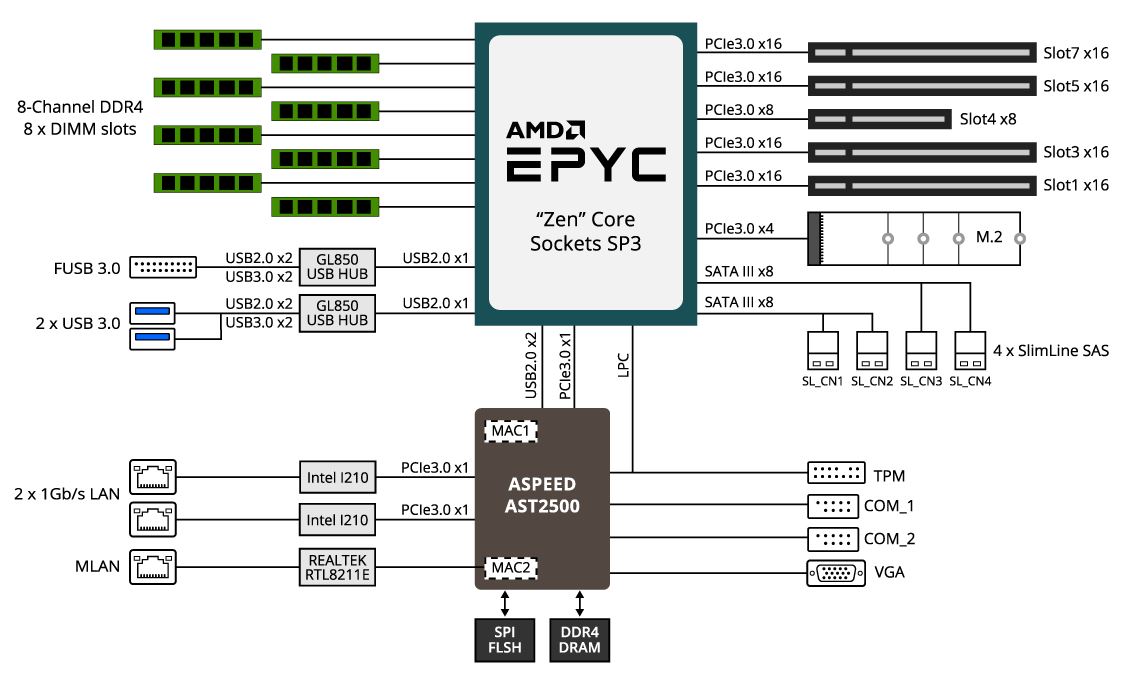

Here is the official block diagram for the motherboard:

As one can see, this is a robust block diagram for an ATX platform. We would have liked to have seen AMD tie PCIe lanes to Naples dice. However, with Rome coming, we can see why they would not.

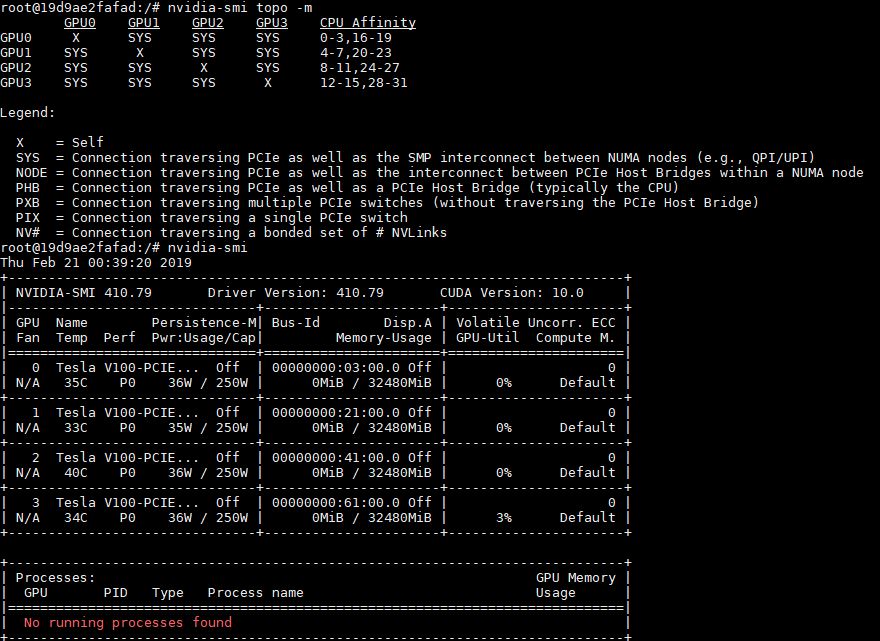

If one installs four GPUs, all of the GPU lanes will have to traverse the SMP interconnect. Here is what NVIDIA topology looks like with four NVIDIA Tesla V100 32GB GPUs installed:

NVIDIA needs to update its SMP interconnect from “e.g. QPI/ UPI” to “e.g. UPI/ Infinity Fabric” to handle AMD EPYC systems like these.
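For readers who want to check their own systems, `nvidia-smi topo -m` prints a GPU-to-GPU matrix in which `SYS` marks a path that traverses PCIe as well as the SMP interconnect. Below is a minimal Python sketch that tallies link types from such a matrix. The `SAMPLE` text is a simplified, hypothetical stand-in and not output captured from our test system (real output includes extra columns such as CPU affinity), and `parse_topology` is a helper name of our own.

```python
from collections import Counter

# Hypothetical, simplified matrix in the style of `nvidia-smi topo -m`.
# It is illustrative only and omits the extra columns real output carries.
SAMPLE = """
        GPU0    GPU1    GPU2    GPU3
GPU0     X      SYS     SYS     SYS
GPU1    SYS      X      SYS     SYS
GPU2    SYS     SYS      X      SYS
GPU3    SYS     SYS     SYS      X
"""


def parse_topology(text: str) -> dict:
    """Return {(gpu_a, gpu_b): link_type}, one entry per unordered GPU pair."""
    rows = [line.split() for line in text.strip().splitlines() if line.strip()]
    header = rows[0]
    links = {}
    for row in rows[1:]:
        src, cells = row[0], row[1:]
        for dst, cell in zip(header, cells):
            if src < dst:  # record each pair once and skip the "X" diagonal
                links[(src, dst)] = cell
    return links


if __name__ == "__main__":
    links = parse_topology(SAMPLE)
    for link_type, count in Counter(links.values()).items():
        print(f"{link_type}: {count} GPU pair(s)")
```

If every pair reports `SYS`, as in this illustrative sample, all peer-to-peer traffic crosses the interconnect, which matches the four-GPU behavior described above.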

Next, we are going to look at the management solution which is a significant departure from what Gigabyte used previously.

Titan V waterblocks + the Asrock Rack EPYCD8-2T might make things easier.

Pihole called…they want their dashboard back.

Very nice: this and the CE0 model. Just what the doctor ordered! When Rome lands these will make deadly workstation cum GPU boxes.

I have set up a server with a Gigabyte MZ31-AR0 and Tesla K80 cards, and I get a similar topology to yours, i.e. the (SYS) option. When I run a P2P test, the bandwidth between 2 GPUs is only 3 GB/s if they are on different cards and 8 GB/s if they are on the same card. IOMMU is disabled, as is ACS control. Is the reason for getting half the speed that I am traversing the SMP interconnect between two different GPUs? Strangely enough, the Tesla K80 should have a theoretical bandwidth of 16 GB/s, at least on the same Tesla K80 card, since I use PCI Express 3.0 x16. Any suggestion would be highly appreciated. Many thanks.