Perhaps the most hotly requested motherboard review of the past quarter has been the Gigabyte MZ01-CE0. The reason is simple: this is one of the only GPU-optimized, single-socket AMD EPYC ATX motherboards on the market. While AMD EPYC truly shines in motherboards that tightly integrate with a chassis, the ATX “channel” market is essential since it often trailblazes new use cases.

The Gigabyte MZ01-CE0 is part of the second salvo of AMD EPYC motherboards. When AMD EPYC first launched, the initial motherboards targeted fairly specific use cases. In this second salvo, vendors are releasing motherboards that are more general purpose in nature. A vital feature of the AMD EPYC 7000 platform is the number of PCIe lanes available from a single socket, and readers have told us they want more PCIe lanes and a standard ATX form factor. The MZ01-CE0 addresses both requests.

In our review, we are going to take a look at the hardware, the management, and key connectivity details, and then end with our final thoughts.

Gigabyte MZ01-CE0 Hardware Overview

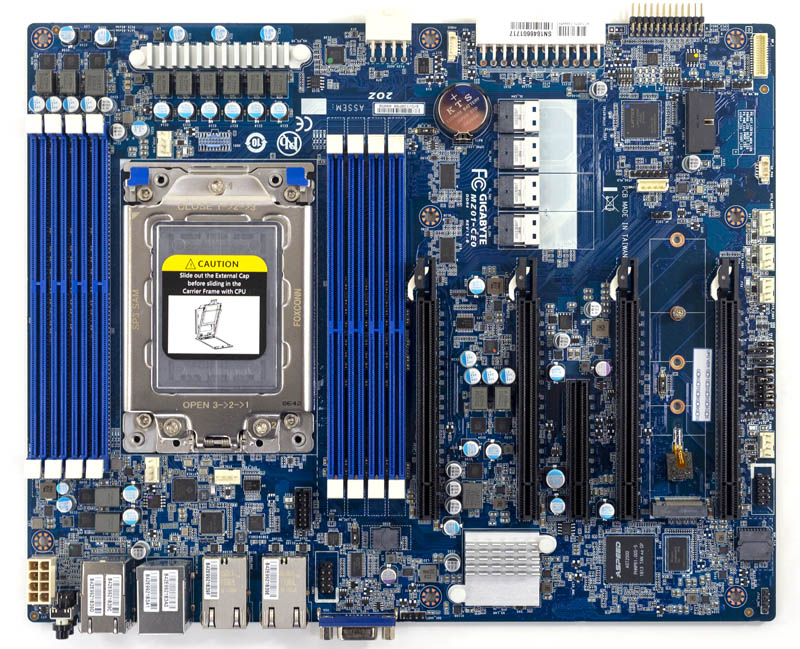

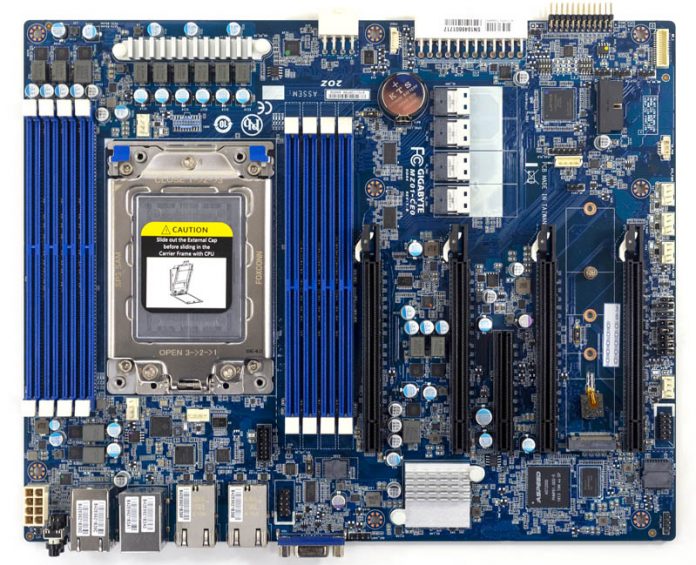

The Gigabyte MZ01-CE0 is a standard ATX form factor motherboard, which means it measures 12″ x 9″. Most importantly, it fits a wide variety of chassis. ATX has been around so long that, for our recent Ultra EPYC project, we installed this motherboard in a twelve-year-old Sun Ultra 24 workstation without needing to cut or drill. Countless chassis support ATX motherboards, making this a tremendous asset for the platform.

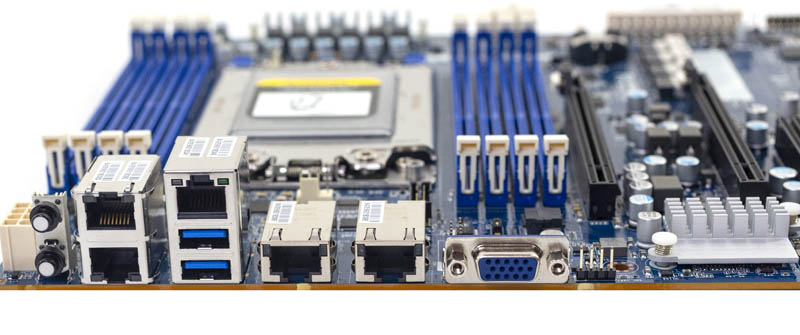

We are going to start this review in the reverse of our usual STH server motherboard review order. Looking at the rear I/O panel, there are power/reset buttons next to two 1GbE NICs. The IPMI management NIC sits atop two USB 3.0 ports. Next are dual 10Gbase-T ports providing 10GbE on this model. Gigabyte also offers the MZ01-CE1, which drops 10GbE to lower costs; we will have a review of that platform soon. One can also see a legacy VGA port for KVM cart use.

An Intel X550 NIC provides the 10Gbase-T connectivity. The X550 is one of Intel’s newer, higher-end 10Gbase-T NICs, so its power consumption and features are significantly better than those of older generations. Even so, it still requires a heatsink. One oddity with the Gigabyte MZ01-CE0 is that this heatsink’s fins are aligned for top-to-bottom airflow, perpendicular to the front-to-back airflow the rest of the board is designed around.

One can also see the ubiquitous ASPEED AST2500 BMC here. We are going to cover the management solution later in this review.

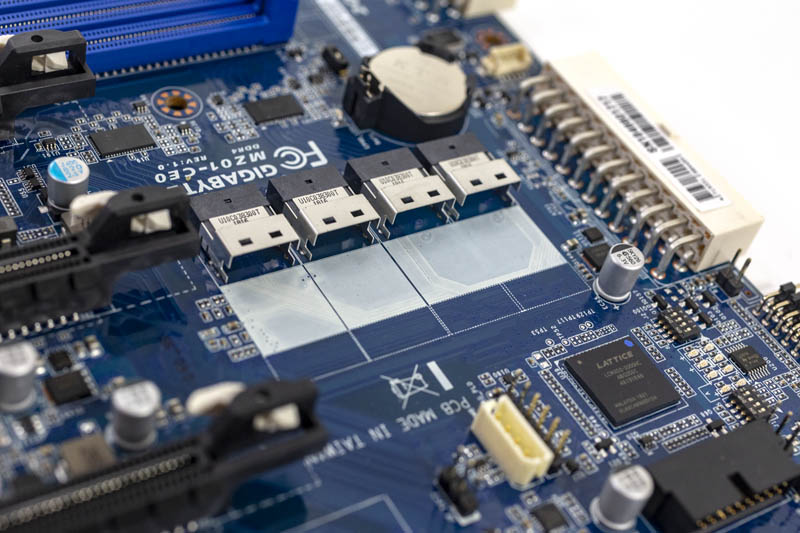

Storage is provided by four SlimSAS ports. While the connector name includes “SAS”, these are SATA-only ports, and each breaks out to four drives for a total of sixteen SATA ports. Sixteen is a generous figure: if you want to build a SATA storage array, this offers direct attachment through the AMD SATA controller. SlimSAS cables are not as common as other storage cables, but prices are reasonable.

PCIe expansion is a key selling point for the Gigabyte MZ01-CE0. There are four PCIe 3.0 x16 slots with double-width spacing; in other words, they are designed for high-power GPUs and FPGAs. A GPU installed in the top x16 slot is tricky to unlatch from the retention mechanism if DIMMs are installed. Likewise, a double-width card in the bottom x16 slot requires a chassis with at least eight expansion slot openings. Many ATX server and workstation chassis provide only seven, so this is a consideration. Still, the upshot of this design is that one can install four GPUs in a relatively standard chassis.
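To make the chassis constraint concrete, here is a minimal Python sketch. It assumes, as the double-width spacing implies, that the four x16 slots sit at positions 1, 3, 5, and 7 of a standard seven-opening ATX layout; those slot positions and the `openings_needed` helper are our own illustrative assumptions, not Gigabyte specifications.

```python
from typing import Sequence

# Standard ATX chassis expose seven rear expansion-slot openings.
ATX_SLOT_OPENINGS = 7

def openings_needed(slot_positions: Sequence[int], card_width: int) -> int:
    """Return the highest-numbered opening (1 = top) that any installed card covers."""
    # A card of width w installed at position p covers openings p .. p + w - 1.
    return max(pos + card_width - 1 for pos in slot_positions)

# Assumption: four x16 slots with double-width spacing, the topmost at position 1.
X16_POSITIONS = [1, 3, 5, 7]

needed = openings_needed(X16_POSITIONS, card_width=2)
print(f"Four double-width cards need {needed} openings; "
      f"a typical ATX chassis has {ATX_SLOT_OPENINGS}.")
print("Fits a seven-opening chassis:", needed <= ATX_SLOT_OPENINGS)
```

With single-width cards (`card_width=1`), the same assumed layout needs only seven openings, which is why the eight-opening requirement mainly matters for GPU builds.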

Aside from the four PCIe 3.0 x16 slots, there is an open-ended PCIe 3.0 x8 slot. Because it is open-ended, it can take an additional x16 card running at x8 speed, provided the devices in the neighboring slots are single-width.

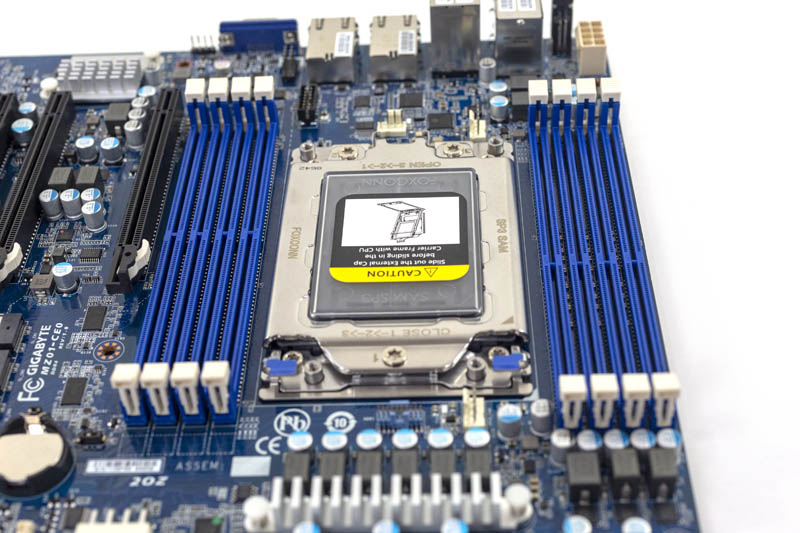

Many of the early AMD EPYC motherboards placed their slots so that larger PCIe x16 devices, such as GPUs, were blocked by the DIMM slots. Our Gigabyte MZ31-AR0 review shows an example. Gigabyte’s engineering team solved that problem with the MZ01-CE0.

For onboard NVMe storage, there is an M.2 slot that (thankfully) accepts drives up to M.2 22110 (110mm). That means one can use enterprise NAND SSDs with power-loss protection (PLP), or even the Intel Optane 905P 380GB M.2 NVMe SSD.

Pausing for a second to take a tally, here is how the Gigabyte MZ01-CE0, an ATX motherboard, allocates the 128 high-speed I/O lanes available from a single-socket AMD EPYC:

- Four PCIe 3.0 x16 slots: 64 lanes

- One PCIe 3.0 x8 slot: 8 lanes

- M.2 slot (PCIe 3.0 x4): 4 lanes

- 16-port SATA: 16 lanes

- Intel X550: 8 lanes

The net result is that 100 of those 128 lanes are exposed for I/O before even counting the BMC or other onboard functionality. For an ATX motherboard, that is an enormous amount of exposed I/O.
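The tally above is simple arithmetic, and a short Python sketch makes it easy to rerun if you are planning a build around a different lane split. The per-device counts are taken from the list above; the `lane_budget` dictionary and its labels are our own illustration, not a Gigabyte tool.

```python
# High-speed I/O lanes on a single-socket AMD EPYC 7000 series CPU.
EPYC_HSIO_LANES = 128

# Lane consumers on the MZ01-CE0, per the tally above.
lane_budget = {
    "PCIe 3.0 x16 slots (4)": 4 * 16,
    "PCIe 3.0 x8 slot": 8,
    "M.2 slot (PCIe 3.0 x4)": 4,
    "SATA (16 ports)": 16,
    "Intel X550 10GbE": 8,
}

exposed = sum(lane_budget.values())
not_itemized = EPYC_HSIO_LANES - exposed

# Print a simple two-column table of device and lane count.
for device, lanes in lane_budget.items():
    print(f"{device:<26}{lanes:>4}")
print(f"{'Total itemized':<26}{exposed:>4}")
print(f"{'Not itemized above':<26}{not_itemized:>4}")
```

Running it reports 100 itemized lanes, leaving 28 of the 128 outside the list; since the breakdown above does not assign those, the sketch does not either.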

From a CPU and RAM perspective, there is a single SP3 CPU socket and eight DIMM slots, half of the 16 DIMMs an AMD EPYC CPU supports at two DIMMs per channel.

For AMD EPYC, there are “P” series parts such as the AMD EPYC 7351P (16 cores), AMD EPYC 7401P (24 cores), and AMD EPYC 7551P (32 cores) that AMD prices aggressively, making them exceptional values. You can read about AMD’s pricing methodology in our piece: AMD EPYC’s Extraordinarily Aggressive Single Socket Mainstream Pricing. Beyond these P series parts, there is the AMD EPYC 7371, a high-frequency part that, at the time of this writing, is faster than what Intel offers even at twice the price. If you are building a workstation like the Ultra EPYC, the EPYC 7371 is our recommendation for its higher clock speeds.

Briefly, we wanted to mention the right-angle connectors. As you may have noticed, the four SlimSAS (SATA) headers, the USB 3.0 front panel header, the front panel switch and LED header, and the ATX and auxiliary power connectors are all mounted parallel to the motherboard’s surface. This design lets four large GPUs be installed without physically blocking these connectors. It also involves a trade-off.

With four large GPUs installed, this is a challenging motherboard to service. For example, four of the Gigabyte MZ01-CE0’s seven 4-pin PWM fan headers sit along the bottom edge of the motherboard. A double-width GPU in the bottom slot covers these, as well as the USB 3.0 front panel header. A double-width GPU in the second-to-bottom slot gets in the way of servicing the M.2 NVMe slot along with the SlimSAS and front panel headers. The pattern repeats for all four GPU positions: the right-angle connectors stay usable, but each card sits over some of them and makes them hard to reach.

Gigabyte had to choose between keeping every connector usable with four GPUs installed, at the cost of easier servicing, or letting the GPUs block connectors outright. We wholeheartedly agree with the decision to sacrifice serviceability for connectivity, but we wanted to make our readers aware of it. If you are using single-width add-in cards, such as 100GbE NICs, instead of four GPUs, this is less of a concern.

Next, we are going to address whether or not the Gigabyte MZ01-CE0 is a workstation motherboard. We are then going to look at the topology before getting into management and our final words.

Oooooh, workstation <3

I hope they make PCIe 4.0 version too!

The only issues I see are the 4th GPU blocking the fan headers located at the bottom, and only 2x USB root.

Could you cover fan speed control options? Is there a way to make a user-defined temperature vs. PWM% profile? Or some other way to keep it bedroom-silent at idle and temperatures down at full load?

ServeTheHome, there is a real shortage of graphics workstation benchmarking being done on Epyc 1P/2P systems. Could someone fill the void and do some more Blender 3D CPU rendering benchmarks on both 1P and 2P Epyc systems?

I’m getting tired of there being only Threadripper-related graphics workstation content available and very little Epyc graphics workload benchmarking. AMD’s Epyc 7371 is a much higher-clocked part just released at the end of 2018, and I’d love to see AMD introduce a similarly clocked 1P 32-core Epyc/Naples part. Epyc/Naples represents a much better cost/feature value than even AMD’s Threadripper platform when one considers the Epyc/Naples platform’s 128 PCIe lanes and 8 memory channels per socket.

But really, more folks are using Blender 3D than ever before, and the cost of Blender 3D is a no-brainer when paired with Epyc hardware options that are even better cost/feature deals than any consumer-branded processors and platforms.

I’ve been looking for this mobo since, say, November. Can’t seem to find it available for purchase [and I tried most of the Gigabyte links too].

I did, however, find “Gigabyte MZ31-AR0 – 1.0” [cough a*azon, n@w@gg, c.d.w., etc]. Near as I can tell, it’s very similar to the MZ01-CE0 … SFP+ ports and 16 DRAM slots vs. Base-T and 8 slots. So perhaps a better option.

This board is screaming as an AIO homelab style server board.

10Gb integrated, plus 1Gb ports for separate networking. Standard ATX form factor, aka drop it into any case you want. The PCIe connectivity gives you the possibility of at-home VDI-style GPU passthrough. 16 SATA ports seems to be the sweet spot for me personally, and drives for that connection type keep getting larger and more abundant. Plus the onboard M.2 for a cache SSD.

Throw in the most important part: iKVM management, à la Supermicro, for FREE.

Yeah, this just got added to the wish list with an Epyc 7371 and 128GB RAM. *Drool*

Looking forward to the next board review!

I prefer this one: ASRock Rack EPYCD8-2T

- ATX, 12” x 9.6”

- Single socket SP3 (LGA4094), supporting the AMD EPYC™ 7000 series processor family

- 8-channel DDR4 2667/2400/2133 RDIMM/LRDIMM support, 8x DIMM slots

- 8x SATA3 6.0 Gb/s (from 2x mini-SAS HD), 1x SATA DOM, and 2x M.2

- 2x 10G LAN via Intel X550

- 4x PCIe 3.0 x16, 3x PCIe 3.0 x8

- 2x OCuLink

“There are two rear USB 3.0 ports, and internally the USB 3.0 header’s orientation can create some tight fits.”

Maybe buy a 90° adapter for the 19-pin USB3 header; they cost about $5 on eBay for a 5-pack.

“We would have liked to see another M.2 slot. However, there simply is not room.”

I’d challenge that. Mini-ITX mobos have resorted to stacking two M.2 slots on top of each other; this would have been possible here too.

Nice, but waiting for the MZ32-AR0 review: https://forums.servethehome.com/index.php?threads/gigabyte-mz32-ar0.22533/

Of course it won’t be as relevant if AMD jumps to 5.

How do the PCIe lanes work with Epyc? I never asked, but are all 32/64 attached to one node, or does each node have 16/32? I think that’s kinda important when you want to do something with multiple graphics cards.

In a 1P system, Epyc has all 128 PCIe lanes available.

For 2P, 64 lanes on each socket are used for the interconnect, so the remaining 2×64 = 128 lanes are available for peripherals in 2P too.
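The 1P versus 2P lane arithmetic in this exchange can be checked with a small Python sketch. It assumes, as the reply states, 128 high-speed lanes per socket and 64 lanes per socket given to the socket-to-socket link in a 2P system; `peripheral_lanes` is a hypothetical helper written for illustration.

```python
# High-speed I/O lanes per EPYC 7000 series socket.
LANES_PER_SOCKET = 128
# Lanes per socket used for the socket-to-socket interconnect in a 2P system.
INTERCONNECT_LANES_PER_SOCKET = 64

def peripheral_lanes(sockets: int) -> int:
    """Return the lanes left for peripherals in a 1P or 2P EPYC 7000 system."""
    if sockets == 1:
        # A single socket needs no inter-socket link, so every lane is usable.
        return LANES_PER_SOCKET
    if sockets == 2:
        # Each socket gives 64 lanes to the link and keeps the other 64.
        return sockets * (LANES_PER_SOCKET - INTERCONNECT_LANES_PER_SOCKET)
    raise ValueError("EPYC 7000 series systems have one or two sockets")

for n in (1, 2):
    print(f"{n}P: {peripheral_lanes(n)} peripheral lanes")
```

Both configurations come out to 128 peripheral lanes, which matches the 2×64 = 128 figure above.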