At Computex 2023, we saw the Gigabyte H263-V11. This is the company’s NVIDIA Grace Hopper platform that fits four nodes, each pairing a 72-core Arm CPU with an NVIDIA H100 GPU, into a single 2U chassis.

Gigabyte H263-V11: a 2U4N NVIDIA Grace Hopper Platform

The front of the 2U 4-node server has several drive bays. There are a total of four 2.5″ PCIe Gen5 NVMe drive bays per node.

On the rear of the server, we have a unique configuration. This may look a bit upside down since the photo was taken in a mirror showing this part of a hanging server. Each node gets dual 10GBase-T, a management NIC, slots for networking, and two USB ports. Perhaps the most striking part of the server is that it has three power supplies, not just the two that we typically see on 2U4N servers.

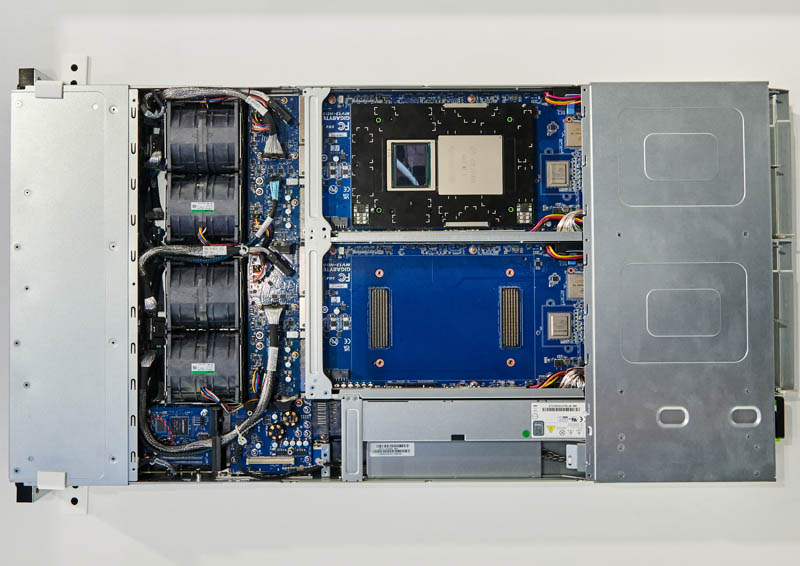

In the middle, we have a fairly standard 2U4N Gigabyte layout that we have seen for generations.

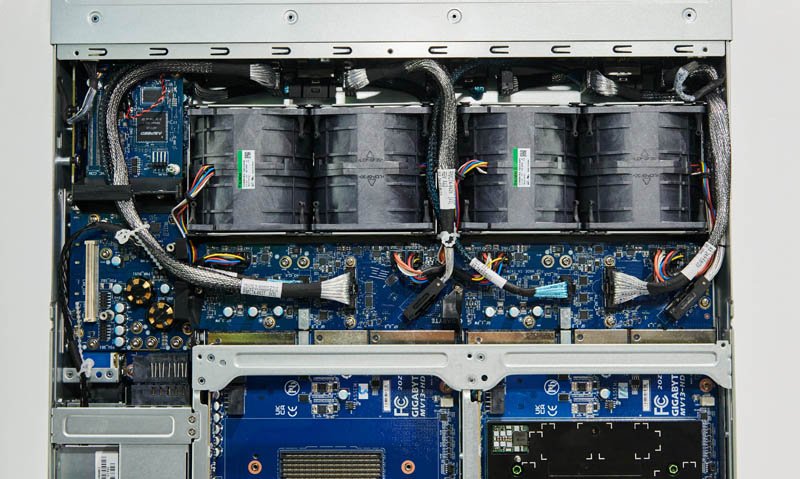

In the middle, we get a chassis management controller (CMC), a shared management controller for the system. There are also four large fan modules and a midplane.

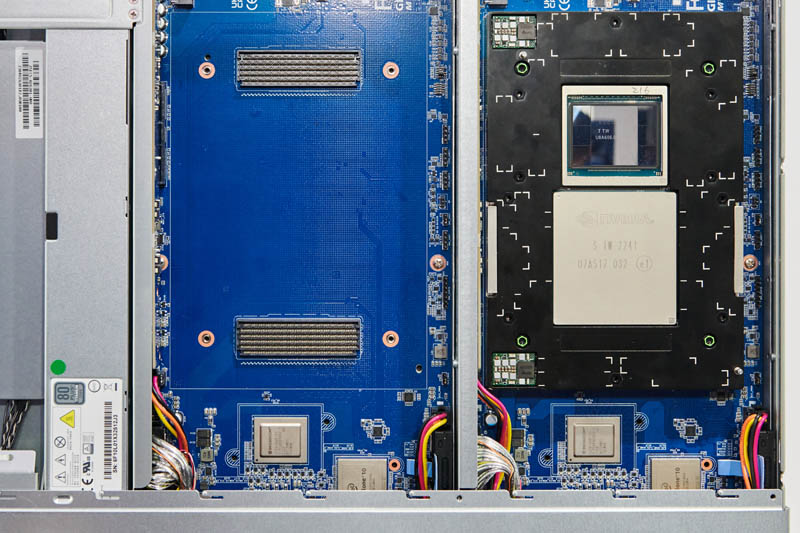

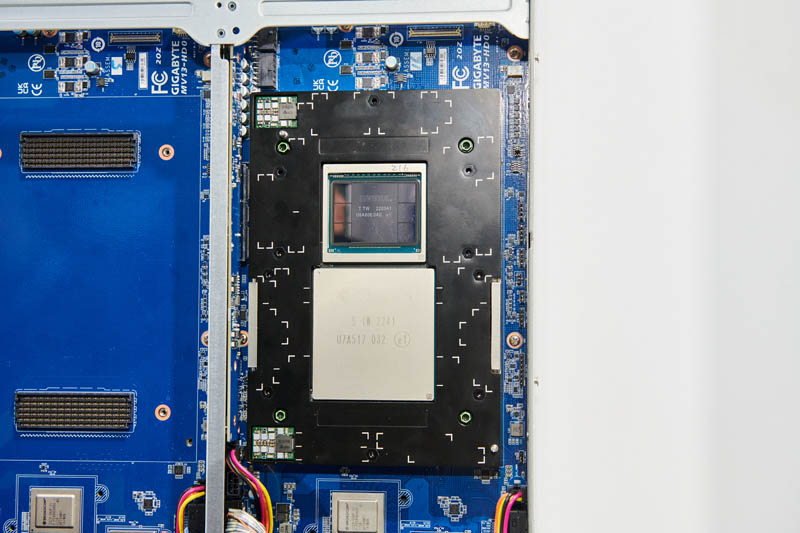

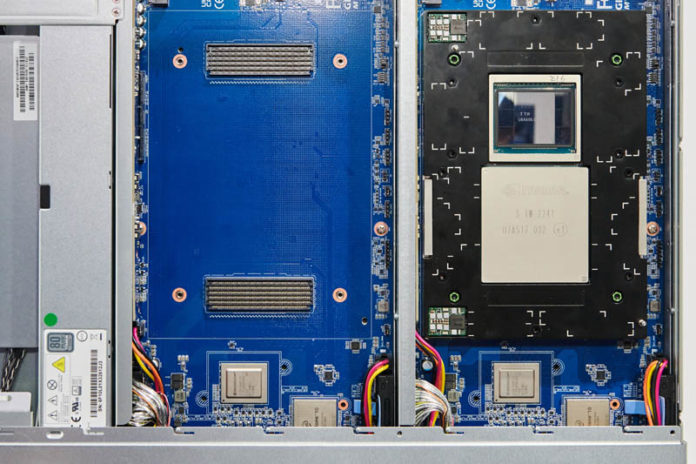

The NVIDIA Grace Hopper combines an Arm CPU and a GPU on a single module that is like a large SXM module.

Here, the NVIDIA Grace Hopper is installed without its heatsink. Since this is Grace Hopper, not Grace-Grace, we get up to 72x Arm Neoverse V2 cores, 512GB of memory, and the H100 GPU.

Something that we noticed about this design is that below the module connectors, there is a Broadcom PEX89048A0 PCIe switch chip as well as an Intel Cyclone 10 FPGA.

Overall, there is a lot going on in this node.
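To put the per-node figures into chassis terms, here is a minimal sketch that multiplies the numbers quoted above by the four nodes in the 2U chassis. The `NodeSpec` class and `chassis_totals` function are hypothetical names used only for illustration, and the core and memory figures are the "up to" values mentioned in this article rather than a confirmed configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node figures as quoted in the article ("up to" values)."""
    cpu_cores: int = 72        # Arm Neoverse V2 cores per Grace Hopper module
    memory_gb: int = 512       # memory figure quoted for the module
    gpus: int = 1              # one H100 GPU per module
    nvme_bays: int = 4         # 2.5" PCIe Gen5 NVMe bays per node
    ports_10gbase_t: int = 2   # dual 10GBase-T per node


def chassis_totals(node: NodeSpec, nodes: int = 4) -> dict[str, int]:
    """Multiply each per-node figure by the number of nodes in the chassis."""
    return {
        "cpu_cores": node.cpu_cores * nodes,
        "memory_gb": node.memory_gb * nodes,
        "gpus": node.gpus * nodes,
        "nvme_bays": node.nvme_bays * nodes,
        "ports_10gbase_t": node.ports_10gbase_t * nodes,
    }


totals = chassis_totals(NodeSpec())
for name, value in totals.items():
    print(f"{name}: {value}")
```

Under those assumptions, a fully populated 2U chassis works out to 288 cores, four GPUs, and 16 NVMe bays.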

Final Words

The NVIDIA Grace Hopper is an interesting solution. Generally, today’s AI systems tend to have a higher GPU to CPU ratio. Grace Hopper instead takes a fairly mid-range CPU, with a lot of memory bandwidth but lower memory capacity, and connects it directly to each GPU. In some ways, the design is similar to some of the AMD MI300 designs we will see next week (and have already seen). There are still a lot of differences between how NVIDIA and AMD are approaching this market. Still, it was great to see systems with the Grace Hopper modules. Given the price of these modules, we would expect 90%+ of the cost of a 2U 4-node machine like this to come from NVIDIA’s parts.

If I were just a couple of years older, I might have had the chance to attend a lecture given by Dr. Grace Hopper at the University of Maryland (my first boss did attend a lecture given by Admiral Hopper at said university).

And somewhat ironically, while working on my Computer Science degree, a few other CompSci students and I got the department to teach a course on COBOL.

How do you cool those huge modules with such a high TDP? Is liquid cooling strong enough?

On the midplane module, just above the PSUs, it almost looks like there is an OCP 2.0 connector.