Gigabyte H261-Z61 Storage Performance

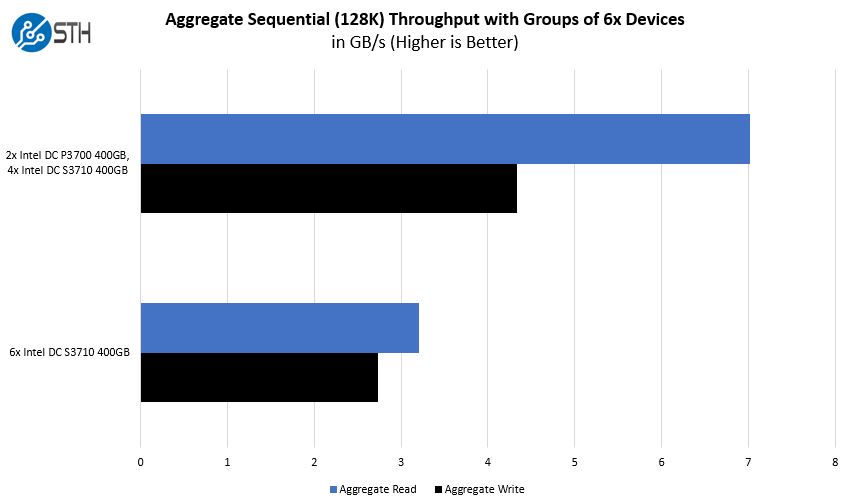

Storage in the Gigabyte H261-Z61 is differentiated by the two NVMe bays in each chassis. We wanted to compare high-quality SSDs and HDDs to give some sense of what one can get out of each node.

One can see that by replacing the two SATA SSDs we used on the H261-Z60 with two NVMe SSDs on the Gigabyte H261-Z61, even at the same capacity, we get enormous gains. Newer NVMe SSDs can be much faster than the Intel DC P3700s that we are using here, but many of today’s capacity-optimized drives are slightly slower.

We generally prefer more NVMe storage these days as SATA is a declining standard while NVMe is taking SATA’s share. There are certainly deployment scenarios for both, but this is why we prefer the Gigabyte H261-Z61.

Compute Performance and Power Baselines

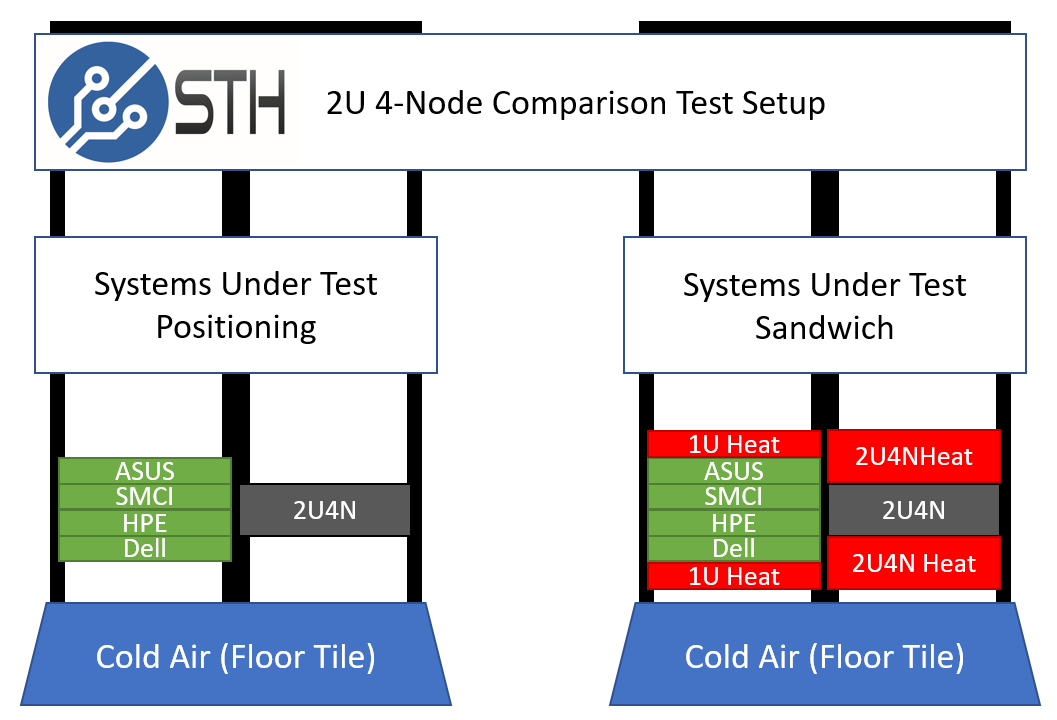

One of the biggest areas where manufacturers can differentiate their 2U4N offerings is cooling capacity. As modern processors heat up, they lower clock speeds, which decreases performance. Fans spin faster to cool them, which increases power consumption and affects power supply efficiency.

STH goes to extraordinary lengths to test 2U4N servers in a real-world scenario. You can see our methodology here: How We Test 2U 4-Node System Power Consumption.

Since this was our second AMD EPYC test, we used four 1U servers from different vendors to compare power consumption and performance. The STH “sandwich” ensures that each system is heated on the top and bottom, as it would be in a dense deployment.

This type of configuration has an enormous impact on some systems. All 2U4N systems must be tested in a similar manner or else performance and power consumption results are borderline useless.

Compute Performance to Baseline

We loaded the Gigabyte H261-Z61 nodes with 256 cores and 512 threads worth of AMD EPYC CPUs. Each node also had a 10GbE OCP NIC and a 100GbE PCIe x16 NIC. We then ran one of our favorite workloads on all four nodes simultaneously for 1400 runs. We threw out the first 100 runs worth of data and considered the 101st run to be sufficiently heat soaked. The remaining runs keep the machine warm until all systems have completed their runs. We also used the same CPUs in both sets of test systems to remove silicon differences from the comparison.
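The warm-up handling described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the actual STH tooling: the function names are hypothetical, the inputs are assumed to be per-run completion times in seconds (lower is better), and averaging all post-warm-up runs is our assumption, since the text only says the 101st run is treated as heat soaked.

```python
from statistics import mean

WARMUP_RUNS = 100  # from the text: the first 100 runs are thrown out


def heat_soaked(run_times, warmup=WARMUP_RUNS):
    """Return only the runs recorded after the warm-up period."""
    if len(run_times) <= warmup:
        raise ValueError("need more runs than the warm-up count")
    return run_times[warmup:]


def percent_of_baseline(node_times, baseline_times, warmup=WARMUP_RUNS):
    """Performance of a 2U4N node relative to a 1U baseline, in percent.

    Assumption: inputs are completion times, so a shorter time means
    higher performance and the ratio is baseline / node.
    """
    node = mean(heat_soaked(node_times, warmup))
    base = mean(heat_soaked(baseline_times, warmup))
    return 100.0 * base / node
```

Calling `percent_of_baseline(node_times, baseline_times)` with two lists of 1400 run times would yield a value near 100 when the 2U4N node keeps pace with the 1U baseline, and a lower value when it throttles.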

Note: The Y-axis does not start at 0. If we used a 0-101% scale, you would not be able to see the deltas as they are too small.

We found the Gigabyte H261-Z61 nodes are able to cool CPUs essentially on par with their 1U counterparts. That is a testament to how well the system is designed, as performance remains what we would expect from 1U dual-processor servers.

Where are the Gigabyte H261-Z61 and H261-Z60 available? I’m having difficulty sourcing them in the US.

Brandon, Gigabyte has several US VARs and distributors. If you still cannot find one, shoot me an e-mail at patrick@ this domain and I can forward it on.

Can the management controller be configured to expose a low traffic management network to the host operating system?