Gigabyte H261-Z60 Management Overview

Looking at Gigabyte H261-Z60 management, we see a departure from the Avocent-based management firmware solution. Instead, Gigabyte is using the HTML5-based MegaRAC SP-X firmware with a more modern UI. Our Gigabyte H261-Z60 came with the Central Management Controller (CMC), which we will discuss shortly. First, we are going to look at individual node management.

Gigabyte H261-Z60 Node Management

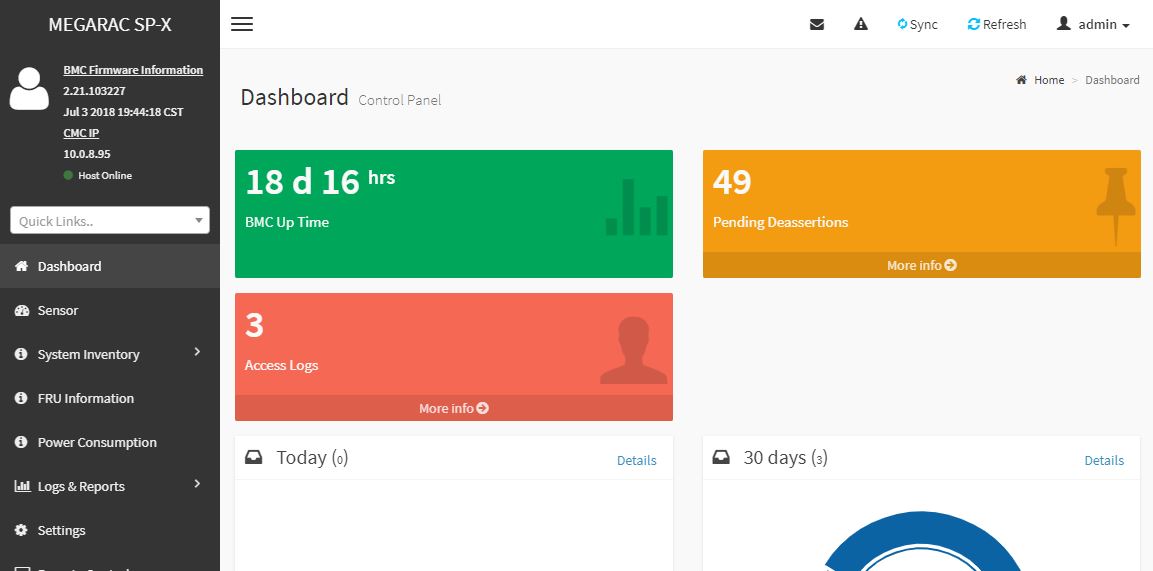

As one can see, the Gigabyte H261-Z60 utilizes a newer MegaRAC SP-X interface. This interface is a more modern HTML5 UI that performs more like today’s web pages and less like pages from a decade ago. We like this change. Here is the dashboard. One item we will quickly note is that the CMC IP address appears in the upper left corner, which is helpful for navigating through the chassis.

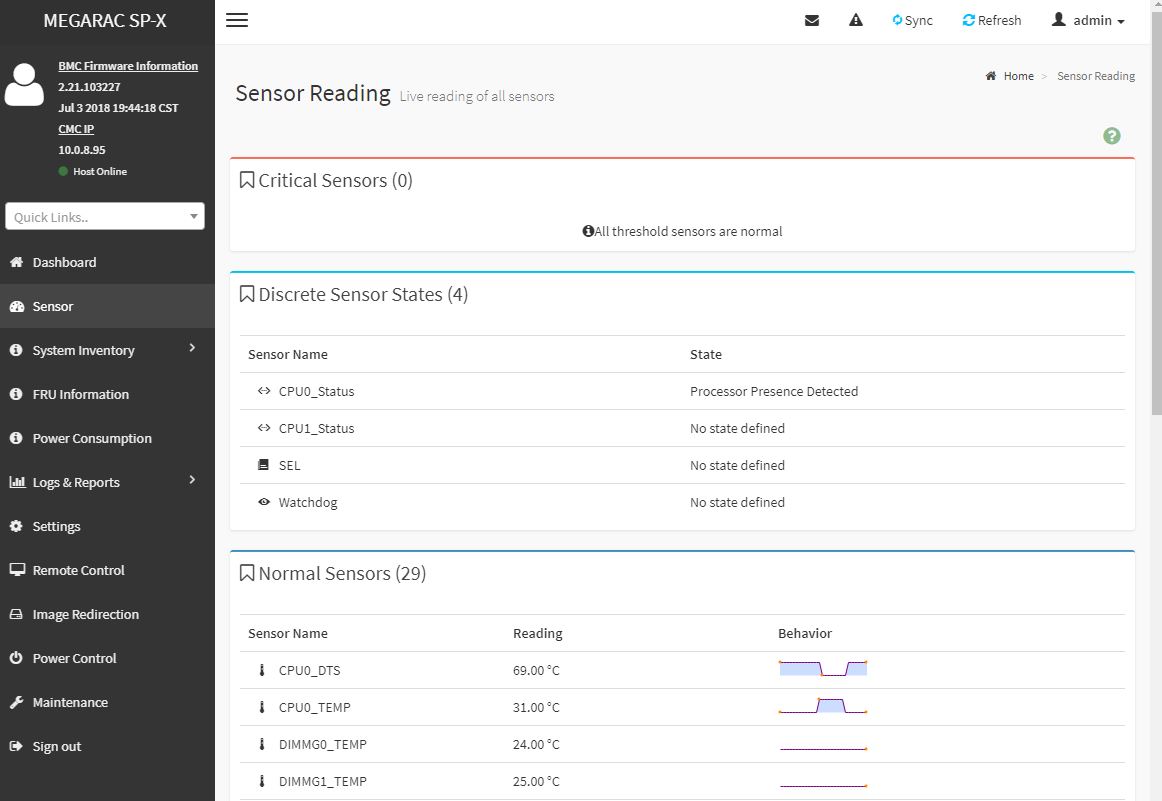

You will find standard BMC IPMI management features here, such as the ability to monitor sensors. Here is an example from one of the machines.

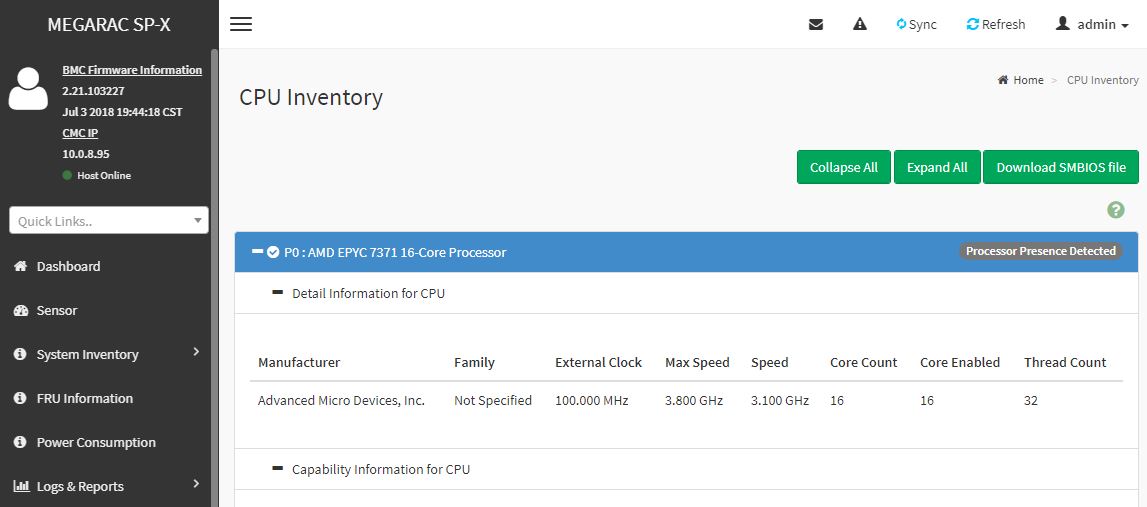
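For readers who prefer to pull sensor data from a script rather than the web UI, here is a minimal sketch. It assumes the node BMC exposes standard IPMI over LAN and that readings are fetched with a tool such as `ipmitool sensor`, which prints pipe-delimited rows. The sample rows, sensor names, and the `parse_sensor_table` helper are illustrative assumptions and not output from our test system.

```python
from typing import List, NamedTuple, Optional


class SensorReading(NamedTuple):
    name: str
    value: Optional[float]  # None when the reading is not numeric (e.g. "na")
    unit: str
    status: str


def parse_sensor_table(text: str) -> List[SensorReading]:
    """Parse pipe-delimited sensor rows into structured readings."""
    readings = []
    for line in text.splitlines():
        fields = [f.strip() for f in line.split("|")]
        if len(fields) < 4 or not fields[0]:
            continue  # skip blank or malformed rows
        try:
            value = float(fields[1])
        except ValueError:
            value = None  # unavailable or discrete sensors have no numeric value
        readings.append(SensorReading(fields[0], value, fields[2], fields[3]))
    return readings


# Illustrative sample only (hypothetical sensor names and values).
SAMPLE = """\
CPU0_TEMP | 45.000   | degrees C | ok | na | na       | na       | 85.000 | 90.000 | na
SYS_FAN1  | 9300.000 | RPM       | ok | na | 1200.000 | 1500.000 | na     | na     | na
P12V      | na       | Volts     | na | na | na       | na       | na     | na     | na
"""

if __name__ == "__main__":
    for reading in parse_sensor_table(SAMPLE):
        print(reading)
```

Keeping the parser separate from whatever fetches the text makes it easy to test against saved output before pointing it at a live BMC.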

Other functions, such as CPU inventory, are available. One can see this particular CPU is a new AMD EPYC 7371 high-frequency Naples part that will be available next quarter. We already tested the Gigabyte H261-Z60 with these CPUs.

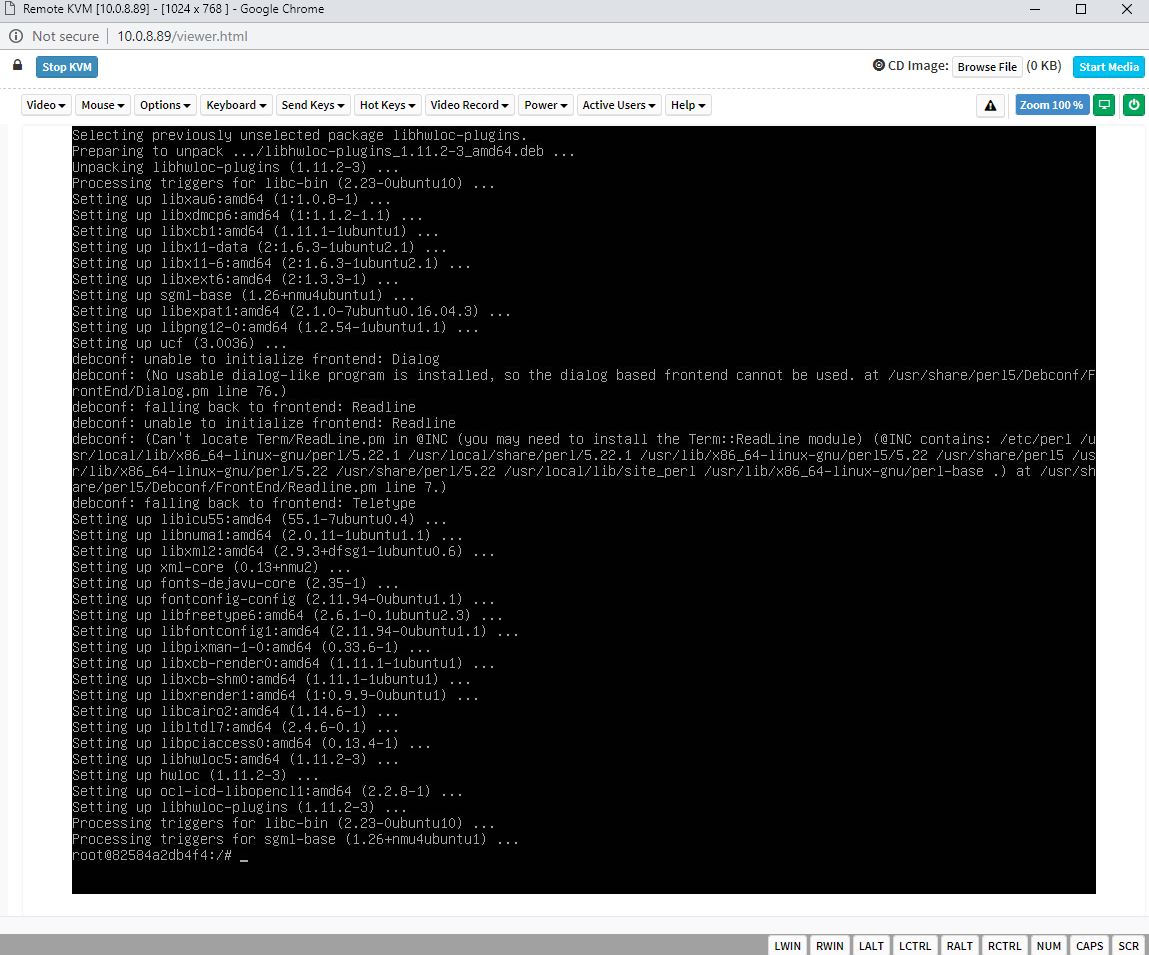

One of the other features is the new HTML5 iKVM for remote management. We think this is a great solution. Some other vendors have implemented iKVM HTML5 clients but did not implement virtual media support in them at the outset. Gigabyte has this functionality and power control support all from a single browser console.

We want to emphasize that this is a key differentiation point for Gigabyte. Many large system vendors such as Dell EMC, HPE, and Lenovo charge for iKVM functionality. The feature is an essential tool for remote system administration these days. Gigabyte’s inclusion of the functionality as a standard feature is great for customers who have one less license to worry about.

While each node’s BMC has features for LDAP, AD, RADIUS authentication integration, logging, remote firmware update tools, and similar industry standard functions, what sets the H261-Z60 apart is the CMC which reduces cabling needs in the data center.

Gigabyte H261-Z60 CMC: A Big Feature

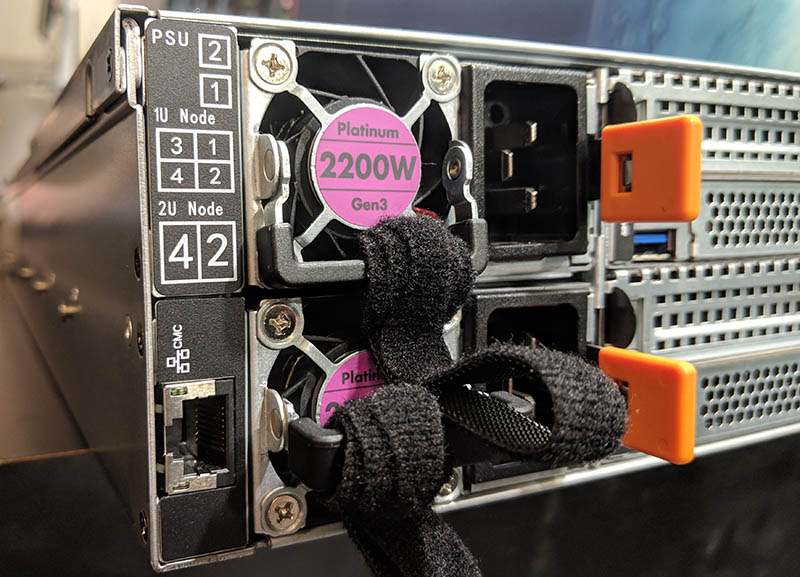

One of the newer features with this generation is the Gigabyte H261-Z60 CMC, or Central Management Controller. It serves two purposes. First, it enables some chassis-level features. Second, it allows a single RJ-45 cable to reach all four IPMI management ports in the chassis, which greatly reduces cabling. With 2U 4-node chassis, cabling becomes a big deal. Removing cables means better cooling efficiency and less spent on cabling, installation, and switch ports. The CMC is an option we hope Gigabyte makes standard on 2U4N designs going forward.
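To put the cabling reduction in numbers, here is a rough sketch of the arithmetic. It assumes a hypothetical deployment where a given amount of rack space is filled entirely with 2U4N chassis, and it counts only management cables, not data networking or power. The `management_cables` function and the 40U figure are our own illustration.

```python
def management_cables(rack_units: int, chassis_units: int = 2,
                      nodes_per_chassis: int = 4) -> dict:
    """Compare management cable counts with and without a shared CMC port."""
    chassis = rack_units // chassis_units
    nodes = chassis * nodes_per_chassis
    return {
        "chassis": chassis,
        "nodes": nodes,
        "cables_one_per_node": nodes,   # one cable per node BMC port
        "cables_with_cmc": chassis,     # one cable per chassis via the CMC
        "cables_saved": nodes - chassis,
    }


if __name__ == "__main__":
    # Hypothetical example: 40U of rack space filled with 2U4N chassis.
    print(management_cables(40))
    # {'chassis': 20, 'nodes': 80, 'cables_one_per_node': 80,
    #  'cables_with_cmc': 20, 'cables_saved': 60}
```

Each saved cable is also a saved management switch port, which is where much of the cost benefit comes from.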

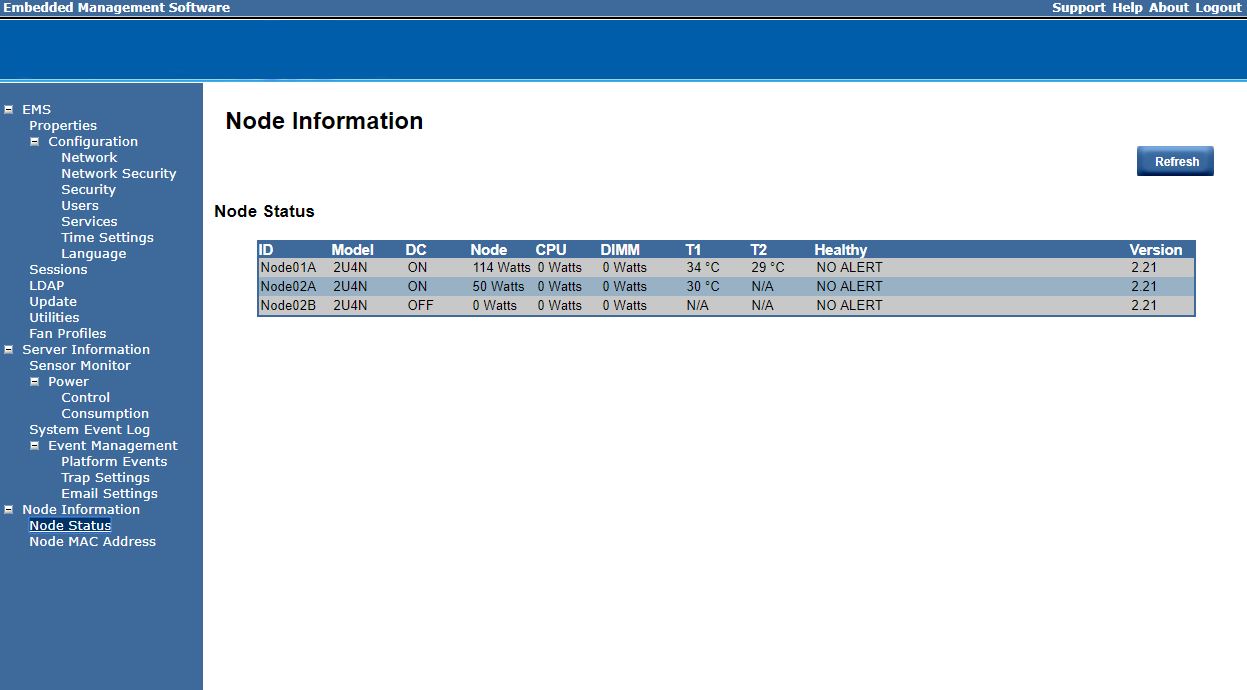

The CMC is run by an ASPEED BMC. This still uses the more traditional Gigabyte management interface, but it has some great features. Here is an example we set up in the lab with a dual CPU node, a single CPU node, a powered off node, and a node removed. An administrator can see this information remotely through one interface per chassis.

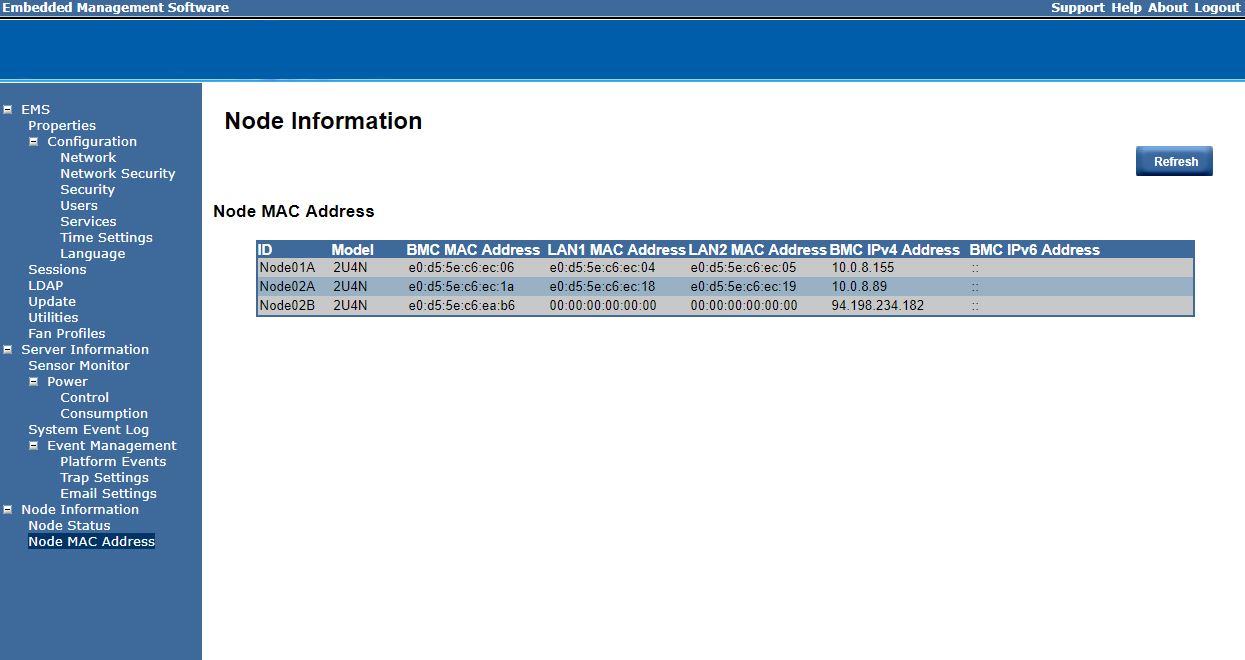

The “Node MAC Address” page shows information on each node that is physically plugged in. Using the four test cases shown above, we can see that if the node is powered on, we can get the LAN MAC addresses as well as the BMC address and its IPv4 and IPv6 addresses. In a cluster of 2U4N machines, this is important when you need to troubleshoot and find a node.
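As an illustration of why this per-chassis mapping is useful, the sketch below builds a lookup from LAN MAC address to chassis and slot. It assumes the inventory has already been collected from each CMC by some means, such as manual export. The `NodeRecord` structure, the addresses, and the function names are hypothetical and do not reflect a Gigabyte API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class NodeRecord:
    slot: int                 # node position in the chassis (1-4)
    lan_macs: List[str]       # LAN MAC addresses reported for the node
    bmc_ip: Optional[str]     # None if the node BMC address is unknown


def normalize_mac(mac: str) -> str:
    """Lower-case the MAC and use ':' separators for consistent matching."""
    return mac.replace("-", ":").lower()


def build_mac_index(
    chassis: Dict[str, List[NodeRecord]]
) -> Dict[str, Tuple[str, int]]:
    """Map each LAN MAC to (CMC IP, slot) so a node can be located quickly."""
    index: Dict[str, Tuple[str, int]] = {}
    for cmc_ip, nodes in chassis.items():
        for node in nodes:
            for mac in node.lan_macs:
                index[normalize_mac(mac)] = (cmc_ip, node.slot)
    return index


# Hypothetical inventory using documentation-range IPs and made-up MACs.
INVENTORY = {
    "192.0.2.10": [
        NodeRecord(1, ["02:00:00:AA:00:01", "02:00:00:AA:00:02"], "192.0.2.11"),
        NodeRecord(3, ["02-00-00-AA-00-05"], "192.0.2.13"),
    ],
    "192.0.2.20": [
        NodeRecord(2, ["02:00:00:BB:00:03"], None),
    ],
}

if __name__ == "__main__":
    index = build_mac_index(INVENTORY)
    print(index.get(normalize_mac("02:00:00:aa:00:05")))  # ('192.0.2.10', 3)
```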

There are some aggregated sensor data points shared with the CMC for remote monitoring.

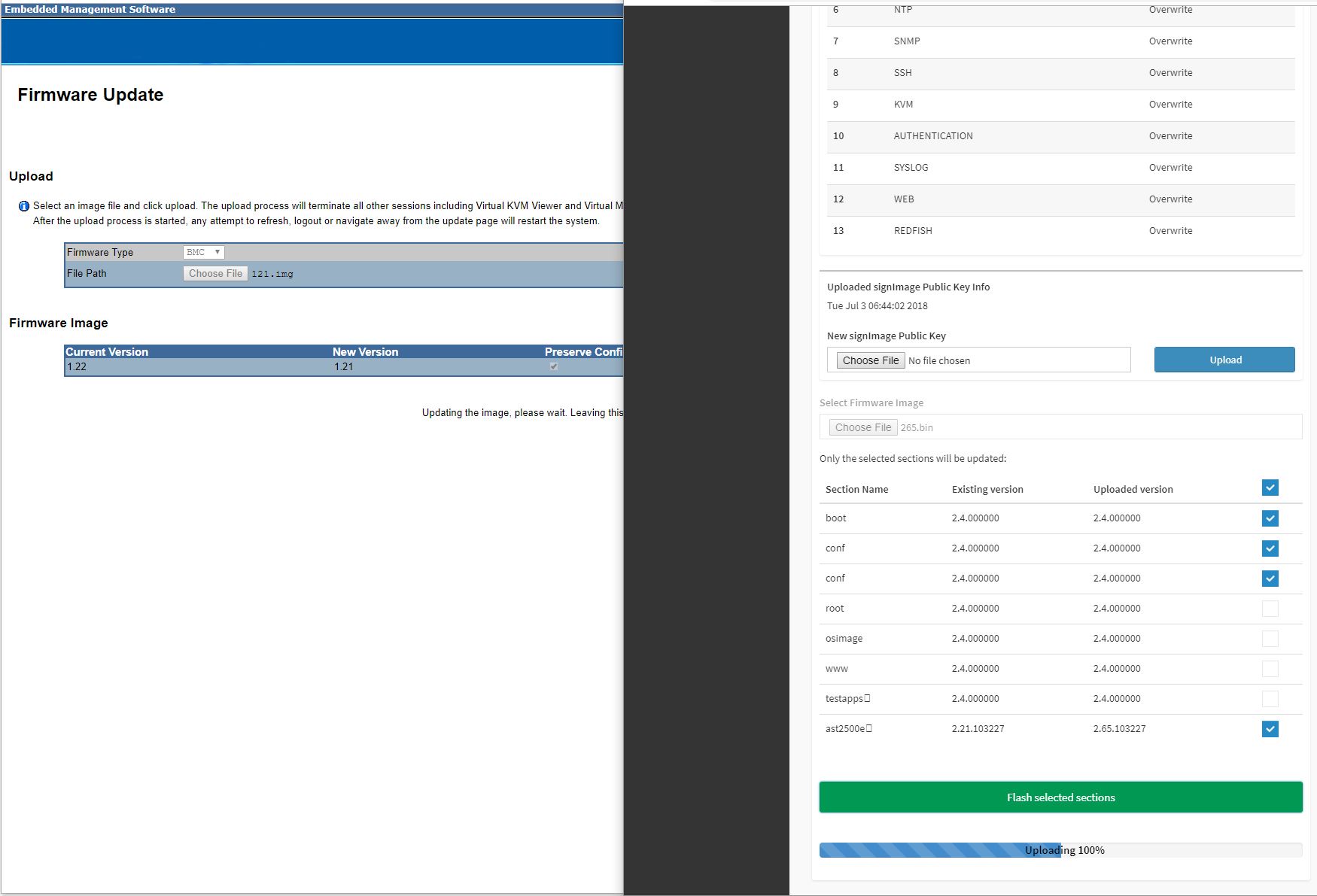

You will have to use separate firmware updates for the CMC versus the nodes. That also means there is another BMC to secure and keep updated, and the functionality is a bit different between the nodes and the CMC.

We like the chassis power figures, the ability to turn the entire chassis off, and the assistance for tying nodes to chassis for inventory purposes. Realistically, the “killer” feature of the CMC is using fewer cables to wire everything. Consolidating four management cables into one per chassis helps mitigate the 2U4N cabling challenge.

Next, we are going to look at performance before moving to power consumption and our final words.

That’s a killer test methodology. Another great STH review.

Great STH review!

One thing, though: how about linking the graphics to full-size image files? It’s really hard to read text inside these images…

Monster system. I can’t wait to hear more about the EPYC 7371s.

I can’t wait to see these with Rome. I wish there was more NVMe though

My old Dell C6105 burned in a fire last May, and I hadn’t fired it up for a year or more before that, but I recall using a single patch cable to access the BMC functionality on all 4 nodes. There may be critical differences, but that ancient 2U4N box certainly provided single-cable access to all 4 nodes.

Other than the benefits of HTML5 and remote media, what’s the standout benefit of the new CMC?