Gigabyte H261-Z60 Node Topology

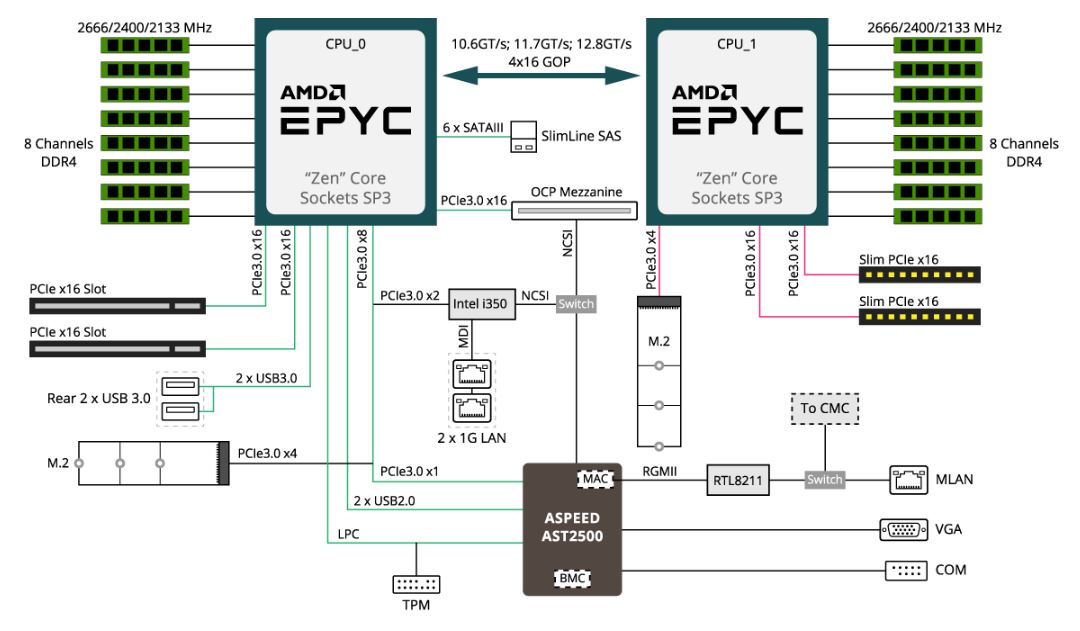

One of the more interesting aspects of the Gigabyte H261-Z60 is how AMD EPYC provides a differentiated experience versus Intel Xeon Scalable in 2U4N. The Gigabyte H261-Z60 shows why an AMD EPYC 2U4N solution is perfectly viable using single CPU configurations, something that Intel cannot match. To start understanding why, here is the official motherboard block diagram to frame our discussion:

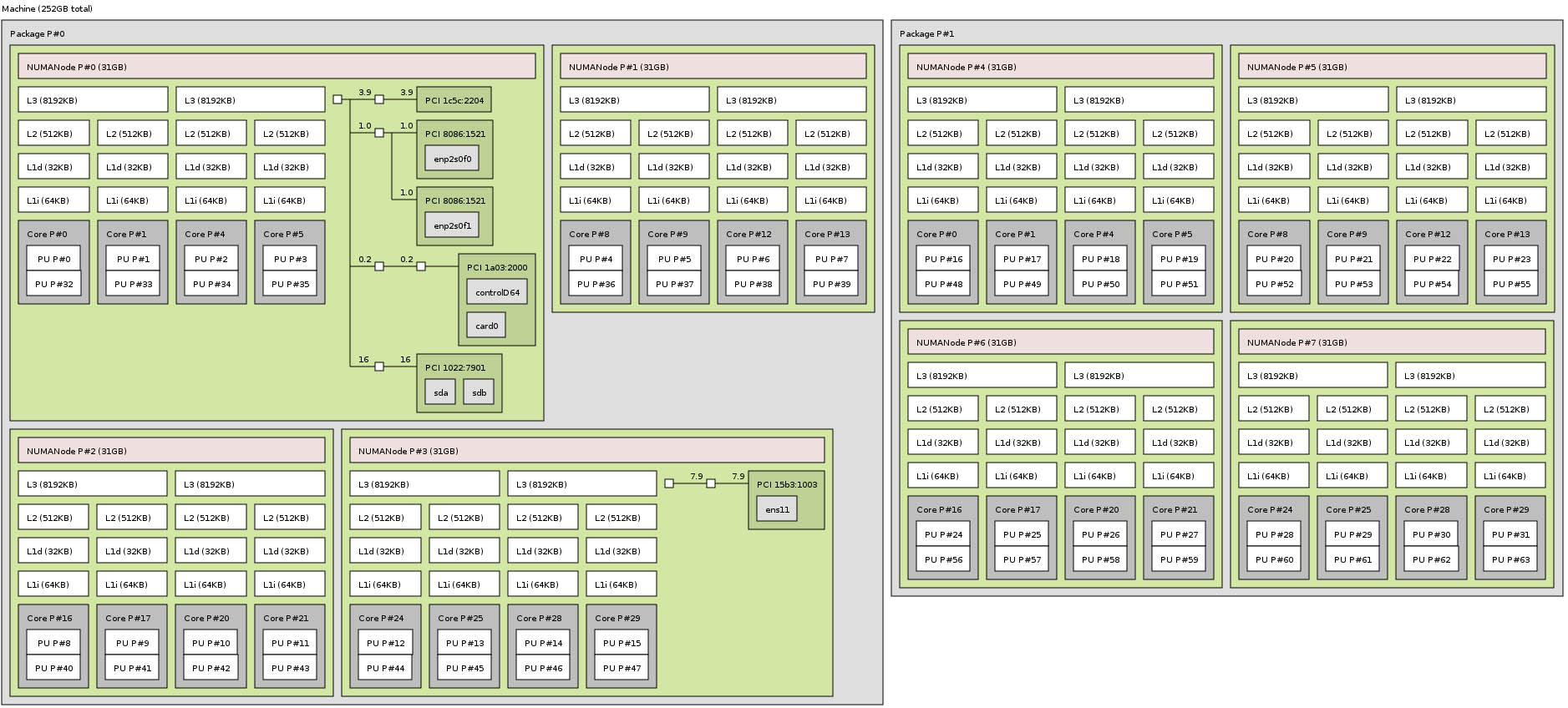

To illustrate why this is important, here is a dual processor node. As one can see, all devices are attached to the first CPU. A second M.2 device would be attached to the second processor, but here, all I/O is attached to a single CPU.

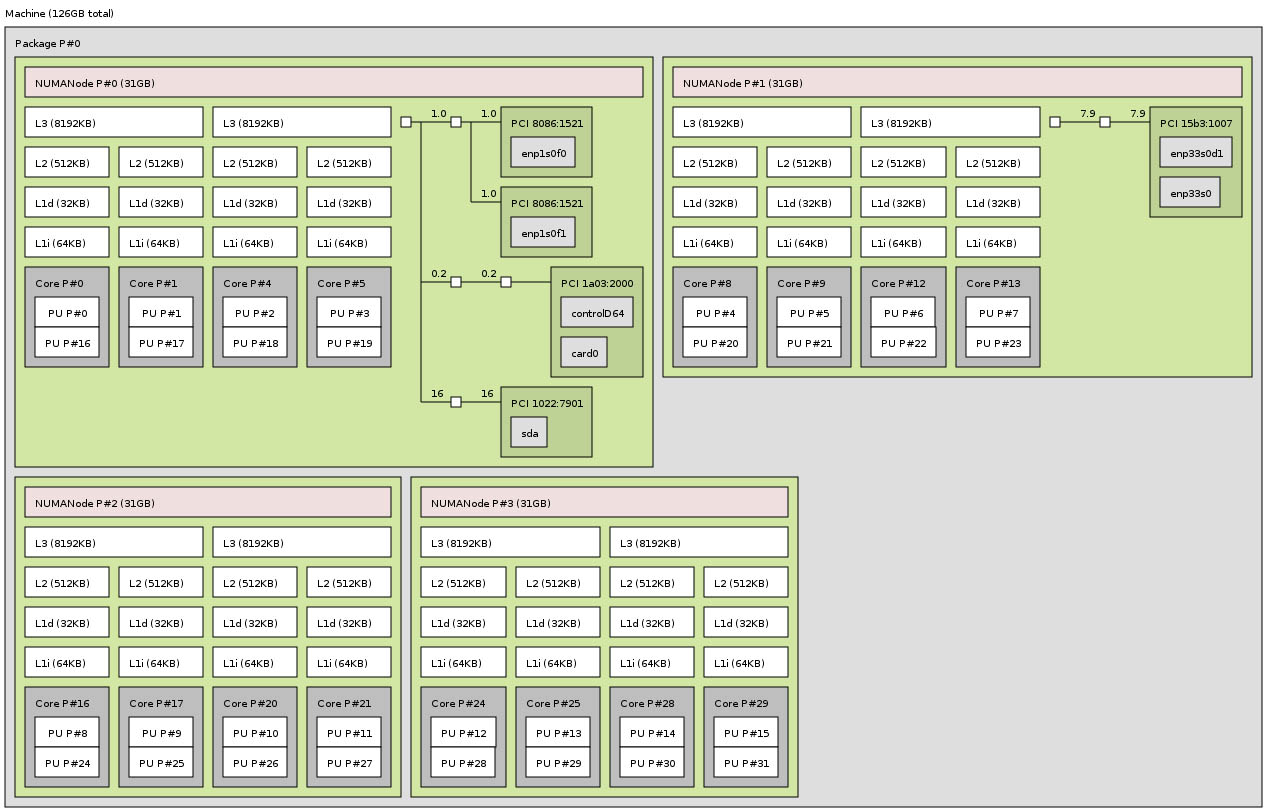

For AMD EPYC, this is important since it means that one can use all storage and expansion options, save for the second M.2 slot, with a single CPU instead of having to use dual CPUs. Here is a single CPU example with a Mellanox NIC in the top left PCIe x16 slot.

This topology is important for AMD EPYC since it means that one can effectively use “P” series CPUs with this platform. Instead of two 16 core sockets per node, one can use a single AMD EPYC 7551P with 32 cores, saving money on the initial server hardware and halving per-socket VMware ESXi license costs. In a 2U4N chassis, those VMware savings can be tens of thousands of dollars per chassis.
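To make the licensing arithmetic concrete, here is a minimal sketch of the socket count per chassis. The function names are ours, and the price per socket is a purely hypothetical placeholder rather than a VMware quote, so substitute your own licensing and support figures.

```python
# Sketch of per-socket licensing in a 2U4N chassis.
# All names are illustrative; the price is a hypothetical placeholder.

NODES_PER_CHASSIS = 4  # 2U4N: four nodes in one 2U chassis


def sockets_per_chassis(cpus_per_node: int, nodes: int = NODES_PER_CHASSIS) -> int:
    """Total populated CPU sockets across all nodes in the chassis."""
    return cpus_per_node * nodes


def license_savings(price_per_socket: float) -> float:
    """Savings from licensing one socket per node instead of two."""
    dual = sockets_per_chassis(2)    # two 16 core CPUs per node
    single = sockets_per_chassis(1)  # one 32 core "P" series CPU per node
    return (dual - single) * price_per_socket


# ASSUMPTION: hypothetical per-socket cost (license plus support), not a real price.
HYPOTHETICAL_PRICE_PER_SOCKET = 5_000.0

if __name__ == "__main__":
    print("Dual CPU sockets per chassis:  ", sockets_per_chassis(2))
    print("Single CPU sockets per chassis:", sockets_per_chassis(1))
    print("Savings per chassis:", license_savings(HYPOTHETICAL_PRICE_PER_SOCKET))
```

The socket count drops from eight to four per chassis regardless of price, which is where the halving comes from.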

With the two PCIe x16 slots plus the OCP mezzanine slot available, one has 48 PCIe lanes dedicated to those three I/O slots, plus four more for the M.2. That is 52 lanes, or 4 more than a single processor Intel Xeon Scalable solution has. The remaining 12 lanes from the first CPU power the BMC, 1GbE NICs, and the SATA III ports. One can add 50GbE or 100GbE OCP mezzanine cards and use the PCIe x16 slots for external hard drive arrays, NVMe JBOFs, or even GPUs and accelerators in external chassis. With Intel Xeon Scalable, two CPUs must be used to achieve this level of connectivity. With AMD EPYC, a single, lower-cost CPU can be used. Using the Gigabyte H261-Z60 as a 2U4N system with one CPU per node is a very valid use case.
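The lane budget described above can be tallied in a few lines. This is only a sketch: the dictionary keys are our own labels rather than names from the block diagram, and the lane counts are the ones quoted in the text, with three x16 I/O slots assumed from the 48 lane total.

```python
# Tally of the first CPU's PCIe lanes using the figures quoted in the text.
# Device labels are illustrative, not taken from the block diagram.

SLOT_LANES = {
    "pcie_x16_slot_a": 16,
    "pcie_x16_slot_b": 16,
    "ocp_mezzanine": 16,
    "m2": 4,
}

ONBOARD_LANES = 12  # BMC, 1GbE NICs, and SATA III ports, per the text
XEON_SINGLE_CPU_LANES = 48  # implied by "52 lanes or 4 more"

io_slot_lanes = sum(v for k, v in SLOT_LANES.items() if k != "m2")
slot_lanes = sum(SLOT_LANES.values())
cpu0_lanes = slot_lanes + ONBOARD_LANES

if __name__ == "__main__":
    print("Lanes on the three I/O slots:", io_slot_lanes)
    print("Slots plus M.2:", slot_lanes)
    print("Advantage over single Xeon Scalable:", slot_lanes - XEON_SINGLE_CPU_LANES)
    print("Total lanes used from first CPU:", cpu0_lanes)
```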

Next, we are going to explore the management options before moving on to the power and performance testing.

That’s a killer test methodology. Another great STH review.

Great STH review!

One thing though – how about linking the graphics to a full size graphics files? it’s really hard to read text inside these images…

Monster system. I can’t wait to hear more about the epyc 7371’s

I can’t wait to see these with Rome. I wish there was more NVMe though

My old Dell C6105 burned in fire last May and I hadn’t fired it up for a year or more before that, but I recall using a single patch cable to access the BMC functionality on all 4 nodes. There may be critical differences, but that ancient 2U4N box certainly provided single-cable access to all 4 nodes.

Other than the benefits of html5 and remote media, what’s the standout benefit of the new CMC?