Having started with early hyperscale deployments, the 2U, 4-node (2U4N) form factor is now all the rage in corporate data centers. If you need neither a large expansion footprint nor large local storage arrays, the 2U4N form factor is a huge density upgrade. In that vein, the Gigabyte H261-Z60 in this review answers that need with the densest possible air-cooled AMD EPYC platform on the market. With room for up to 8x 32-core AMD EPYC "Naples" generation CPUs per chassis and an upgrade path to next year's 64-core "Rome" parts, compute density is awesome. Using the 2U4N chassis and leaving some room for additional networking, one can now fit 80x dual CPU nodes in 40U of a rack. That means a rack of 20x Gigabyte H261-Z60 systems will yield 5120 cores and 10240 threads today, with the potential to double that in 2019.
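The rack-level figures follow from simple multiplication. Below is a minimal Python sketch of that arithmetic, assuming 20 chassis in 40U, four nodes per chassis, two sockets per node, and two SMT threads per core. The function name `rack_totals` is hypothetical and only used for illustration.

```python
# Rack-level core/thread arithmetic for a 2U4N deployment.
# Assumptions: 20 chassis (40U), 4 nodes per chassis, 2 sockets per node,
# 2 threads per core (SMT). rack_totals is a hypothetical helper.

def rack_totals(chassis: int, nodes_per_chassis: int, sockets_per_node: int,
                cores_per_socket: int, threads_per_core: int = 2) -> dict:
    nodes = chassis * nodes_per_chassis
    cores = nodes * sockets_per_node * cores_per_socket
    return {"nodes": nodes, "cores": cores, "threads": cores * threads_per_core}

# 32-core "Naples" today vs. 64-core "Rome" parts
naples = rack_totals(chassis=20, nodes_per_chassis=4, sockets_per_node=2, cores_per_socket=32)
rome = rack_totals(chassis=20, nodes_per_chassis=4, sockets_per_node=2, cores_per_socket=64)

print(naples)  # {'nodes': 80, 'cores': 5120, 'threads': 10240}
print(rome)    # {'nodes': 80, 'cores': 10240, 'threads': 20480}
```

Swapping only `cores_per_socket` from 32 to 64 is what produces the "doubling" mentioned above; node count and rack space stay the same.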

In this review, we are going to show you around the system, talk about management, and finally share some performance figures and our final thoughts.

Gigabyte H261-Z60 Hardware Overview

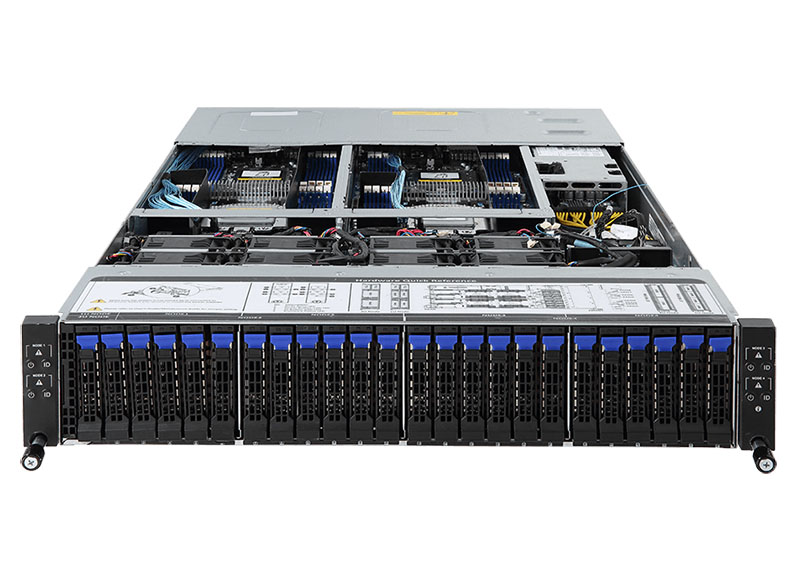

The Gigabyte H261-Z60 is a 2U chassis. The front of the chassis looks like many other 2U 24-bay storage chassis on the market, with a few differences. On each rack ear, there is a pair of node power and ID buttons. Our configuration used SATA III 6.0Gbps connections to the front drive bays, six bays per node. One can add a SAS controller to make these bays SAS-capable, but we think most buyers will opt for the SATA configuration.
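With 24 bays shared by four nodes, each node gets six drives. A rough Python sketch of the per-node SATA link ceiling follows, assuming an even split and one dedicated SATA III link per bay. SATA III signals at 6.0Gbps with 8b/10b encoding, which is where the commonly quoted ~600 MB/s per port comes from. These are theoretical link limits only; real drives and controllers will deliver less.

```python
# Front-bay split and theoretical SATA III link ceiling per node.
# Assumptions: 24 bays divided evenly across 4 nodes, one SATA III link per bay,
# 8b/10b encoding (8 data bits per 10 line bits), protocol overhead ignored.

TOTAL_BAYS = 24
NODES = 4
SATA3_LINE_RATE_BPS = 6_000_000_000  # 6.0Gbps raw signalling rate

bays_per_node = TOTAL_BAYS // NODES
# bits/s -> data bits/s (x 8/10) -> bytes/s (/ 8) -> MB/s (/ 1e6), integer math
port_mb_s = SATA3_LINE_RATE_BPS * 8 // 10 // 8 // 1_000_000
node_mb_s = bays_per_node * port_mb_s

print(bays_per_node, port_mb_s, node_mb_s)  # 6 600 3600
```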

Just above the front of the chassis, Gigabyte affixes a sticker with basic node information, allowing one to quickly navigate the system. This type of on-chassis documentation was previously found only on servers from large traditional vendors like HPE and Dell EMC. Gigabyte has taken a massive step forward in recent years, paying attention to detail even on small items such as these labels.

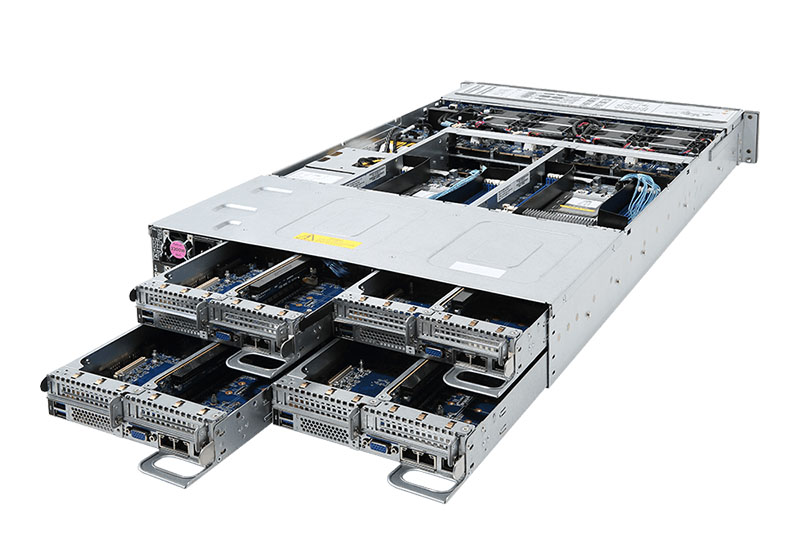

Since this is a 2U 4-node design, there are four long nodes, each of which pulls out and can be hot swapped from the rear of the chassis. This makes servicing extremely easy. If you have a rack of these servers, you can move a node into another chassis without having to deal with rack rails. From a servicing perspective, the 2U4N design is excellent.

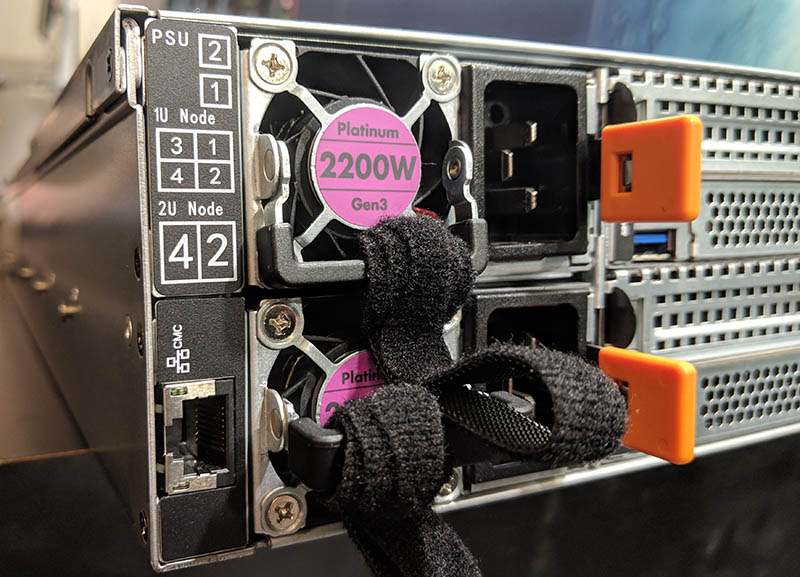

Power is provided to the chassis via dual 80 PLUS Platinum rated 2.2kW power supplies. These are the same power supplies we saw in our Gigabyte G481-S80 8x NVIDIA Tesla GPU Server Review. One of the big benefits of the 2U4N design is that you can use two power supplies for redundancy instead of eight power supplies across four individual 1U servers. Because two shared supplies carry a larger fraction of their rated load than eight lightly loaded ones, they can typically run at higher efficiency, which lowers overall power consumption for the four servers. Cabling is also reduced from eight power cables to two with a 2U4N server like the Gigabyte H261-Z60.
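A quick Python sketch of the redundancy trade-off, under stated assumptions: the two 2.2kW supplies run 1+1 redundant, so one supply must be able to carry the whole chassis; the 2.2kW rating applies at the available input voltage; shared fans and the CMC are ignored. The helper `per_node_budget_w` is hypothetical.

```python
# Per-node power budget under 1+1 PSU redundancy, plus power-cord count.
# Assumptions: 1+1 redundancy, full 2.2kW rating available, fan/CMC draw ignored.

PSU_RATED_W = 2200
PSU_COUNT = 2
NODES = 4

def per_node_budget_w(psu_rated_w: int, psu_count: int, nodes: int,
                      redundant: int = 1) -> float:
    """Watts available per node if `redundant` supplies may fail."""
    usable_w = psu_rated_w * (psu_count - redundant)
    return usable_w / nodes

budget = per_node_budget_w(PSU_RATED_W, PSU_COUNT, NODES)
power_cords_2u4n = PSU_COUNT
power_cords_4x1u = NODES * 2  # four 1U servers, each with two redundant PSUs

print(budget, power_cords_2u4n, power_cords_4x1u)  # 550.0 2 8
```

Roughly 550W per dual-socket node is the ceiling to keep in mind when choosing CPUs and add-in cards if you want to keep full redundancy.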

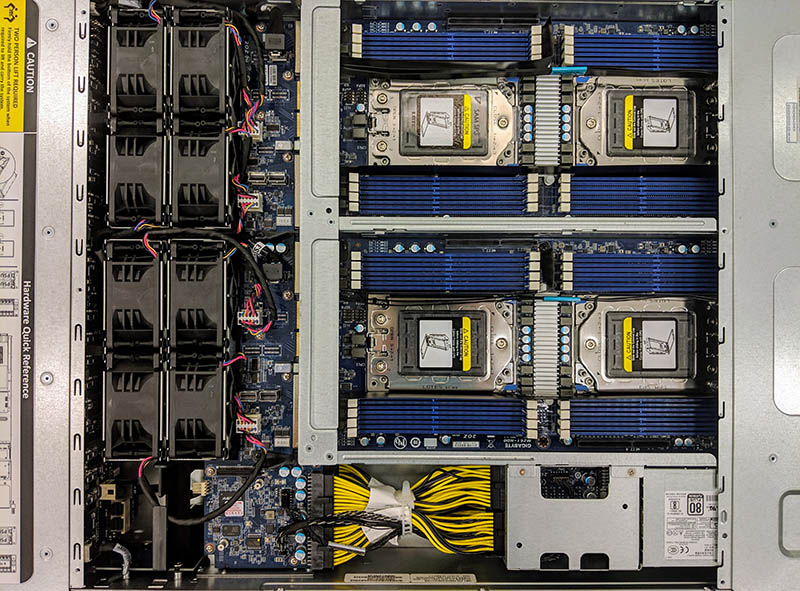

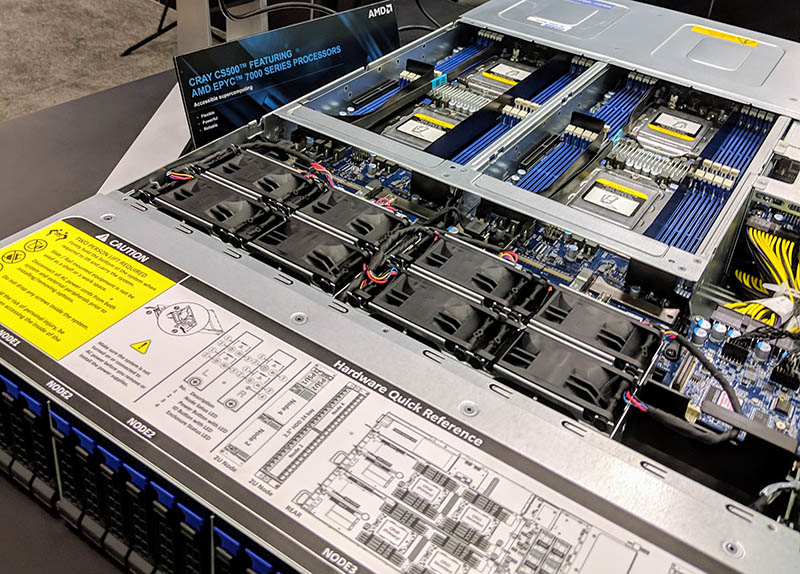

Looking at the server from the top with the lid removed, one can see four pairs of chassis fans cooling the dual processor nodes. With only eight fans per chassis, there are fewer parts to fail than in four traditional 1U servers, and the airflow the fans provide can be used more effectively, leading to power savings over less dense options.

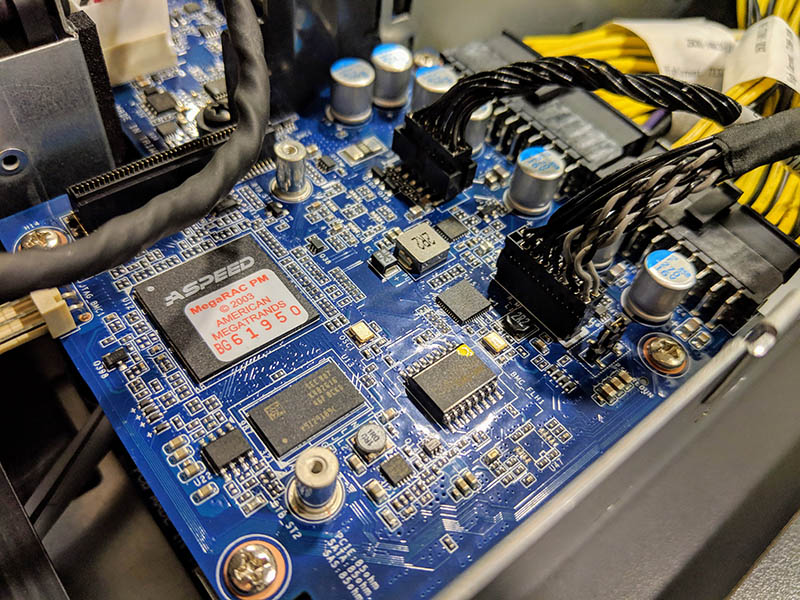

You may have noticed the small PCB in the bottom left of that picture. That is the Central Management Controller (CMC), which our Gigabyte H261-Z60 test unit came equipped with. The CMC has its own baseboard management controller and provides a single connection for all four node BMCs, reducing cabling further.

Gigabyte H261-Z60 Nodes

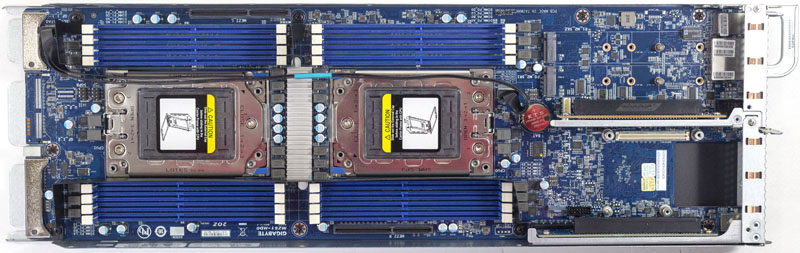

Each Gigabyte H261-Z60 node, and there are four of them per chassis, is a complete system in itself. The hot swap tray contains a Gigabyte MZ61-HD0 motherboard that supports dual AMD EPYC processors with 8 DIMMs per CPU, for 16 DIMMs total.
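Scaling the DIMM count up to chassis and rack level is again simple multiplication. AMD EPYC 7001 ("Naples") processors have eight DDR4 channels per socket, so 8 DIMMs per CPU works out to one DIMM per channel. In the Python sketch below, `DIMM_GB` is a hypothetical module size chosen only for illustration, and the 20-chassis rack matches the density example earlier in the review.

```python
# DIMM slot counts per node, chassis, and 20-chassis rack.
# From the text: 8 DIMMs per CPU, 2 CPUs per node, 4 nodes per chassis.
# EPYC 7001 has 8 DDR4 channels per socket -> 1 DIMM per channel.
# DIMM_GB is a hypothetical capacity; substitute the modules you deploy.

DIMMS_PER_CPU = 8
CHANNELS_PER_SOCKET = 8
CPUS_PER_NODE = 2
NODES_PER_CHASSIS = 4
CHASSIS_PER_RACK = 20
DIMM_GB = 32  # hypothetical

dimms_per_node = DIMMS_PER_CPU * CPUS_PER_NODE
dimms_per_chassis = dimms_per_node * NODES_PER_CHASSIS
dimms_per_rack = dimms_per_chassis * CHASSIS_PER_RACK
dimms_per_channel = DIMMS_PER_CPU // CHANNELS_PER_SOCKET

print(dimms_per_node, dimms_per_chassis, dimms_per_rack, dimms_per_channel)  # 16 64 1280 1
print(dimms_per_node * DIMM_GB, "GB per node")  # 512 GB per node
```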

At the rear of the unit are two low profile, half-length PCIe x16 slots. These are accessed by removing a few screws. We hope Gigabyte can make this a tool-less design in the future. That said, most 2U4N nodes like this use screws primarily for structural rigidity, ensuring that the nodes keep the proper dimensions to fit neatly into the larger chassis.

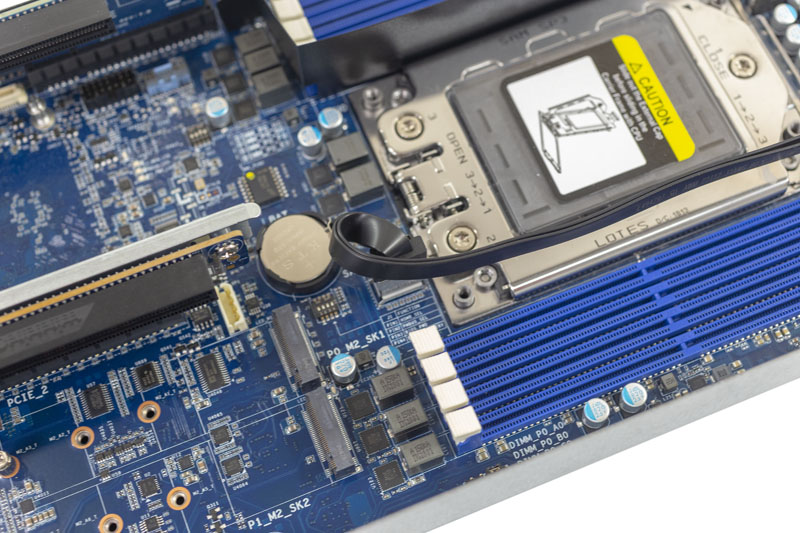

One also gets an OCP mezzanine card slot and two M.2 NVMe slots per node. Here you can see a Mellanox 10GbE OCP mezzanine card installed, as well as an SK Hynix M.2 22110 NVMe SSD. Even in this compact form factor, Gigabyte offers two full-length M.2 22110 (110mm) slots. This is important because 110mm drives typically have room for power loss protection, while 80mm drives often do not due to space constraints. This is a differentiating design feature for the H261-Z60.
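The M.2 size code encodes the module dimensions: the first two digits are the width in millimeters and the remaining digits are the length. A small Python sketch decoding it is below; `parse_m2_size` is a hypothetical helper, and it only checks the basic shape of the code rather than validating against the full list of sizes in the M.2 specification.

```python
def parse_m2_size(code: str) -> tuple[int, int]:
    """Split an M.2 size code such as "2280" or "22110" into (width_mm, length_mm).

    The first two digits give the module width and the remaining digits give
    its length, both in millimeters. Only the basic format is checked.
    """
    if not code.isdigit() or len(code) not in (4, 5):
        raise ValueError(f"not an M.2 size code: {code!r}")
    return int(code[:2]), int(code[2:])

for code in ("2280", "22110"):
    width, length = parse_m2_size(code)
    print(f"M.2 {code}: {width} mm wide, {length} mm long")

# Extra board length a 22110 slot allows over a 2280 slot
extra_mm = parse_m2_size("22110")[1] - parse_m2_size("2280")[1]
print(extra_mm, "mm")  # 30 mm
```

Both sizes share the same 22mm width; the 22110 slot simply gives a drive 30mm more board, which is the space typically used for power loss protection capacitors.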

Each node also has two USB 3.0 ports, a VGA port, dual 1GbE networking, and a built-in management port.

Mass storage is provided via a cabled interface from the motherboard. When in place, this also supports proper airflow through the chassis.

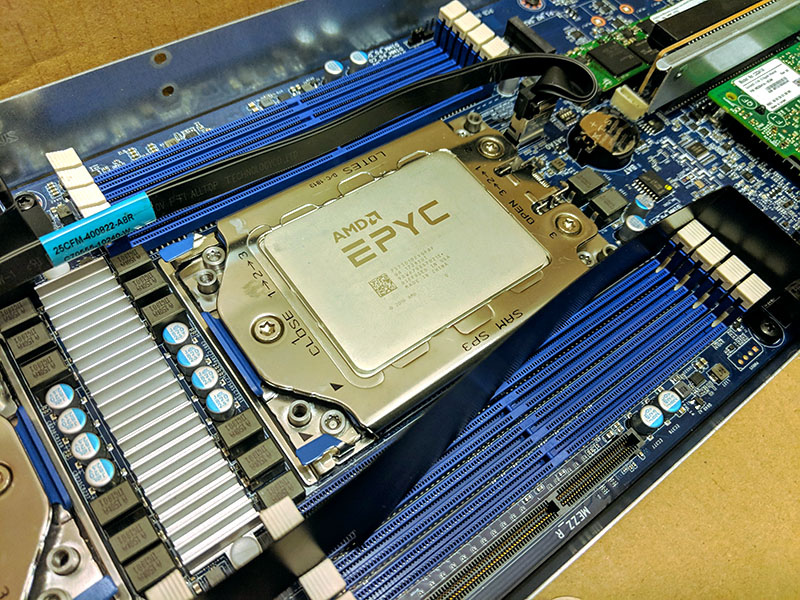

We were able to configure this server with everything from 32-core “Naples” generation processors to even the new high-frequency AMD EPYC 7371 processors.

During the review, we actually saw this hardware at Supercomputing 2018. Cray chose the Gigabyte H261-Z60 as the basis for its CS500 supercomputing platform.

Gigabyte’s hardware and attention to detail have gotten much better over time to the point that well-known vendors like Cray are using hardware like the H261-Z60 in their high-performance computing systems.

Next, we are going to take a look at the system topology before we move on to the management aspects. We will then test these servers to see how well they perform.
