Gigabyte has been making an aggressive push into the server market, and this week, just in time for GTC in San Jose, California, it is releasing the Gigabyte GS-R22PHL. The GS-R22PHL is a dual Intel Xeon E5-2600 2U server that supports up to eight 2.5″ drives and 512GB of RAM. While this would likely have been considered a fast supercomputing platform a few years ago, in today’s market accelerators such as the NVIDIA Tesla series or the Intel Xeon Phi series are important for speeding up code that is highly parallel and small enough to fit in the limited memory of an accelerator card. The Gigabyte GS-R22PHL keeps the centerline of the chassis focused on a standard dual LGA2011 Intel Xeon E5-2600 server, while the sides of the rackmount chassis are reserved entirely for GPUs or Intel Xeon Phi cards and the supporting fans needed for proper airflow.

The Gigabyte GS-R22PHL is designed for a heavy power load. With up to eight 200+ watt GPU or Intel Xeon Phi cards installed, plus two Intel Xeon E5-2600 series CPUs and 10GbE connectivity, a fully populated chassis will draw a lot of power. Gigabyte offers redundant 1600W power supplies that are 80 PLUS Platinum certified. These power supplies may seem extreme, but they are needed in the Gigabyte GS-R22PHL.

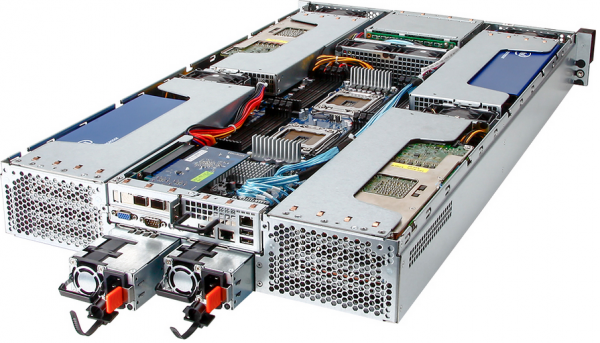

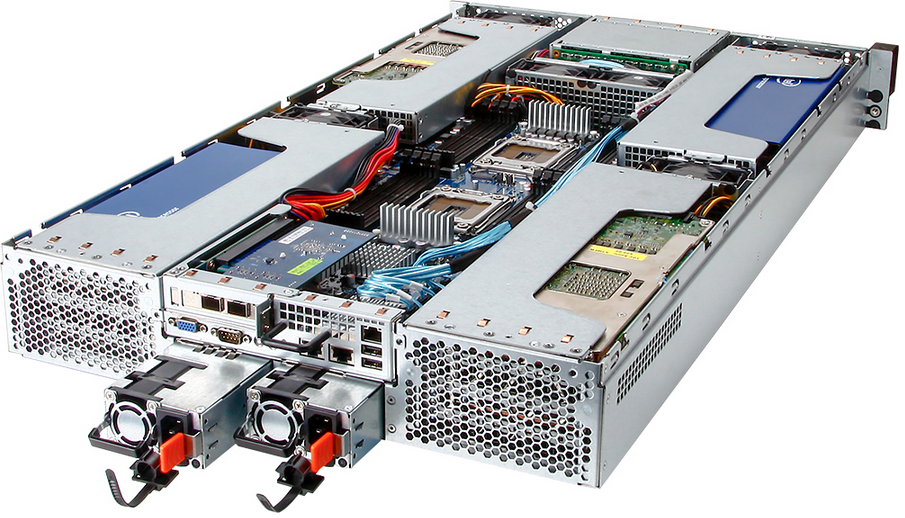

The main purpose of the chassis is to support up to eight dual-slot accelerators, such as NVIDIA Tesla series GPUs or Intel MIC co-processors. The picture above shows four accelerator card cages, two on each side of the chassis. There is direct airflow to the PCIe cards, and mid-chassis fans move air through at a very fast rate. Here is Gigabyte’s photo with two Intel Xeon Phi cards in an expansion cage.

Quick specs for the Gigabyte GS-R22PHL supercomputing platform can be found in the following togglebox:

[toggle_box title=”Gigabyte GS-R22PHL Specifications” width=”Width of toggle box”]

| Specification | Details |
| --- | --- |
| Form Factor | 2U, 448 x 87.5 x 800 mm (W x H x D) |
| CPU | Dual LGA 2011 sockets for dual Intel Xeon E5-2600 Series CPUs |
| Chipset | Intel C602 |
| Memory | 16 x DIMM slots supporting registered or ECC unbuffered memory (512GB max) |
| LAN | Dual 10GbE BASE-T ports (Intel X540); dual 10GbE SFP+ ports (Broadcom BCM57810) via mezzanine card (optional); management LAN |
| HDD | 8 x 2.5″ hot-swappable HDD bays |
| RAID Function | Intel SATA RAID 0/1/5/10; LSI SAS 2208 RAID 0/1/10/5/50/6/60/JBOD (optional) |
| Expansion Slots | 2 x mezzanine IF card slots |
| Power Supply | Redundant 1+1 1600W 80 PLUS Platinum level (94%+) power supplies |
| Server Management | Aspeed AST2300 with IPMI 2.0 and iKVM |

[/toggle_box]

It is always good to see additional competition in the server market. Gigabyte has been venturing to do some things slightly differently with its machines, such as using Avocent-based IPMI 2.0 instead of a standard AMI MegaRAC offering.

Cool, my next BF3 machine.

A few things are unclear:

1) How could 1600W possibly be enough for 8 MIC cards (each rated at 225W, i.e. up to 1800W total) + 2 CPUs (~200W) + RAM etc.?

2) 8 MICs or GPUs need 128 (8×16) PCIe lanes, plus lanes for the 2x10Gb ports, lanes for the mezzanine cards etc., but 2 Xeon E5s only have 80 (2×40). Does that mean there are PCIe switch chips on the motherboard, or are they running the MICs/GPUs in x8 mode (if >4 are installed)?

3) What’s the approximate price for a barebones server (without MICs/GPUs/CPUs/RAM etc.), and who’s selling (and supporting) it in the US?
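For anyone who wants to check the arithmetic behind questions 1 and 2, here is a rough sketch in Python. Every number is taken from the comment above or the spec table (225W per card, ~200W for both CPUs, 1600W supply, x16 per card, 40 lanes per Xeon E5). The function names are made up for illustration, and the power check assumes a 1+1 redundant pair should be able to carry the full load on a single 1600W supply. It ignores RAM, drives, fans and NICs, so real headroom would be lower still.

```python
# Back-of-the-envelope budgets using the figures quoted in the questions above.
# These are rated/quoted numbers, not measurements.
CARD_WATTS = 225        # per-card rating quoted for Intel MIC
NUM_CARDS = 8
CPU_WATTS_TOTAL = 200   # "~200W" quoted for both CPUs together
PSU_WATTS = 1600        # assumption: one supply of the 1+1 pair carries the load

LANES_PER_CPU = 40      # PCIe lanes per Xeon E5-2600 socket
NUM_CPUS = 2


def power_budget(cards=NUM_CARDS):
    """Return (estimated load in watts, headroom in watts on one PSU)."""
    load = cards * CARD_WATTS + CPU_WATTS_TOTAL
    return load, PSU_WATTS - load


def lane_budget(cards=NUM_CARDS, lanes_per_card=16):
    """Return (lanes needed by the cards, lanes left over from both CPUs)."""
    needed = cards * lanes_per_card
    available = NUM_CPUS * LANES_PER_CPU
    return needed, available - needed


if __name__ == "__main__":
    print("power (load, headroom):", power_budget())
    print("lanes at x16 (needed, left):", lane_budget())
    print("lanes at x8  (needed, left):", lane_budget(lanes_per_card=8))
```

With these inputs, eight cards at x16 come out 48 lanes short, while eight cards at x8 would fit with 16 lanes to spare for the network ports and mezzanine cards. That is consistent with either PCIe switch chips or x8 operation, but it does not tell us which one Gigabyte uses.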

Igor, I don’t think that’s true. MICs may be x8, but they only need like x4 max and maybe less.

Yeah, no need for 16 lanes, especially at PCIe version 2 or 3. The PSUs are on the light side, but the figures quoted by Igor are also on the high side. Huge CPUs are not required for anything more than PCIe lanes. You won’t get 8 huge cards in there and keep heat under control either. Big cards should never be placed that close together, and it is a FAIL on the part of most manufacturers who try placing them side by side with no way to get air into them. You won’t put 8 video cards in with a Windows OS though, since they are still limited to 4 cards max.

Both Intel MIC and new GPUs (including Tesla) are x16, and even if they can run in an x8 slot, it’ll seriously bottleneck their performance in many cases.

Figures quoted by me are from Intel (ark.intel.com), and for some classes of problems the cards can actually need >200W. Given how expensive each card is (>$2,000/card for either Intel MIC or new Nvidia Teslas), it seems like a really strange idea to use more than 4 cards in a 2-CPU server.
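To put rough numbers on the x8 versus x16 argument, here is a small Python sketch of raw per-direction link bandwidth for PCIe 2.0 and 3.0. The per-lane rates and encodings (5 GT/s with 8b/10b, 8 GT/s with 128b/130b) are the standard PCIe figures. The function name is made up for illustration, and the result ignores packet and protocol overhead, so real transfer rates will be lower.

```python
# Raw PCIe link bandwidth per direction, before protocol overhead.
# Maps generation -> (transfer rate in GT/s per lane, line-encoding efficiency).
PCIE_GEN = {
    2: (5.0, 8 / 10),      # PCIe 2.0: 5 GT/s, 8b/10b encoding
    3: (8.0, 128 / 130),   # PCIe 3.0: 8 GT/s, 128b/130b encoding
}


def link_gbytes_per_s(gen, lanes):
    """Approximate one-direction bandwidth in GB/s for a link of `lanes` lanes."""
    gt_per_s, efficiency = PCIE_GEN[gen]
    return gt_per_s * efficiency / 8 * lanes  # divide by 8: bits to bytes


if __name__ == "__main__":
    for gen in (2, 3):
        for lanes in (8, 16):
            rate = link_gbytes_per_s(gen, lanes)
            print(f"PCIe {gen}.0 x{lanes}: {rate:.2f} GB/s")
```

Dropping from x16 to x8 halves the link rate within a generation, while a PCIe 3.0 x8 link lands close to a PCIe 2.0 x16 link. Whether that halving matters depends on how often the workload moves data between host and card, which is the point being argued here.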

Igor, I think you are missing the point of this system. It is not aimed at desktop/workstation use. It is aimed at render farms and distributed GPU computing. There are no Intel CPUs that draw 200W; the biggest is 150W TDP. However, this system does not need such big CPUs: the CPUs are there for nothing more than PCIe lanes and distributing workloads to the GPU compute cards.