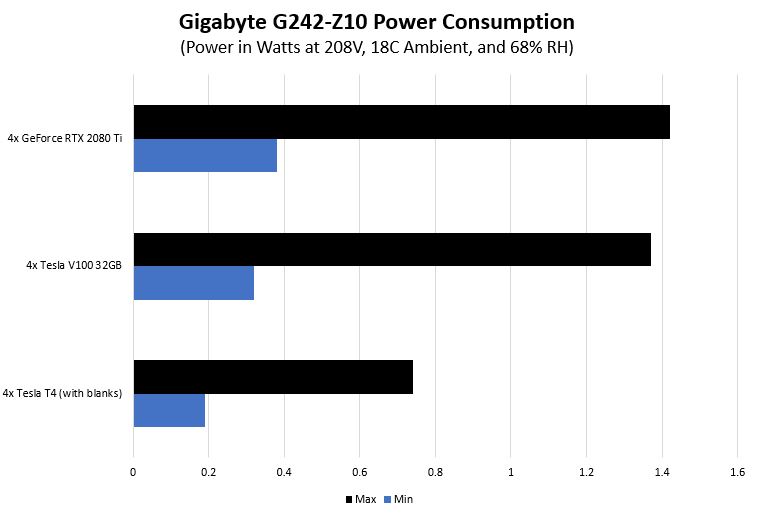

Gigabyte G242-Z10 Power Consumption

We settled on an AMD EPYC 7402P configuration with 256GB of RAM (8x 32GB) and the QLogic 4x 25GbE NIC, then varied the GPUs installed in the system.

Perhaps the most interesting result is that the GeForce RTX 2080 Ti Blower configuration used the most power. It seems the extra power draw from the blower-style cards pushed the chassis fans to spin harder, which added to overall power consumption at the server level. That was not our hypothesis, but it makes some sense given the fan speed ramp we observed. We would have liked to test the new Tesla V100S and the Radeon Instinct MI60, but we did not have those GPUs on hand and could not source units to borrow. Still, this set gives a decent sense of scaling.

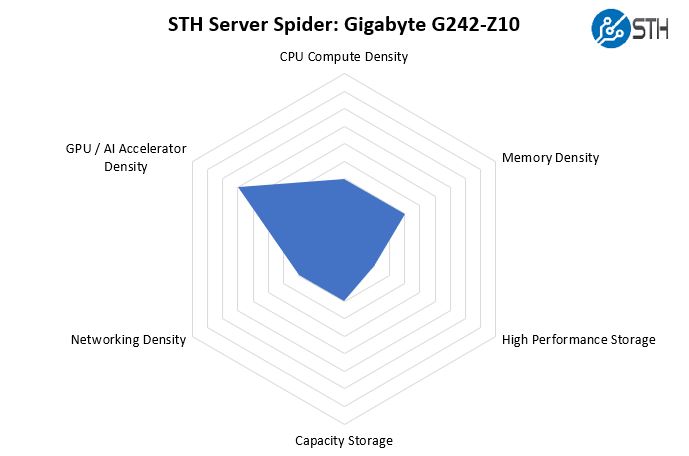

STH Server Spider: Gigabyte G242-Z10

In the second half of 2018, we introduced the STH Server Spider as a quick reference for where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the parameters a server is targeted at.

The Gigabyte G242-Z10 is not the densest system. It has four 3.5″ drive bays, but it is not a capacity storage server. It has two 2.5″ NVMe drives and a single M.2 slot, but it is not an NVMe server. There are two GPUs per rack U and a single CPU with 8x DIMM slots. That balance makes it a good fit for the many existing racks and facilities that are not chasing 40kW+ per-rack loads.

Final Words

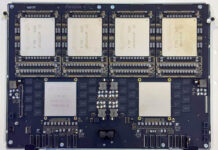

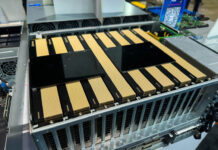

This is one of those platforms that I wish we had in the lab. It is perhaps one of the easiest 2U servers on which to service GPUs, since Gigabyte is doing something novel with the G242-Z10’s PCIe risers. In terms of density, since we do not currently run the highest-density racks, it is just about perfect for us.

We had a few minor suggestions for improvement. Small changes, such as relocating the battery as we pointed out, would be nice-to-have upgrades. Still, the overall attention to detail on items such as the tool-less drive trays is excellent.

Thanks for being at least a little critical in your recent reviews like this one. I feel like too many sites say everything’s perfect. Here you’re pushing and finding covered labels and single-width GPU cooling. Keep up the good work, STH.

As it’s using less than the total power of a single PSU, does this mean you can actually have true PSU redundancy?

Also, I could have sworn that I saw pictures of this server model (maybe from a different manufacturer) with non-blower GTX/RTX desktop GPUs installed (maybe because of the large space above the GPUs). I don’t suppose you tried any of these, since they run at higher clock speeds?

https://www.asrockrack.com/photo/2U4G-EPYC-2T-1(L).jpg

https://www.asrockrack.com/general/productdetail.asp?Model=2U4G-EPYC-2T#Specifications

I am looking forward to seeing similar servers with PCIe 4.0!

Been waiting for a server like this ever since Rome was released. Gonna see about getting one for our office!

For my application, I need display output from one of the GPUs. Can’t even the rear GPU be set up so that I can plug in a display?

To Vlad: check the G482-Z51 (rev. 100)

Has anyone tried using Quadro or GeForce GPUs with this server? They aren’t on the QVL, but they are explicitly described in the review and can be spec’d on some system builders’ websites.