Gigabyte E283-S90 Block Diagram

Here is the block diagram for the server:

Overall, this is a very balanced system with almost a 50/50 split of resources between CPU0 and CPU1. The Intel C741 PCH is attached to CPU0, as we would expect. Some may wonder why the OCP NIC 3.0 slots have a “Switch” between them. This is not a big PCIe switch or a big management switch. Instead, it allows the NC-SI signal to go to the OCP NIC 3.0 slots as well as the Intel i350-am2.

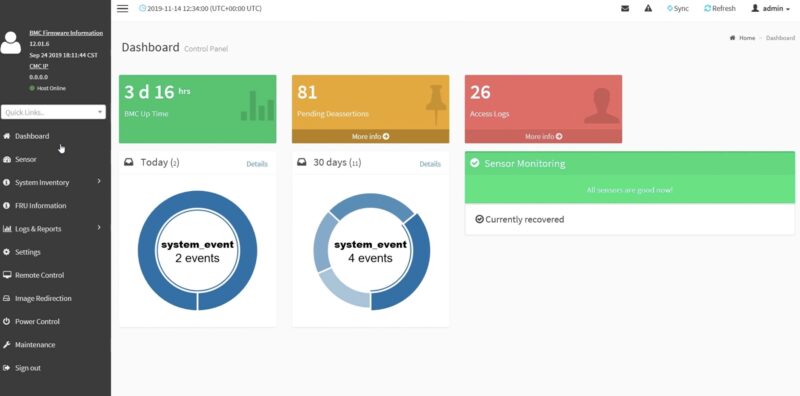

Gigabyte E283-S90 Management

On the management side, we get something very standard for Gigabyte: an ASPEED AST2600 BMC. This is on its own card, which is becoming more common than putting the BMC on the motherboard. Having a BMC card means one can easily change the BMC for other customers or regions.

Gigabyte is running standard MegaRAC SP-X on its servers, which is a good thing for IPMI, web management, and Redfish.
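For readers who script against Redfish, here is a minimal sketch of pulling manager (BMC) resource paths out of a manager collection response. The `/redfish/v1/Managers` collection and the `Members` / `@odata.id` fields come from the DMTF Redfish schema. The `manager_uris` helper and the sample payload, including the `Self` member name, are hypothetical illustrations and were not captured from this server.

```python
import json


def manager_uris(collection_json: str) -> list[str]:
    """Return the @odata.id of each member in a Redfish collection payload."""
    collection = json.loads(collection_json)
    # A Redfish collection lists its resources under "Members",
    # each entry being a link object with an "@odata.id" path.
    return [member["@odata.id"] for member in collection.get("Members", [])]


# Hypothetical response body for GET /redfish/v1/Managers (not from this server).
sample = """
{
  "@odata.id": "/redfish/v1/Managers",
  "Members": [{"@odata.id": "/redfish/v1/Managers/Self"}],
  "Members@odata.count": 1
}
"""

print(manager_uris(sample))
```

In practice, the payload would come from an authenticated HTTPS request to the BMC, which is left out here to keep the sketch self-contained.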

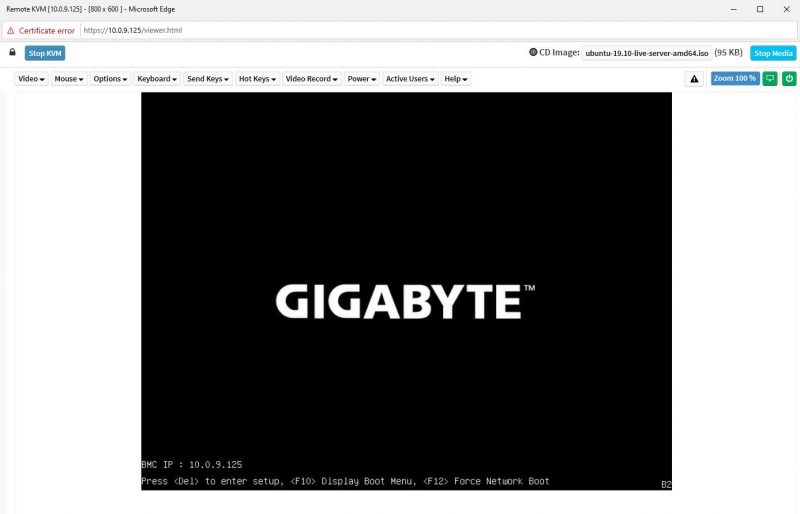

Gigabyte also includes an HTML5 iKVM.

Something a bit different is that this server has a unique BMC password. For those who have not purchased servers in years, this is now common. We wrote Why Your Favorite Default Passwords Are Changing a few years ago to explain why this is the case.

Something that we were not fans of was that the BMC password on this system was on a sticker on the side of the case, which is a pain to access once the server is racked.

Next, let us do a quick performance check.

Gigabyte E283-S90-AAV1 Performance

The 4th Gen and 5th Gen Intel Xeon Scalable processors will continue in the lower-power P-core segment for a few more months as Intel slowly rolls out its various Xeon 6 platforms. Still, that means we have a very well-known platform.

Something we were worried about was the ability of the server to handle higher TDP CPUs, but that turned out to be a relative non-issue. We were running a 350W TDP CPU, right at the limit of the server, and the results were still within +/- 3% of our baseline, which many would consider test variation. Still, the server was slightly below some other 2U designs. This is solid, but not superior, performance.
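As a rough illustration of what a +/- 3% band means, here is a small sketch that checks whether a result falls within a given percentage of a baseline. The function name and the scores are hypothetical and are not measurements from this review.

```python
def within_tolerance(result: float, baseline: float, tolerance_pct: float = 3.0) -> bool:
    """Return True if result deviates from baseline by at most tolerance_pct percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    # Signed deviation from the baseline, expressed in percent.
    deviation_pct = (result - baseline) / baseline * 100.0
    return abs(deviation_pct) <= tolerance_pct


# Hypothetical benchmark scores with the baseline normalized to 100.
print(within_tolerance(98.2, 100.0))  # 1.8% below baseline
print(within_tolerance(95.0, 100.0))  # 5.0% below baseline
```

A result inside the band can still sit consistently below other systems, which is the situation described above.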

Next, let us get to the power consumption of the server.

What’s the reliability like for Gigabyte servers? I’ve had a few of their components over the years (motherboards, video cards and power supplies) and I found them pretty flaky and had to return a few, until eventually I got ones that worked well enough for a year or two then developed problems again. Since then I’ve steered clear of them. Are their servers similar in this respect, or do they design them better than their consumer gear?

This looks like it was designed backwards in comparison to the servers in your reviews of the Supermicro Hyper-E / SYS-220HE-FTNR, Gigabyte E251-U70, Supermicro ARS-210ME-FNR 2U, or ASUS EG500-E11, and the (not reviewed) Asus EG520-E11-RS6-R.

Were it necessary to have rear I/O, Mitxpc seems to have a good idea with their mini rackmount servers, where the I/O is reversible and can be moved to the front instead.

If you plan on swapping out fans more than anything else I can see the benefit of this design.

Still, thanks for reviewing this, as it caters to someone who wants this; maybe for their colocated rack power sized systems.

Is there a way to search the server reviews based on the spider score? Say I am interested in high compute density and would like to just read the reviews above a particular score?

Hey Ryan, we do not have this, but it is a good idea. We would probably need to bound it by time or generation as well. A high-density compute system from 2019 with 64 cores per socket will look very small by Q1 2025.

I cannot see any U.2 or U.3 SSD slots… instead, poor old SATA!

Last year, I was able to buy Kingston U.2 enterprise drives for less than their SATA counterparts. But these excellent U.2 drives have been discontinued and not replaced by Kingston.

Nevertheless, the Micron U.3 drives are accepted by my Dell PowerEdge R650.

I still see a lot of crazy high prices for 12 Gb/s SAS SSDs, which are faster than SATA but much slower than NVMe on PCIe Gen3 or Gen4.

Maybe the reason for this situation is that CTOs are afraid of buying their SSDs from a third-party seller and stick with the server manufacturer (i.e. Dell, HP, Lenovo).