Key Lessons Learned

The AMD EPYC 8004 family is really interesting. Using Zen 4c allows AMD to deliver a CPU that is still faster on a per-core basis than previous generations such as Xeon E5 v4 and Skylake/Cascade Lake. One can then get more cores and consolidate to a single socket with more I/O than the outgoing Xeons being replaced. The biggest benefit is power consumption: this platform can fit into 2U servers with power budgets under 150W per U.

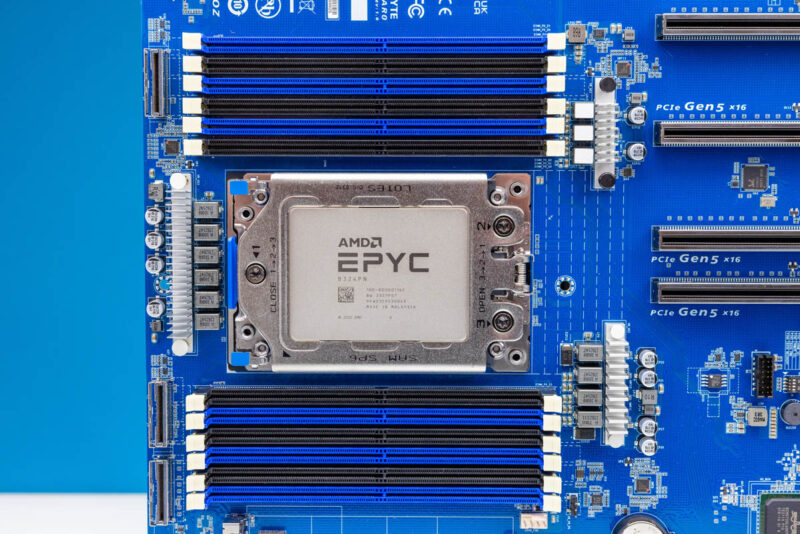

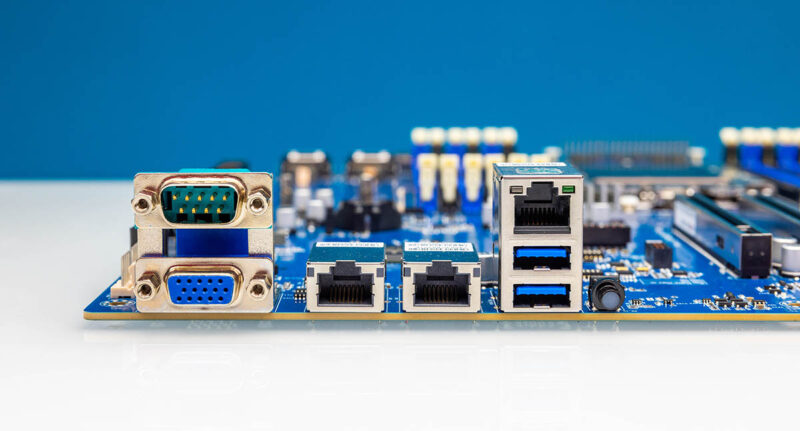

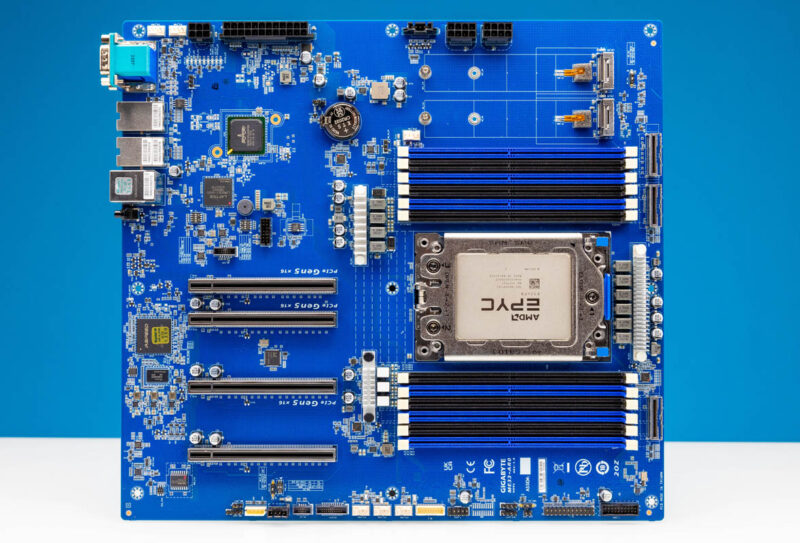

This motherboard is somewhat fun. It is huge. There is tons of I/O, but then the onboard networking is a low-cost 1GbE solution. Frankly, in 2023-2024 we would want to see something a bit higher-end, but this is a cost-saving move. Being able to fit into standard EATX cases is a big plus, since a standard form factor is easier to integrate.

This motherboard is very different from the other EPYC 8004 motherboards we have seen.

Final Words

Gigabyte's platform has not changed much in the past few generations. What the company has done in this generation is position it as a lower-cost, lower-power platform instead of trying to fit the highest core count and highest-power parts.

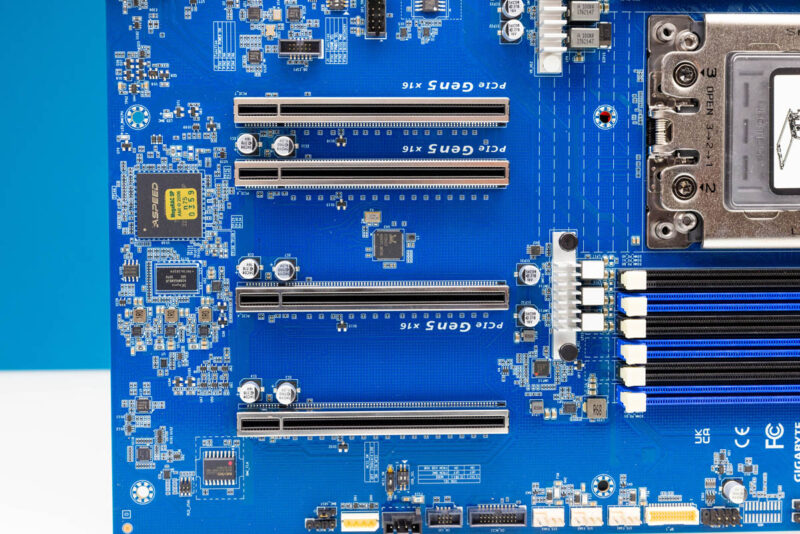

Some folks will look at this motherboard and immediately be drawn to the I/O possibilities. There is a lot that can be done with a motherboard like this. Having newer PCIe Gen5 I/O along with DDR5 and more cores means that not only can one do a 2:1 socket consolidation (or more) from older platforms to this EPYC 8004 platform, but there are new platform features as well.

Some will look at the EATX size and think that this is too big for a single-socket platform. This is far from the densest motherboard we have seen for the EPYC 8004 series. At the same time, it also has a unique set of I/O. If we look back at the history of the line, it starts to make a lot of sense. This is the third generation of -AR0 board we have looked at. There is a market for lower-cost single-socket platforms that have a lot of storage and PCIe I/O. The Gigabyte ME33-AR0 is the latest iteration of this edge motherboard line.

Hi Eric,

Could you please provide some Info about power consumption of the board?

Thanks,

Emil

With the CPU & RAM placement like this, what can one do with these PCIe slots? Is it only suitable for NICs?

Goodness, such an I/O connected board with only 1G ethernet? I fail to understand why mid-end or higher-end boards don’t have at least one 10G port and a 2.5G port

What an odd board.

Hello,

I am running HPC servers for FEA consulting that I do. Could you comment on the idle power of this board as I am actively looking for HPC server solutions with low idle power consumption to replace an older 4 socket Xeon system. My current dual socket EPYC 9554 system pulls around 475W at idle and my older 4 socket Xeon system pulls close to 750W at idle.

Sheesh. What a weird layout.

I hope no one needs any long PCIe cards.

Indeed Eric, a word about the placement of the PCIe slots would be interesting. What’s the idea here? Always use risers? Did you talk to Gigabyte about it?

These boards aren’t usually bought by those who use GPU accelerators

figure mostly NICs, HBAs, or U.2 breakout cards

That PCIe placement is a Gigabyte trademark, I guess. It is the most terrible design that I have seen.

You can’t use any card that is bigger than the x16 slot. Quick reminder: almost every RAID controller has cables that go not to the top, but to the side.

You wanna install something like a PERC H755? Hah, shame on you, you only have 1(!) port to do it, otherwise you will be on top of the memory DIMMs.

And the spacing between the PCIe slots is more suited to GPUs (since they are two slots wide), but nope, you can’t use it for that.

So I guess it’s just for HBA or retimer cards, but they are ALSO bigger than the x16 slot. So the cables from them will go into the CPU heatsink, yikes.

I just don’t understand why Gigabyte continues to do this..

Is that 150W figure for the whole board? Because that would truly be amazing if not impossible

2024, at least 10GbE should be the bare minimum standard.

If I were the designer of this board, I would have swapped the placement of the M.2 with the DIMM/CPU

That at least would render the PCIe slots usable….

so close, so far

That is the stupidest board layout I have ever seen. Should have left out the PCIe slots and sold it cheaper, since they are blocked by the CPU & RAM.

Folks, I see no one in the commenting crowd gets this. This is a dedicated home/lab/SOHO server board. As were the previous AR0 series boards.

Thanks to no high-power components, besides the CPU, this board is fully compatible with desktop/tower cases as well as ANY cheap/simple rack chassis.

The absence of a HOT 10GbE chip is a FEATURE. Not a bug. Install an SP3 Noctua, and there ya go!

There are 3 x16 slots for suitable NICs, HBAs or x4 NVMe carriers. All of these are full-height/half-length cards. If someone needs spinning rust, there are 16 native SATA ports for some ZFS goodness.

Lastly, the bottom slot is full x16 PCIe gen4, so even a monster GPU can be supported in most cases.