The new Fungible GPU-Connect solution aims to bring fabric-attached GPUs to clouds and enterprises. The motivation behind this announcement is that the current infrastructure trend is to match GPU needs to servers in fixed configurations. With Fungible DPUs, the goal is to flip this and deliver GPUs to servers over the network, much as NVMe storage is delivered with NVMeoF.

Fungible Adds NVIDIA GPU Orchestration to its DPUs

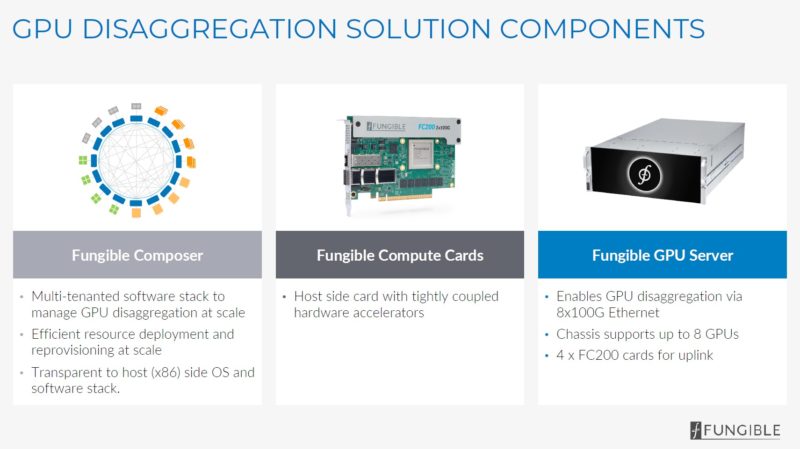

As part of the announcement, Fungible is using its composer software to handle orchestration. The Fungible FC200 cards serve as high-speed DPUs. Judging by the pictures, the Fungible GPU server is a Supermicro JBOG chassis with Fungible DPUs that is used to get NVIDIA GPUs onto the fabric.

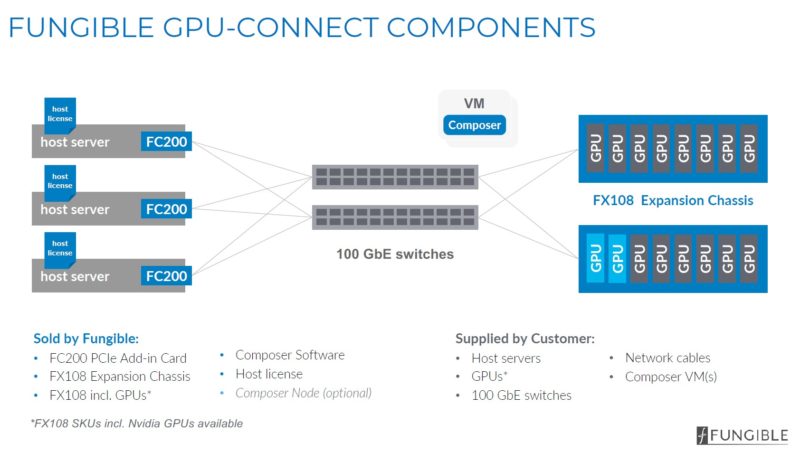

Fungible is using its high-end FC200 DPUs both in the host and as the uplink in the GPU server. The fabric connectivity is powered by 100GbE, which makes it easier for many organizations to integrate than other fabrics such as InfiniBand.

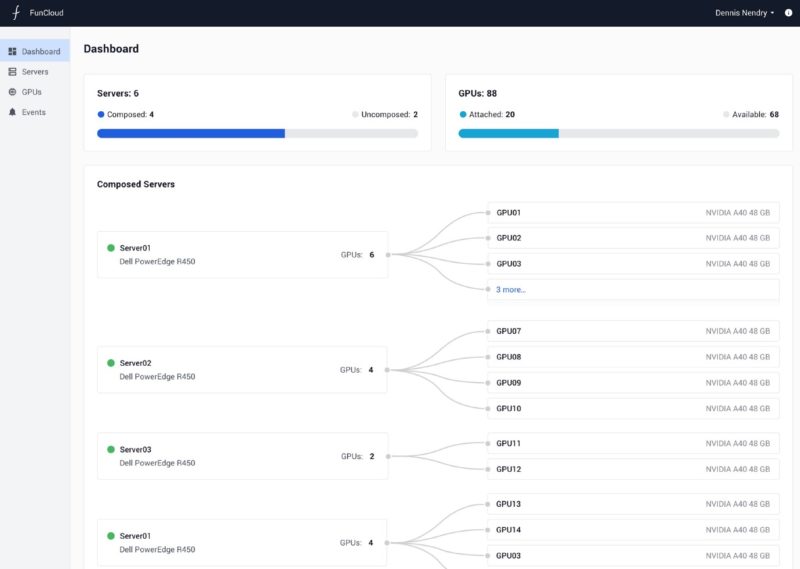

Once the GPUs are onboarded onto the physical network, they can be added to Fungible’s cloud, also known as the FunCloud.

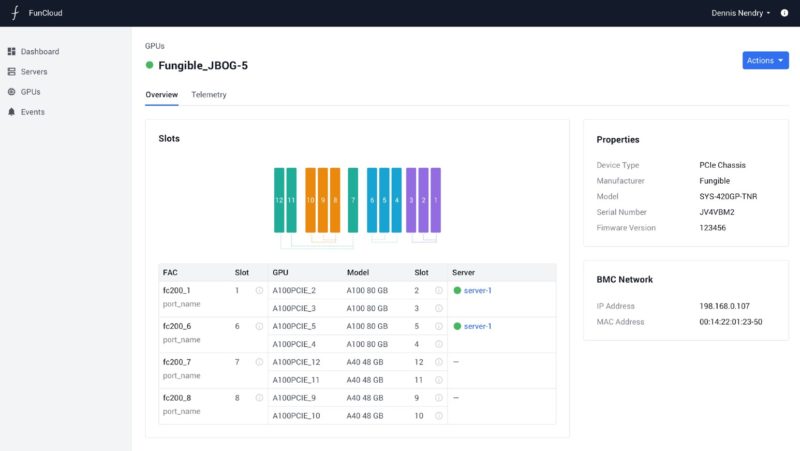

Here we can see the GPUs in the Supermicro SYS-420GP-TNR chassis. We can also see which slots the GPUs are in and which servers they are attached to.

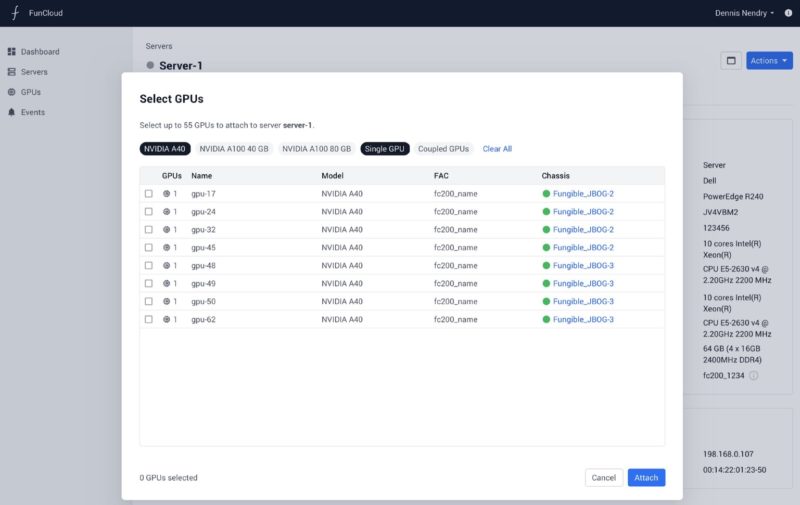

One can attach GPUs to servers, and here is a big point: the ratio can be more than 8:1. You will notice this screen says “select up to 55 GPUs to attach to server server-1”. That is a key concept. One can attach more GPUs to a server than it has PCIe slots.
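To illustrate why the slot count stops being the limit, here is a minimal sketch of a composable GPU pool. It is a hypothetical model, not Fungible’s composer API: the `GpuPool` class, its `attach`/`detach` methods, and the GPU naming scheme are all assumptions made for illustration. Attaching a GPU only updates an ownership map, so the ceiling for any one server is how many GPUs are free in the pool.

```python
from dataclasses import dataclass, field


@dataclass
class GpuPool:
    """Hypothetical model of a fabric-attached GPU pool (not Fungible's API).

    GPUs live in remote JBOG chassis; attaching one to a server only updates
    an ownership map, so the per-server limit is the number of free GPUs in
    the pool rather than the host's PCIe slot count.
    """

    free: list[str]
    attached: dict[str, list[str]] = field(default_factory=dict)

    def attach(self, server: str, count: int) -> list[str]:
        # Refuse requests larger than what is currently unassigned in the pool.
        if count > len(self.free):
            raise ValueError(f"only {len(self.free)} GPUs free, {count} requested")
        grabbed, self.free = self.free[:count], self.free[count:]
        self.attached.setdefault(server, []).extend(grabbed)
        return grabbed

    def detach(self, server: str) -> None:
        # Return every GPU held by the server to the free list.
        self.free.extend(self.attached.pop(server, []))


# Illustrative inventory: seven hypothetical 8-GPU chassis, 56 GPUs in total.
pool = GpuPool(free=[f"jbog{c}-gpu{s}" for c in range(7) for s in range(8)])
pool.attach("server-0", 1)
pool.attach("server-1", 12)  # more GPUs than a typical host has x16 slots
print(len(pool.attached["server-1"]), len(pool.free))  # 12 43
```

The design choice worth noting is that nothing in the model references the host’s physical slots; in a real deployment, the practical limits would instead be fabric bandwidth and what the host OS and drivers can enumerate.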

Fungible also has a dashboard to see how all of these services tie together.

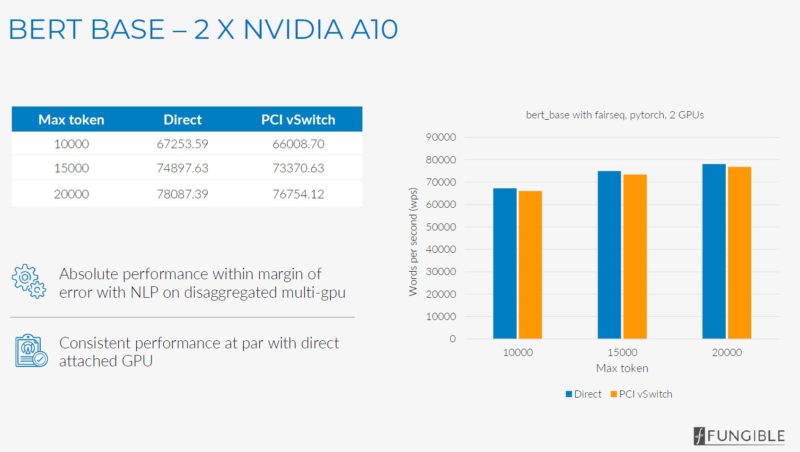

The company has a number of benchmarks, all essentially showing little performance impact from putting the GPUs on the network via Fungible DPUs and the PCI vSwitch versus direct-attach PCIe. Of course, the DPU solution has higher latency and less bandwidth than direct PCIe, and at some point that shows. If one attached 10 GPUs to a single DPU, there would be less physical bandwidth to go around.
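A quick back-of-the-envelope calculation shows how fast the bandwidth shrinks. This sketch assumes a single 100GbE link per DPU (the actual FC200 port configuration is not stated here), an even split across GPUs, no protocol or encapsulation overhead, and PCIe Gen4 x16 (about 31.5 GB/s per direction) as the direct-attach baseline. Under those assumptions, a 100GbE link carries at most 12.5 GB/s, so ten GPUs sharing it get roughly 1.25 GB/s each.

```python
def per_gpu_bandwidth_gbytes(link_gbits: float, links: int, gpus: int) -> float:
    """Evenly split aggregate Ethernet bandwidth across GPUs, in GB/s per GPU.

    Assumes an even split and ignores protocol/encapsulation overhead,
    so real-world numbers will be lower.
    """
    total_gbytes = link_gbits * links / 8  # 8 bits per byte
    return total_gbytes / gpus


# Assumed baseline: approximate usable PCIe Gen4 x16 bandwidth per direction.
PCIE4_X16_GBYTES = 31.5

for gpus in (1, 2, 4, 10):
    bw = per_gpu_bandwidth_gbytes(100, links=1, gpus=gpus)
    print(f"{gpus:>2} GPUs on one 100GbE link: {bw:5.2f} GB/s each "
          f"({bw / PCIE4_X16_GBYTES:.0%} of PCIe Gen4 x16)")
```

Even a single GPU on one 100GbE link sees well under half of a Gen4 x16 slot’s bandwidth, which is why workloads that are not bandwidth-bound between host and GPU are the natural fit.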

This looks like a cool technology concept.

Final Words

This type of approach is not necessarily designed for maximum-performance training. However, for many inferencing workloads, it makes sense. It provides a way to deliver composable GPUs over Ethernet using the Fungible DPU, and composability drives higher efficiency and utilization of resources.

Looking through the screenshots, it would have been nice to see more types of accelerators and storage shown. If Fungible has a “PCI vSwitch” delivered via its DPUs, it would be nice to see how it scales with FPGAs or other PCIe devices. We would also like to see the NVMeoF capabilities shown alongside the GPUs. A big reason to deploy DPUs is composability, and that applies not just to GPUs but also to storage (Fungible’s first product) and other devices. We hope that comes soon.

Note from the Editor

Patrick the editor’s note: Just a quick word on the timing of this one, since Cliff wrote it before we were briefed on AMD-Pensando. I had a discussion with Fungible last week, several days before the AMD Acquires Pensando for its DPU Future briefing and announcement. My feedback to the Fungible team on this announcement was straightforward. Announcing that Fungible has a DPU to pair with NVIDIA’s GPUs is a tough road given NVIDIA has its own DPU (although, in many ways, the current Fungible DPU has better features than its BlueField-2 contemporary). Also, Intel has its own IPU/DPU solutions. My feedback was that Fungible needed to push cross-vendor in its orchestration. Fungible can take any PCIe device, make it composable, and span larger physical data center footprints than current PCIe-based offerings. I just feel that for Fungible to be relevant, it needs to push beyond the NVIDIA market and get orchestration for other devices. Pensando was focused more on being a Nitro alternative, and AMD’s leadership likes to create platforms that are cross-vendor.

Since the Pensando acquisition happened between this briefing and the announcement, Fungible is now the big independent DPU player.