This week, we removed a hard drive that has been on the fritz for a long time in the hosting cluster. We usually overbuild the hosting cluster to tolerate failures over long periods of time. This WD 8TB hard drive, however, did something we were not expecting, so we thought it would be one to memorialize with a quick story.

Farewell Brave 8TB WD Hard Drive You Served STH Well

This 8TB WD hard drive appears to have been from a shucked WD My Book enclosure. It started life in January 2016 and eventually found its way to a longer-term backup server.

This little drive started in a Proxmox VE server in our hosting cluster, which was more of an experiment. We were using Proxmox VE with ZFS as a storage target for other Proxmox VE hosts in the cluster as a point of nightly backups in the data center. Every so often, it is nice to have a local backup as well as one that is offsite, just given the bandwidth and latency differences of having something in the same data center.

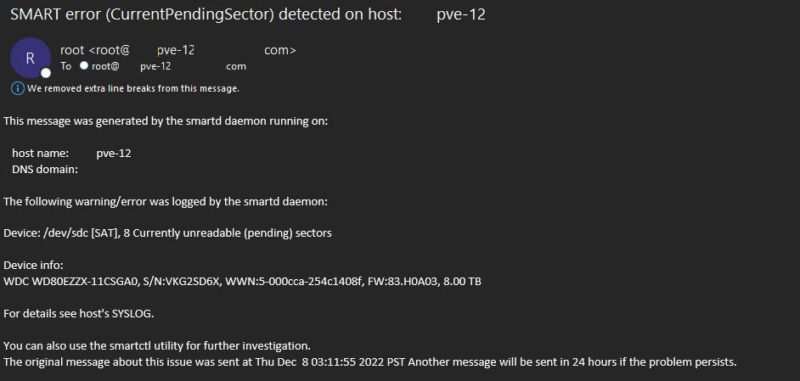

This drive was actually shown on STH in its own piece back in December 2022. If you look at the model number and serial, it was actually a drive we used in our Proxmox VE E-mail Notifications are Important piece at the time.

Since December 2022, Proxmox VE has sent around 497 notification e-mails about this drive. ZFS Scrub events, Pending sectors, increases in offline uncorrectable sectors, and error count increases all triggered SMART data notifications. Still, the drive continued on in its ZFS RAID 1 mirror.

Finally, this week, I managed to visit the data center. The front desk was undergoing a shift change, but they recognized me, telling me they just watched the San Francisco 49ers Levis Stadium data center video. They also told me it had been a long time since I was there. We checked the logs, and the last time I was in the data center was May 18, 2020, with a full pandemic mask on. It is time to do a rip-and-replace upgrade here, but I wanted to replace this drive in the meantime.

A few fun bits, 59830 hours were logged on this particular drive. Meaning it had a power-on time of around 6.83 years. Not too bad at all.

Since this was just a backup server for a few VMs, it did not use much storage. The 8TB RAID 1 array only had 4.15T of data. It was swapped with a newer 20TB Seagate drive for those wondering. Here is the resilver time:

scan: resilvered 4.15T in 09:29:16 with 0 errors on Wed Feb 21 05:07:36 2024

That is one of the reasons we tend to use a lot of mirrored arrays in our hosting cluster. The rebuild time for 4.15T was only around 7.3G/ min. If we had a larger RAID-Z array and more data, it is fairly easy to see how one can get to a day or more of having no protection from another drive failure other than having to restore from offsite backups.

Final Words

Of course, without additional layers of redundancy, we would not have let this drive sit in a degraded state for almost fifteen months. It was also just a fun one to watch and see what would happen. In the end, this shucked 8TB WD drive lasted 6.83 years in service before being retired. It will have contributed to multiple STH articles during its lifespan.

The fun part of this is twofold. First, it had been almost four years since I had visited this location. This was only one of two active hard drives, and we did not have any Dell or HPE server gear installed, so failure rates have been very low. The model has been:

- Use flash.

- Overbuild

- Then let it sit until the next refresh

That is a very different model than you see online when people talk about having to visit servers and colocation facilities constantly.

Perhaps the most fun part about this is that it means we are now gearing up for a big spring refresh!

With SATA I’ve seen a drive go bad enough it prevented the firmware from posting. This is what I thought the unexpected result would be for your failing 8TB drive.

I read the article twice and tried to find the unexpected behind the introductory remark that “This WD 8TB hard drive, however, did something we were not expecting,”

What unexpected thing did the 8TB drive do?

Definitely seen SATA drives go so bad that it causes the uefi to indeterminably hang on

Usually Seagates.

Usually those drives die within two months after you start seeing multiple bass sectors. We’ve probably gone through 1500 8tb WD HD and had abt 200 fail before we pulled at 5 yrs.

The building a co-lo to fail in place is what I’d like to hear more on. Imagine doing costing for initial capital outlay and then just labor for refreshes. It’d make cloud and on Prem so much easier to model. You made it 42 months without going so that’s a big lesson to everyone here.

I’ve got 10 x 2.5″ 5GB Seagate drives in a home made raid setup (originally using RPI3 but upgraded to RPI4 a few years back). All the drive’s are SMART reporting over 60000 hours. As this setup is used for a home media center they take quite a pounding day in and day out. I’m honestly surprised they’ve lasted as long as they have. However, I’m about to retire these drives with 2 x 20TB WD Red Pro and 2 x 20TB Seagate IronWolf Pro. The Seagate’s are really noisy compared to the quiet purr of the WD’s. Only annoying thing is the WD’s have this ticking sound, which I understand is something to do with self-lubricating. Here’s hoping the WD’s will meet or beat the 60000 hour mark.

Does Dell and HPE server gear kill hard drives faster?

“This was only one of two active hard drives, and we did not have any Dell or HPE server gear installed, so failure rates have been very low.”

Mostfet – I am not entirely sure why, but we have had clusters of Dell / HPE gear from the same era and those have had higher failure rates. Usually, that is due to using the HBA/ RAID card and vendor drives. I think they kick things out much faster and there are additional points of failure.

Patrick, I found a typo in your “Final Words” section: “The fun part of this if twofold.”

Thanks for the article and sharing the story this drive. I’ve bought several used drives off Amazon using DiskPrices tracker for building backup arrays for my ZFS pool(s) and have had remarkable success with secondhand spinning rust. Blasphemy to some I know, but if it’s stupid and it works it’s not stupid. Take care!

This is so weird … I decided to do a badly needed electronics clean up the last few weeks. It’s still ongoing. A lot of stuff had been gathered together by my late father who died in 2015. During those early `I’m an orphan now` days I sort of let myself go. But I was a drive shucker and I had 1 mybook and 1 seagate whatever lying around that I bought in 2016-2017 to replace older 4TB drives in my still running Synology DS410*. So I carefully removed the enclosure yesterday of those 2 drivers and found out I actually have an 8TB CMR (same type you showed and I used your article on how to remove it from the enclosure) and a 5TB CMR drive lying around. As well as a 275 gb never used Crucial SSD drive.

* Btw the 4 drives in use are now clocking +70-80.000 hrs Power On Time and only one is giving me doubts because of 3 reconnections in the month of november 2 years ago). No smart 5, 187, 188, 197,198, … issues at all.