At OCP Summit 2019, one of the big stars was Facebook’s new switch. The Facebook Minipack is a modular switch that the company worked with Edgecore Networks (a division of Accton) and Cumulus Networks to open up and bring to market. At OCP Summit 2018, the company showed off the Facebook Fabric Aggregator. As Facebook expands its data center footprint and faces daunting network growth, it needs higher-density switches to create flatter and more scalable networks. Facebook has another challenge: the availability of components like optical transceivers, which the Minipack helps to alleviate.

Facebook Minipack 32x 400GbE or 128x 100GbE Modular Switch

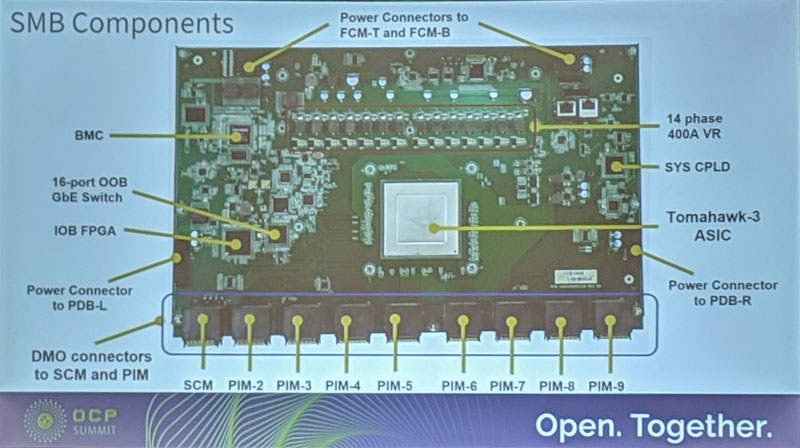

The Facebook Minipack is a modular switch, following a design approach the rest of the industry adopted years ago. It is based on the Broadcom StrataXGS Tomahawk 3 switch silicon, an ASIC capable of line-rate 12.8Tbps Layer 2 and Layer 3 switching. The Broadcom Tomahawk 3 is perhaps the most broadly deployed merchant switch chip at this speed. That 12.8Tbps is commonly presented as 128x 100GbE or 32x 400GbE interfaces. With the Minipack, Facebook does not need to choose.

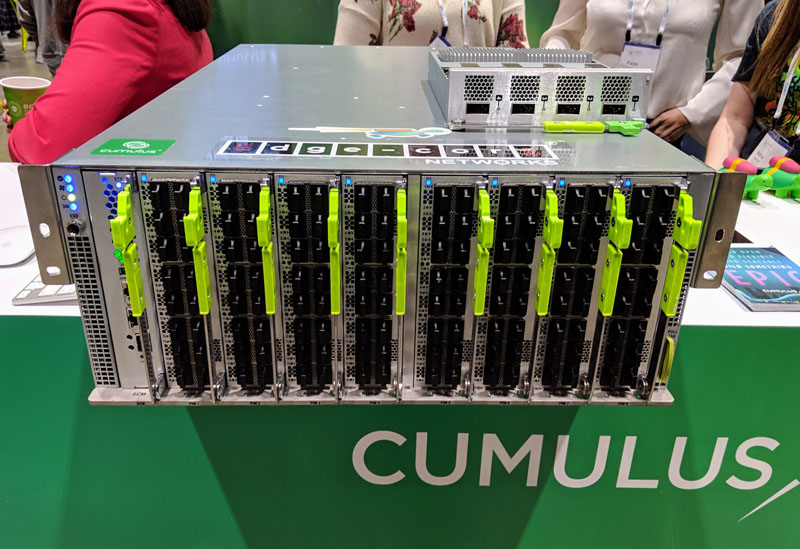

Along the front of the Facebook Minipack are eight port interface module (PIM) slots. Launch PIM options available for the Edgecore Minipack switch are the PIM-16Q with 16x 100G QSFP28 ports and the PIM-4DD with 4x 400G QSFP-DD ports. We saw QSFP-DD in our in-depth Dell EMC PowerEdge MX review. Using eight PIM-16Q modules yields 128x 100GbE, and using eight PIM-4DD modules yields 32x 400GbE.
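The port math above can be sketched in a few lines of Python. This is only an illustration: the per-PIM port counts, the eight slots, and the 12.8Tbps figure come from the article, while the names `PIM_PORTS` and `chassis_summary` are hypothetical, and the mixed-PIM case assumes both module types can share a chassis.

```python
# Illustrative sketch; names are hypothetical, figures are from the article.
PIM_PORTS = {
    "PIM-16Q": (16, 100),  # 16x 100GbE QSFP28 ports
    "PIM-4DD": (4, 400),   # 4x 400GbE QSFP-DD ports
}
PIM_SLOTS = 8                # PIM slots along the front of the chassis
ASIC_CAPACITY_GBPS = 12_800  # Tomahawk 3 line-rate capacity


def chassis_summary(pims):
    """Return ({port_speed_gbps: port_count}, total_gbps) for a list of PIM names."""
    if len(pims) > PIM_SLOTS:
        raise ValueError(f"only {PIM_SLOTS} PIM slots are available")
    ports = {}
    total_gbps = 0
    for name in pims:
        count, speed = PIM_PORTS[name]
        ports[speed] = ports.get(speed, 0) + count
        total_gbps += count * speed
    return ports, total_gbps


if __name__ == "__main__":
    for config in (["PIM-16Q"] * 8,
                   ["PIM-4DD"] * 8,
                   ["PIM-16Q"] * 4 + ["PIM-4DD"] * 4):  # assumed mixed config
        ports, total = chassis_summary(config)
        print(ports, total, total <= ASIC_CAPACITY_GBPS)
```

Each PIM type adds up to 1.6Tbps of front-panel bandwidth (16 x 100 or 4 x 400), so any fill of the eight slots stays within the 12.8Tbps the ASIC can switch.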

Packed in a 4U form factor, the Minipack allows Facebook to use less space than its previous Backpack-based platform. Using one higher-capacity switch chip also means the company can reduce the number of switch ASICs in production. A direct benefit is lower power consumption and a flatter network topology.

Delving Into Minipack Hardware

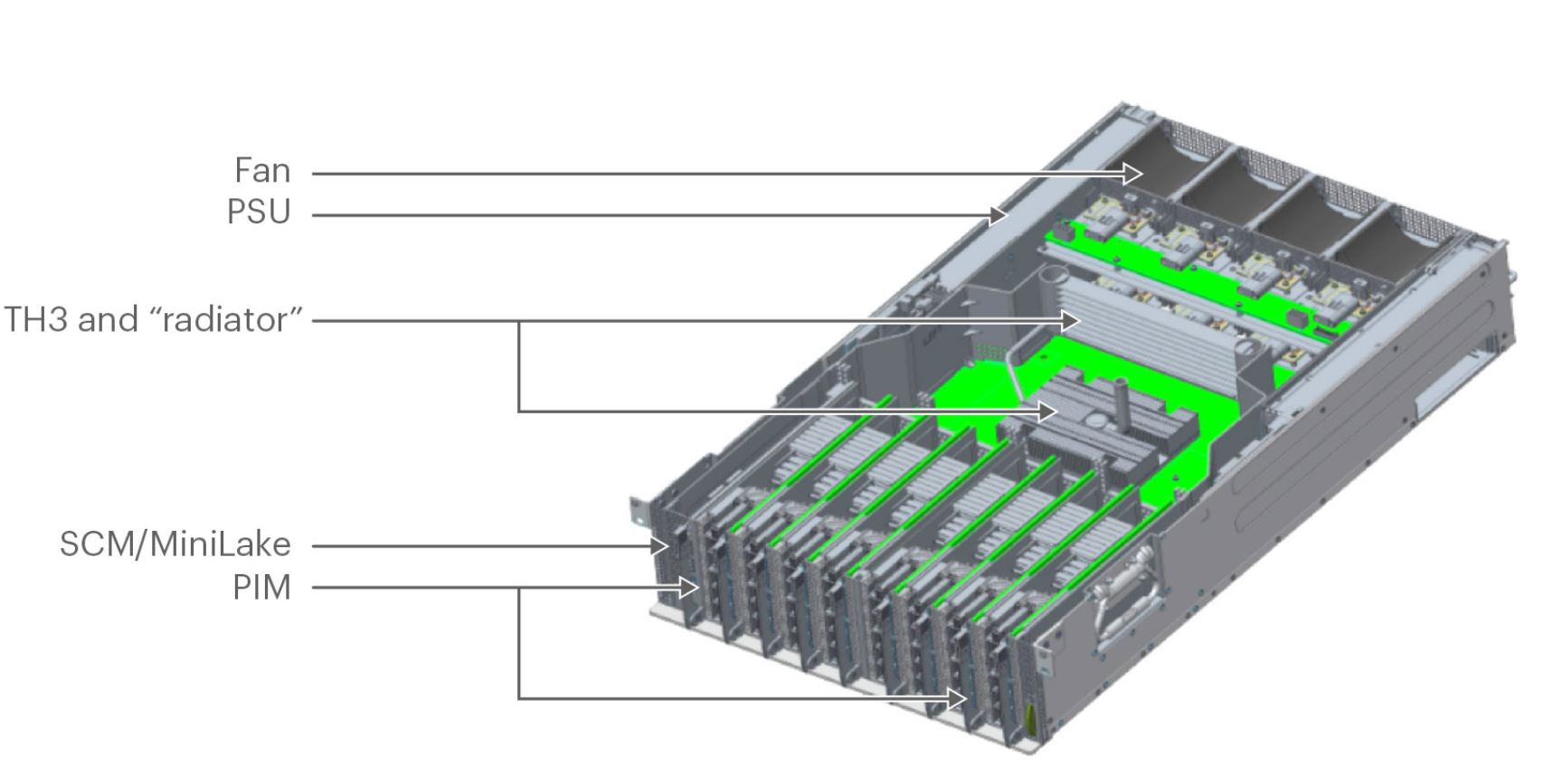

Here is the overview of the Minipack switch. The mechanical drawing looks interesting, but having hands-on time with the hardware showed a lot more about the design.

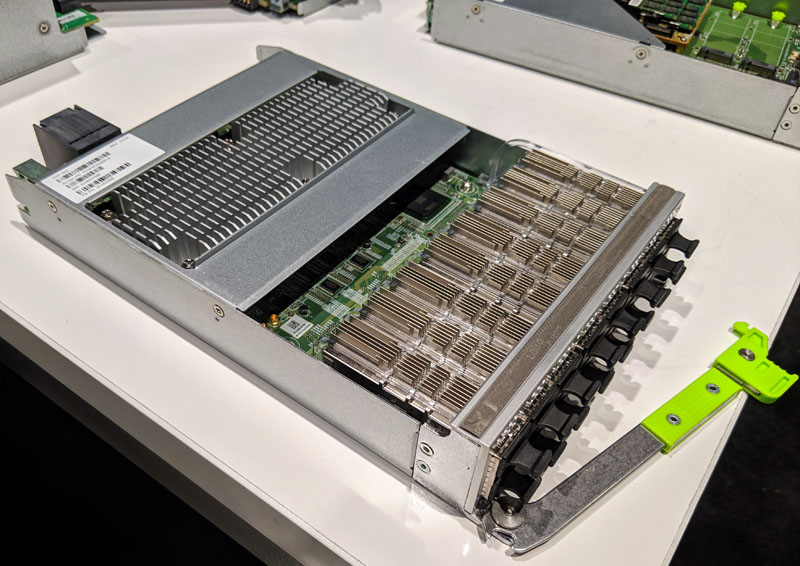

Most of the PIMs we saw at the show were the PIM-16Q, the 16x 100GbE module. Here is what one looks like, and you can see the amount of heatsink hardware these modules have.

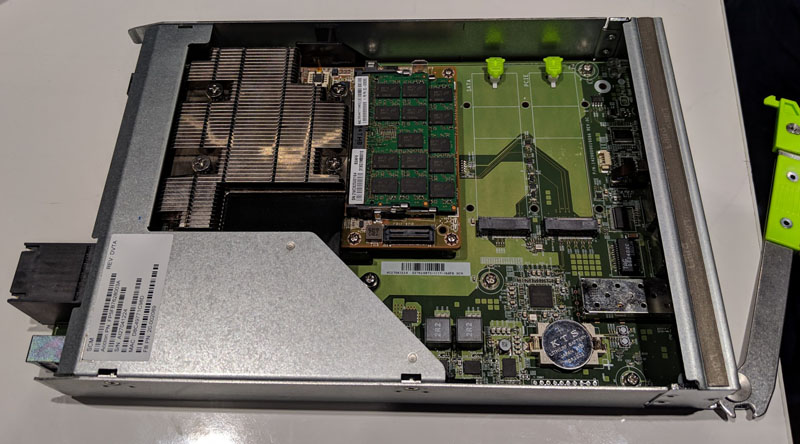

The other module type on display was the Minipack switch control module. You can see this is a Broadwell-DE design with two DDR4 SODIMMs and up to two M.2 SSDs.

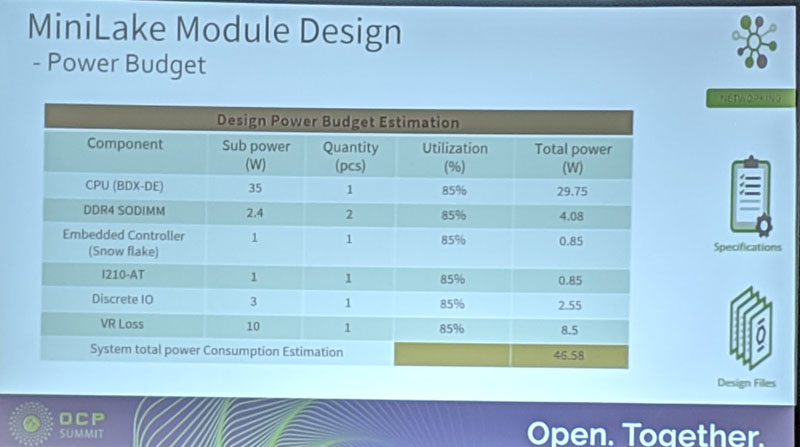

Here is more of a breakdown of the MiniLake SCM. This control module is estimated to use around 47W of power.

These modules plug into a dense connector that allows plenty of airflow through the 4U Minipack chassis.

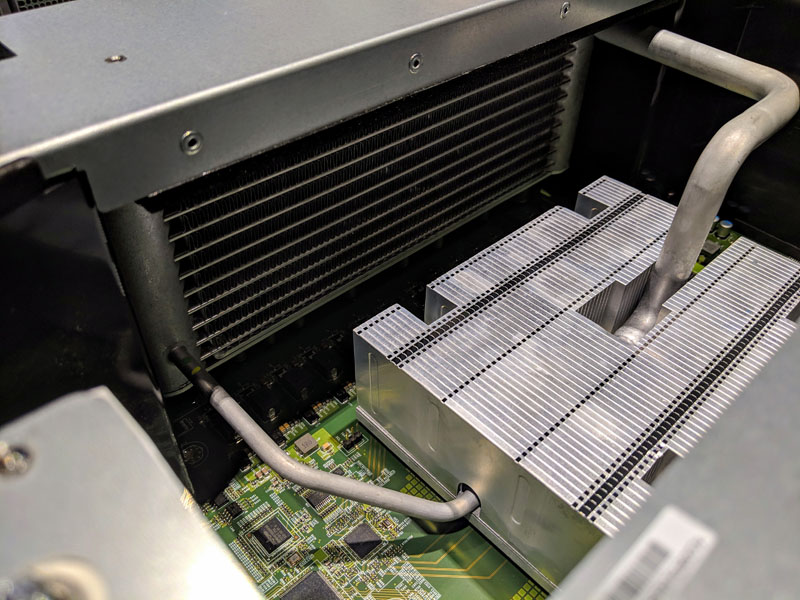

Behind the PIMs, one can see a large heatsink with pipes running to an even larger heat exchanger. This is an absolutely massive cooler, given that it sits in a 4U chassis. It is far larger than the CPU coolers we see.

Here is the switch motherboard diagram labeled and without the cooling fixtures.

Pulling air through the chassis are eight of Facebook’s standard fan modules.

There are also four power supplies. Facebook is having newer power supply models made for 277V operation.

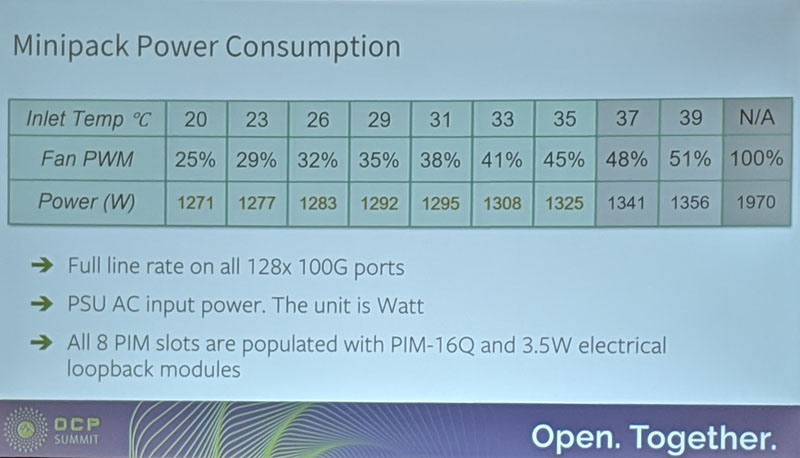

To give some sense of how much power this switch uses, here is the power consumption curve. The chart shows just how much power the fans can potentially consume in the chassis.

Overall, this solution is considerably more compact than Backpack. It should use less power, and should be significantly lower cost as well. We are told you can get a Backpack model with Cumulus for under $80,000.

Going Beyond Facebook

One of Facebook’s big pushes on the open networking side is to bring switches to others outside of the company. Here, Facebook is partnering with Edgecore (who helped develop Minipack) and Cumulus Networks to bring this to more organizations. Cumulus Linux is a front runner in the software-defined networking stack with broad industry support and over 100 switch models supported.

I had the opportunity to chat with Cumulus co-founder JR Rivers at OCP Summit. When I asked him about porting Cumulus to the platform, he said it was a relatively easy affair. Cumulus works a lot with Edgecore, so the transition was relatively fast after the ASIC support was completed.

For those looking for a larger vendor supporting a similar switch, the Arista 7368X4 runs EOS, starts at “under $600 per port,” and is shipping now.

You can read more about the Facebook process creating the Minipack here.

I’m a little ignorant of switch design so I’m confused on the purpose of the mini Xeon server built into this. Can someone tell me what purpose this Xeon serves that isn’t just built into the Tomahawk ASIC?

Modern switches have control processors beyond the switch ASIC. That is what this CPU is doing here.