Facebook’s Vijay Rao gave the company’s Open Compute Summit 2017 keynote. Facebook was a founding member of the Open Compute Project, so its keynote is one of the most anticipated of the Summit.

7.5 Quadrillion Web Server Instructions Per Second

As you may expect, Facebook gave a number of jaw-dropping statistics. Here is the one that resonated: Facebook’s web servers have a capacity of 7.5 quadrillion instructions per second. That figure covers only the web servers; it is not Facebook’s total compute capacity.

Just take a second to think about how big that number is.
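To put the figure in perspective, here is a rough back-of-the-envelope sketch. Only the 7.5 quadrillion number comes from the keynote. The per-core clock speed and instructions-per-cycle values are purely hypothetical placeholders, not anything Facebook disclosed, and the function name is ours.

```python
# Figure quoted in the keynote: 7.5 quadrillion instructions per second.
WEB_TIER_IPS = 7.5e15


def equivalent_cores(total_ips: float, clock_hz: float, ipc: float) -> float:
    """Return how many cores would be needed to sustain total_ips,
    given a per-core clock (Hz) and sustained instructions per cycle."""
    return total_ips / (clock_hz * ipc)


# ASSUMPTION: a hypothetical core running at 2.0 GHz that sustains
# 1 instruction per cycle. Real web workloads will differ.
cores = equivalent_cores(WEB_TIER_IPS, clock_hz=2.0e9, ipc=1.0)
print(f"{cores:,.0f} hypothetical cores")
```

Under those assumed values the web tier alone works out to roughly 3.75 million cores. Change the clock or IPC inputs and the count moves proportionally, so treat it as a sense of scale rather than an inventory.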

The Facebook Servers

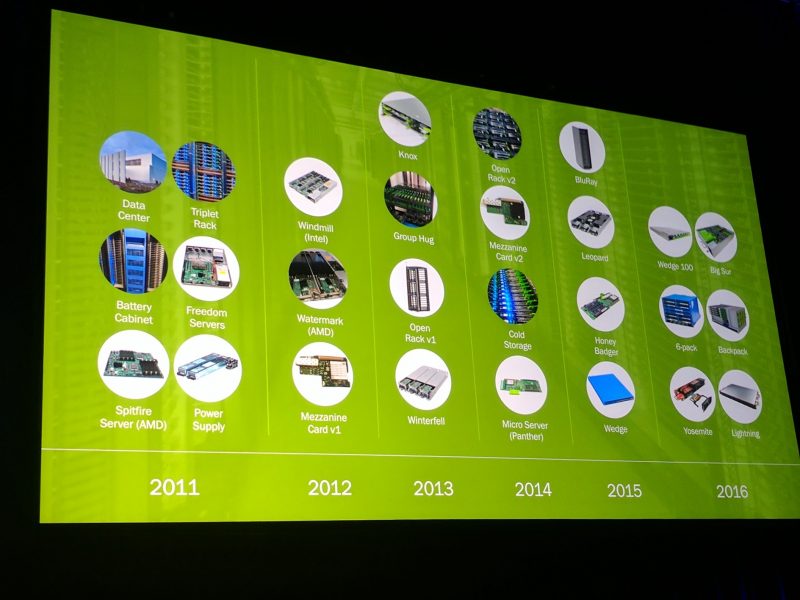

Facebook first highlighted its submissions to the OCP project over the years.

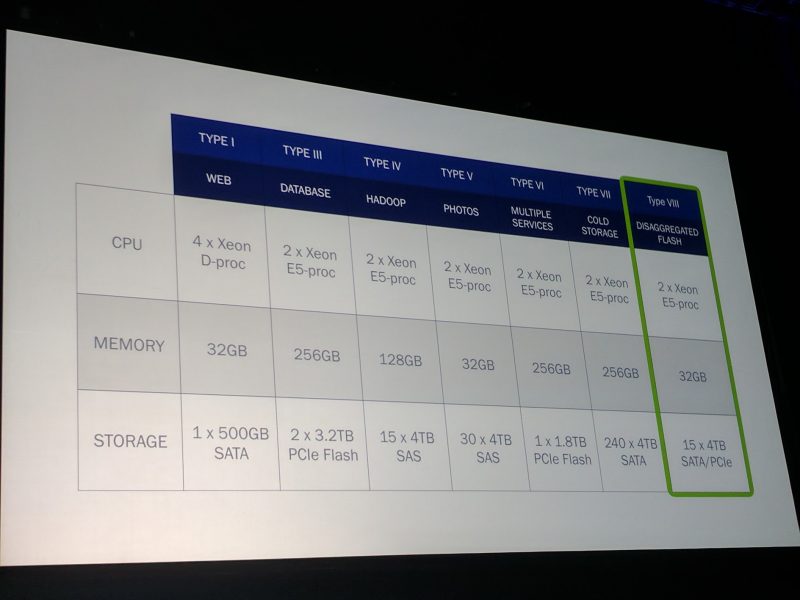

It also showed the server types its engineers can choose from, Type I through Type VIII.

Although the CPU and storage figures vary widely, Facebook appears to be using 32-256GB of RAM per server. Across these machine types, that seems to be much lower than what is typical in the enterprise segment.

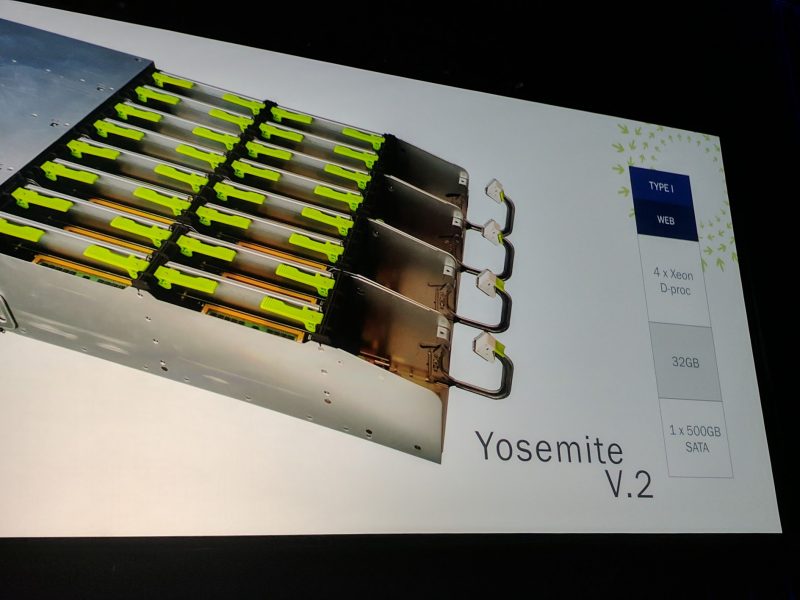

Yosemite V.2 “becoming a workhorse at Facebook”

At STH we have more reviews of Xeon D hardware than anywhere else. Facebook is clearly enamored with the platform, describing Yosemite V.2 as “becoming a workhorse at Facebook.”

The new Yosemite V.2 hardware has the ability to add storage nodes into the chassis. When you consider the 7.5 quadrillion instructions per second figure, and that the Yosemite platforms are the “workhorse” web servers, one can understand why Intel Xeon D CPUs were in tight supply.

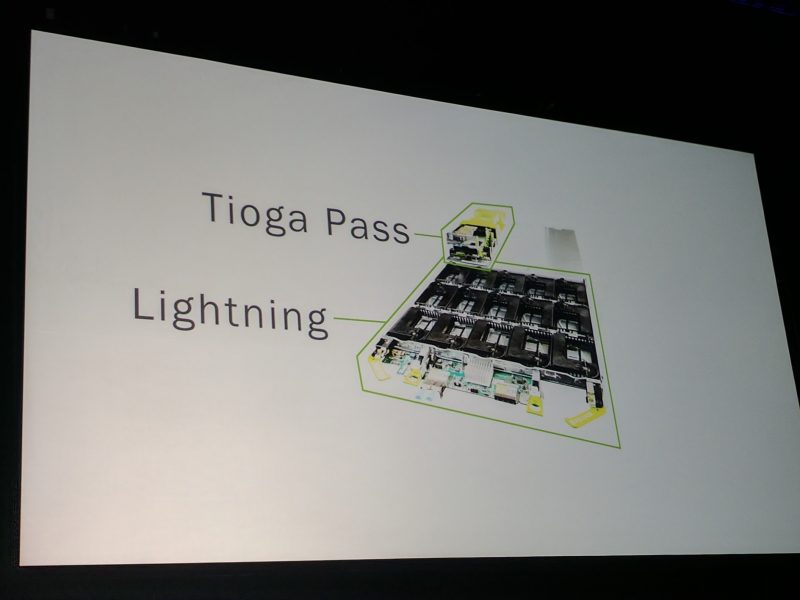

Tioga Pass

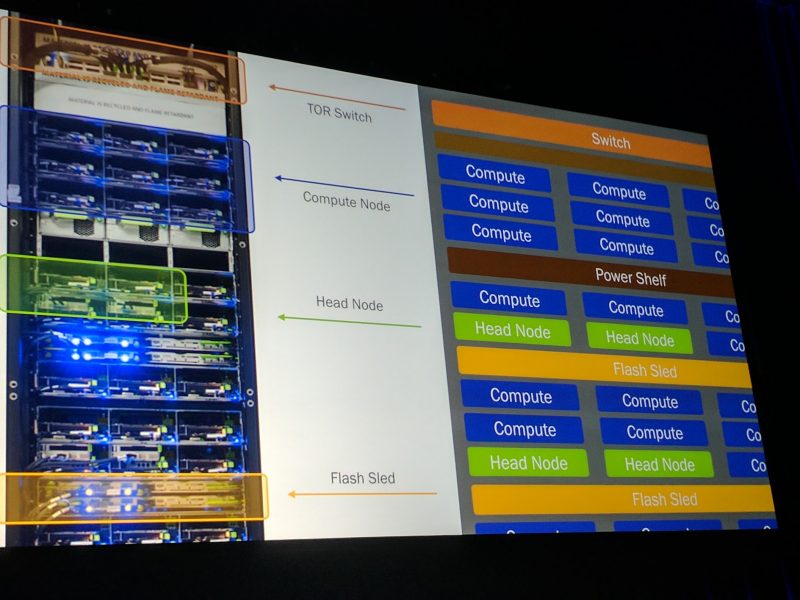

Here is what an example Facebook OCP rack looks like.

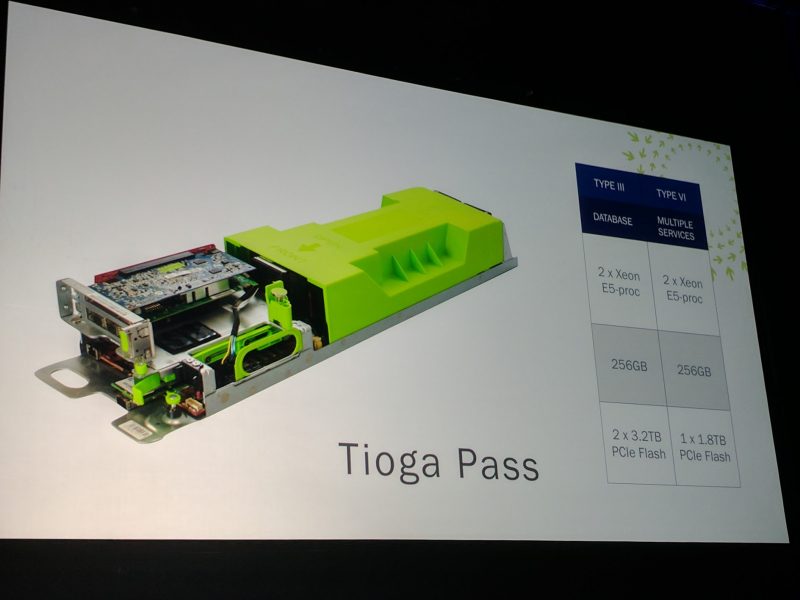

Tioga Pass is the name for the head nodes and compute nodes using Intel Xeon E5 processors. Here are the configurations for databases and for other services.

These Tioga Pass compute nodes can be used as head nodes to connect a large amount of flash via Lightning JBOF arrays. We covered the Lightning JBOF during the Intel keynote.

Facebook was the major early customer for Fusion-io. Facebook is clearly using lots of low-latency flash in its compute nodes, and the Tioga Pass server module is the backbone.

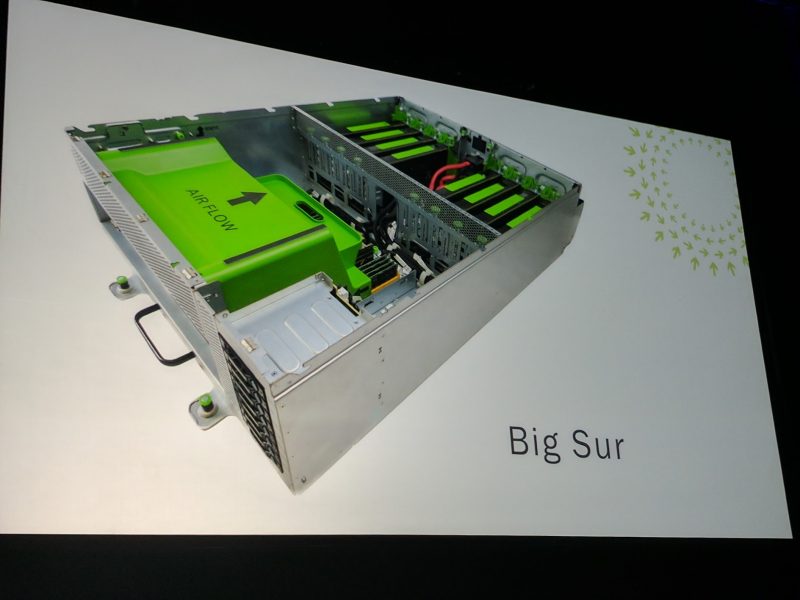

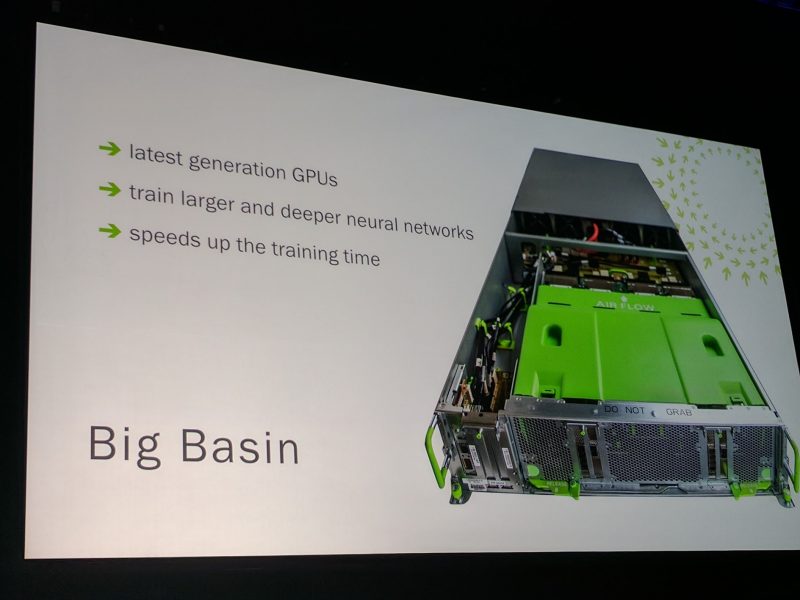

Big Basin for AI and Machine Learning

Facebook’s team is doing a ton of research in the machine learning/AI space. To power this, Facebook previously used the Big Sur platform produced by QCT.

Big Basin is an upgrade with newer GPUs.

We have a feeling that NVIDIA loves Big Basin platforms.

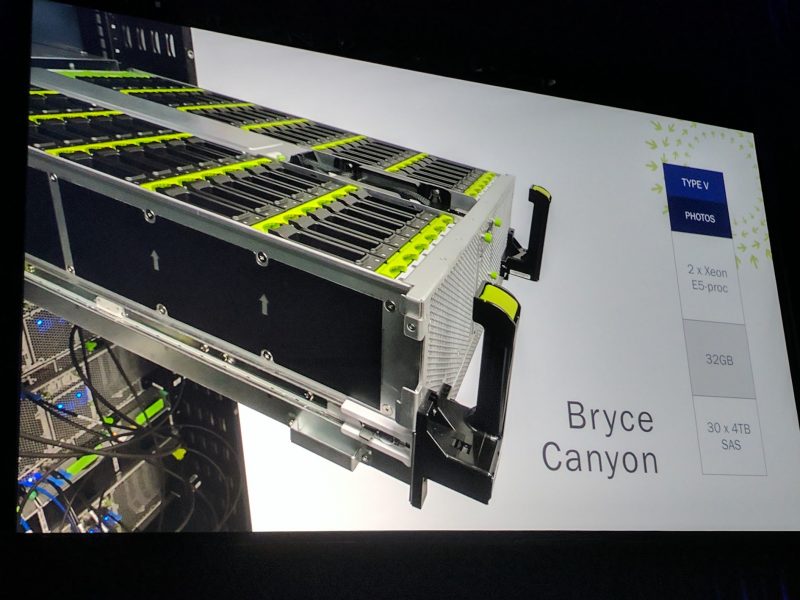

Bryce Canyon for Storage

Facebook needs somewhere to store all of the images posted. The Bryce Canyon storage platform uses dual Intel Xeon E5 CPUs and 32GB of RAM alongside 30x 4TB disks.

We are a bit surprised that Facebook is still using 4TB drives.

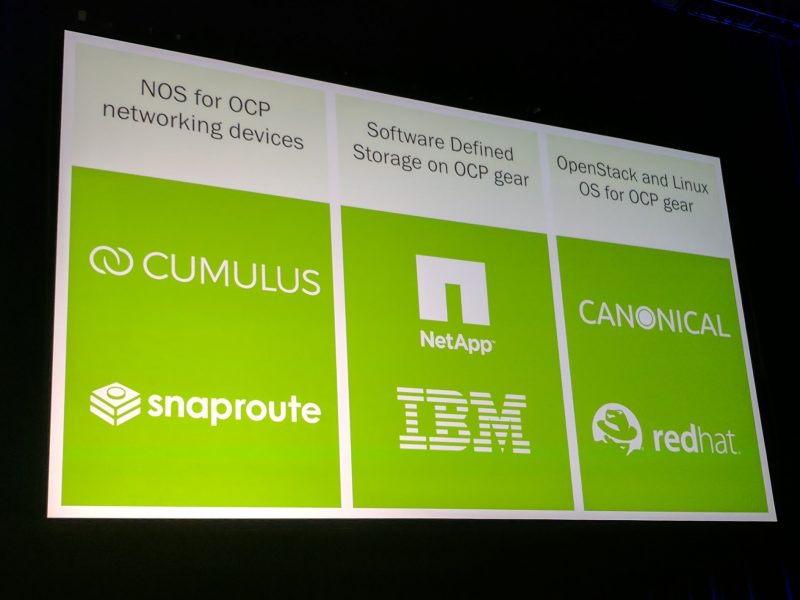

Facebook OCP Software

Facebook took the time to cover the main software vendors in the OCP world. We covered the major announcement with NetApp and IBM during the main keynote.

MyRocks

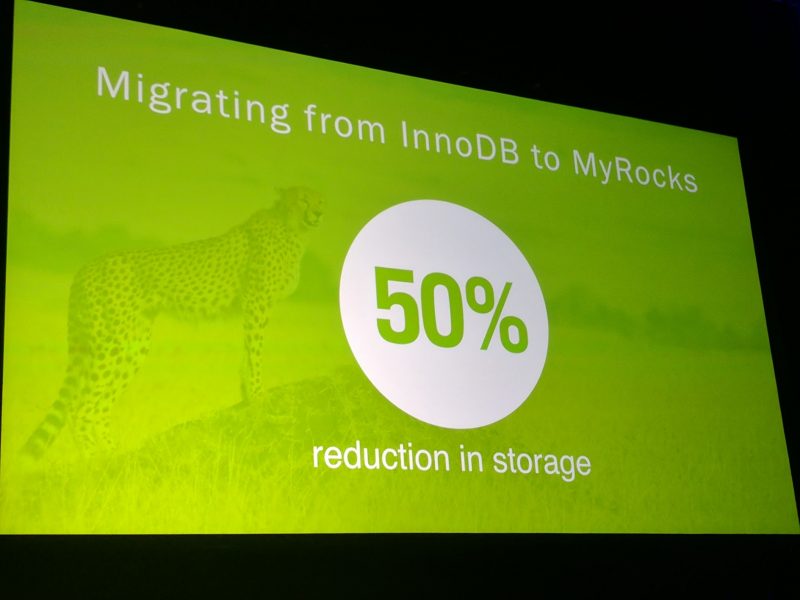

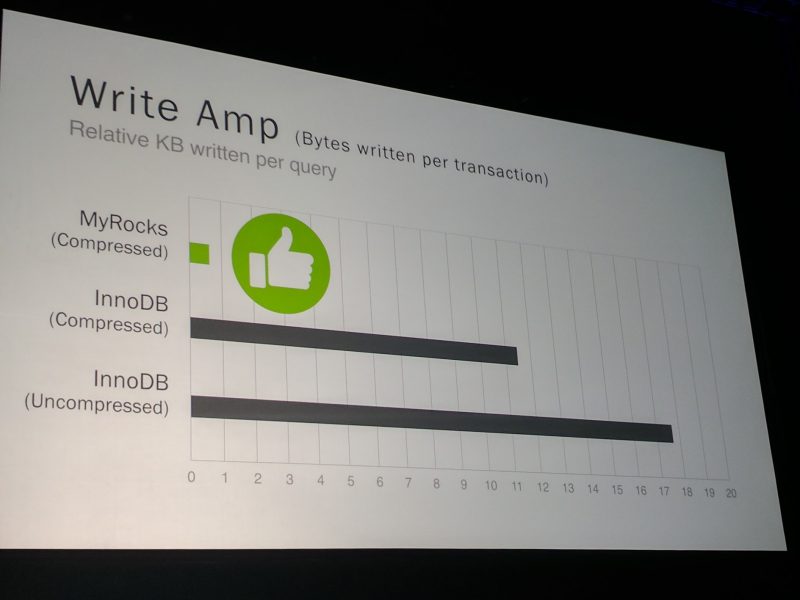

What do you get when you combine MySQL with RocksDB? Facebook’s MyRocks project. Here are some of the figures that Facebook is touting for MyRocks, which are extremely impressive:

MyRocks is giving Facebook a lower storage footprint, which translates into huge dollar savings.

One of the biggest impacts is the lower write amplification. Lowering write amplification means that Facebook will need less write endurance for a given workload, preserving capital dollars and NVMe flash drives.
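The link between write amplification and endurance can be sketched with some simple arithmetic. The numbers below are hypothetical and are not Facebook’s figures. The function name, the drive’s rated endurance, and the daily write volume are all assumptions chosen only to illustrate the relationship.

```python
def drive_lifetime_years(rated_endurance_tb: float,
                         app_writes_tb_per_day: float,
                         write_amplification: float) -> float:
    """Estimate years until a drive's rated write endurance is used up.

    write_amplification is the number of bytes the database writes to
    the drive for each byte the application asks it to store.
    """
    drive_writes_tb_per_day = app_writes_tb_per_day * write_amplification
    return rated_endurance_tb / drive_writes_tb_per_day / 365


# ASSUMPTION: a hypothetical drive rated for 3650 TB written, serving
# 1 TB of application writes per day.
for wa in (10.0, 5.0):
    years = drive_lifetime_years(3650, 1.0, wa)
    print(f"write amplification {wa:g}x -> {years:.1f} years")
```

With these assumed inputs, halving write amplification from 10x to 5x doubles the estimated drive life from one year to two. The same workload could alternatively be served by drives with a lower endurance rating, which is where the capital savings would come from.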