The new Marvell Structera CXL family includes CXL Type-3 memory expansion devices with a few exciting twists. What makes the family more intriguing is that the company has another version, called a near-memory accelerator, with up to 16 Arm Neoverse V2 cores onboard. I open very few briefing decks where I immediately get excited. On the way back from the Olympics, I opened this announcement half asleep and felt that sense of excitement. Let us get into it.

Marvell Structera CXL Family

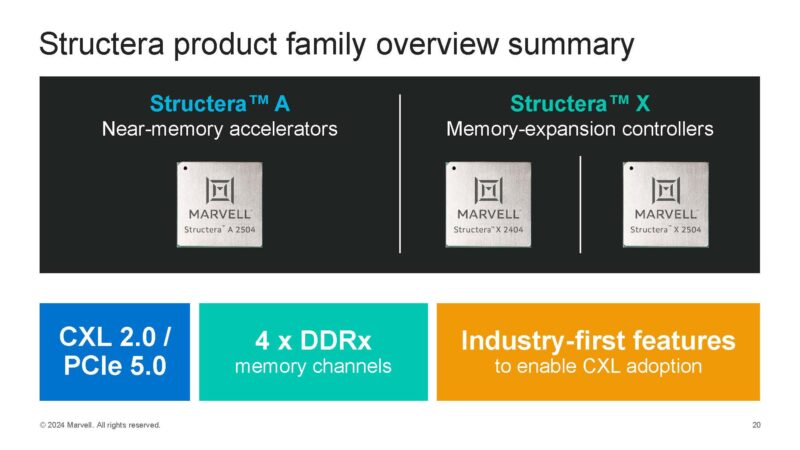

The two sides of the family currently are the Structera A and Structera X. The A feels like it is for accelerators and the X is for memory eXpansion.

These are CXL 2.0 and PCIe Gen5 devices, built on TSMC 5nm and designed for today’s servers.

Marvell Structera A 2504: CXL DDR5 Memory Accelerator with Arm

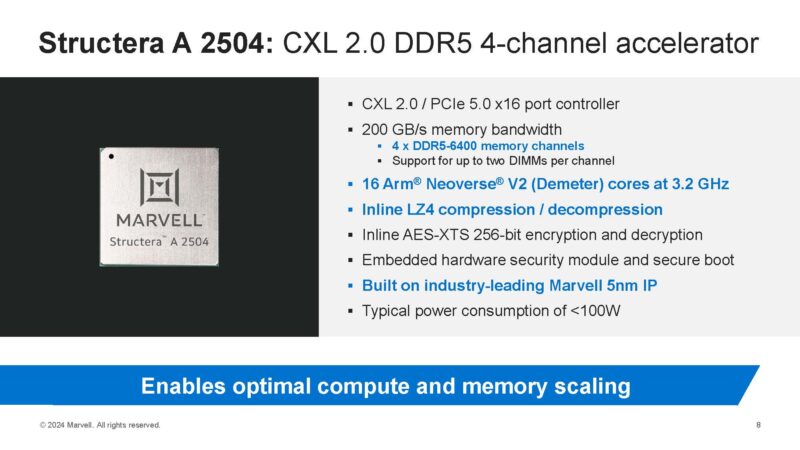

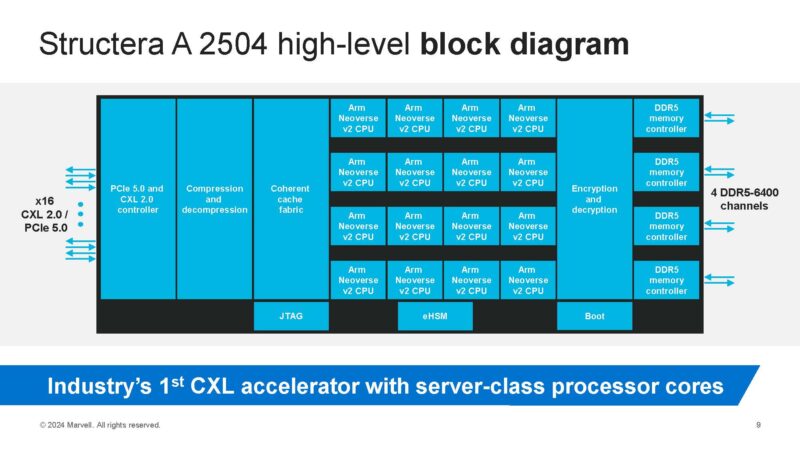

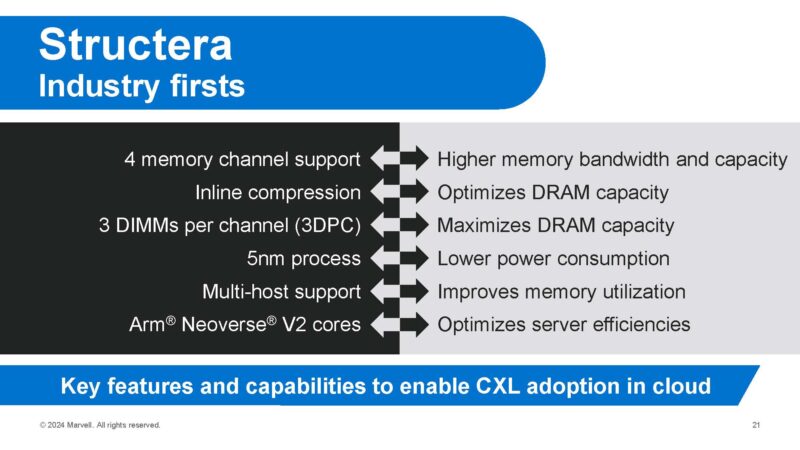

Let us start with the coolest one first. The Marvell Structera A 2504 is, at its heart, a CXL 2.0 memory expansion device, but with a huge twist. First, it can support up to four channels, not just two. Next, it has 16x Arm Neoverse V2 cores. Those are the same cores as NVIDIA Grace uses, so these are not low-power and low-performance cores.

Marvell also has built-in LZ4 compression and decompression to stretch the effective capacity of the memory. The memory itself can run at up to DDR5-6400.

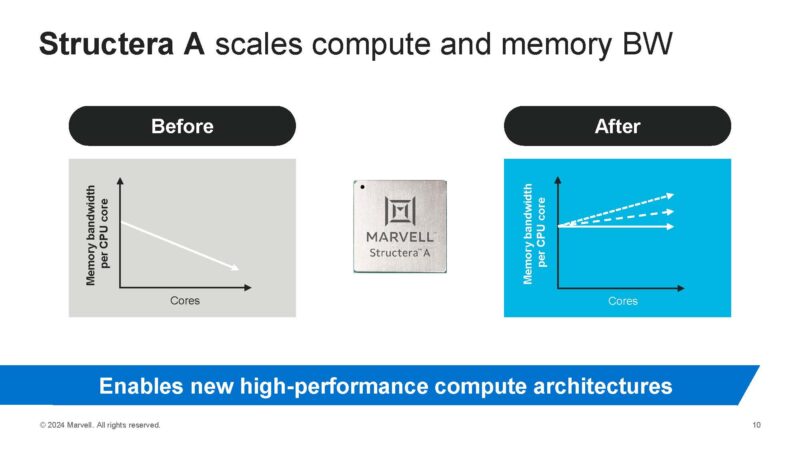

Of course, folks may wonder why one would want to put Arm cores on a memory expansion card. The basic idea is that scaling only cores leaves you with less memory bandwidth per core, while scaling only memory capacity does not add processing power. That is why some applications will want to scale both. If you saw our Memory Bandwidth Per Core and Per Socket for Intel Xeon and AMD EPYC piece, that demonstrates what is going on in the industry.

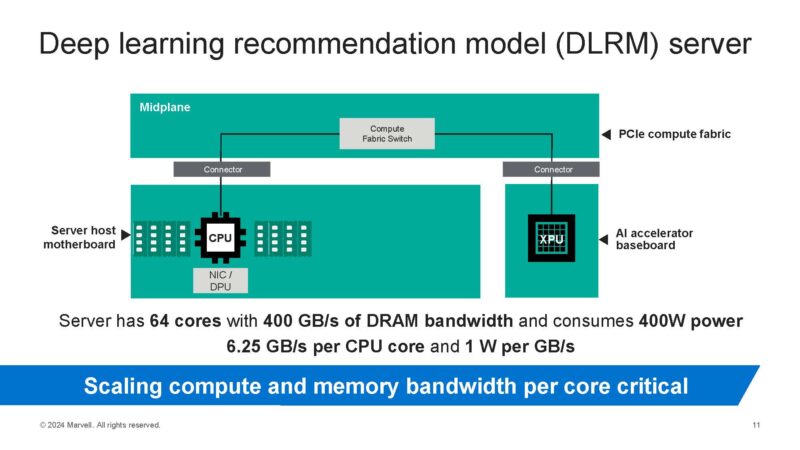

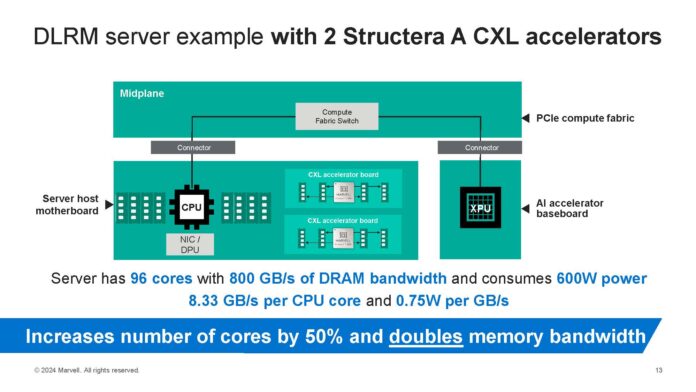

Here is an example of a deep learning recommendation model (DLRM) server. Here, we can see the host CPU with 64 cores, an 8-channel memory solution, and an XPU for AI acceleration. DLRM is still a huge space, even though folks often talk a lot about generative AI.

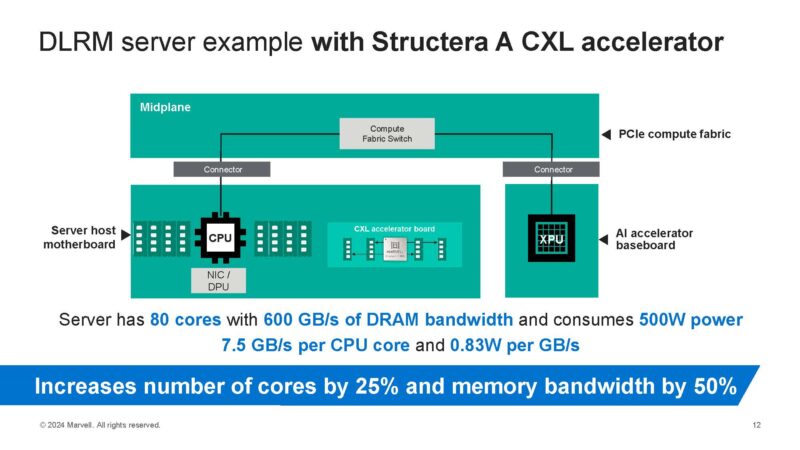

Adding one accelerator adds 25% more cores and 50% more memory bandwidth.

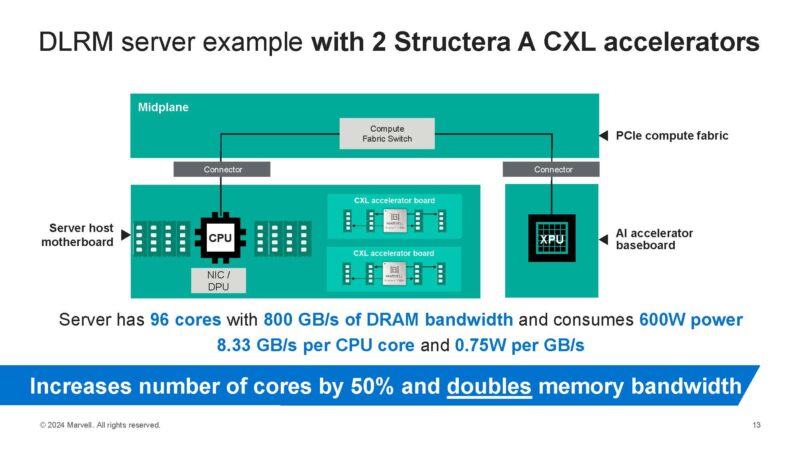

Adding two of these accelerators would add memory capacity and also double the memory bandwidth while adding 50% more cores (32 on cards plus 64 on the CPU).

The big advantage here is that the server gets more cores and more memory bandwidth that can scale by adding more CXL controllers.
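The percentages in the DLRM example follow from simple channel and core counting. Here is a minimal sketch of that arithmetic, assuming every memory channel contributes the same bandwidth whether it hangs off the host CPU or a CXL device. That is a simplification, since CXL-attached memory sits behind a link with its own limits. The `Node` class and `scaling` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Node:
    cores: int
    memory_channels: int


# Figures from the example above: 64-core host with 8 memory channels,
# and a Structera A with 16 Arm cores and 4 memory channels.
HOST = Node(cores=64, memory_channels=8)
STRUCTERA_A = Node(cores=16, memory_channels=4)


def scaling(host: Node, accel: Node, count: int) -> dict:
    """Return core and bandwidth gains from adding `count` accelerators.

    Assumption: bandwidth scales with channel count, so every channel
    is treated as equal regardless of where it is attached.
    """
    extra_cores = accel.cores * count
    extra_channels = accel.memory_channels * count
    return {
        "total_cores": host.cores + extra_cores,
        "core_gain_pct": 100 * extra_cores / host.cores,
        "total_channels": host.memory_channels + extra_channels,
        "bandwidth_gain_pct": 100 * extra_channels / host.memory_channels,
    }


if __name__ == "__main__":
    for n in (1, 2):
        print(n, scaling(HOST, STRUCTERA_A, n))
```

With one accelerator this gives 25% more cores and 50% more channels. With two it gives 50% more cores and twice the channels, matching the figures above.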

We are just going to note here that Marvell makes chips for hyper-scale clients. The example above is specific enough that it does not feel like Marvell is making this as a product, hoping that someone configures it in an HPE ProLiant one day.

Still, not all servers and applications will want to scale cores like this. For that, Marvell has new CXL memory expanders.

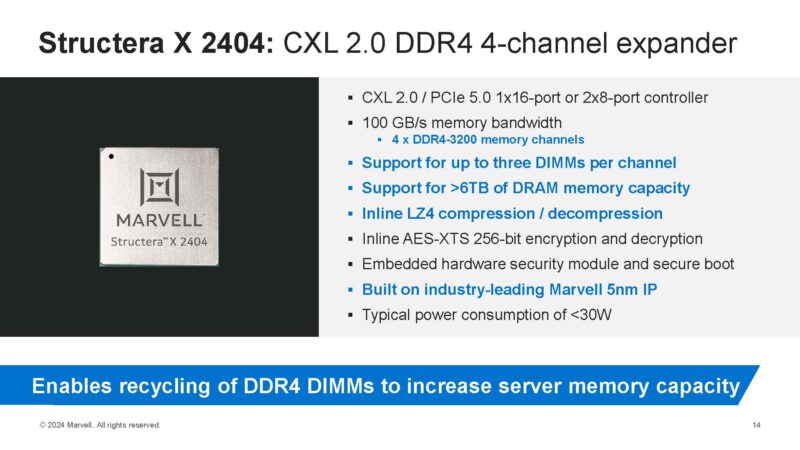

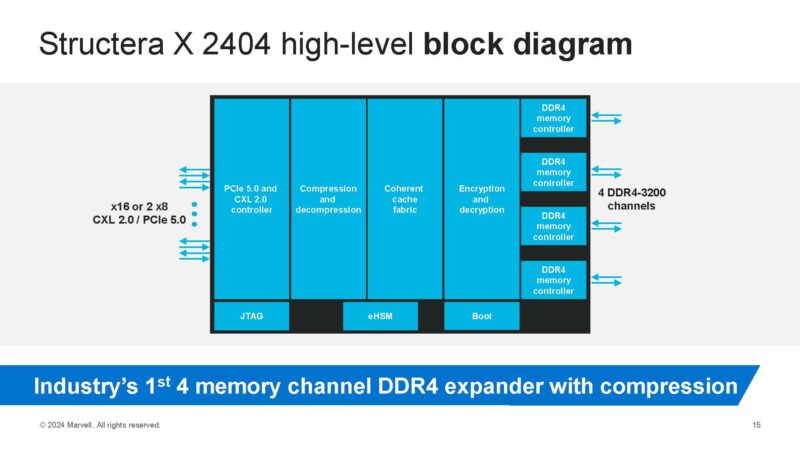

Marvell Structera X 2404: CXL 2.0 DDR4 4-channel Memory Expander

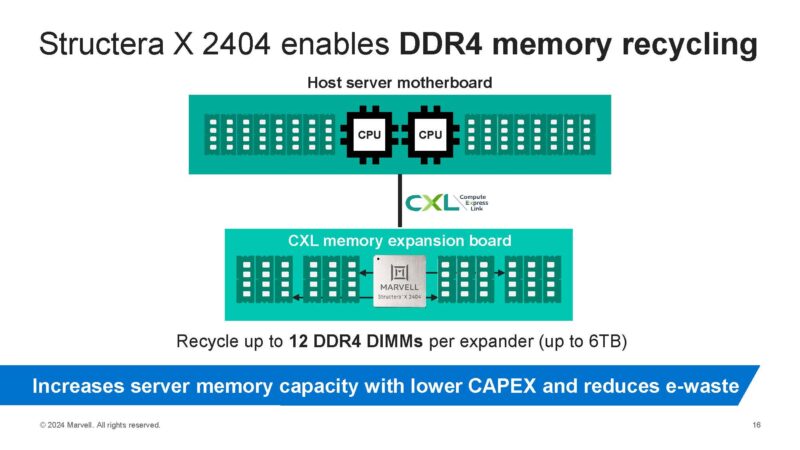

This is one that solves a huge challenge in the industry. The Structera X 2404 is a 4-channel DDR4 memory expansion device. What is more, one can plug up to three DDR4 DIMMs per channel. That means one controller can take up to 12 DIMMs.

Here is the block diagram for the memory expander.

Of course, the big use case is DDR4 memory recycling. If you are a hyper-scaler with tons of DDR4 servers still running, a very plausible model will be to pull DDR4 out of decommissioned servers and put the DIMMs into shelves with the Structera X 2404. DRAM is an enormous cost in hyper-scale racks, making this an ample opportunity to lower costs through recycling. That is especially the case with LZ4 compression/decompression.
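To get a feel for the recycling math, here is a rough sketch of capacity per controller. The 4 channels and three DIMMs per channel come from the description above. The DIMM size and the compression ratio are purely hypothetical inputs, not Marvell figures, and real LZ4 ratios depend entirely on the data. The function name `controller_capacity_gib` is also just illustrative.

```python
def controller_capacity_gib(
    dimm_gib: int,
    channels: int = 4,
    dimms_per_channel: int = 3,
    compression_ratio: float = 1.0,
) -> float:
    """Estimate capacity behind one Structera X 2404 controller.

    compression_ratio is a hypothetical, data-dependent multiplier:
    1.0 means no benefit from compression.
    """
    if compression_ratio < 1.0:
        raise ValueError("compression_ratio must be >= 1.0")
    raw = dimm_gib * channels * dimms_per_channel
    return raw * compression_ratio


if __name__ == "__main__":
    # Hypothetical: recycled 32 GiB DDR4 DIMMs in all 12 slots.
    print(controller_capacity_gib(32))
    # Same DIMMs with an assumed (not measured) 1.5x compression ratio.
    print(controller_capacity_gib(32, compression_ratio=1.5))
```

Swapping in the DIMM sizes actually coming out of a decommissioned fleet gives a quick sense of how much memory one shelf could hold.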

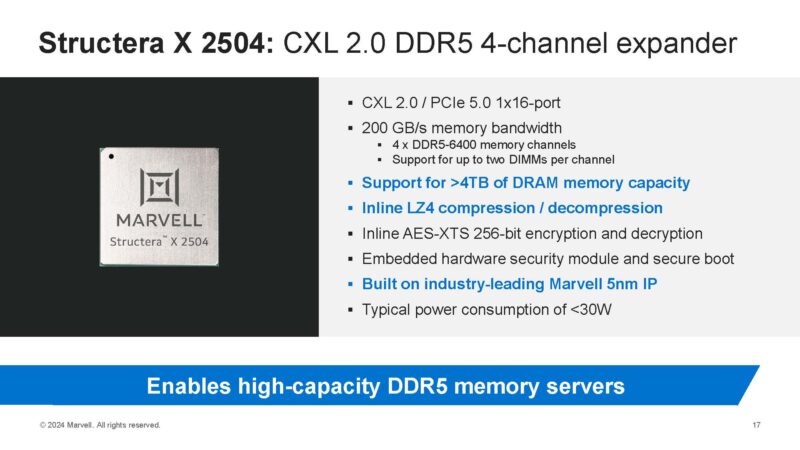

Depending on who you talk to in the industry, the CXL Type-3 DDR5 expanders for performance applications will be another major use case. Marvell also has a variant for that.

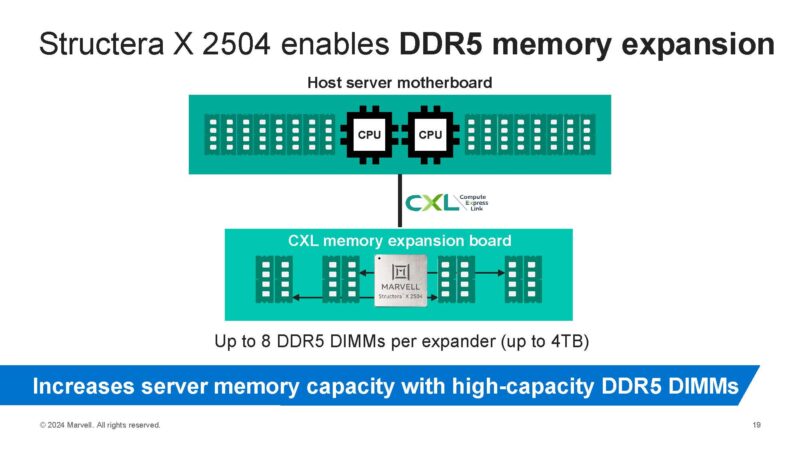

Marvell Structera X 2504: CXL 2.0 DDR5 4-channel Memory Expander

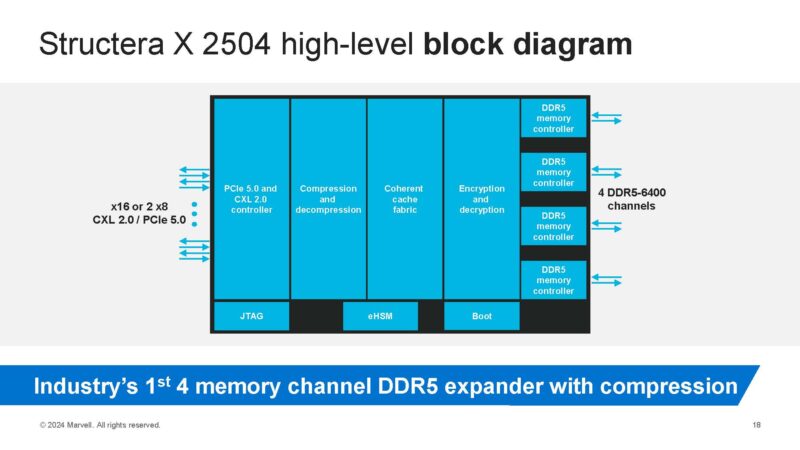

In many ways, this is very similar to the 2404, except that it uses DDR5. Supporting up to DDR5-6400 across four channels with two DIMMs per channel adds a lot of performance to the part.
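For a sense of scale, here is a back-of-the-envelope sketch of theoretical peak DRAM bandwidth behind the controller. It assumes the standard 64 data bits (8 bytes) per DDR5 DIMM channel and ignores ECC bits, refresh, and protocol overhead. It also does not model the CXL link, which bounds what the host can actually see. The function names are hypothetical.

```python
def peak_dram_bandwidth_gbps(
    mega_transfers_per_s: int,
    channels: int,
    bytes_per_transfer: int = 8,
) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (decimal gigabytes).

    Assumes 8 data bytes per transfer per channel. This is an upper
    bound on the DRAM side only, not host-visible CXL bandwidth.
    """
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000


def dimm_slots(channels: int, dimms_per_channel: int) -> int:
    """Number of DIMMs one controller can address."""
    return channels * dimms_per_channel


if __name__ == "__main__":
    # Structera X 2504 figures from the text: 4 channels, DDR5-6400, 2 DPC.
    print(peak_dram_bandwidth_gbps(6400, channels=1))
    print(peak_dram_bandwidth_gbps(6400, channels=4))
    print(dimm_slots(channels=4, dimms_per_channel=2))
```

The same helper with three DIMMs per channel reproduces the 12-DIMM figure for the DDR4 part.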

Here is the block diagram.

The memory expansion bit is very interesting. Depending on your CPU and how you will want to integrate CXL memory expansion, you may need different capacities and performance profiles for your memory. Even Intel Xeon 6 variants have different CXL memory capacity capabilities for running in different modes (e.g., running CXL memory as its own pool versus as an interleaved memory source).

Whereas the DDR4 version is more about re-using an inexpensive source of memory, the DDR5 version can aim more for performance.

Final Words

We have previously shown off two-channel devices from Astera Labs on STH. We will at least note that Astera Labs has had working units for a long time, and Marvell is a few months from launch. Still, Marvell is doing something different with its CXL memory expanders and something very different with the Arm Neoverse V2 core accelerator version.

This is a family where we cannot wait to see all three variants in action. Especially with the CXL 2.0 capability and CXL 2.0 switches starting to become a reality, the possibilities get a bit more exciting. Imagine a shelf with a CXL 2.0 switch and several of these accelerators adding more Arm cores to a system.

If you want to learn more about CXL, feel free to read our CXL 3.1 Specification Aims for Big Topologies piece to see where this is headed. The big question is when we will see these in the market, given a sampling timeframe of Q4 2024.

Any plans to acquire and test these? It would be interesting to see performance on various pools or tiered memory.

I hope to, George, but samples are in Q4.

Nice Idea

The X cards are intriguing. CXL memory seems like it should be able to offer capital-L large memory pools. These are an improvement over the two DIMM stuff I’ve seen. It’s early days still I suppose.

The A cards, would they not be rather niche? Not everyone can leverage those cores.