These days, we hear a ton about liquid cooling due to the rise of AI clusters. You have likely heard that liquid cooling saves a lot of power, but the question is: why? Today, we have a quick article describing the impact of liquid cooling on an AI server's power consumption.

Estimating the Power Consumption Impact of Liquid Cooling

A few quarters ago, we covered liquid cooling in a server as part of our look at the Supermicro CDUs and liquid cooling rack. You can find that here:

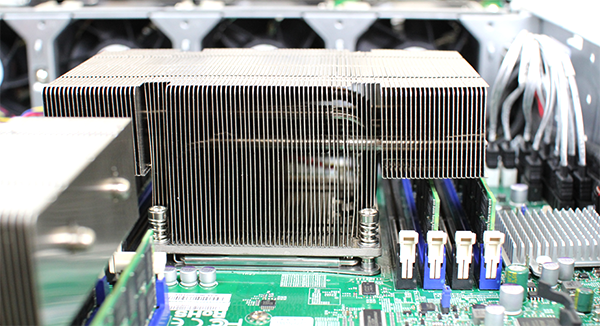

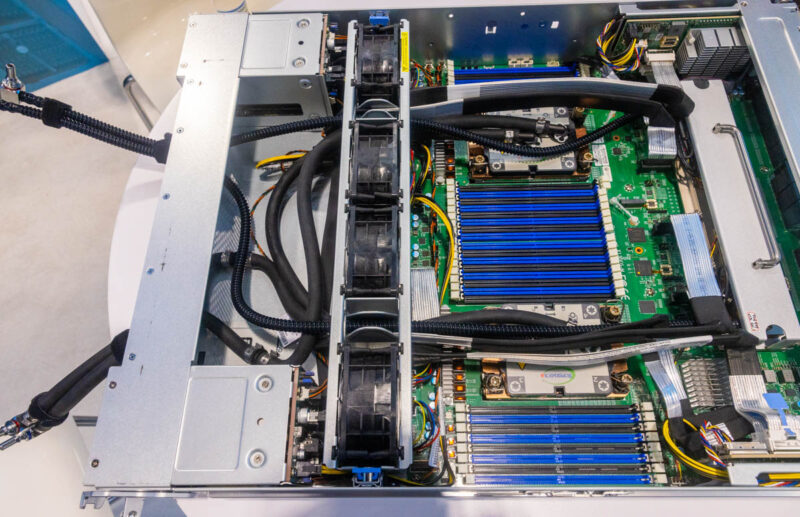

One of the biggest impacts of liquid cooling is not lowering the GPU/AI accelerator or CPU power consumption. Instead, it is lowering the power the system spends on fans. In a standard air-cooled server, fans sit in a midplane partition and blow air through the heatsinks.

That airflow is then channeled to the rear of the chassis. Here is an extreme example.

Power is used by the fans that move air through the heatsink and out of the chassis. With liquid cooling, that heat is instead transferred to a liquid (usually water with anti-fungal and anti-corrosive additives), and the warmed liquid then exits the chassis. As a result, the fans that remain in the chassis can spin at lower speeds, and lower fan speeds mean lower power consumption.
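To get a rough feel for why slower fans matter so much, here is a minimal Python sketch using the idealized fan affinity law, where fan power scales with roughly the cube of fan speed. That cubic relationship is a textbook approximation we are assuming here, not a measurement from any specific server, and `relative_fan_power` is a hypothetical helper name used only for illustration.

```python
def relative_fan_power(speed_fraction: float) -> float:
    """Fan power relative to full speed under the idealized affinity law.

    Assumption: power scales with the cube of speed. Real server fans
    deviate from this, so treat the result as a rough guide only.
    """
    if not 0.0 <= speed_fraction <= 1.0:
        raise ValueError("speed_fraction must be between 0 and 1")
    return speed_fraction ** 3


# Illustrative fan speeds, not figures from a real system.
for pct in (100, 80, 60, 40):
    power_pct = relative_fan_power(pct / 100) * 100
    print(f"{pct}% fan speed -> {power_pct:.1f}% of full-speed fan power")
```

Under this idealization, even a modest reduction in fan speed gives a disproportionately large drop in fan power, which is why pulling most of the heat out via liquid has such a large effect on the fan budget.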

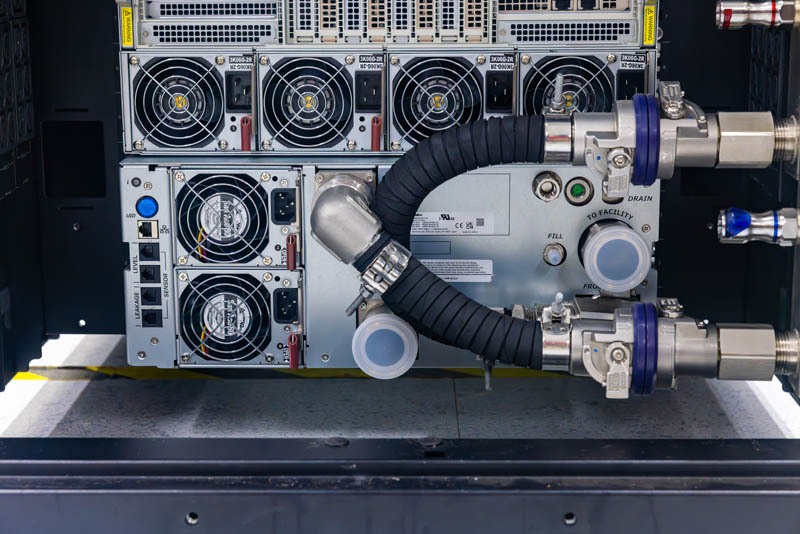

There is, however, another impact once the heat leaves the chassis. In air-cooled data centers, CRAH units or other air handlers are usually needed to transfer the heat from the air to liquid, and that heat is then rejected outside of the data center at scale.

With liquid cooling, that heat exchange can occur directly via an efficient liquid-to-liquid CDU, with the heat then removed via facility water loops.

This direct liquid-to-liquid heat exchange is usually much more efficient than going from chip to air, to an air handler, and then to liquid. That is why we often say that liquid cooling has benefits at the server and rack levels, as well as for overall data center power consumption.

Typically, a modern server uses 10-20% of its power for the fans cooling the server. Direct-to-chip liquid cooling (DLC), by far the most popular type today, can remove a large portion of the server's heat so that the fans only need to cool lower-power devices like NICs, memory, and so on. DLC does not remove all of the heat from a server, but it often removes 80% or more. As a result, the power dedicated to cooling often falls to 5% or less of the overall server power consumption. Many factors impact this, such as the components used and the design of the server, as well as the physical height and depth of the fans.
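To put those percentages into watts, here is a small Python sketch. It assumes a hypothetical server whose non-fan components draw a fixed 8,500 W, and it treats the fan share as a fraction of total server power. The 8,500 W figure, the `server_power` helper, and the simplification that the non-fan load stays constant are all our assumptions for illustration. The sketch also ignores pump and CDU power.

```python
def server_power(non_fan_watts: float, fan_fraction: float) -> tuple[float, float]:
    """Return (total_watts, fan_watts) when fans draw fan_fraction of the total.

    Since total = non_fan + fans and fans = fan_fraction * total,
    total = non_fan / (1 - fan_fraction).
    """
    if not 0.0 <= fan_fraction < 1.0:
        raise ValueError("fan_fraction must be in [0, 1)")
    total = non_fan_watts / (1.0 - fan_fraction)
    return total, total - non_fan_watts


NON_FAN_WATTS = 8500.0  # hypothetical GPU/CPU/memory/NIC load, not a measured value

scenarios = (
    ("air-cooled, fans at 10%", 0.10),
    ("air-cooled, fans at 20%", 0.20),
    ("DLC, fans at 5%", 0.05),
)
for label, fraction in scenarios:
    total, fans = server_power(NON_FAN_WATTS, fraction)
    print(f"{label}: total {total:,.0f} W, fans {fans:,.0f} W")
```

With these made-up inputs, fan power goes from roughly 0.9-2.1 kW in the air-cooled cases to under 0.5 kW in the DLC case. Actual numbers depend heavily on the server design.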

One component of liquid cooling power savings is the 8-15% in-chassis power savings, which lessens the load on PDUs, busbars, and so forth in the data center. That can also be factored into right-sizing the power supplies in systems for optimal efficiency. The other component is removing environmental cooling, such as air handlers, in data centers, which can also save on maintenance.
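As a quick illustration of what the 8-15% in-chassis figure means for upstream power delivery, here is a Python sketch that scales it to a rack. The 10 kW server draw and four servers per rack are hypothetical values we picked for the example, and `rack_savings_kw` is an illustrative helper, not a sizing tool.

```python
def rack_savings_kw(server_kw: float, servers_per_rack: int, savings_fraction: float) -> float:
    """Power no longer drawn through the rack's PDUs or busbar, in kW."""
    if not 0.0 <= savings_fraction <= 1.0:
        raise ValueError("savings_fraction must be between 0 and 1")
    return server_kw * servers_per_rack * savings_fraction


SERVER_KW = 10.0       # hypothetical air-cooled AI server draw
SERVERS_PER_RACK = 4   # hypothetical rack population

# Low and high ends of the 8-15% in-chassis savings range quoted above.
for fraction in (0.08, 0.15):
    saved = rack_savings_kw(SERVER_KW, SERVERS_PER_RACK, fraction)
    print(f"{fraction:.0%} in-chassis savings -> {saved:.1f} kW less per rack")
```

Multiply that across many racks and the reduction starts to matter for how PDUs, busbars, and power supplies are sized.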

Final Words

Liquid cooling is happening, and we expect that by 2025, the vast majority of AI clusters will be liquid-cooled. Some are going beyond liquid cooling just the CPUs and GPUs and are also looking at how to efficiently cool the other components in a rack. We are going to have more on this in the near future on STH.

If you want to learn more about data center power consumption, you can see the video we did here: