Going through OCP Summit 2024 photos, we spotted the Enfabrica ACF-S. Earlier this year, we covered the Enfabrica ACF-S, a huge multi-Tbps SuperNIC, at Hot Chips 2024. Now, instead of slideware, we also saw the actual chip, and it is a big one.

Enfabrica ACF-S Multi-Tbps SuperNIC Shown

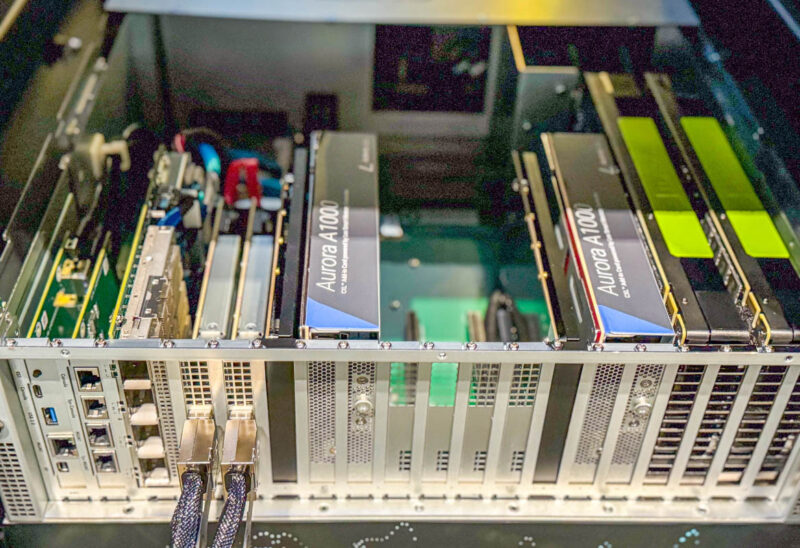

At OCP Summit, we found this box filled with all kinds of different cards including older NVIDIA GPUs, the Astera Labs Leo-based Aurora A1000 cards, and more.

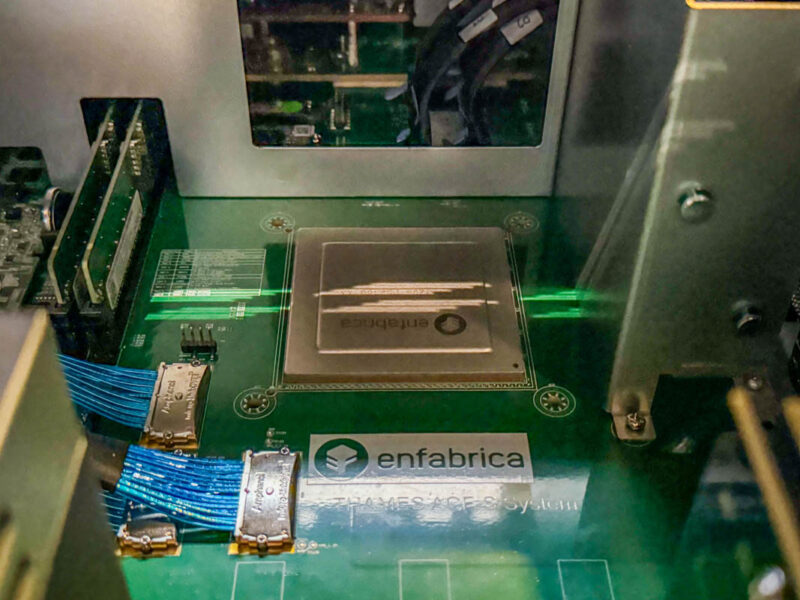

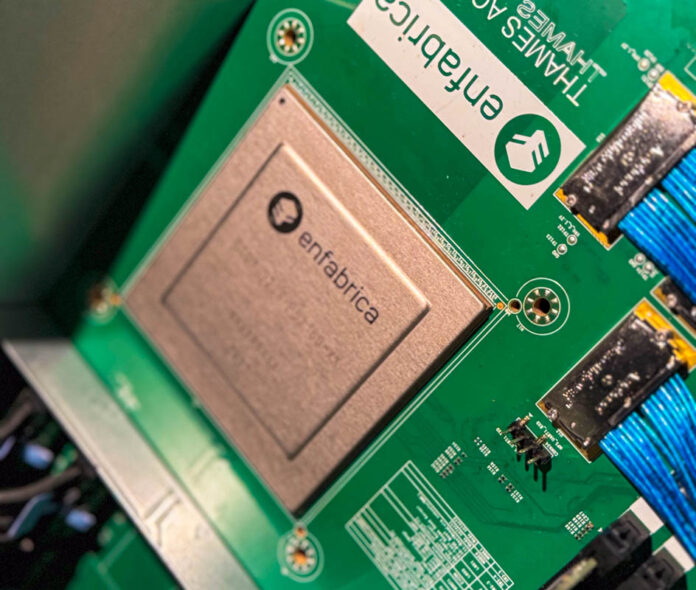

Pulling the system out a bit, we found that the chip inside was not a normal server chip. Instead, this is an Enfabrica ACF-S chip.

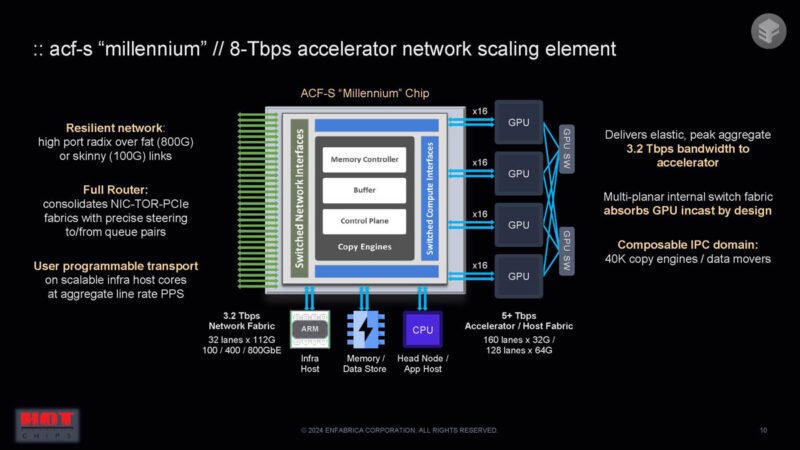

The quick overview of the chip from our Hot Chips 2024 coverage is that the chip has PCIe links as well as network links. Instead of traditional architectures where there might be two GPUs on a PCIe switch and then two NICs also on that switch, the ACF-S part can handle multiple GPUs and multiple network interfaces on the same chip. That lowers the number of add-in cards in a system and allows inter-GPU communication directly through the larger switch chip.

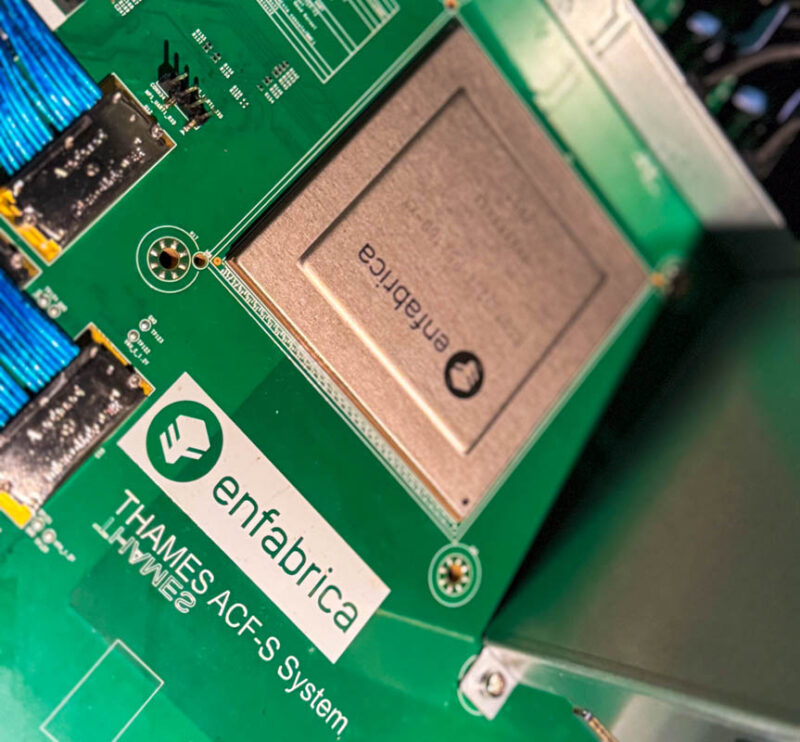

Checking out the chip, it is certainly large. The fixture we saw feels like a test fixture with tons of different slots built in.

The board itself was marked with Enfabrica and then the Thames ACF-S system.

Final Words

This is a chip that has the STH team excited. The ability to combine NIC and PCIe switch functionality and potentially yield more efficient networking seems awesome. The big question now is when we will see the new chips hit the market. Hopefully we get a box with one of these Enfabrica ACF-S chips in 2025. This is one of those technologies that could make a meaningful impact on GPU servers in the future.

I would presume that this chip supports bifurcation down to x8 PCIe links to host an 8-way GPU setup. Similarly, one could leverage two of these chips in a system to provide redundancy inside the host and connect to each of the GPUs in an 8+8 PCIe lane fashion for full bandwidth there. That would be an aggregate 6.4 Tbit/s of networking bandwidth that can be spread across 100 Gbit to 800 Gbit links. Very impressive.
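As a rough sanity check on those numbers, here is a minimal Python sketch of the arithmetic. Everything in it is taken from the presumptions in the comment above (8 GPUs, x8 links, two chips, 6.4 Tbit/s aggregate), not from published Enfabrica specifications. The function names and the intermediate 200 Gbit and 400 Gbit link speeds are assumptions added for illustration.

```python
# Back-of-the-envelope arithmetic for the presumed two-chip layout.
# All constants are the commenter's assumptions, not Enfabrica specs.

GPUS = 8                     # 8-way GPU server
LANES_PER_GPU_PER_CHIP = 8   # presumed x8 bifurcation
CHIPS = 2                    # two ACF-S chips for redundancy
AGGREGATE_GBPS = 6400        # 6.4 Tbit/s across both chips, as stated


def lanes_per_gpu(chips=CHIPS, lanes=LANES_PER_GPU_PER_CHIP):
    """PCIe lanes each GPU sees when attached to every chip (8+8)."""
    return chips * lanes


def gpu_lanes_per_chip(gpus=GPUS, lanes=LANES_PER_GPU_PER_CHIP):
    """PCIe lanes one chip must dedicate to GPUs alone."""
    return gpus * lanes


def link_counts(aggregate_gbps=AGGREGATE_GBPS, speeds=(100, 200, 400, 800)):
    """Number of network links at each speed that fill the aggregate."""
    return {speed: aggregate_gbps // speed for speed in speeds}


if __name__ == "__main__":
    print("Lanes per GPU:", lanes_per_gpu())
    print("GPU-facing lanes per chip:", gpu_lanes_per_chip())
    for speed, count in link_counts().items():
        print(f"{count} x {speed} Gbit links")
```

Under these assumptions, each GPU ends up with 16 lanes in total, each chip spends 64 lanes on GPUs, and the 6.4 Tbit/s could be carved up as anything from 64 links at 100 Gbit down to 8 links at 800 Gbit.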

I guess the only thing left on the wish list would be more PCIe lanes to add some additional CXL 2.0 memory expanders or to increase host CPU bandwidth where main memory sits.

Storage would be another reason for more lanes as well as being able to bifurcate down to 4x lane widths.

I love the detail on the Thames silkscreen, which includes the reflection found on the Thames Television ident, where the letters reflected off a facsimile of the River Thames.