I have used many Intel Pro/1000 PT Quad network adapters to provide extra ports in servers for a few years now. They are well-supported Intel 82571GB-based cards that are re-branded by Dell and other vendors. The big advantage of cards like these is that they allow users to add a lot of network ports in a single PCIe slot. While the Intel Pro/1000 line is well known, one other option I found sold by a few vendors on eBay was the Silicom PEG6I six-port network adapter. These cards are built around three Intel 82571EB controllers, each responsible for two ports on the rear bracket. I decided to buy two of these cards from online sources and try them out.

Usually I try to focus on the latest generation of technology. What I have realized (perhaps all too slowly) is that a big portion of my readers purchase a mix of new hardware and server pulls, especially when it comes to buying a CPU or add-in card. What makes the PEG6I cards remarkable is that they run in the sub-$150 range when they are found as server pulls on places like eBay (see here for the PEG6I eBay search, where there is a seller with quite a few for $125 + $5 shipping right now). The Intel Pro/1000 PT Quad, on the other hand, goes for around $200 (see here for the Pro/1000 PT Quad eBay search). Both cards utilize the same generation of Intel Gigabit network controllers, but the Pro/1000 PT Quad costs $50 per port while the PEG6I costs under $25 per port. 50% more network ports for at least a 25% lower price makes this a unique value.
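For anyone who wants to redo the per-port math with current listings, here is a minimal sketch. The prices are the ones quoted above ($150 as the upper bound for the PEG6I, $125 + $5 shipping for the specific listing, roughly $200 for the Pro/1000 PT Quad); the function name `cost_per_port` is just an illustrative helper.

```python
def cost_per_port(price_usd: float, ports: int) -> float:
    """Return the price paid for each network port on a card."""
    return price_usd / ports

# Prices quoted in the article; actual eBay listings will vary.
peg6i_ceiling = cost_per_port(150, 6)      # sub-$150 upper bound, 6 ports
peg6i_listing = cost_per_port(125 + 5, 6)  # the $125 + $5 shipping listing
pt_quad = cost_per_port(200, 4)            # Pro/1000 PT Quad, 4 ports

extra_ports = 6 / 4 - 1       # fraction of additional ports on the PEG6I
price_cut = 1 - 150 / 200     # fraction saved at the $150 upper bound

print(f"PEG6I at $150: ${peg6i_ceiling:.2f}/port")
print(f"PEG6I at $130: ${peg6i_listing:.2f}/port")
print(f"PT Quad at $200: ${pt_quad:.2f}/port")
print(f"{extra_ports:.0%} more ports for {price_cut:.0%} less money")
```

At the $130 listing price the gap is wider still, so the 25% figure is the conservative end of the comparison.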

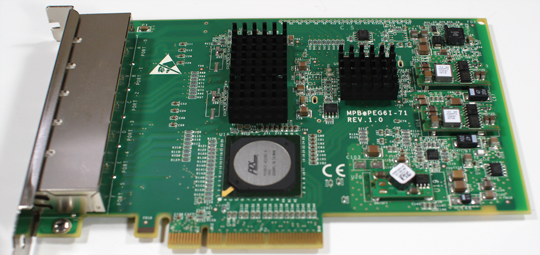

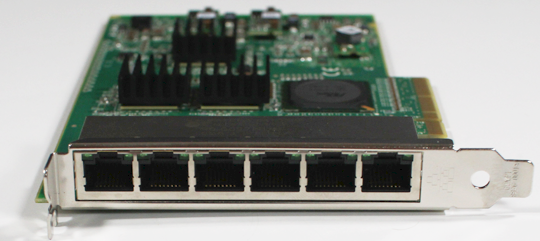

The card itself is a PCIe x8 card and one can see the trio of heatsinks covering the Intel 82571EB Ethernet controllers. Each chip handles two ports for the card's six-port total. With the six ports, one has to be mindful that the card will take up a full-height expansion slot. For those users with 2U chassis that need low-profile cards, these cards are not for you, although Intel does make a low-profile Pro/1000 Quad adapter.

A few notes on these cards are warranted. First, the cards are capable of PXE booting off of any of the six ports, and one will see the fairly standard PXE boot screen six times. While it is great that the functionality exists, it does slow server boot times considerably. I added one of these cards to my pfSense box, forgot to disable the PXE boot features, and watched the appliance take an extra thirty seconds or so to boot. Bottom line: if you do not need it, disable it.

Next, six ports, contrary to popular belief, will not work like RAID 0 for Ethernet when running link aggregation. In essence, one cannot put one of these cards in a server and another in a client, hook both into a 24-port switch, and transfer a video file at 600-800MB/s, since a single transfer is typically carried over just one of the aggregated links. However, if six or more clients are trying to access the server, using six ports will help quite a bit.
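To make the point concrete, here is a toy model of how an aggregated link might choose an outgoing port. It is a simplified sketch, not the algorithm of any particular switch or driver: it assumes a hash that XORs the last byte of the source and destination MAC addresses and takes the result modulo the number of links. The MAC addresses and the 125MB/s per-port ceiling are illustrative assumptions.

```python
LINK_SPEED_MB_S = 125  # assumed ceiling of one gigabit port, in MB/s
N_LINKS = 6

def pick_link(src_mac: str, dst_mac: str, n_links: int) -> int:
    """Toy transmit hash: XOR the last byte of each MAC, modulo link count."""
    src_last = int(src_mac.split(":")[-1], 16)
    dst_last = int(dst_mac.split(":")[-1], 16)
    return (src_last ^ dst_last) % n_links

# Hypothetical, hand-picked MAC addresses for one server and six clients.
SERVER = "02:00:00:00:00:10"
CLIENTS = [f"02:00:00:00:00:{0x21 + i:02x}" for i in range(6)]

# One client copying one big file: every frame has the same address pair,
# so every frame hashes to the same physical link.
single_flow_links = {pick_link(CLIENTS[0], SERVER, N_LINKS) for _ in range(1000)}
single_flow_ceiling = len(single_flow_links) * LINK_SPEED_MB_S

# Six different clients: different address pairs can land on different links.
multi_flow_links = {pick_link(c, SERVER, N_LINKS) for c in CLIENTS}
aggregate_ceiling = len(multi_flow_links) * LINK_SPEED_MB_S

print(f"single transfer uses {len(single_flow_links)} link(s), "
      f"ceiling {single_flow_ceiling} MB/s")
print(f"six clients use {len(multi_flow_links)} link(s), "
      f"ceiling {aggregate_ceiling} MB/s")
```

In this hand-picked example the six clients happen to spread across all six links. With real addresses and real hash policies, two clients can collide on the same link, so the aggregate figure is a best case rather than a guarantee.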

So far I have not used ESXi 4.x or ESXi 5.x with these cards. I have used various Linux distributions, such as Ubuntu desktop and server, as well as Windows Server 2008 R2, and saw the card get recognized immediately. I have decided to keep one of these for my Hyper-V server. I can report back after I get these in an ESXi server, but overall I am very happy with the cards.

For those who are wondering, here is the Silicom PEG6I spec page, and here is the PEG6I eBay search one more time, as the cards are not always available. Happy hunting for cheap Intel-based network adapters!

Very interesting NIC, please report back ASAP with information regarding ESXi compatibility!

i saw that seller had 10+ this morning and now 0. those went fast. congrats to those who got them

Please excuse the numpty question, but why can’t the ports be linked when using a suitable switch?

Hello, I have a question. When you made the statement about link aggregation, were you just talking in general or is there something specific about these cards (vs a retail intel quad port etc) that keep them from being able to aggregate bandwidth when using a compatible switch supporting 802.3 LACP? Do these specific cards have a driver or hardware limitation or were you just trying to point out some everyday qualities of link aggregation. Thanks.

michael: I was just pointing out everyday qualities of link aggregation. Quite a few users new to link aggregation assume that it works like RAID 0 for disks. An example would be assuming that, if I could get a real-world transfer rate of 125MB/s per port, using two 6-port NICs in two machines would let one transfer a big file (like a multi-GB ISO) at 750MB/s (6 * 125MB/s). We know this is not how LACP works, but I just wanted to point that out.

Patrick, were you able to test out ESXi compatibility? Very interested in that. Thank you.

Aaron, installing this now on the new ESXi box. Will have a post by tomorrow with results.

How timely that I should be just reading about these, and the posts are actually current. I’m curious about vSphere 4/5 compatibility as well, as the price is pretty good for home lab equipment.

what's the use case of this card? it provides more ports but why?

there are several usage scenarios:

1. multiple internet connections(providers). If you have two providers coming in the same router – gateway you’d need at least 3 ports (2 for providers one for internal network)

2. aggregating bandwidth – if you need more than 1 Gb/s here you go :)