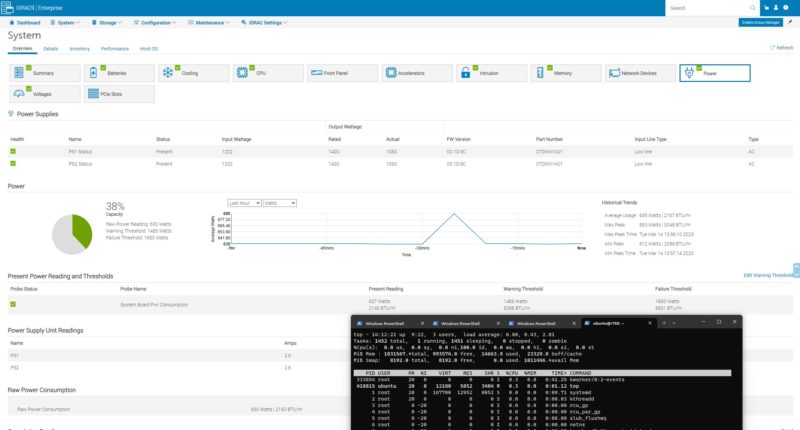

Dell PowerEdge R760 Power Consumption

Power was provided via two Dell 80Plus Platinum 1.4kW PSUs. Dell has many PSU configurations, so this is just one option.

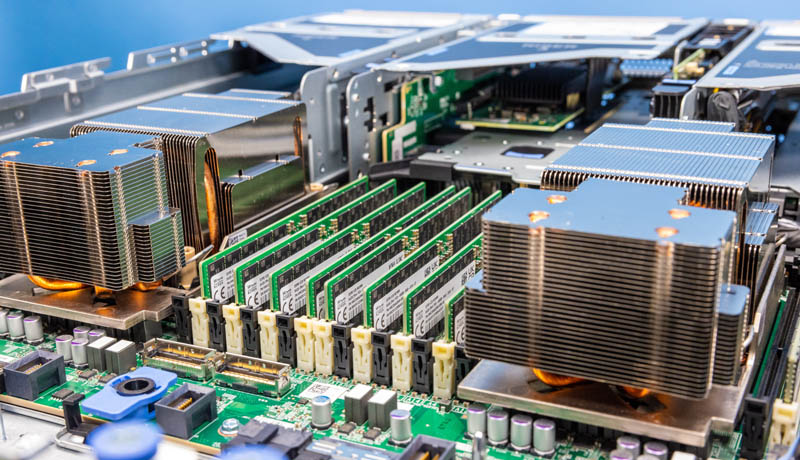

Something we noticed is that our server was set up in a very high-performance configuration. Its idle power was over 600W. We had to pull the server from the data center and test it in the studio just to validate this.

With this configuration, we would expect around 1kW to be the maximum power consumption. There are a ton of options, though. Adding more and faster NVMe storage can have a huge impact on power. Likewise, adding GPUs or other accelerators can increase power. We also did not have the top-bin 350W SKUs. Intel’s next-generation CPUs for this socket will also have higher TDPs, so if you are reading this in the 5th Generation Intel Xeon Scalable era, that will be another consideration.

That highlights the challenge of designing modern servers. A Dell PowerEdge R760 might spend its lifetime in a lower-end configuration running under 200W. Another might spend its useful life running over 2kW. Dell’s design takes into account this 10x gap in scenarios.
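As a rough sanity check on these numbers, here is a minimal sketch that compares each load scenario from the text against the installed power supplies. It assumes the two 1.4kW PSUs run in 1+1 redundant mode (our assumption; the redundancy policy is not stated above), so a single PSU must be able to carry the whole system. The scenario wattages are the round figures from the text, not new measurements, and the names are hypothetical.

```python
# Minimal sketch: compare the load scenarios from the text against the PSU budget.
# Assumption (not stated in the review): the two 1.4kW PSUs run 1+1 redundant,
# so one PSU alone must be able to carry the full system load.

PSU_WATTS = 1400
PSU_COUNT = 2

# Round figures taken from the text; these are not new measurements.
SCENARIOS = {
    "lower-end config": 200,
    "our config, idle": 600,
    "our config, expected max": 1000,
    "heavily loaded config": 2000,
}


def usable_watts(psu_watts: int, psu_count: int, redundant: bool) -> int:
    """Capacity available to the system; 1+1 redundancy leaves one PSU's worth."""
    return psu_watts if redundant else psu_watts * psu_count


def headroom_report(redundant: bool = True) -> dict[str, float]:
    """Return each scenario's load as a fraction of usable PSU capacity."""
    budget = usable_watts(PSU_WATTS, PSU_COUNT, redundant)
    return {name: watts / budget for name, watts in SCENARIOS.items()}


if __name__ == "__main__":
    for name, frac in headroom_report().items():
        status = "OK" if frac <= 1.0 else "needs larger PSUs"
        print(f"{name:26s} {frac:6.1%}  {status}")
    spread = max(SCENARIOS.values()) / min(SCENARIOS.values())
    print(f"spread between scenarios: {spread:.0f}x")
```

Under that assumption, the expected ~1kW peak of our configuration uses roughly 71% of a single PSU, while a 2kW-class build would presumably need one of Dell's higher-wattage PSU options.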

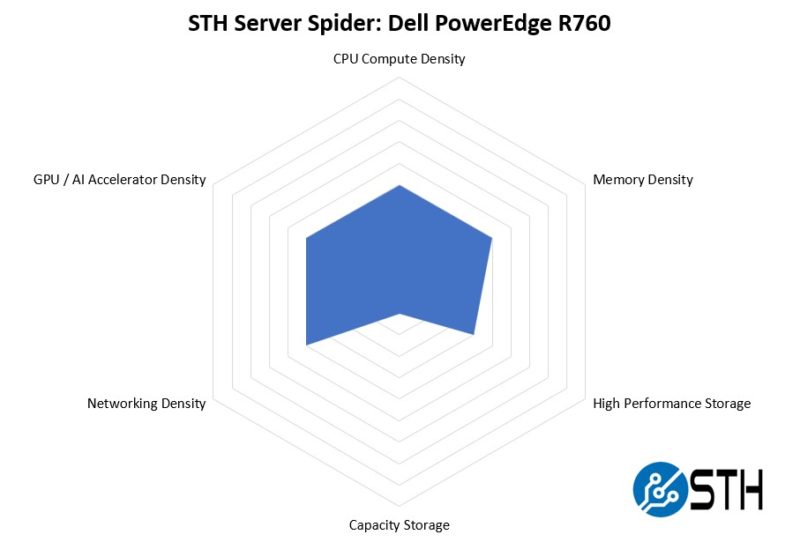

STH Server Spider: Dell PowerEdge R760

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

The Dell PowerEdge R760 is a very versatile server. It is not meant to fit the maximum number of 3.5″ hard drives per U, handle an NVIDIA HGX H100 GPU assembly, or fit the most RAM or CPU per U into the densest racks. Instead, the PowerEdge R760 is designed to handle a range of scenarios on one common platform.

Key Lessons Learned

The Dell PowerEdge R760 is fascinating. Having worked on this server, its predecessors, and many generations of servers from other vendors, one overriding impression stands out: most components have layers of design iteration built into them. The Dell BOSS is a great example; it is one of the most elaborate, if not the most elaborate, M.2 solutions on the market.

From a physical design perspective, many other servers seek to minimize costs and have very simple designs. The PowerEdge R760 feels like it is designed to just be nice. It is like having real wood trim in a high-end car. It is not necessary, but it is very nice. Part of that is due to just how many roles a PowerEdge R760 and variants with the same motherboard are designed to fulfill.

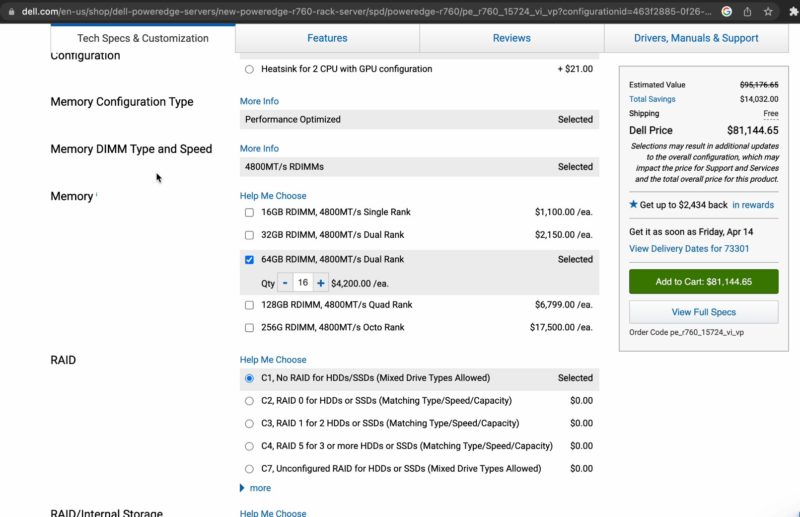

The other key lesson learned was the comical online configurator pricing. Here is a great example, and also an illustration of why we usually do not publish list prices.

The list price for each 32GB DIMM is $2150. A 64GB DDR5 RDIMM? That is $4200. In the video, I said we have been paying less than $400 for 64GB RDIMMs, and I remember from our Why DDR5 is Absolutely Necessary in Modern Servers piece that the 32GB DIMMs we used were, at that time, under $200 each. Dell’s list prices are more than 10x what we are paying.
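To make the "more than 10x" comparison concrete, here is a small sketch that normalizes the DIMM prices above to dollars per GB and computes the list-to-street multiple. The list prices are the configurator figures quoted above; the street prices are the approximate upper bounds we mentioned ("less than $400", "under $200"), so the multiples it prints are lower bounds. The helper names are hypothetical.

```python
# Sketch: per-GB cost and list-to-street multiple for the DIMM prices above.
# List prices come from Dell's online configurator; street prices are the
# approximate upper bounds quoted in the text, so multiples are lower bounds.

DIMMS = {
    # capacity_gb: (list_price_usd, street_price_usd)
    32: (2150, 200),
    64: (4200, 400),
}


def per_gb(price_usd: float, capacity_gb: int) -> float:
    """Price normalized to dollars per GB."""
    return price_usd / capacity_gb


def list_multiple(list_usd: float, street_usd: float) -> float:
    """How many times the street price the list price is."""
    return list_usd / street_usd


if __name__ == "__main__":
    for gb, (list_usd, street_usd) in DIMMS.items():
        print(
            f"{gb}GB: list ${per_gb(list_usd, gb):.2f}/GB, "
            f"street ${per_gb(street_usd, gb):.2f}/GB, "
            f"list is {list_multiple(list_usd, street_usd):.1f}x street"
        )
```

Both capacities land at roughly $65 to $67 per GB at list versus about $6.25 per GB on the street, which is where the 10x+ gap comes from.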

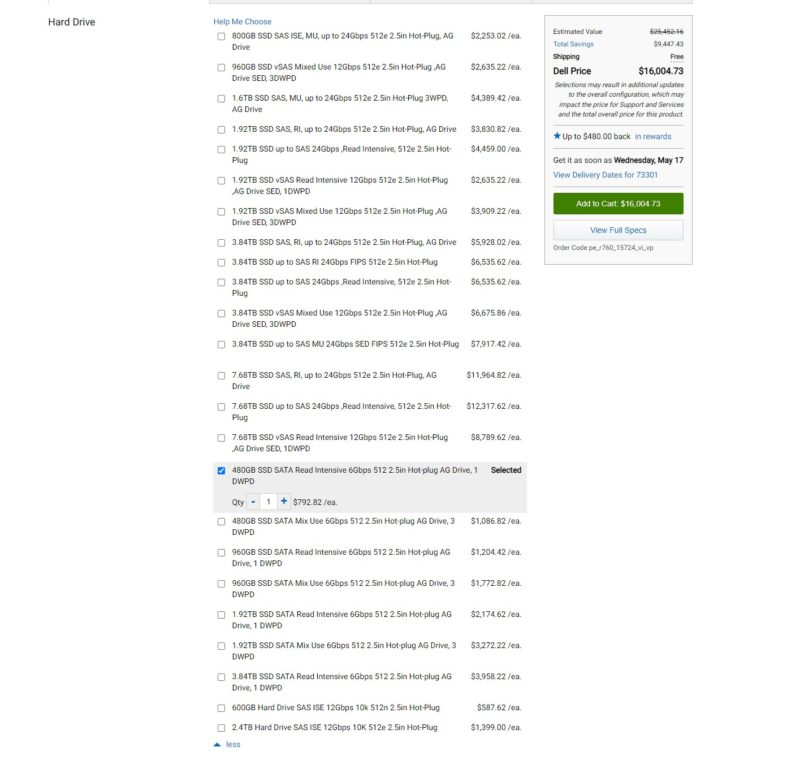

The SSD side tells the same story. A 960GB SATA SSD should be well under $100 these days, even for a data center model, not $1200 to $1800.

That is because the web configurator list prices are essentially fantasy numbers, even with the automatically applied discounts. The tool seems to be meant for generating configurations that are then priced through a sales rep discounting process, and potentially for driving service revenue, not for actually purchasing online.

In the early 2010s, I led a team at PwC to redo the pricing, discounting, and sales exception processes at a large competitor to Dell EMC, so this is not shocking; a 60% discount was common in the industry at that time. What was shocking was seeing US list prices that were intended to be discounted by 90%+. Ten years ago, OEMs had special list prices in that range for countries like China, just so procurement teams there could walk away with a huge win by securing 90% discounts. Until checking the price on our configuration, I had not seen that strategy used as often with US customers.
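The "90%+" figure falls out of simple arithmetic on the examples above. This sketch computes the discount off list needed to reach each rough street price, and what the once-common 60% discount would still leave you paying. The street prices are the approximate upper bounds from the text (not quotes we received), so the required discounts shown are lower bounds; the item labels and helper names are hypothetical.

```python
# Sketch: the discount off list needed to reach the rough street prices in the
# text, versus what a 60% discount (common in the early 2010s) would leave you
# paying. Street prices are approximate upper bounds from the text, not quotes.

HISTORICAL_DISCOUNT = 0.60

EXAMPLES = {
    # item: (list_usd, approx_street_usd)
    "32GB DIMM": (2150, 200),
    "64GB DDR5 RDIMM": (4200, 400),
    "960GB SATA SSD, low list": (1200, 100),
    "960GB SATA SSD, high list": (1800, 100),
}


def implied_discount(list_usd: float, street_usd: float) -> float:
    """Fraction off list required to land at the street price."""
    if list_usd <= 0:
        raise ValueError("list price must be positive")
    return 1.0 - street_usd / list_usd


def price_after_discount(list_usd: float, discount: float) -> float:
    """Price actually paid after taking `discount` (a fraction) off list."""
    return list_usd * (1.0 - discount)


if __name__ == "__main__":
    for item, (list_usd, street_usd) in EXAMPLES.items():
        needed = implied_discount(list_usd, street_usd)
        at_60 = price_after_discount(list_usd, HISTORICAL_DISCOUNT)
        print(f"{item:27s} needs {needed:.1%} off list; "
              f"a 60% discount still leaves ${at_60:,.0f}")
```

Every item needs at least 90% off list to reach street pricing, while a 60% discount on the 64GB RDIMM would still leave it at about $1680, several times what we pay.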

Final Words

The Dell PowerEdge R760 is a very nice server that is going to appeal to Dell's massive install base. If we go back 3-5 years to servers like the PowerEdge R740, this new generation offers 2:1 or better server and socket consolidation ratios. That is simply awesome.

The PowerEdge R760 design is extremely flexible, and years of methodical know-how have gone into each physical part. As someone who reviews dozens of server models a year across major vendors, it is obvious how much thought went into the design.

Overall, if you are a fan of PowerEdge, or servers in general, then the new Dell PowerEdge R760 is simply awesome.



On BOSS Cards: Previous generations required a full power down and removing the top lid to access the M.2 card to change BOSS sticks. This new design allows hotswapping BOSS modules without having to remove the device from the rack. Very handy for Server Admins.

I’m loving the server. We have hundreds of R750s and the R740xas you reviewed, so we’ll be upgrading late this year or next, thanks to the recession.

Pricing comments stand out here.

I’m in disbelief that Dell or HPE doesn’t just buy STH to have @Patrick be the “Dude you’re gettin’ a PowerEdge” guy. They could pay him seven figures a year and it’d increase revenue by nine figures for whoever does it. I’d be sad to lose STH from that, though.

Shhhh….that worked with Anand, Kyle, Ryan, Cyril, Geoff, Scott….

I had a look at these servers and preferred the Lenovo over them. I got a quote for a bunch of NVMe drives, and they wanted to put in two PERCs to cover the connectivity. Their solution was expensive and inflexible, especially if you put the NVMe drives on the RAID controllers. I think it only does Gen3 and only 2 lanes per drive, or something like that.

The solution I got from Lenovo with the new Genoa chip was cheaper, faster, and better because of their AnyBays, and the pricing on the U.3 drives was pretty good as well, especially because I only wanted 16 cores and they have a single-CPU version.

You need to take the time to read the full technical documentation for this server, including the options and how everything connects.

Agreed that the pricing is bonkers. I built an R7615 on Dell public, and it came out to $110k, while on Dell Premier it was $31k. A Dell Partner had built that out and tried to say $60k was a reasonable price, based on the lack of discounts Dell gave them. Mind you, a comparable Lenovo or Thinkmate was $5-7k less than the $31k.

Second is their insane pricing on NVMe drives in particular. In a world where you can get Micron 9400 Pro 32TB’s for ~$4,200 retail (from CDW no less), Dell asking $6,600 for a 15.36TB Kioxia CD6-R (which is their “reasonable” Premier price) is shameful! I think they are actively trying to drive people away from NVMe, despite the PERC12 being a decent NVMe RAID controller for most use cases. Whenever I talk to a Dell rep / partner, they try to push me to SAS SSDs since NVMe “are so expensive”. You’re the ones making it so damn expensive!

Dell really should get more criticism / kick back for these tactics.
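For readers who want the NVMe comparison on a like-for-like basis, this small sketch normalizes the two drive prices quoted in the comment above to dollars per TB. Prices and capacities are used exactly as the commenter stated them; if the "32TB" Micron drive is actually a 30.72TB model, the per-TB gap narrows only slightly. The helper name is hypothetical.

```python
# Sketch: normalize the two drive prices quoted above to dollars per TB.
# Prices and capacities are as quoted in the comment; capacities are decimal
# (marketing) terabytes, and the "32TB" Micron figure is used as written.


def usd_per_tb(price_usd: float, capacity_tb: float) -> float:
    """Price normalized to dollars per TB."""
    if capacity_tb <= 0:
        raise ValueError("capacity must be positive")
    return price_usd / capacity_tb


retail = usd_per_tb(4200, 32)     # Micron 9400 Pro, ~retail price per the comment
dell = usd_per_tb(6600, 15.36)    # Kioxia CD6-R, Dell Premier price per the comment

print(f"retail: ${retail:.0f}/TB, Dell: ${dell:.0f}/TB, "
      f"Dell costs {dell / retail:.1f}x as much per TB")
```

On those figures, the Dell Premier drive costs a bit over three times as much per TB as the retail drive.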

Tam, FYI, the PERC12 is based on the Broadcom SAS4116W chipset and has a PCIe Gen 4 x16 interface, and I believe it uses two Gen 4 lanes per SSD. That is still a bottleneck if you are going for drive density, but it performs fairly well if you can split the capacity across more, smaller drives, e.g. four 15TB drives instead of two 32TB drives.

There are a couple of published benchmarks showing the difference, for example:

https://infohub.delltechnologies.com/p/dell-poweredge-raid-controller-12/

So, if you do need hardware NVMe RAID, such as for VMware with local storage, the PERC12 looks like a solid solution. But they do have direct-connect options to bypass the PERC if you prefer that.

Thanks for that link, Adam. The Broadcom docs for this generation of chip, with two x8 connectors (8 PCIe lanes per connector), show that the number of lanes assigned can vary by connector and can be 1, 2, or 4 per drive, but must be the same for every drive on a given connector. See page 12 of

https://docs.broadcom.com/doc/96xx-MR-eHBA-Tri-Mode-UG

Keep in mind, though, that the backplane needs to support matching arrangements. Not sure what Dell is doing there. It’s an issue on at least some Supermicro systems, AFAIK.
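The lane-allocation rule summarized above can be sketched as a quick calculator. This is a minimal model under stated assumptions: two x8 connectors, 1, 2, or 4 lanes per drive, uniform within a connector, and bandwidth taken as the raw PCIe 4.0 line rate (16 GT/s with 128b/130b encoding) with protocol overhead ignored, so real throughput will be lower. It does not model the backplane, which is the open question raised above; the function names are hypothetical.

```python
# Minimal sketch of the lane-allocation rule as summarized above: two x8
# connectors, 1, 2, or 4 lanes per drive, uniform within a connector.
# Bandwidth is the raw PCIe 4.0 line rate (16 GT/s, 128b/130b encoding),
# ignoring protocol overhead, so real throughput will be lower.

LANES_PER_CONNECTOR = 8
CONNECTORS = 2
VALID_LANES_PER_DRIVE = (1, 2, 4)
GEN4_GB_S_PER_LANE = 16 * 128 / 130 / 8  # ~1.97 GB/s per lane, per direction


def connector_layout(lanes_per_drive: int) -> tuple[int, float]:
    """Return (drives on this connector, raw GB/s per drive)."""
    if lanes_per_drive not in VALID_LANES_PER_DRIVE:
        raise ValueError(f"lanes per drive must be one of {VALID_LANES_PER_DRIVE}")
    drives = LANES_PER_CONNECTOR // lanes_per_drive
    return drives, lanes_per_drive * GEN4_GB_S_PER_LANE


def controller_layout(lanes_by_connector: tuple[int, ...]) -> dict[str, float]:
    """Summarize a controller given one lanes-per-drive setting per connector."""
    if len(lanes_by_connector) != CONNECTORS:
        raise ValueError(f"expected {CONNECTORS} connector settings")
    layouts = [connector_layout(lanes) for lanes in lanes_by_connector]
    return {
        "drives": sum(drives for drives, _ in layouts),
        "slowest_drive_gb_s": min(bw for _, bw in layouts),
        "host_link_gb_s": 16 * GEN4_GB_S_PER_LANE,  # x16 host interface
    }


if __name__ == "__main__":
    for setting in [(4, 4), (2, 2), (1, 1), (1, 4)]:
        print(setting, controller_layout(setting))
```

With 2 lanes per drive on both connectors, the model gives 8 drives at roughly 3.9 GB/s raw each, which lines up with the "2x Gen 4 lanes per SSD" estimate earlier in the thread.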

So glad to see the latency came down to NVMe levels in that PERC12 testing and write IOPS improved so much. As a Windows shop, hardware RAID is still our standard.

Agreed on the silliness of the Dell drive pricing though – had to go Supermicro due to it on some storage servers, though I much prefer Dell fit/finish, driver/firmware and iDRAC for our small shop. I was able to get around it by finding a Dell outlet 740xd that met our needs one time as well (and was packed with drives so risk of needing to upgrade was very low).

Whereas I can buy a retail drive (of approved/qualified P/N) and put it in my Supermicro servers.

SIX HUNDRED WATTS IDLE AND 2000 DOLLAR MEMORY STICKS? What is this, man.

Do you know where the power is going for a 600W idle figure? You mentioned a specific high-performance configuration. Is that some sort of ‘ASPM is a lie, nothing ever gets to sleep, remain in your most available C-states’ configuration (and, if so, are there others?), or is there just a lot going on between the lowest power those two Xeons can draw without going to sleep and the RAM, PERC, etc.?

I wouldn’t be at all surprised to see 2x 300W nominal Xeons demand basically all the power you can supply when bursting under load; that’s normal enough. I’m just shocked to see that idle figure, especially when older Dells in the same basic vein, with older CPUs that have less refined power management, less refined/larger-process silicon throughout the system, plus 12x 3.5in disks, put in markedly lower figures.

I think the R710 I was futzing with the other day was something like 100-150W, and that was with a full set of mechanical drives, a full RAM load, much, much less efficient CPUs, etc. Lower peak load, obviously, since those CPUs just can’t draw anything like what the newer ones can, but at idle I would have expected it to be worse across the board.

Thanks for the reply, Adam. I did realize that after I posted it, but I don’t think you can edit. The other thing was the requirement I was quoted to have two PERCs installed. If I were building something “special,” especially with NVMe drives, I would go with the Intel server; you can really muck around with them. Support is a bit of a pain unless you’re a big guy, but for what you save, you can afford to actually buy a couple of spares, like a mainboard, etc. They have connectivity on their boards directly behind the drive bays with MCIO connectors, all able to run flat out if you need it. I have a unit here with VROC that I was testing, but I got some funny results and don’t have the coin to buy really good drives to see if the issue still arises.

I would love STH to actually build a VROC server and do some numbers with some quality components since you probably have most of it hanging around

I completely agree about VROC; I requested that in a comment on another STH article. Since VROC is supported by VMware, I’m really curious what performance looks like versus software RAID, ZFS, and a current-gen hardware RAID controller.

Also, as a general question for all readers: does anyone know in practice how this generation of Dell servers handles non-Dell NVMe drives? What little Dell publishes just states that certain NVMe features are required.

Maybe STH could test tossing a couple of Micron 9400 drives into the R760?

I created a thread about VROC on the forums and shared my very limited experience. Figured we do not want to pollute these comments further. I agree there is a dearth of information available on it which is a problem as there is a need for such solutions.

https://forums.servethehome.com/index.php?threads/intel-vroc-2023.39815/

Super thread on VROC.

Yuno put it about as well as anyone: Dell has lost their damn minds, and their entire company must be high to think this crap is okay. I just saw today that they’re still selling a desktop with an NVIDIA GTX 1650 in it for almost $1000 (https://www.dell.com/en-us/shop/desktop-computers/vostro-tower/spd/vostro-3910-desktop/smv3910w11ps75397). What kind of bizarro world are we living in?

What kind of performance are you seeing with the BOSS setup? I just received a PowerEdge R360 with a BOSS-N1 configured for RAID1 and I’m getting 1400MB/s READ and 222MB/s WRITE. I can’t find any reviews stating the read/write performance.