Dell S5232F-ON Internal Hardware Overview

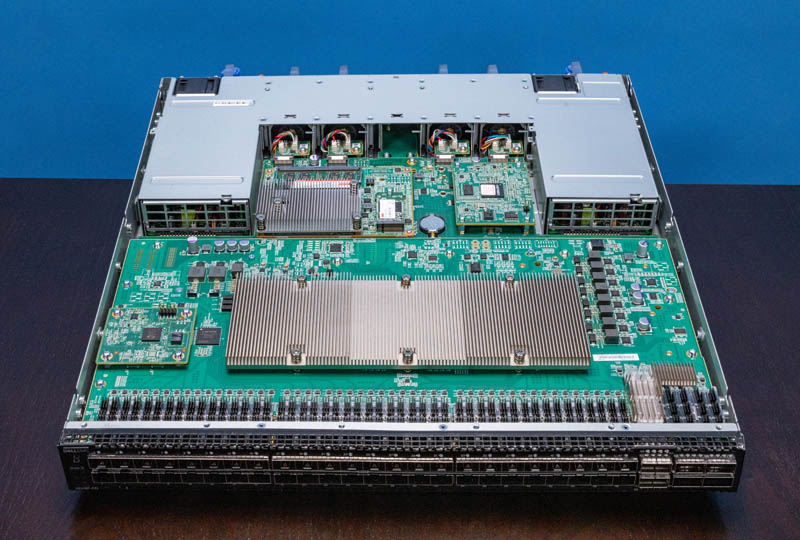

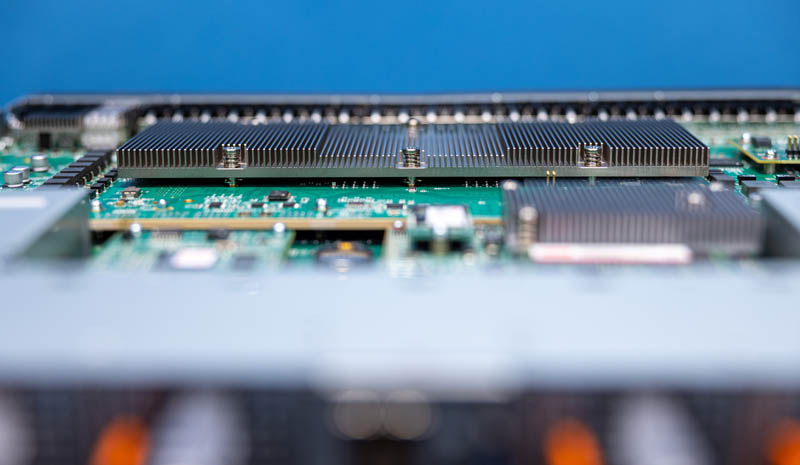

Inside the switch, we have a fairly standard layout with the ports, the switch chip and its PCB, and then the management and power/cooling sections. This is similar to many other switches that we have seen.

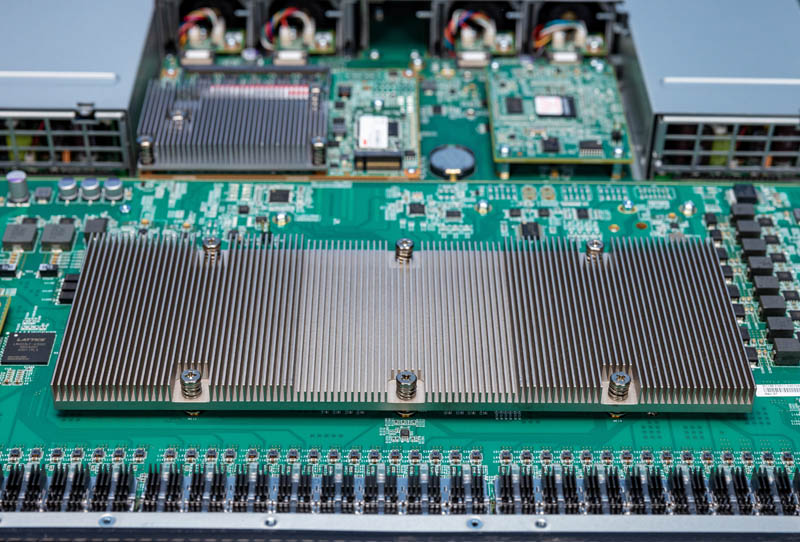

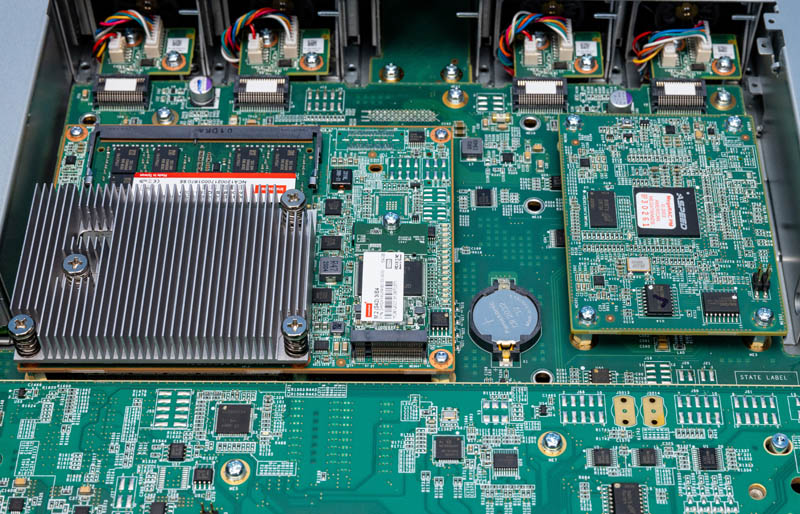

The switch chip here is the Broadcom Trident 3. It is covered by a fairly large heatsink.

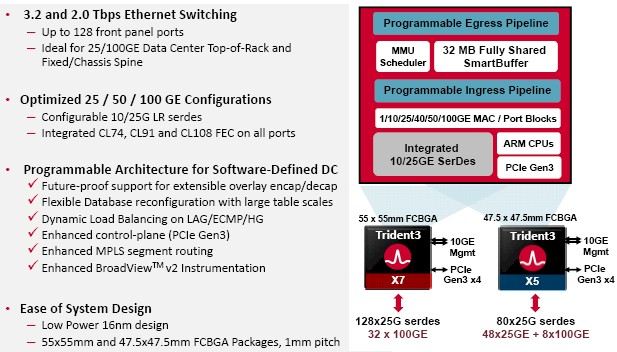

The Broadcom Trident 3 is a 16nm switch chip that is designed for more features, while the Tomahawk line is designed for outright performance. Dell also has Tomahawk-based Z series switches in this generation. The Trident 3 family spans the X2, X3, X4, X5, and X7 models, ranging from 200Gbps to 3.2Tbps. This switch only needs 2Tbps of switching capacity because that matches the aggregate bandwidth of its ports.
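To make the 2Tbps figure concrete, here is a small Python sketch of the arithmetic. It assumes the port figures quoted in the reader comments at the end of this page (800Gbps of uplinks plus 1.2Tbps of 25GbE server connectivity, with the reader's Gbps-to-Tbps correction applied) describe this switch. The function and constant names are ours for illustration only.

```python
# Back-of-the-envelope check of the "2Tbps matches the port count" statement.
# Assumption: the bandwidth figures below are the ones quoted in the reader
# comments (800Gbps uplink, 1.2Tbps of 25GbE), not values read from the switch.

UPLINK_GBPS = 800                 # uplink bandwidth as quoted
SERVER_GBPS = 1200                # 1.2Tbps of 25GbE server connectivity
SERVER_PORT_SPEED_GBPS = 25       # 25GbE per server-facing port
TRIDENT3_FAMILY_MAX_GBPS = 3200   # top of the 200Gbps to 3.2Tbps family range


def total_port_bandwidth_gbps(uplink_gbps: int, server_gbps: int) -> int:
    """Sum the uplink and server-facing bandwidth in Gbps."""
    return uplink_gbps + server_gbps


def implied_port_count(aggregate_gbps: int, port_speed_gbps: int) -> int:
    """Number of ports of a given speed needed to reach an aggregate figure."""
    if aggregate_gbps % port_speed_gbps != 0:
        raise ValueError("aggregate bandwidth is not a whole number of ports")
    return aggregate_gbps // port_speed_gbps


total = total_port_bandwidth_gbps(UPLINK_GBPS, SERVER_GBPS)
ports = implied_port_count(SERVER_GBPS, SERVER_PORT_SPEED_GBPS)
headroom = TRIDENT3_FAMILY_MAX_GBPS - total

print(f"Total port bandwidth: {total / 1000:.1f}Tbps")
print(f"Implied 25GbE ports: {ports}")
print(f"Headroom versus the largest Trident 3 part: {headroom / 1000:.1f}Tbps")
```

Under those assumptions, the ports add up to 2Tbps, which is why a part below the top of the Trident 3 range is sufficient here.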

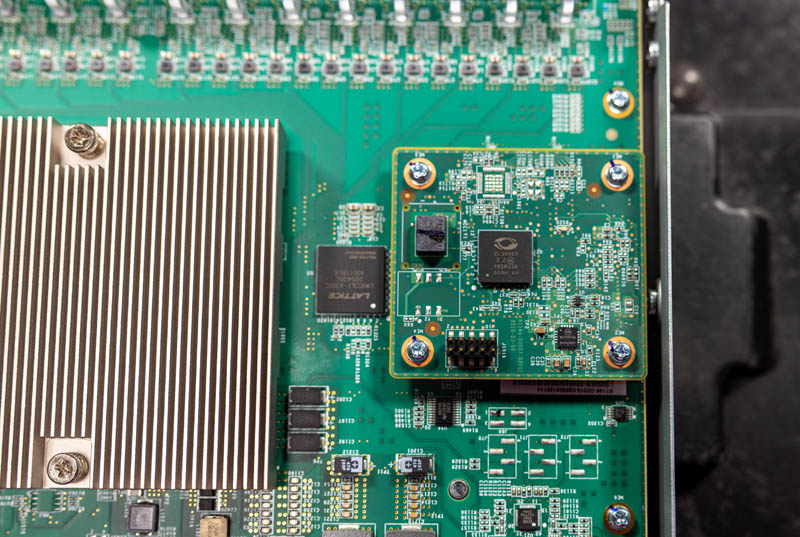

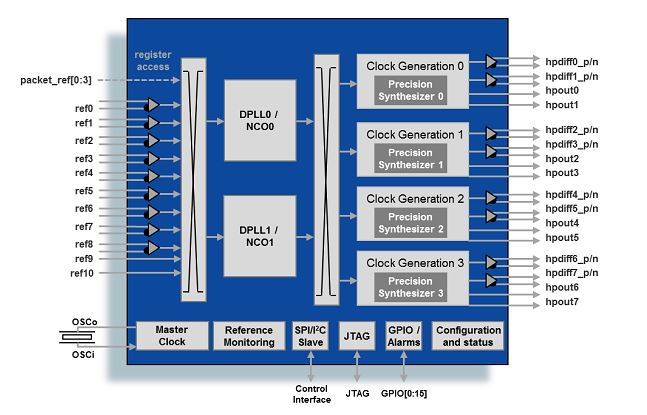

There is also a riser board with a Microsemi (now Microchip) ZL30363. The ZL30363 is an IEEE 1588 and Synchronous Ethernet packet clock and dual-channel network synchronizer.

Feel free to look it up a bit more, but here is the block diagram for that.

The other side of the PCB is relatively low density.

Behind the main switch PCB, we have the PCB that handles the power supply inputs, fan modules, and management boards.

As with the newer S5232F-ON (and unlike the S5148F-ON), the CR2032 battery, while still on this main PCB, is now accessible when the management boards are installed.

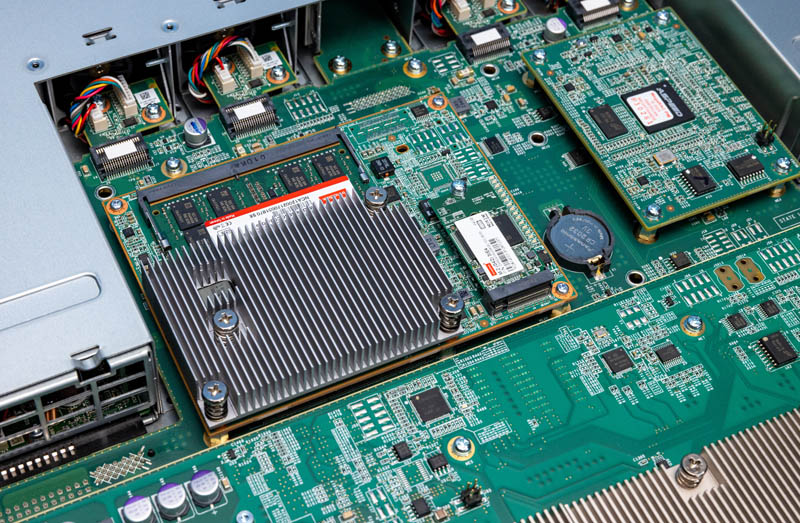

One of the big changes in the S5200-ON generation, aside from the switch chip, is the move to an Intel Atom C3000 series “Denverton” chip. This processor is five years newer than the one found in the S5100F-ON series. Here is an example from a Supermicro motherboard that we photographed for the launch of the Atom C3000 series. You can learn more about Denverton on STH. We hosted “Denverton Day: Official STH Intel Atom C3000 Launch Coverage Central” when Intel decided to release the part and not publicize it, so we have a massive amount of content on the Atom C3000 series.

In this generation, the SSD storage is now up to 64GB versus 16GB in the previous generation. The 16GB of onboard memory comes from SODIMMs: one sits on top of the board, while the second is actually below the management PCB.

Another huge change is that the S5232F-ON has a standard BMC. While Dell EMC uses a proprietary iDRAC controller on its PowerEdge line, some non-PowerEdge servers, as well as the vast majority of servers outside of the big 3 legacy server vendors (Dell, HPE, and Lenovo), use ASPEED BMCs. Large hyperscalers use the ASPEED parts not just in servers but also in switches, storage arrays, JBOFs, JBOGs, and so forth. We even have IBM Power9 servers that use ASPEED BMCs, so high-end legacy vendors are using them as well.

Here, the ASPEED BMC has a MegaRAC PM sticker with “American Megatrands” on it, even though the company’s name is American Megatrends. We covered this in our “Dude this should NOT be in a Dell Switch… or HPE Supercomputer” piece. We are not going to go into that here, but you can read that piece or watch the video if you have not already.

Since Dell and AMI say that this is fine, we are going to treat it as such, even though there was quite a division in how our readers felt about this being in a $10K switch.

Next, we are going to look at the software implications of this switch’s hardware.

A Small Typo:

Next to those we have four QSFP28 100GbE ports. That gives us 800Gbps of uplink bandwidth and 1.2Gbps of 25GbE server connectivity.

Should be more like 1.2Tbps.

I found an Easter Egg in this article! It says the power supplies are 750 kW, so I imagine with two of them it would power around 50,000 PoE devices at 30 W each :)

What is the purpose of switches like these? We use 32-port 100G Arista 7060s with 4×25 breakout cables and 4x 100G uplinks per ToR, giving 800G to the rack itself. Obviously we have additional ports for uplinks if necessary, but so far it hasn’t been. When I evaluated these switches I wasn’t sure what the usage was, because it doesn’t provide the flexibility of straight 100G ports.

I would love to see what a DC designer would have to do to deal with a system that has dual 750,000w PSUs. lol

I just have to say thank you for your review of this switch – especially your coverage of Azure SONiC, which is on my road map for a parallel test installation we set our hearts on being able to roll out when we’re moving to the new software infrastructure that’s the result of years of development in order to reduce our monoliths to provide the seeds for what we hope to become our next only more populous monolith installation of expanded service clusters that made more sense to partition into more defined homologated workloads. We’re entirely vanilla in networking terms, having watched and waited for the beginning of the maturity phases of adoption, which I think is definitely under way now. To be able to deploy parallel vanilla and SDN (SONIC) switchgear on identical hardware, is precisely the trigger and encouragement that we’ve been looking out for – thanks to STH for bringing the news to us, yet again.